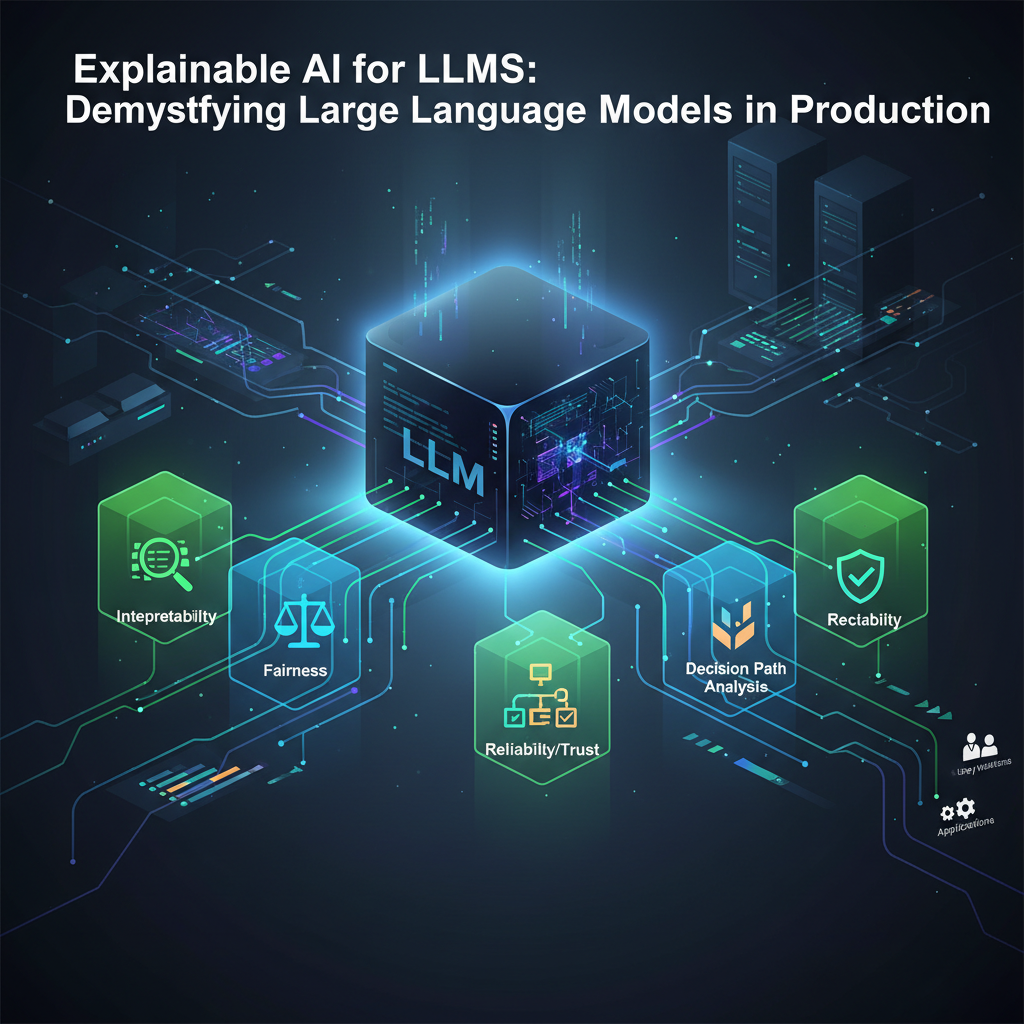

Explainable AI for LLMs: Demystifying Large Language Models in Production

Large Language Models (LLMs) are transforming industries, but their 'black box' nature poses challenges. This post explores why Explainable AI (XAI) is crucial for understanding, trusting, and ensuring the fairness and reliability of LLMs in real-world applications.

The age of Large Language Models (LLMs) is upon us. From powering sophisticated chatbots and content generation platforms to assisting in scientific discovery and critical decision-making systems, LLMs like GPT-4, Llama 2, and Claude are rapidly becoming indispensable tools across virtually every industry. Their ability to understand, generate, and process human language at an unprecedented scale has unlocked capabilities previously confined to science fiction. However, with great power comes great responsibility – and a significant challenge: understanding why these incredibly complex models behave the way they do.

This is where Explainable AI (XAI) steps in, particularly for LLMs deployed in production environments. Unlike traditional software, LLMs are often "black boxes," making it difficult to trace their reasoning, pinpoint sources of error, or assure their fairness and reliability. As LLMs move from research labs to real-world applications, the demand for transparency, trust, and accountability has never been higher. This post delves into the critical intersection of XAI and LLMs in production, exploring why it's essential, the latest advancements, and its profound practical implications for AI practitioners and enthusiasts alike.

The Imperative for Explainable LLMs

The widespread adoption of LLMs in high-stakes applications has brought their inherent "black box" nature into sharp focus. With billions, or even trillions, of parameters, these models learn intricate patterns from vast datasets, making their internal workings incredibly opaque. When an LLM produces an unexpected, incorrect, biased, or even harmful output, merely knowing what it said is no longer sufficient; we need to understand why.

Several factors underscore the critical need for XAI in the LLM production lifecycle:

- Amplified "Black Box" Problem: Compared to traditional deep learning models, LLMs present an even more formidable challenge. Their sheer scale and the emergent properties they exhibit make direct interpretation of individual neuron activations or weights practically impossible. We're dealing with models that can "hallucinate" (generate factually incorrect but plausible-sounding information), exhibit subtle biases, or fail to follow instructions in non-obvious ways. Without XAI, debugging these issues is akin to navigating a maze blindfolded.

- Regulatory Scrutiny and Compliance: Governments and standards bodies worldwide are introducing rules and guidance for AI, such as the EU AI Act (binding legislation) and the NIST AI Risk Management Framework (voluntary guidance). These frameworks increasingly demand transparency, fairness, and accountability from AI systems, especially those deployed in sensitive or critical domains. XAI is not just a technical nice-to-have; it's becoming a legal and ethical necessity for demonstrating compliance and mitigating legal risks.

- Building Trust and Fostering User Acceptance: For users, stakeholders, and domain experts to trust and effectively utilize LLMs, they need confidence in the model's reasoning. Imagine an LLM assisting a doctor in diagnosis or a lawyer in drafting a brief. If the LLM's recommendation is opaque, it undermines trust and hinders adoption. Explanations provide the necessary bridge between complex AI outputs and human understanding, fostering acceptance and responsible use.

- Debugging, Improvement, and Robustness: For developers, XAI techniques are invaluable tools for debugging. They help identify the root causes of errors – whether it's an issue with the prompt, biases in the training data, or inherent model limitations. By understanding why an LLM failed, practitioners can iterate more effectively, fine-tune models, and develop more robust and reliable systems.

- Mitigating Hallucinations and Bias: Hallucinations and biases are two of the most significant challenges in deploying LLMs. XAI can help identify when an LLM is veering off course or exhibiting undesirable biases. For instance, an explanation might reveal that an LLM's biased response stemmed from a specific phrase in the input, allowing for targeted intervention or prompt refinement.

Recent Developments and Emerging Trends in XAI for LLMs

The field of XAI for LLMs is rapidly evolving, driven by the urgent need to address the challenges outlined above. Researchers and practitioners are exploring diverse approaches, often adapting existing XAI techniques or developing novel methods tailored to the unique architecture and behavior of transformer-based LLMs.

1. Prompt-Based Explanations (Self-Correction/Chain-of-Thought)

One of the most intuitive and powerful approaches leverages the LLM's own generative capabilities to explain its reasoning.

- Concept: Instead of relying on external models or post-hoc analysis, this method asks the LLM to articulate its thought process. Techniques like Chain-of-Thought (CoT) prompting encourage the model to output intermediate reasoning steps before arriving at a final answer. For example, instead of just asking "Is this statement true or false?", one might prompt, "Break down your reasoning step-by-step to determine if this statement is true or false, then give your final answer."

- Development: Advanced prompting strategies are being developed to elicit more coherent, faithful, and even critical explanations directly from the LLM. This includes asking the model to:

  - Justify its choices: "Explain why you chose this particular option."

  - Critique its own output: "Review your previous answer. Are there any potential flaws or alternative interpretations? Explain your self-correction process."

  - Provide counterfactuals: "If the input had been slightly different in X way, how would your answer change and why?"

- Technical Deep Dive: In its few-shot form, CoT works by providing one or more worked examples that include not just the question and answer, but also the intermediate reasoning steps. This primes the LLM to generate similar reasoning for new, unseen questions; a zero-shot variant instead appends an instruction such as "Let's think step by step." The underlying mechanism is that the LLM, being a sequence generator, learns to produce these reasoning steps as part of the desired output sequence. While powerful, the "explanation" itself is generated by the same black box, raising questions about its faithfulness (does it truly reflect the model's internal computation, or is it a post-hoc rationalization designed to sound plausible?) and consistency (does it always provide the same explanation for the same underlying reasoning?).
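To make the few-shot setup concrete, here is a minimal sketch of how such a prompt might be assembled and how a final answer might be pulled back out of the completion. The `Exemplar` class, the `Q:` / `Reasoning:` / `Final answer:` layout, and both helper functions are illustrative assumptions rather than a standard format, and the call to an actual model is deliberately left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    """One worked example: a question, its reasoning steps, and the answer."""
    question: str
    steps: List[str]
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt as plain text."""
    parts = []
    for ex in exemplars:
        reasoning = "\n".join(f"{i}. {s}" for i, s in enumerate(ex.steps, 1))
        parts.append(
            f"Q: {ex.question}\nReasoning:\n{reasoning}\nFinal answer: {ex.answer}"
        )
    # The new question stops at "Reasoning:" so the model continues with steps.
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)


def parse_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Final answer:' marker, if present."""
    marker = "Final answer:"
    if marker not in completion:
        return None
    return completion.rsplit(marker, 1)[1].strip()


exemplar = Exemplar(
    question="True or false: 17 is a prime number.",
    steps=[
        "A prime has no divisors other than 1 and itself.",
        "17 is not divisible by 2, 3, or any integer up to its square root.",
    ],
    answer="True",
)
prompt = build_cot_prompt([exemplar], "True or false: 21 is a prime number.")
print(prompt)
```

Keeping the reasoning and the final answer under separate markers makes it easy to log the generated rationale next to the answer, but it does nothing to guarantee that the rationale is faithful to the model's internal computation.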

2. Post-hoc Local Explanations Adapted for LLMs

Traditional XAI methods, originally designed for simpler models or image classification, are being adapted for the complexities of LLMs. These methods aim to explain individual predictions.

- Concept: Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) work by perturbing the input and observing changes in the model's output to identify which input features are most influential. For LLMs, this translates to understanding which tokens, phrases, or sentences in the input most influenced a specific output token or the overall generation.

- Development:

  - Attention Mechanisms: Transformer models inherently provide attention weights, indicating how much the model "attends" to different input tokens when processing each output token. Visualizing these attention maps can offer insights into token-level dependencies. Research focuses on making these attention weights more reliably interpretable, as raw attention scores don't always directly correlate with importance. Attention rollout aggregates attention across multiple layers, while gradient-based attention attribution weights attention scores by their gradients; both aim to provide a more holistic view than a single attention head.

  - Perturbation-based methods: Adapting LIME/SHAP involves masking or replacing input tokens/phrases and observing the impact on the LLM's output. For instance, one might remove a key adjective from a sentiment analysis prompt and see if the sentiment prediction changes significantly. Challenges include the discrete nature of tokens (making continuous perturbations difficult) and the computational cost of querying large LLMs multiple times.

  - Gradient-based methods: Techniques like Integrated Gradients or LRP (Layer-wise Relevance Propagation) aim to attribute the model's output back to specific input features by propagating gradients or relevance scores through the network. These can highlight salient input tokens that contributed most to a particular output.

- Technical Deep Dive: For instance, in a sentiment analysis task, a LIME-like approach might generate local explanations by randomly masking words in the input sentence ("The movie was incredibly boring and uninspired") and observing how the sentiment prediction changes. If masking "boring" or "uninspired" shifts the prediction from negative toward neutral, those words are identified as highly influential, whereas masking the intensifier "incredibly" alone should only soften it. SHAP values, on the other hand, distribute importance according to Shapley values from cooperative game theory: each feature's score is its marginal contribution averaged over all possible subsets of the other features, which in practice is approximated by sampling because the number of subsets grows exponentially.
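The difference between single-token masking and Shapley-style attribution is easier to see on a toy example. The sketch below is an illustration under stated assumptions: `toy_sentiment` is a hand-written lexicon scorer standing in for a real model, `[MASK]` is a hypothetical placeholder token, and the Shapley values are computed exactly by enumerating every subset, which is only feasible for a handful of tokens (libraries such as SHAP approximate this instead).

```python
from itertools import combinations
from math import factorial

MASK = "[MASK]"
WEIGHTS = {"boring": -2.0, "uninspired": -1.5, "great": 2.0}
INTENSIFIERS = {"incredibly": 1.5}


def toy_sentiment(tokens):
    """Toy stand-in for a model: an intensifier scales the next token's weight."""
    score, boost = 0.0, 1.0
    for tok in tokens:
        if tok in INTENSIFIERS:
            boost = INTENSIFIERS[tok]
            continue
        score += boost * WEIGHTS.get(tok, 0.0)
        boost = 1.0
    return score


def masked_score(tokens, keep):
    """Score the sentence with every position outside `keep` replaced by MASK."""
    return toy_sentiment([t if i in keep else MASK for i, t in enumerate(tokens)])


def occlusion(tokens):
    """Leave-one-out attribution: score change when a single token is masked."""
    full = set(range(len(tokens)))
    base = masked_score(tokens, full)
    return [base - masked_score(tokens, full - {i}) for i in range(len(tokens))]


def shapley(tokens):
    """Exact Shapley values: weighted marginal contribution over all subsets."""
    n = len(tokens)
    values = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = masked_score(tokens, s | {i}) - masked_score(tokens, s)
                phi += weight * gain
        values.append(phi)
    return values


tokens = "the movie was incredibly boring and uninspired".split()
occ = occlusion(tokens)
shap_values = shapley(tokens)
for tok, o, s in zip(tokens, occ, shap_values):
    print(f"{tok:>12}  occlusion={o:+.2f}  shapley={s:+.2f}")
```

In this toy setup the two methods disagree on "incredibly" and "boring": occlusion credits the full interaction effect to each of them separately, while the Shapley values split it between the two so that the attributions sum to the model's total score.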

3. Concept-Based Explanations

Moving beyond just input features, concept-based explanations aim to explain LLM behavior in terms of higher-level, human-understandable concepts.

- Concept: Instead of saying "token 'money' was important," we want to say "the model identified a financial concept." This provides more intuitive and actionable insights for human users, especially domain experts.

- Development:

  - Concept Bottleneck Models (CBMs): While originally for vision, the idea is to force the model to predict human-defined concepts as an intermediate step before making its final prediction. For LLMs, this could involve training a component to identify concepts like "sentiment," "topic," "entity type," or "argument strength" from the input, and then using these concepts to predict the final output.

  - TCAV (Testing with Concept Activation Vectors): TCAV quantifies the degree to which a human-defined concept (e.g., "violence," "legal terminology," "positive emotion") influences a model's prediction. It works by learning a direction in a layer's activation space (the concept activation vector) that separates examples of the concept from random examples, and then measuring how sensitive the model's prediction is to movement along that direction.

  - Probing and Concept Extraction: Researchers are developing methods to "probe" LLMs to see what concepts they have implicitly learned. This might involve training a small, interpretable classifier on the internal representations (embeddings) of the LLM to predict specific concepts.

- Benefit: This approach offers a more abstract and human-centric explanation, making it easier for non-technical users to understand the model's "thinking" and for developers to ensure the model is learning the right concepts.
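As a rough illustration of probing, the sketch below trains a tiny logistic-regression probe to predict a "financial concept" label from hidden-state vectors. Everything here is a stand-in: the three-dimensional `HIDDEN_STATES` are made-up numbers (in practice they would be read out of a chosen layer of a real LLM), and the label, the held-out examples, and the training settings are hypothetical.

```python
import math

# Made-up "hidden states" with a concept label (1 = text mentions finance).
# Assumption: real vectors would come from a chosen layer of the LLM.
HIDDEN_STATES = [
    ([2.1, 1.8, 0.3], 1), ([1.7, 2.2, -0.4], 1),
    ([2.4, 1.5, 0.1], 1), ([1.9, 2.0, 0.6], 1),
    ([-1.8, -2.1, 0.2], 0), ([-2.2, -1.6, -0.5], 0),
    ([-1.5, -2.4, 0.4], 0), ([-2.0, -1.9, -0.1], 0),
]
HELD_OUT = [([2.0, 1.6, -0.2], 1), ([-1.9, -2.0, 0.3], 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_probe(data, epochs=200, lr=0.1):
    """Fit a linear probe with plain stochastic gradient descent."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(logit(w, b, x)) - y  # gradient of log-loss w.r.t. logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def accuracy(w, b, data):
    hits = sum((sigmoid(logit(w, b, x)) >= 0.5) == bool(y) for x, y in data)
    return hits / len(data)


w, b = train_probe(HIDDEN_STATES)
print("probe weights:", [round(wi, 2) for wi in w])
print("held-out accuracy:", accuracy(w, b, HELD_OUT))
```

High probe accuracy suggests the concept is linearly decodable from that layer; it does not by itself show that the model uses the concept when producing its output, which is the gap methods like TCAV try to address.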

4. Evaluation of XAI Methods for LLMs

A critical and growing area of research is the rigorous evaluation of the quality of explanations. An explanation is only useful if it's accurate, understandable, and reliable.

- Trend: Developing metrics for:

  - Faithfulness: Does the explanation truly reflect the model's actual internal reasoning, or is it a post-hoc rationalization? This is often measured by how well the explanation can predict the model's behavior or by perturbing important features identified by the explanation and observing the impact on the model's output.

  - Plausibility/Understandability: Is the explanation comprehensible and believable to a human? This often requires human evaluation studies.

  - Stability/Robustness: Do similar inputs yield similar explanations? A good explanation method should not produce wildly different explanations for near-identical inputs, such as close paraphrases or single-token substitutions.

  - Actionability: Does the explanation provide insights that can be used to debug, improve, or control the model?

- Challenge: Quantifying the "goodness" of an explanation is inherently difficult and often requires human judgment, making standardized metrics challenging to establish.
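Two of these criteria can be approximated automatically. The sketch below shows one possible way to do so, assuming a toy lexicon scorer in place of a real model: faithfulness is estimated with a deletion test (mask the top-k attributed tokens and measure how much the score moves), and stability with the overlap of top-k positions between an input and a near-paraphrase. The function names and the choice of k are illustrative, not established metric definitions.

```python
MASK = "[MASK]"
WEIGHTS = {"boring": -2.0, "uninspired": -1.5, "great": 2.0}


def toy_score(tokens):
    """Toy stand-in for a model's sentiment score."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def occlusion_attribution(tokens):
    """Attribution under test: score change when each token is masked."""
    base = toy_score(tokens)
    return [
        base - toy_score(tokens[:i] + [MASK] + tokens[i + 1:])
        for i in range(len(tokens))
    ]


def top_k_positions(attributions, k):
    order = sorted(
        range(len(attributions)), key=lambda i: abs(attributions[i]), reverse=True
    )
    return set(order[:k])


def comprehensiveness(tokens, attributions, k):
    """Deletion test: score change after masking the k top-attributed tokens."""
    top = top_k_positions(attributions, k)
    masked = [MASK if i in top else t for i, t in enumerate(tokens)]
    return abs(toy_score(tokens) - toy_score(masked))


def stability(attr_a, attr_b, k):
    """Jaccard overlap of top-k positions for two aligned, similar inputs."""
    a, b = top_k_positions(attr_a, k), top_k_positions(attr_b, k)
    return len(a & b) / len(a | b)


original = "the movie was boring and uninspired".split()
paraphrase = "the film was boring and uninspired".split()

attr = occlusion_attribution(original)
faithful = comprehensiveness(original, attr, k=2)

# Baseline explanation that simply ranks the earliest tokens as most important.
positional = [float(len(original) - i) for i in range(len(original))]
baseline = comprehensiveness(original, positional, k=2)

stable = stability(attr, occlusion_attribution(paraphrase), k=2)
print(f"deletion effect: explanation={faithful}, baseline={baseline}")
print(f"top-2 stability under paraphrase: {stable}")
```

An explanation that is no better than the positional baseline on the deletion test is a warning sign, whatever its plausibility to human readers; plausibility and actionability still need human evaluation.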

5. Interactive XAI Interfaces

To make XAI tools practical for real-world use, user-friendly interfaces are essential.

- Trend: Developing interactive dashboards and visualization tools that allow practitioners to:

  - Probe LLMs with different inputs.

  - Visualize attention maps, salient tokens, or concept activations.

  - Interactively explore counterfactuals ("What if I changed this word?").

  - Compare explanations from different XAI methods.

- Benefit: These interfaces democratize access to XAI, making it easier for developers, product managers, and even end-users to understand and debug LLMs without needing deep expertise in XAI algorithms.

6. XAI for Retrieval-Augmented Generation (RAG)

As RAG architectures become standard for grounding LLMs in external knowledge bases, XAI's scope expands.

- Trend: Explanations for RAG systems need to cover not just the LLM's generation but also the retrieval process. This includes:

  - Explaining why certain documents or passages were retrieved.

  - Showing how the retrieved information influenced the LLM's final answer.

  - Identifying instances where the LLM hallucinated despite having relevant retrieved context, or ignored relevant context.

- Benefit: This adds a crucial layer of transparency to the entire RAG pipeline, helping to diagnose issues related to information retrieval, contextual grounding, and factual accuracy. For example, an XAI system for RAG might highlight the specific sentences from a retrieved document that were most influential in forming a part of the LLM's answer, alongside an explanation of the query terms that led to that document's retrieval.
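A very simple version of this kind of RAG explanation can be sketched with lexical overlap. The code below is an assumption-heavy illustration: the passages, query, and answer are invented, word overlap is a crude stand-in for embedding similarity or model-based attribution, and the 0.5 support threshold is arbitrary.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "are", "it", "of", "to", "for", "from",
             "if", "does", "how", "also", "within"}


def content_words(text):
    """Lowercased word set with a small stopword list removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def explain_retrieval(query, passage):
    """Query terms that also appear in the passage (why it was retrieved)."""
    return sorted(content_words(query) & content_words(passage))


def support(sentence, passage):
    """Fraction of the sentence's content words found in the passage."""
    words = content_words(sentence)
    if not words:
        return 0.0
    return len(words & content_words(passage)) / len(words)


def attribute_answer(sentences, passages, threshold=0.5):
    """Link each answer sentence to its best passage, or flag it as unsupported."""
    report = []
    for sentence in sentences:
        best_id = max(passages, key=lambda pid: support(sentence, passages[pid]))
        score = support(sentence, passages[best_id])
        report.append({
            "sentence": sentence,
            "best_passage": best_id if score >= threshold else None,
            "support": round(score, 2),
            "supported": score >= threshold,
        })
    return report


passages = {
    "doc1": "The warranty covers battery defects for 24 months from the purchase date.",
    "doc2": "Returns are accepted within 30 days if the device is unopened.",
}
query = "How long does the battery warranty last?"
answer = [
    "The battery warranty lasts 24 months from purchase.",
    "It also covers accidental water damage.",
]

print("retrieval match for doc1:", explain_retrieval(query, passages["doc1"]))
report = attribute_answer(answer, passages)
for row in report:
    print(row)
```

Sentences that fall below the threshold are candidates for hallucination review; a production system would likely replace the overlap score with something more robust to paraphrase.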

Practical Applications for AI Practitioners and Enthusiasts

The advancements in XAI for LLMs are not just theoretical; they have profound practical implications across the entire LLM lifecycle, from development and deployment to auditing and user interaction.

1. Debugging and Error Analysis

- Practitioner: XAI becomes a diagnostic tool. If an LLM is hallucinating or generating biased content, attribution methods such as attention maps or token-level saliency can indicate which parts of the prompt or context contributed most to an incorrect answer, bearing in mind that raw attention is not always a reliable measure of importance. For instance, if an LLM incorrectly summarizes a legal document, an XAI tool could highlight the specific phrases it misinterpreted or overlooked, allowing the developer to refine the prompt or even fine-tune the model on similar cases.

- Enthusiast: Understand common failure modes of LLMs. By seeing how an XAI tool identifies problematic inputs or internal reasoning paths, enthusiasts can learn to craft better, more robust prompts and develop a more nuanced understanding of LLM limitations.

2. Bias Detection and Mitigation

- Practitioner: Use XAI to uncover and quantify biases in LLM outputs related to demographics, stereotypes, or sensitive topics. For example, if an LLM consistently associates certain professions with specific genders, an XAI tool could trace this bias back to patterns in the training data or specific internal activations, enabling targeted data augmentation or model debiasing techniques. Concept-based explanations can highlight if the model is relying on stereotypical concepts when making predictions.

- Enthusiast: Critically evaluate AI outputs for fairness and understand the societal implications of biased models, contributing to more responsible AI development.
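One lightweight way to start quantifying such bias is a counterfactual template test: fill the same sentence with different group terms and compare the model's scores. The sketch below assumes a hypothetical `score_fn` that maps text to a number (for example, a suitability score extracted from an LLM response); `biased_toy_scorer` is a deliberately biased stand-in, and the 0.05 flagging threshold is arbitrary.

```python
from typing import Callable, Dict, List, Tuple


def counterfactual_gap(
    template: str, groups: List[str], score_fn: Callable[[str], float]
) -> Tuple[Dict[str, float], float]:
    """Score one template per group term and return the largest score gap."""
    scores = {g: score_fn(template.format(group=g)) for g in groups}
    return scores, max(scores.values()) - min(scores.values())


def biased_toy_scorer(text: str) -> float:
    """Deliberately biased stand-in for a model: rewards the word 'he'."""
    words = text.lower().replace(".", "").split()
    score = 0.5
    if "he" in words:
        score += 0.1
    return score


TEMPLATE = "{group} is an engineer with five years of experience."
scores, gap = counterfactual_gap(TEMPLATE, ["He", "She", "They"], biased_toy_scorer)
flagged = gap > 0.05  # arbitrary threshold chosen for illustration

print(scores)
print(f"gap={gap:.2f}, flagged={flagged}")
```

A flagged gap only says that the output depends on the swapped term; tracing why still requires the attribution or concept-based methods described earlier.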

3. Trust and Adoption in Critical Domains

- Practitioner: In high-stakes fields like healthcare (e.g., medical diagnosis assistance), finance (e.g., loan approval explanations), or legal tech (e.g., case brief generation), XAI is essential. Explanations can justify why an LLM recommended a certain diagnosis, flagged a transaction as fraudulent, or suggested a particular legal argument. This transparency builds trust with domain experts, regulators, and end-users, facilitating broader adoption.

- Enthusiast: Appreciate the complexities of deploying AI in critical environments and the paramount importance of transparency in ensuring safety and ethical use.

4. Prompt Engineering and Model Improvement

- Practitioner: XAI provides invaluable insights into how LLMs interpret prompts. Understanding why a certain prompt works or fails allows for more systematic and effective prompt engineering. If an XAI method shows that an LLM is misinterpreting a specific instruction, the prompt can be rephrased for clarity. This also informs fine-tuning strategies, helping developers focus on specific areas where the model's understanding is weak.

- Enthusiast: Learn to craft more effective prompts by gaining a deeper understanding of the LLM's "reasoning" and how different phrasing impacts its internal processes.

5. Compliance and Auditability

- Practitioner: Generate audit trails and explanations required by regulatory bodies for AI systems. XAI tools can provide documented evidence of the model's decision-making process, demonstrating due diligence and accountability, which is crucial for legal and ethical compliance. This is particularly relevant for the so-called "right to explanation" that is often read into the GDPR's provisions on automated decision-making (Article 22 and Recital 71).

- Enthusiast: Understand the legal and ethical landscape surrounding AI and the importance of responsible AI development, advocating for transparency and accountability.
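What an audit-trail entry might look like in practice can be sketched in a few lines. The record layout below is a hypothetical example, not a format required by any regulation: the field names, the decision to store a hash of the prompt instead of the raw text, and the `example-model-v1` identifier are all assumptions made for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_audit_record(model_id, prompt, output, method, attributions):
    """Bundle an LLM output with its explanation into one tamper-evident entry."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        # Assumption: the raw prompt may be sensitive, so only its hash is kept.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
        "explanation": {"method": method, "attributions": attributions},
    }
    record["record_sha256"] = _digest(record)
    return record


def verify_audit_record(record) -> bool:
    """Recompute the digest to check the entry has not been altered."""
    body = {k: v for k, v in record.items() if k != "record_sha256"}
    return _digest(body) == record["record_sha256"]


record = make_audit_record(
    model_id="example-model-v1",
    prompt="Summarize the attached loan application.",
    output="The applicant meets the stated income criteria.",
    method="occlusion",
    attributions=[["income", 0.62], ["criteria", 0.21]],
)
print(json.dumps(record, indent=2))
print("intact:", verify_audit_record(record))
```

Storing the explanation method alongside the attributions matters for auditability, since the same output can receive different explanations from different methods.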

6. Educational Tools

- Practitioner/Enthusiast: XAI can serve as a powerful educational tool to demystify LLMs. By visualizing internal processes and understanding how different inputs lead to different outputs, users and developers can build a more intuitive grasp of LLM capabilities, limitations, and underlying mechanisms. This fosters a deeper understanding and encourages responsible innovation.

Conclusion

Explainable AI for Large Language Models in production is no longer a niche academic interest; it is a critical necessity for the safe, ethical, and effective deployment of these powerful systems. As LLMs become increasingly integrated into the fabric of our daily lives and critical infrastructure, the ability to understand, trust, and control their behavior through robust XAI techniques will be paramount.

The ongoing research and development in prompt-based explanations, adapted post-hoc methods, concept-based reasoning, and rigorous evaluation frameworks are paving the way for a future where LLMs are not just intelligent, but also intelligible. By embracing XAI, we can unlock the full potential of LLMs, mitigating their inherent risks, fostering trust, and ensuring their responsible and beneficial integration into society. For every AI practitioner and enthusiast, understanding and applying XAI principles to LLMs is becoming an indispensable skill in navigating this transformative era of artificial intelligence.