Vision Transformers & Foundation Models: Reshaping Computer Vision's Future

Explore how Vision Transformers (ViTs) and Foundation Models are revolutionizing computer vision, moving beyond CNNs to create more capable and adaptable AI. Discover the paradigm shift in how machines 'see' and understand the world.

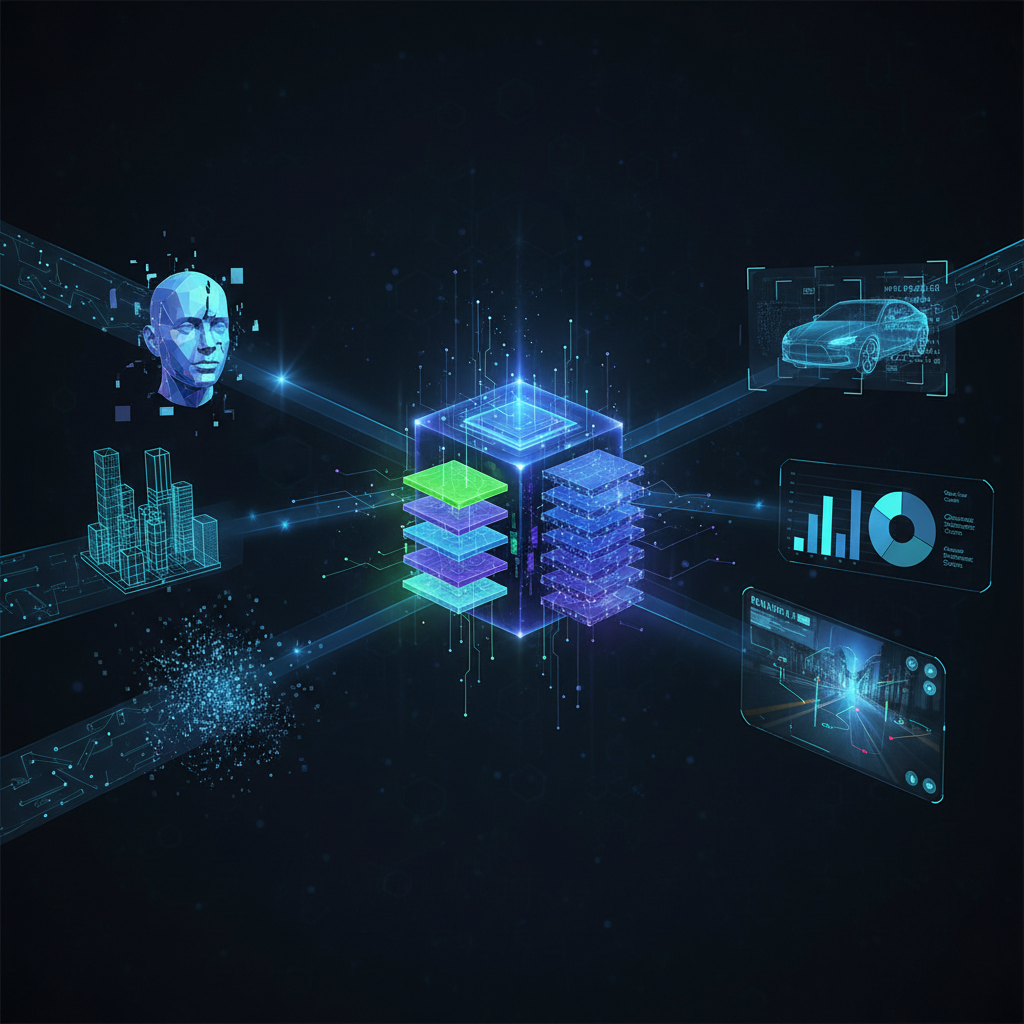

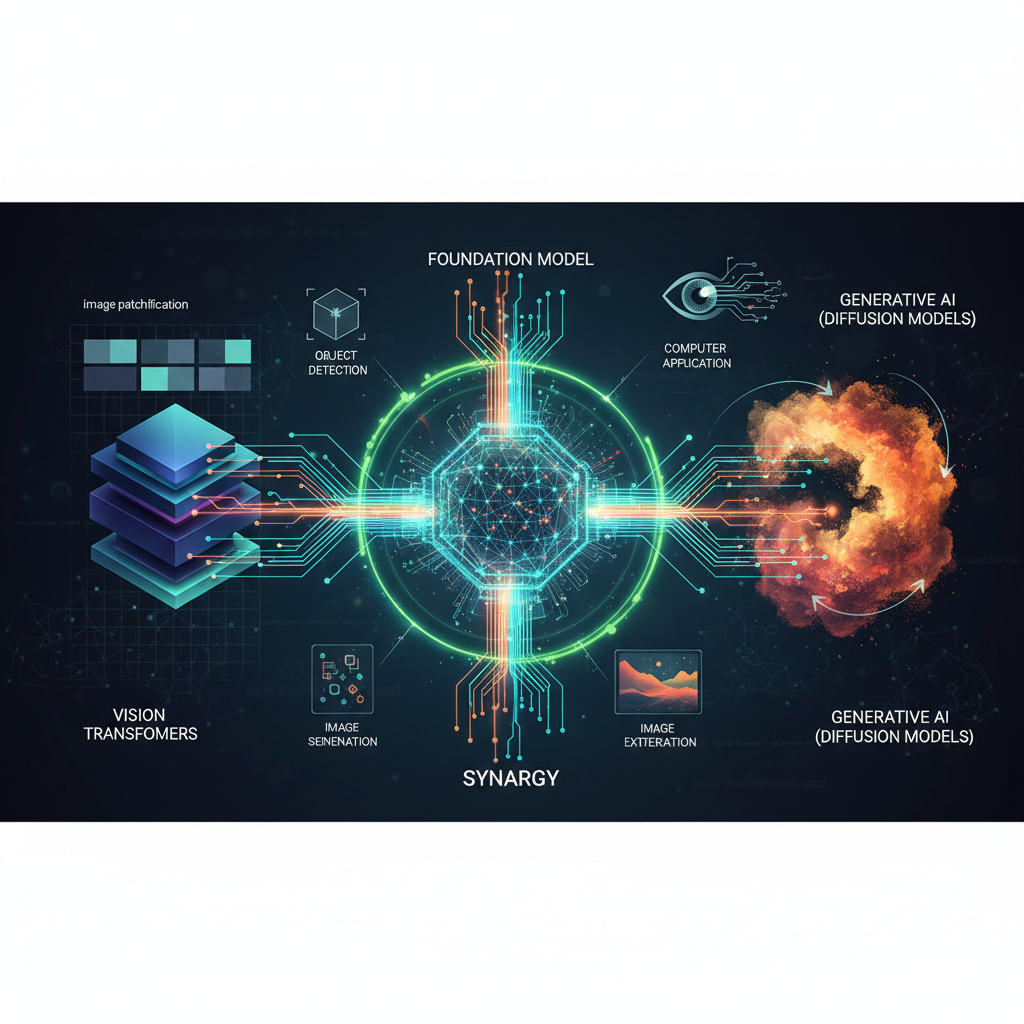

The landscape of computer vision has undergone a seismic shift in recent years, moving beyond the long-dominant convolutional neural networks (CNNs) towards a new paradigm: Foundation Models built upon the transformative power of Vision Transformers (ViTs). This evolution mirrors the revolution seen in natural language processing (NLP) with large language models (LLMs) and promises to redefine how we approach visual intelligence, making AI more capable, adaptable, and accessible than ever before.

This isn't just an incremental improvement; it's a fundamental re-architecture of how machines "see" and understand the world, paving the way for unprecedented multimodal capabilities and a future where AI can reason across different forms of data.

The Dawn of Vision Transformers: A Paradigm Shift

For nearly a decade, CNNs reigned supreme in computer vision. Their hierarchical structure, with local receptive fields and weight sharing, was perfectly suited for capturing spatial hierarchies in images. From AlexNet to ResNet, CNNs pushed the boundaries of image classification, object detection, and segmentation. However, CNNs inherently struggle with capturing long-range dependencies across an entire image due to their localized operations.

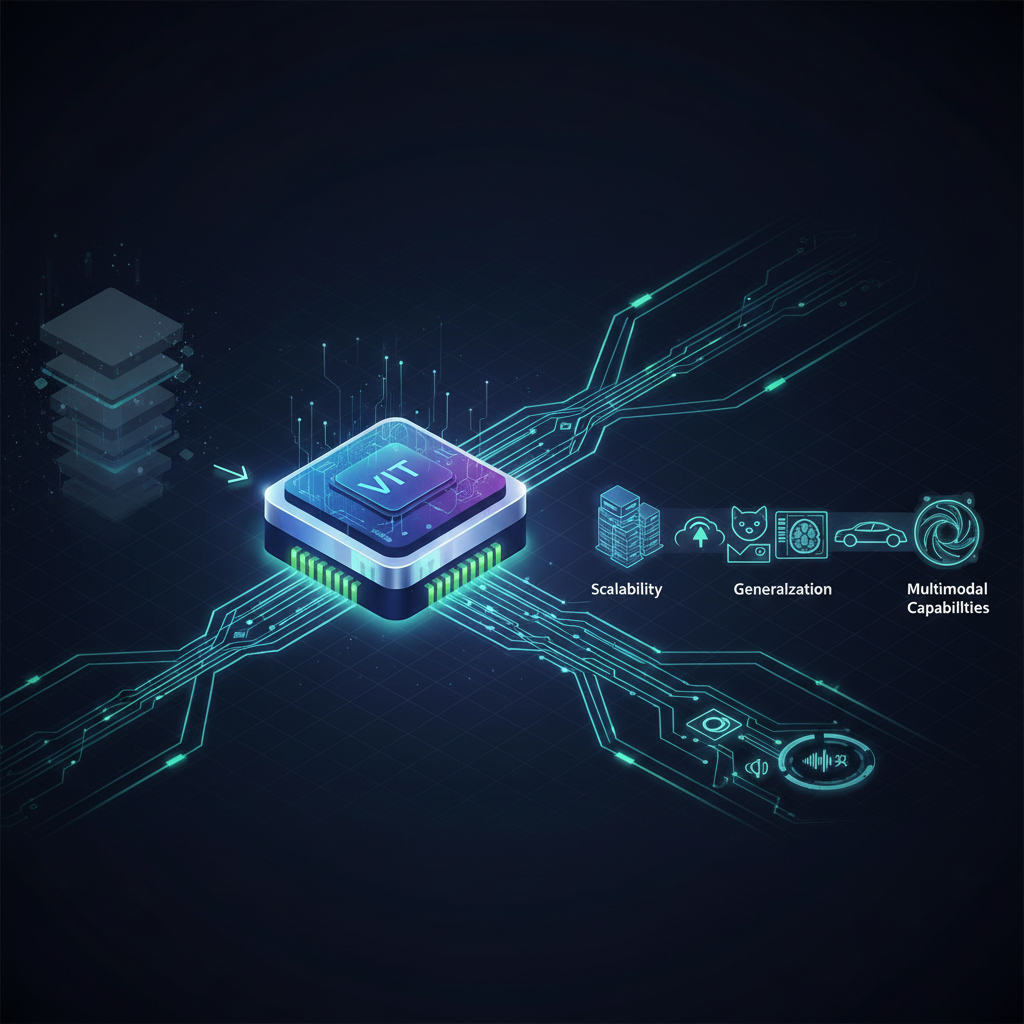

Enter the Transformer architecture, originally conceived for sequence-to-sequence tasks in NLP. Its core innovation, the self-attention mechanism, lets it weigh the importance of different parts of an input sequence relative to each other, irrespective of their distance. In 2020, Dosovitskiy et al. published "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale," a seminal paper that introduced the Vision Transformer (ViT). It demonstrated that by treating image patches as "words" in a sequence, a standard Transformer could match or surpass state-of-the-art CNNs on image classification, provided it was pre-trained on very large datasets.

How ViTs See the World: From Pixels to Patches

The core idea behind ViT is surprisingly elegant:

- Patching: An input image is divided into a grid of fixed-size, non-overlapping patches (e.g., 16x16 pixels).

- Linear Embedding: Each patch is flattened into a 1D vector and then linearly projected to the model's embedding dimension D. For example, a 16x16 RGB patch has 16 × 16 × 3 = 768 values, and ViT-Base also uses D = 768. This turns the pixel data into a sequence of "tokens."

- Positional Encoding: Self-attention has no built-in notion of token order (it is permutation-equivariant: shuffling the input tokens simply shuffles the outputs), so positional embeddings are added to each patch embedding. This injects spatial information, telling the model where each patch originated in the original image.

- Class Token: A special learnable [CLS] token (as in BERT) is prepended to the sequence of patch embeddings. The final state of this token after passing through the Transformer encoder is used for classification.

- Transformer Encoder: The sequence of embedded patches (plus the [CLS] token) is fed into a standard Transformer encoder, which consists of multiple layers of multi-head self-attention (MHSA) and multi-layer perceptron (MLP) blocks.

- Classification Head: The output of the [CLS] token from the final encoder layer is passed through a simple MLP head for classification.
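The steps above can be sketched in a few lines of NumPy. This is a minimal, untrained illustration that assumes a 224×224 RGB image, 16×16 patches, and an embedding size of 768 (the ViT-Base setting). The random projection matrix, the zero [CLS] vector, and the random positional table stand in for parameters a real model would learn, and the helper names `patchify` and `vit_tokens` are hypothetical.

```python
import numpy as np

def patchify(image, patch=16):
    """Split an (H, W, C) image into a (num_patches, patch*patch*C) array, row by row."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    gh, gw = h // patch, w // patch
    # (gh, patch, gw, patch, C) -> (gh, gw, patch, patch, C) -> (gh*gw, patch*patch*C)
    x = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)

def vit_tokens(image, w_embed, cls_token, pos_embed, patch=16):
    """Build the token sequence fed to the Transformer encoder (hypothetical helper)."""
    patches = patchify(image, patch)          # (N, P*P*C)
    tokens = patches @ w_embed                # linear embedding -> (N, D)
    tokens = np.vstack([cls_token, tokens])   # prepend [CLS] -> (N + 1, D)
    return tokens + pos_embed                 # add positional embeddings

rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))
D, P = 768, 16
num_patches = (224 // P) ** 2                   # 14 * 14 = 196
w_embed = rng.normal(0, 0.02, (P * P * 3, D))   # stands in for a learned projection
cls_token = np.zeros((1, D))                    # learned in a real model
pos_embed = rng.normal(0, 0.02, (num_patches + 1, D))

seq = vit_tokens(img, w_embed, cls_token, pos_embed)
print(seq.shape)  # (197, 768)
```

Patch extraction followed by a shared linear projection is equivalent to a convolution whose kernel size and stride both equal the patch size, which is how many implementations write this step.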

Why the Shift? CNNs excel at local feature extraction, but they propagate information across the image only indirectly: each convolution sees a small neighborhood, so the receptive field has to be grown by stacking many layers and downsampling stages. ViTs, with their self-attention mechanism, can relate any two patches from the very first layer. This allows them to capture long-range dependencies and contextual information more directly, which is crucial for understanding complex scenes.
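To make "global from the first layer" concrete, here is a minimal single-head scaled dot-product self-attention in NumPy, assuming random (untrained) projection matrices and a sequence of 197 tokens (196 patches plus [CLS]). The attention matrix has one row and one column per token, so every patch can draw on every other patch in a single layer; that same N × N matrix is also why the cost grows quadratically with the number of patches.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over an (N, D) token sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v        # (N, d) each
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (N, N): every token scored against every token
    attn = softmax(scores, axis=-1)            # each row is a distribution over all tokens
    return attn @ v, attn

rng = np.random.default_rng(0)
N, D, d = 197, 768, 64                          # tokens, model width, per-head width
x = rng.normal(size=(N, D))
w_q, w_k, w_v = (rng.normal(0, D ** -0.5, (D, d)) for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (197, 64) (197, 197)
```

A full ViT layer runs several such heads in parallel, concatenates their outputs, and follows them with an MLP, with residual connections and LayerNorm around both.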

Initial Challenges and Solutions: Early ViTs were "data-hungry." They required massive datasets (like JFT-300M with 300 million images) to outperform CNNs. Without sufficient data, they struggled to generalize. This led to innovations like:

- DeiT (Data-efficient Image Transformers): Showed that a ViT can be trained competitively on ImageNet-1k alone by combining strong data augmentation and regularization with knowledge distillation: a dedicated distillation token learns to match the predictions of a pre-trained CNN teacher.

- Swin Transformers: Addressed the computational cost and quadratic complexity of standard self-attention by introducing a hierarchical architecture with shifted windows. This allows for local attention within windows and cross-window connections, making them more efficient and better suited for dense prediction tasks like object detection and segmentation.
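A minimal NumPy sketch of Swin's windowing, assuming an (H, W, C) feature map and a window size of M = 7; the 56×56 map with C = 96 mirrors Swin-T's first stage. `window_partition` groups tokens into non-overlapping M×M windows (attention would then run inside each window), and the shifted variant cyclically rolls the map by ⌊M/2⌋ before partitioning so the next layer's windows straddle the previous layer's borders. The attention mask that real implementations apply to wrapped-around tokens is omitted. `attention_flops` uses the cost expressions from the Swin paper: 4hwC² + 2(hw)²C for global attention versus 4hwC² + 2M²hwC for window attention.

```python
import numpy as np

def window_partition(x, m):
    """Split an (H, W, C) feature map into (num_windows, m*m, C) token groups."""
    h, w, c = x.shape
    assert h % m == 0 and w % m == 0
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)

def shifted_window_partition(x, m):
    """Cyclically shift by m // 2 along both spatial axes, then partition (no masking)."""
    s = m // 2
    return window_partition(np.roll(x, shift=(-s, -s), axis=(0, 1)), m)

def attention_flops(h, w, c, m=None):
    """Approximate attention cost: global MSA if m is None, else window MSA."""
    if m is None:
        return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c

feat = np.arange(56 * 56, dtype=float).reshape(56, 56, 1)
win = window_partition(feat, 7)
print(win.shape)  # (64, 49, 1)

# Quadrupling the number of tokens quadruples the windowed cost (linear scaling).
small, large = attention_flops(56, 56, 96, m=7), attention_flops(112, 112, 96, m=7)
print(large / small)  # 4.0
```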

The Self-Supervised Revolution: Learning Without Labels

One of the biggest bottlenecks in traditional supervised learning is the need for vast amounts of meticulously labeled data. This is expensive, time-consuming, and often requires specialized domain expertise. The rise of Foundation Models in vision has been intrinsically linked to the advancements in Self-Supervised Learning (SSL). SSL allows models to learn robust visual representations from unlabeled data by creating "pretext tasks" where the input itself provides the supervision signal.

Key Self-Supervised Learning Methods for Vision

Contrastive Learning (e.g., SimCLR, MoCo):

- Idea: Learn to distinguish between different "views" of the same image (positive pairs) and views of different images (negative pairs).

- Mechanism: An image is augmented twice (e.g., random cropping, color jittering) to create two correlated views. These views are passed through an encoder network to produce embeddings. The goal is to maximize the similarity between embeddings of positive pairs and minimize similarity with negative pairs.

- Benefit: Produces highly discriminative features that are useful for downstream tasks.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Simplified InfoNCE (NT-Xent) loss in the style of SimCLR.
# Real implementations add details such as gathering negatives across devices.
class ContrastiveLoss(nn.Module):
    def __init__(self, temperature=0.07):
        super().__init__()
        self.temperature = temperature

    def forward(self, z_i, z_j):
        # z_i, z_j: [batch_size, embedding_dim] embeddings of two augmented views;
        # row k of z_i and row k of z_j come from the same image
        batch_size = z_i.shape[0]
        z = torch.cat([z_i, z_j], dim=0)  # [2*batch_size, embedding_dim]

        # Cosine similarity between all pairs: [2*batch_size, 2*batch_size]
        sim_matrix = F.cosine_similarity(z.unsqueeze(1), z.unsqueeze(0), dim=2)

        # The positive for row k is row k + batch_size, and vice versa
        labels = torch.arange(2 * batch_size, device=z.device).roll(shifts=batch_size)

        # Exclude each embedding's similarity with itself
        mask = torch.eye(2 * batch_size, dtype=torch.bool, device=z.device)
        sim_matrix = sim_matrix.masked_fill(mask, float("-inf"))

        # InfoNCE: temperature-scaled cross-entropy over all other samples
        logits = sim_matrix / self.temperature
        return F.cross_entropy(logits, labels)

loss = ContrastiveLoss()(torch.randn(8, 128), torch.randn(8, 128))
```

Masked Autoencoders (MAE):

- Idea: Inspired by BERT in NLP, MAE masks out a large portion of image patches and then trains the model to reconstruct the missing pixels.

- Mechanism: A high percentage (e.g., 75%) of image patches are randomly masked. Only the visible patches are fed into the encoder (a standard ViT). A separate, lightweight decoder then takes the encoded visible patches together with learnable mask tokens and reconstructs the original pixel values of the masked patches.

- Benefit: Forces the model to learn a rich understanding of image structure and context to infer missing information. MAE is also cheap to pre-train: with 75% of the patches masked, the large encoder processes only a quarter of each image, and only the small decoder sees the full sequence.

```python
import torch

# Conceptual MAE training loop. `MaskedAutoencoder`, `dataloader`, and the
# `patchify` / `random_masking` methods are hypothetical placeholders; a real
# model pairs a ViT encoder with a lightweight Transformer decoder.
model = MaskedAutoencoder(mask_ratio=0.75)
optimizer = torch.optim.Adam(model.parameters())

for images in dataloader:
    # 1. Split each image into patches: [B, N, patch_dim]
    patches = model.patchify(images)

    # 2. Randomly mask patches; `mask` is [B, N] with 1 = masked, 0 = visible
    visible_patches, mask, ids_restore = model.random_masking(patches)

    # 3. Encode only the visible patches
    encoded_features = model.encoder(visible_patches)

    # 4. The decoder inserts mask tokens at the masked positions and predicts pixels for all N patches
    reconstructed = model.decoder(encoded_features, ids_restore)  # [B, N, patch_dim]

    # 5. MSE loss, averaged over the masked patches only
    per_patch_loss = ((reconstructed - patches) ** 2).mean(dim=-1)  # [B, N]
    loss = (per_patch_loss * mask).sum() / mask.sum()

    # 6. Backpropagate and update weights
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
```
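The masking step itself is easy to make concrete. A minimal NumPy sketch, assuming patches are already extracted into a (B, N, patch_dim) array: draw uniform noise for every patch, sort it, and keep the patches with the smallest noise values, which gives an independent random subset per image. This noise-and-argsort approach is a common way to implement MAE masking; the function name `random_masking` and its return values are illustrative assumptions.

```python
import numpy as np

def random_masking(patches, mask_ratio=0.75, rng=None):
    """Randomly keep (1 - mask_ratio) of the patches in each sample.

    patches: (B, N, patch_dim). Returns the visible patches (B, N_keep, patch_dim),
    a binary mask (B, N) with 1 = masked and 0 = visible, and the kept indices (B, N_keep).
    """
    rng = np.random.default_rng() if rng is None else rng
    b, n, _ = patches.shape
    n_keep = int(n * (1 - mask_ratio))
    noise = rng.random((b, n))                # one random score per patch
    ids_shuffle = np.argsort(noise, axis=1)   # ascending: smallest noise values are kept
    ids_keep = ids_shuffle[:, :n_keep]
    visible = np.take_along_axis(patches, ids_keep[:, :, None], axis=1)
    mask = np.ones((b, n))
    np.put_along_axis(mask, ids_keep, 0.0, axis=1)
    return visible, mask, ids_keep

rng = np.random.default_rng(0)
patches = rng.random((2, 196, 768))           # 2 images, 14x14 patches of 16x16x3 pixels
visible, mask, ids_keep = random_masking(patches, 0.75, rng)
print(visible.shape, mask.sum(axis=1))        # (2, 49, 768) [147. 147.]
```

Only `visible` (49 of 196 patches per image) would be passed to the encoder, which is where MAE's pre-training savings come from.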

Self-Distillation (e.g., DINO):

- Idea: Self-supervised learning without labels and without negative pairs. DINO (self-DIstillation with NO labels) trains a "student" network to match the output of a "teacher" network; both share the same architecture, typically a ViT.

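- Mechanism: The teacher's weights are an exponential moving average (EMA) of the student's weights, which provides a stable target. The two networks receive different augmented views of the same image, and the student is trained to predict the teacher's output (a probability distribution over prototypes) from a different view. To keep the outputs from collapsing to a trivial solution, the teacher's outputs are centered (a running mean is subtracted) and sharpened with a low softmax temperature.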

- Benefit: DINO produces high-quality feature representations, often exhibiting emergent properties like object segmentation without explicit supervision.
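A minimal NumPy sketch of DINO's core ingredients, assuming both networks have already produced K-dimensional output logits for a batch of views: the cross-entropy between the centered, sharpened teacher distribution and the student distribution, plus the EMA updates for the center and the teacher weights. The temperatures (0.1 for the student, 0.04 for the teacher) and momentum values are illustrative assumptions rather than tuned settings, and the 10-element "parameter vectors" are toy stand-ins.

```python
import numpy as np

def log_softmax(x, temp):
    z = x / temp
    z = z - z.max(axis=-1, keepdims=True)   # for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def dino_loss(student_logits, teacher_logits, center, t_student=0.1, t_teacher=0.04):
    """Cross-entropy H(P_teacher, P_student), averaged over the batch.

    The teacher output is centered (running mean subtracted) and sharpened
    (low temperature) before the softmax; together these discourage collapse.
    In a real implementation no gradient flows into the teacher.
    """
    p_teacher = np.exp(log_softmax(teacher_logits - center, t_teacher))
    log_p_student = log_softmax(student_logits, t_student)
    return -(p_teacher * log_p_student).sum(axis=-1).mean()

def ema(old, new, momentum):
    """Exponential moving average, used for both the teacher weights and the center."""
    return momentum * old + (1.0 - momentum) * new

rng = np.random.default_rng(0)
B, K = 8, 16                                # batch size, output dimension
student_out = rng.normal(size=(B, K))       # student's output for one view
teacher_out = rng.normal(size=(B, K))       # teacher's output for a different view
center = np.zeros(K)

loss = dino_loss(student_out, teacher_out, center)
center = ema(center, teacher_out.mean(axis=0), momentum=0.9)

# Teacher weights follow the student: theta_t <- m * theta_t + (1 - m) * theta_s
theta_student = rng.normal(size=10)         # toy stand-in for the student's parameters
theta_teacher = rng.normal(size=10)
theta_teacher = ema(theta_teacher, theta_student, momentum=0.996)
```

Because the teacher is never trained by gradient descent, its slowly moving weights act like an ensemble of recent students, which is what makes it a stable target.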

Benefits of SSL: SSL is a game-changer because it drastically reduces the reliance on expensive labeled datasets. Models pre-trained with SSL on massive amounts of unlabeled data learn highly generalizable visual representations. These representations can then be fine-tuned on much smaller labeled datasets for specific downstream tasks, achieving superior performance with significantly less data. This makes AI more accessible and applicable to domains where labeled data is scarce.

Multimodal Foundation Models: Bridging Vision and Language

Perhaps the most exciting frontier opened by Foundation Models is multimodal learning, particularly the integration of vision and language. Because Transformers operate on generic token sequences, any modality that can be tokenized (image patches, words) can be handled by the same architecture. These models learn joint representations that capture the relationships between images and text, enabling applications that neither modality supports on its own.

Landmark Multimodal Models

CLIP (Contrastive Language-Image Pre-training):

- Idea: Learn to associate text descriptions with images by training on a vast dataset of image-text pairs scraped from the internet.

- Mechanism: CLIP simultaneously trains an image encoder (a ViT or a ResNet) and a text encoder (a Transformer) to produce embeddings. During training, it pulls embeddings of matching image-text pairs closer together and pushes non-matching pairs further apart in a shared embedding space.

- Impact: Enables "zero-shot" classification. Given a new image, CLIP can classify it into arbitrary categories by comparing the image's embedding to the embeddings of text descriptions of those categories (e.g., "a photo of a dog," "a photo of a cat"). This means it can handle label sets it was never explicitly trained to predict. The same embeddings also power text-to-image search and content filtering.

```python
import torch
from PIL import Image
from transformers import CLIPModel, CLIPProcessor

# Zero-shot classification with a pre-trained CLIP checkpoint
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

image = Image.open("my_dog.jpg")
candidate_labels = ["a photo of a dog", "a photo of a cat", "a photo of a car"]

inputs = processor(text=candidate_labels, images=image, return_tensors="pt", padding=True)
with torch.no_grad():
    outputs = model(**inputs)

# Scaled cosine similarity between the image and each text prompt: [1, num_labels]
logits_per_image = outputs.logits_per_image
probs = logits_per_image.softmax(dim=1)  # convert to probabilities over the labels

print(f"Image classified as: {candidate_labels[probs.argmax().item()]}")
# Example output: Image classified as: a photo of a dog
```
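Zero-shot inference relies on embeddings learned during pre-training. A minimal NumPy sketch of CLIP's symmetric contrastive objective, assuming a batch of N already-computed image and text embeddings where row i of each forms a matching pair: L2-normalize both, compute an N × N matrix of temperature-scaled cosine similarities, and average the cross-entropy along rows (image to text) and columns (text to image), with the diagonal as the correct targets. The fixed temperature of 0.07 is an assumption for illustration; CLIP learns this scale as a parameter.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))

def clip_loss(img_emb, txt_emb, temperature=0.07):
    img, txt = l2_normalize(img_emb), l2_normalize(txt_emb)
    logits = img @ txt.T / temperature       # (N, N); entry [i, j] = image i vs text j
    loss_i2t = cross_entropy_diag(logits)    # each image should pick its own caption
    loss_t2i = cross_entropy_diag(logits.T)  # each caption should pick its own image
    return (loss_i2t + loss_t2i) / 2

rng = np.random.default_rng(0)
N, D = 4, 512
txt_emb = rng.normal(size=(N, D))
aligned = txt_emb + 0.1 * rng.normal(size=(N, D))  # images close to their own captions
shuffled = aligned[::-1]                           # every image paired with a wrong caption
print(clip_loss(aligned, txt_emb) < clip_loss(shuffled, txt_emb))  # True
```

Every other item in the batch serves as a negative, which is one reason CLIP was trained with very large batches.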

DALL-E / Stable Diffusion:

- Idea: Generate novel images from text descriptions.

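- Mechanism: DALL-E 2 and Stable Diffusion pair a text encoder (Stable Diffusion v1, for example, uses CLIP's text encoder) with a diffusion model that iteratively refines random noise into an image guided by the text representation; Stable Diffusion runs this process in the compressed latent space of an autoencoder. The original DALL-E took a different route, generating discrete image tokens autoregressively with a Transformer. In each case, the model learns to map textual concepts to visual features.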

- Impact: Revolutionized creative AI, enabling text-to-image generation, image editing, and style transfer with unprecedented control and quality.
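Two equations do much of the work in text-conditioned diffusion, and both fit in a few lines. The NumPy sketch below assumes a DDPM-style forward process, in which a clean sample x0 is noised in closed form as x_t = sqrt(ᾱ_t)·x0 + sqrt(1 − ᾱ_t)·ε, and classifier-free guidance, which Stable Diffusion uses to push the noise prediction toward the text condition. The linear beta schedule and the guidance scale of 7.5 are illustrative choices, and `noise_model` / `oracle` are hypothetical stand-ins for the trained denoising network.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)     # DDPM-style linear noise schedule
alpha_bar = np.cumprod(1.0 - betas)    # fraction of signal variance left at each step

def add_noise(x0, t, eps):
    """Forward process: jump straight from clean x0 to noisy x_t."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps

def predict_x0(x_t, t, eps_hat):
    """Invert the forward process given a noise estimate eps_hat."""
    return (x_t - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])

def guided_noise(noise_model, x_t, t, cond, scale=7.5):
    """Classifier-free guidance: extrapolate from the unconditional prediction toward
    the text-conditioned one. `noise_model(x, t, cond)` is hypothetical; cond=None
    stands for the empty prompt."""
    eps_uncond = noise_model(x_t, t, None)
    eps_cond = noise_model(x_t, t, cond)
    return eps_uncond + scale * (eps_cond - eps_uncond)

rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 8, 8))         # stand-in for an image or a latent
eps = rng.normal(size=x0.shape)
t = 500
x_t = add_noise(x0, t, eps)

def oracle(x, step, cond):
    """Hypothetical 'perfect' denoiser that returns the true noise."""
    return eps

eps_hat = guided_noise(oracle, x_t, t, cond="a photo of a dog")
print(np.allclose(predict_x0(x_t, t, eps_hat), x0))  # True
```

A sampler repeats this estimate at every step, moving from pure noise at t = T − 1 toward t = 0; the trained network's noise estimates are what carry the text-to-image mapping.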

Practical Applications of Multimodality:

- Zero-shot Classification/Detection: Classify or detect objects without needing labeled examples for every category. Crucial for rare events or rapidly evolving categories.

- Image Captioning: Automatically generate descriptive text for images.

- Visual Question Answering (VQA): Answer questions about the content of an image (e.g., "What is the person doing?" "How many cars are there?").

- Content Moderation: Identify and filter inappropriate content based on visual and textual cues.

- Creative Tools: Generate art, design assets, and visual content from simple text prompts.

- Accessibility: Describe images for visually impaired users.

Architectural Innovations & Efficiency

While ViTs established the core idea, subsequent research has focused on making them more efficient, robust, and adaptable to various tasks.

- Swin Transformers: As mentioned, Swin Transformers introduced hierarchical attention with shifted windows. This design makes them more efficient for dense prediction tasks (like segmentation and detection) because the attention complexity scales linearly with image size, unlike the quadratic scaling of vanilla ViTs. They also produce multi-scale feature maps, similar to CNNs, which are beneficial for these tasks.

- ConvNeXt: This is a fascinating development. After ViTs demonstrated superior performance, researchers at Meta asked: "Can we modernize CNNs using ViT design principles?" ConvNeXt is the answer. It systematically re-evaluated design choices in ResNets, incorporating elements like larger kernel sizes, inverted bottleneck structures, and activation functions inspired by Transformers. The result is a CNN architecture that is competitive with, and sometimes even surpasses, ViTs, demonstrating that CNNs are far from obsolete and can benefit from architectural insights gained from Transformers. This highlights a convergence of ideas rather than a complete replacement.

- Efficient Implementations: The computational demands of Transformers are significant. Researchers are actively working on:

- Quantization: Reducing the precision of model weights (e.g., from 32-bit floating point to 8-bit integers) to save memory and speed up inference.

- Pruning: Removing redundant connections or neurons from the network without significant performance loss.

- Knowledge Distillation: Training smaller, more efficient "student" models to mimic larger "teacher" models.

- Specialized Hardware: Development of AI accelerators (TPUs, NPUs) optimized for Transformer operations.
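To make the ConvNeXt design choices concrete, here is a forward-pass-only NumPy sketch of a single ConvNeXt-style block, assuming an (H, W, C) feature map and randomly initialized weights: a 7×7 depthwise convolution (one filter per channel), LayerNorm over channels, a pointwise MLP that expands channels 4× with a GELU in between (the inverted bottleneck), and a residual connection. Learnable LayerNorm parameters, layer scale, and stochastic depth are omitted, and GELU uses the common tanh approximation.

```python
import numpy as np

def depthwise_conv2d(x, kernels):
    """'Same'-padded depthwise convolution (cross-correlation, as in DL frameworks).

    x: (H, W, C); kernels: (k, k, C), one k x k filter per channel.
    """
    k = kernels.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros_like(x)
    for i in range(k):
        for j in range(k):
            out += xp[i:i + h, j:j + w, :] * kernels[i, j, :]  # channels never mix here
    return out

def layer_norm(x, eps=1e-6):
    """Normalize over the channel dimension (learnable scale and shift omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    """tanh approximation of GELU."""
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))

def convnext_block(x, dw_kernels, w1, b1, w2, b2):
    y = depthwise_conv2d(x, dw_kernels)  # 7x7 spatial mixing, per channel
    y = layer_norm(y)
    y = gelu(y @ w1 + b1)                # pointwise expansion C -> 4C
    y = y @ w2 + b2                      # pointwise projection 4C -> C
    return x + y                         # residual connection

rng = np.random.default_rng(0)
H, W, C = 14, 14, 32
x = rng.normal(size=(H, W, C))
dw = rng.normal(0, 0.1, (7, 7, C))
w1, b1 = rng.normal(0, 0.02, (C, 4 * C)), np.zeros(4 * C)
w2, b2 = rng.normal(0, 0.02, (4 * C, C)), np.zeros(C)
print(convnext_block(x, dw, w1, b1, w2, b2).shape)  # (14, 14, 32)
```

Apart from the convolution, the layout (normalize, expand 4×, GELU, project back, add residual) matches a Transformer's MLP sub-block, which illustrates the convergence of ideas the ConvNeXt work points to.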
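Quantization and pruning are simple enough to sketch directly on a weight matrix. The NumPy example below assumes symmetric, per-tensor int8 quantization (a single scale for the whole tensor) and unstructured magnitude pruning (zeroing the smallest-magnitude weights); production toolchains add per-channel scales, calibration on real activations, and fine-tuning after pruning, none of which is shown. The 768 × 768 matrix is a random toy stand-in for a layer's weights.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization: w ≈ scale * q with q in [-127, 127]."""
    scale = np.abs(w).max() / 127.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

def magnitude_prune(w, sparsity):
    """Zero out the `sparsity` fraction of entries with the smallest |w| (unstructured)."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]  # k-th smallest magnitude
    return np.where(np.abs(w) > threshold, w, 0.0).astype(w.dtype)

rng = np.random.default_rng(0)
w = rng.normal(0, 0.02, (768, 768)).astype(np.float32)  # toy weight matrix

q, scale = quantize_int8(w)
print(q.nbytes / w.nbytes)                         # 0.25
max_err = np.abs(dequantize(q, scale) - w).max()   # about half a quantization step at most

pruned = magnitude_prune(w, sparsity=0.5)
zero_fraction = np.mean(pruned == 0)               # close to the requested 0.5
```

Unstructured sparsity like this saves memory only with sparse storage formats and speeds up inference only on hardware or kernels that exploit it, which is why structured pruning (removing whole channels or heads) is often preferred in practice.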

Challenges and Future Directions

Despite their immense potential, Foundation Models in computer vision face several challenges that are actively being researched:

- Computational Cost & Energy Consumption: Training these models requires massive computational resources and energy, raising concerns about environmental impact and accessibility for smaller research groups.

- Interpretability and Explainability: Understanding why a ViT makes a particular decision remains difficult. Attention maps are easy to visualize, but the weights in any single layer do not by themselves explain a prediction, and saliency methods developed for CNNs have to be adapted. New methods are needed to provide transparent explanations.

- Bias and Fairness: Foundation models are trained on vast, often unfiltered, datasets from the internet. These datasets can embed societal biases (e.g., gender, race, stereotypes), leading to unfair or discriminatory outcomes when the models are deployed. Robust bias detection, mitigation, and ethical deployment strategies are critical.

- Deployment at Scale: Integrating these powerful but complex models into real-world applications requires significant engineering effort, including model compression, efficient inference, and robust MLOps practices.

- Beyond Images: The principles of Foundation Models are extending beyond static images to dynamic data like video (e.g., VideoMAE), 3D data, and even other sensory inputs like audio and sensor data. The ultimate goal is truly general-purpose AI that can perceive and reason across all modalities.

- Continual Learning: How can these massive models adapt to new data and tasks over time without catastrophic forgetting, especially without retraining from scratch?

Conclusion

The era of Foundation Models for computer vision, spearheaded by Vision Transformers and fueled by self-supervised and multimodal learning, represents a profound leap forward. We've moved from models that excel at specific tasks to models that learn general-purpose visual intelligence, capable of adapting to a vast array of downstream applications with remarkable efficiency.

From understanding the intricate details of an image to generating entirely new visual content from a text prompt, these models are reshaping our interaction with AI. While challenges related to computational cost, interpretability, and bias remain, the rapid pace of innovation suggests that the next decade will witness even more astonishing advancements. For practitioners and enthusiasts alike, understanding this paradigm shift is not just about staying current; it's about being equipped to build the future of intelligent systems. The journey has just begun, and the vision ahead is clearer and more expansive than ever before.