Vision Transformers: Ushering in a New Era of Computer Vision and Foundation Models

Explore the revolutionary shift in computer vision from traditional CNNs to Vision Transformers (ViT) and their multimodal successors. Discover how these foundation models are redefining AI capabilities and democratizing advanced visual intelligence.

The world of artificial intelligence is in a constant state of flux, but every so often, a paradigm shift occurs that fundamentally redefines how we approach problems. For the past decade, Convolutional Neural Networks (CNNs) reigned supreme in computer vision, proving their mettle across countless tasks from image classification to object detection. However, a new contender has emerged, one that has not only challenged but, in many ways, surpassed the capabilities of traditional CNNs: the Vision Transformer (ViT) and its multimodal successors. This shift isn't merely an incremental improvement; it's a foundational transformation, ushering in an era of "foundation models" that promise to democratize advanced computer vision and unlock unprecedented capabilities, especially when combined with other modalities like language.

The Seismic Shift: From CNNs to Transformers in Vision

For years, the architecture of choice for computer vision tasks was the Convolutional Neural Network. CNNs excelled due to their inherent properties: local receptive fields, which allowed them to efficiently capture local patterns like edges and textures, and translation equivariance, meaning they could detect a feature regardless of its position in an image. Layers of convolutions, pooling, and non-linearities built up hierarchical representations, making them incredibly effective.

However, CNNs had limitations. Their local nature meant they often struggled to capture long-range dependencies or global context without very deep architectures or specialized modules. Enter the Transformer.

Originally conceived for Natural Language Processing (NLP) by Google Brain in their seminal 2017 paper "Attention Is All You Need," Transformers revolutionized sequence modeling. Their core innovation was the self-attention mechanism, which allows every element in an input sequence to weigh the importance of every other element, effectively capturing global dependencies regardless of their distance. This was a stark contrast to recurrent neural networks (RNNs) that processed information sequentially, often losing context over long sequences.

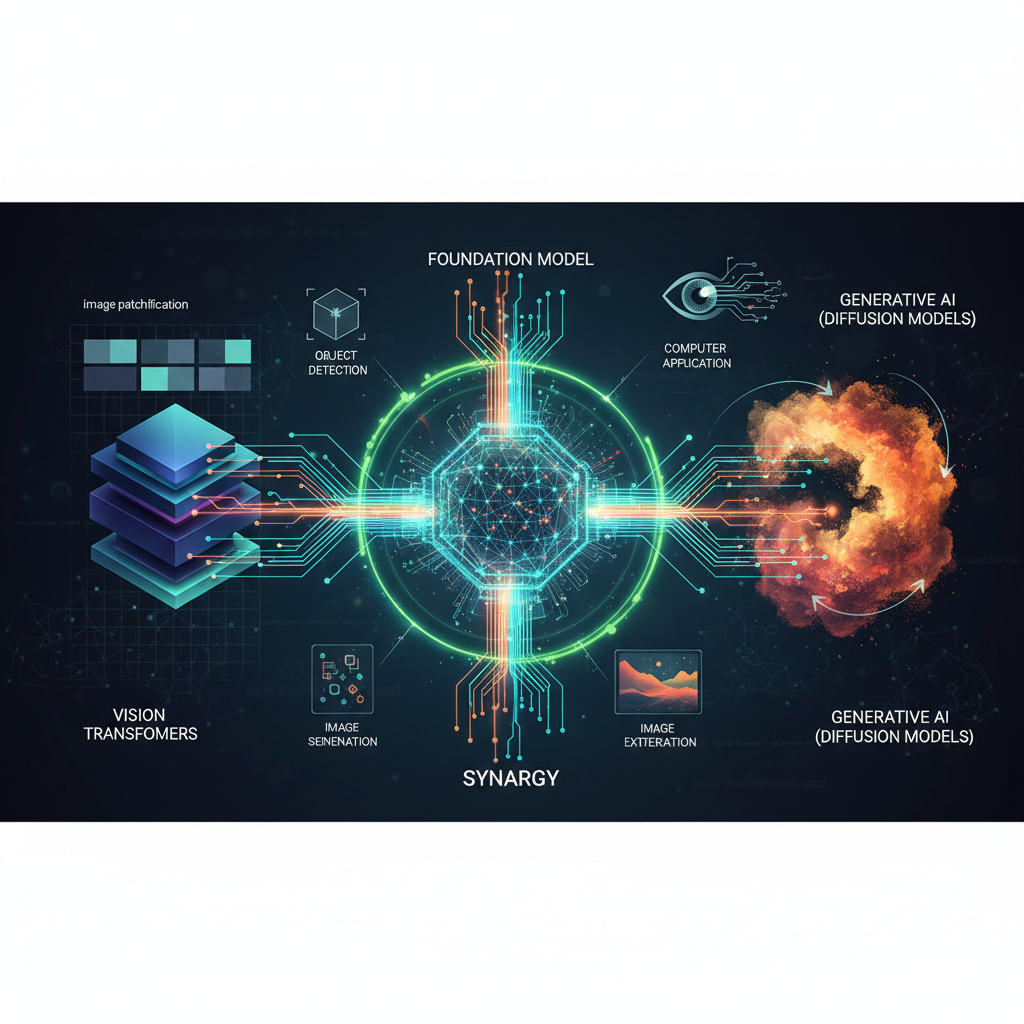

The question then became: could this powerful architecture be adapted for images? Images are not sequences of words; they are grids of pixels. The breakthrough came with the Vision Transformer (ViT) in 2020. The key insight was surprisingly simple yet profoundly impactful: treat an image as a sequence of patches.

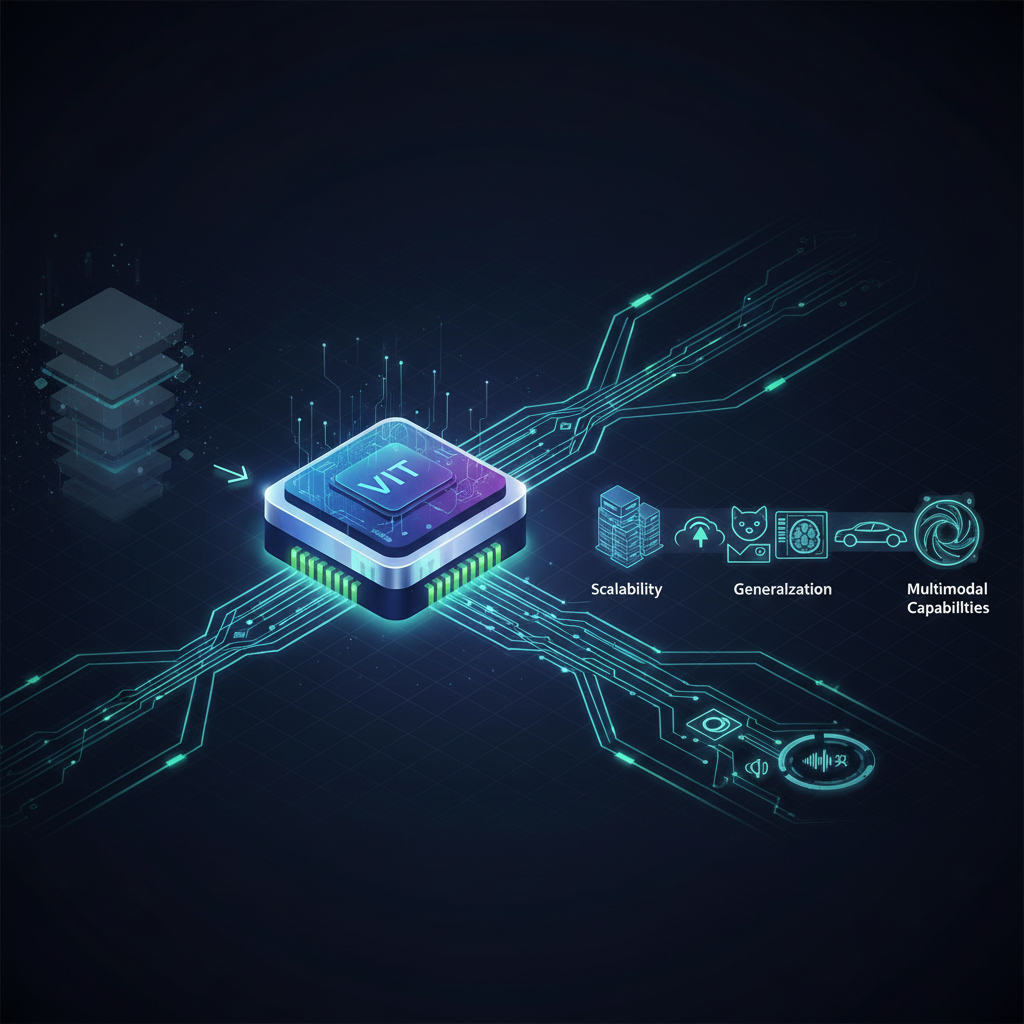

Vision Transformers (ViT): Patching Up the Visual World

The original ViT paper, "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale," demonstrated that if you divide an image into fixed-size, non-overlapping patches (e.g., 16x16 pixels), flatten each patch into a vector, and then linearly embed these vectors, you effectively create a sequence of "visual words." Positional encodings are added to these patch embeddings to retain spatial information, as Transformers are inherently permutation-invariant. This sequence is then fed into a standard Transformer encoder, identical to those used in NLP.

The power of ViT lies in its ability to model global relationships. Unlike CNNs that build up global understanding through many layers of local operations, ViTs can directly attend to features across the entire image from the very first layer. This global perspective proved incredibly effective, especially when trained on massive datasets. While initial ViTs required vast amounts of data to outperform CNNs (due to the lack of inherent inductive biases like translation equivariance), their performance scaled dramatically with dataset size.

Key Variants and Improvements:

- Swin Transformers: While ViTs were powerful, their global self-attention mechanism scaled quadratically with the number of patches, making them computationally expensive for high-resolution images. Swin Transformers introduced a hierarchical architecture and "shifted window" attention. Instead of attending globally, patches attend only within local windows, and these windows are shifted between layers, allowing for cross-window connections and hierarchical feature learning, much like CNNs. This made Swin Transformers more efficient and performant across a wider range of vision tasks.

- Masked Autoencoders (MAE): Inspired by BERT's masked language modeling, MAE applies self-supervised learning to ViTs. It masks out a large portion of image patches (e.g., 75%) and trains the Transformer to reconstruct the missing pixels. This forces the model to learn rich, semantic representations of the image content, making it an excellent pre-training strategy.

- DeiT (Data-efficient Image Transformers): Addressed the data hunger of early ViTs by introducing a "distillation token." This token interacts with the Transformer layers and is trained to mimic the output of a pre-trained CNN teacher model, allowing ViTs to achieve competitive performance with less data.

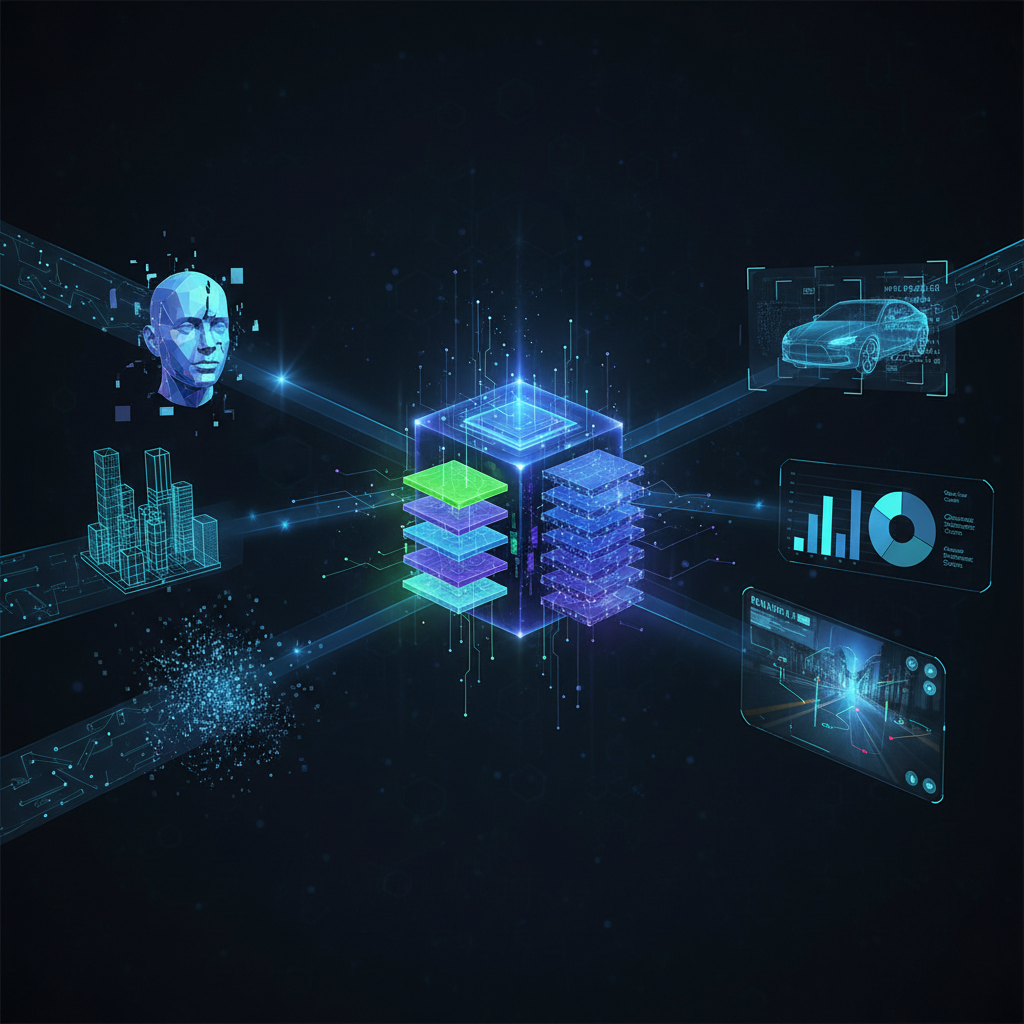

Foundation Models for Vision: The New Paradigm

The success of ViTs, especially when pre-trained on massive, diverse datasets, paved the way for the concept of "foundation models" in computer vision. Just like large language models (LLMs) such as GPT-3/4 are pre-trained on vast text corpora and then fine-tuned for various downstream NLP tasks, vision foundation models are large, general-purpose models pre-trained on enormous image datasets. They learn a broad, transferable understanding of visual concepts, making them highly adaptable to a wide array of specific tasks with minimal fine-tuning.

Pre-training Strategies for Vision Foundation Models:

-

Supervised Pre-training: The most straightforward approach involves training on massive, human-labeled datasets. Datasets like JFT-300M (300 million images with labels) have been instrumental in training early ViTs to achieve state-of-the-art performance. While effective, this approach is limited by the availability and cost of human annotations.

-

Self-Supervised Learning (SSL): This is where much of the innovation lies. SSL methods learn powerful representations from unlabeled data by creating "pretext tasks" where the model generates its own labels.

- Masked Autoencoders (MAE): As mentioned, MAE learns by reconstructing masked image patches, forcing it to understand the underlying structure and semantics of images.

- DINO (Self-supervised Vision Transformers): DINO uses knowledge distillation with no labels. It trains a "student" ViT to match the output of an exponentially moving average "teacher" ViT, encouraging the student to learn strong, distinct features without explicit supervision.

- MoCo v3 (Momentum Contrast v3): A contrastive learning approach that learns representations by contrasting positive pairs (different augmentations of the same image) with negative pairs (different images), pulling positive pairs closer in the embedding space while pushing negative pairs apart.

-

Contrastive Learning (Vision-Language Alignment): This strategy bridges the gap between vision and language, a crucial step towards multimodal AI.

- CLIP (Contrastive Language-Image Pre-training): Perhaps the most influential example. CLIP trains a vision encoder (a ViT) and a text encoder (a Transformer) jointly. It learns to associate images with their corresponding text captions by maximizing the similarity of correct image-text pairs and minimizing the similarity of incorrect pairs within a batch. This is done on a massive dataset of image-text pairs scraped from the internet (e.g., 400 million pairs for CLIP).

Emergent Capabilities:

These large-scale pre-training strategies enable foundation models to learn incredibly rich and transferable representations. They develop a nuanced understanding of objects, scenes, attributes, and even abstract concepts, without being explicitly programmed for them. This allows for:

- Strong Generalization: Performance on unseen data and out-of-distribution examples is significantly improved.

- Zero-shot and Few-shot Learning: The ability to perform tasks for which they haven't been explicitly trained, or with very few examples, by leveraging their broad understanding.

- Semantic Understanding: The learned embeddings often capture deep semantic relationships, going beyond superficial visual features.

Multimodal Vision-Language Models: The Future is Interconnected

The true power of foundation models blossoms when different modalities are brought together. The world isn't just images or text; it's a rich tapestry of sensory information. Multimodal models, particularly those integrating vision and language, are pushing AI closer to human-like understanding and interaction.

CLIP: The Zero-Shot Vision Powerhouse

CLIP's pre-training objective of aligning image and text embeddings has profound implications. It can perform zero-shot classification: given an image, you can query it with arbitrary text descriptions (e.g., "a photo of a cat," "a picture of a dog," "an image of a bicycle"). CLIP will then calculate the similarity between the image embedding and each text embedding and predict the class corresponding to the highest similarity. This means you don't need to retrain the model for new classes; you just provide new text descriptions.

Beyond classification, CLIP excels at:

- Image Retrieval: Finding images matching a text query or finding text matching an image.

- Object Detection (Zero-shot): When combined with region proposals, CLIP can identify objects described by text without ever seeing examples of those objects during training.

Diffusion Models: Generating the Unseen

While CLIP understands the relationship between text and images, Diffusion Models are masters of creation. These generative models have taken the world by storm with their ability to produce incredibly realistic and diverse images from text prompts.

- Introduction to Diffusion: Diffusion models work by gradually adding Gaussian noise to an image until it becomes pure noise. The training process then involves learning to reverse this process: predicting and removing the noise at each step to reconstruct the original image. This "denoising" process is typically performed by a U-Net architecture.

- Latent Diffusion Models (LDMs): Models like Stable Diffusion and DALL-E 2 are Latent Diffusion Models. Instead of operating directly on high-resolution pixel space, they perform the diffusion process in a lower-dimensional "latent space." This significantly reduces computational cost and memory requirements. The magic for text-to-image generation happens when a text encoder (often a frozen CLIP text encoder) is used to condition the denoising process. The text prompt guides the model on what to generate.

- ControlNet, LoRA: The ecosystem around diffusion models is rapidly evolving.

- ControlNet allows for fine-grained control over image generation by incorporating additional input conditions like edge maps, depth maps, or human poses. This enables users to guide the generated image's structure and composition.

- LoRA (Low-Rank Adaptation) is an efficient fine-tuning technique that allows users to adapt large pre-trained models to specific styles, objects, or concepts with minimal computational overhead and storage. It injects small, trainable matrices into the Transformer layers, significantly reducing the number of parameters that need to be updated during fine-tuning.

Visual Question Answering (VQA) & Image Captioning:

Multimodal foundation models are rapidly advancing these tasks. VQA models take an image and a natural language question about it (e.g., "What is the person in the red shirt doing?") and generate a natural language answer. Image captioning models generate descriptive text for an input image. By leveraging the aligned representations learned by models like CLIP and the generative capabilities of Transformers, these tasks are achieving unprecedented levels of coherence and accuracy.

GPT-4V (Vision): The Ultimate Integration

The release of GPT-4V, which integrates vision directly into the large language model, represents a significant leap. Instead of separate vision and language models, GPT-4V can process both image and text inputs, allowing for complex reasoning that spans modalities. You can show it an image and ask intricate questions about its content, context, and implications, and it can generate coherent, multi-turn conversational responses. This moves beyond simple captioning or VQA to truly multimodal understanding and reasoning.

Practical Implications and Applications

The rise of vision Transformers and multimodal foundation models has profound practical implications across industries:

-

Democratizing Advanced CV:

- Transfer Learning & Fine-tuning: Practitioners can now leverage massive pre-trained models and fine-tune them on relatively small, task-specific datasets. This drastically reduces the data and computational resources required to achieve state-of-the-art performance, making advanced computer vision accessible to more researchers and businesses.

- Zero-shot and Few-shot Learning: For niche applications with very limited data, zero-shot capabilities (e.g., with CLIP) allow for immediate deployment without any training, while few-shot learning enables rapid adaptation with just a handful of examples.

-

Generative AI Revolution:

- Content Creation: Artists, designers, marketers, and game developers can generate high-quality images, illustrations, and textures from simple text prompts, accelerating creative workflows and enabling new forms of digital art.

- Virtual Environments: Generating realistic and diverse assets for virtual reality, augmented reality, and metaverse applications.

- Personalization: Creating personalized content and experiences based on user preferences.

-

Enhanced Robustness and Generalization:

- Foundation models often exhibit better generalization to out-of-distribution data and improved robustness to noise and adversarial attacks compared to traditional CNNs, making them more reliable in real-world scenarios.

-

New Research Avenues:

- The field is buzzing with research on efficient fine-tuning methods (like LoRA, adapters), prompt engineering for vision models, understanding emergent properties, and exploring the scaling laws of these models.

Challenges:

Despite their immense potential, these models come with significant challenges:

- Computational Cost: Training and even inferencing with these massive models require substantial computational resources (GPUs, TPUs), making them expensive to develop and deploy.

- Data Requirements: While fine-tuning can be data-efficient, the initial pre-training requires truly gargantuan datasets, often curated from the internet, raising questions about data quality and bias.

- Ethical Concerns:

- Bias: Models trained on internet-scale data inevitably inherit biases present in that data, leading to unfair or discriminatory outputs (e.g., generating images that reinforce stereotypes).

- Misinformation and Misuse: The ability to generate highly realistic synthetic media raises concerns about deepfakes, propaganda, and the blurring of reality.

- Copyright and Attribution: The use of vast amounts of existing art and images for training raises complex legal and ethical questions regarding intellectual property rights.

- Interpretability: Understanding why these complex models make certain decisions or generate specific outputs remains a significant challenge, hindering debugging and trust.

Future Directions

The journey of foundation models in computer vision is just beginning. Several exciting avenues are being explored:

- Efficiency: Developing smaller, more efficient ViTs and multimodal models that can run on edge devices or with less computational power, making them more accessible and sustainable.

- New Architectures: Exploring hybrid approaches that combine the strengths of CNNs and Transformers, or entirely new architectures like State-Space Models (SSMs) such as Mamba, which offer linear scaling with sequence length.

- Embodied AI: Integrating vision with robotics and physical interaction, allowing AI agents to perceive, understand, and act in the real world.

- Responsible AI: A critical focus on addressing biases, developing robust evaluation metrics, ensuring transparency, and establishing ethical guidelines for the development and deployment of these powerful technologies.

- Beyond Vision-Language: Expanding to other modalities like audio, haptics, and sensor data to build truly comprehensive models of the world.

Conclusion

The landscape of computer vision has been irrevocably transformed by the advent of Vision Transformers and multimodal architectures. We've moved from a world dominated by local feature extractors to one where global context, cross-modal understanding, and emergent capabilities are paramount. Foundation models, pre-trained on vast datasets, are not just performing tasks; they are learning a generalized understanding of the visual world, much like large language models comprehend language. This paradigm shift empowers practitioners with unprecedented tools for transfer learning, zero-shot capabilities, and generative AI, while simultaneously opening up new frontiers for research into truly intelligent, multimodal AI systems. As we navigate this exciting new era, addressing the ethical and computational challenges will be as crucial as pushing the boundaries of technological innovation. The future of computer vision is bright, interconnected, and profoundly transformative.