Adaptive Agentic SDLC: The Future of AI-Driven Software Development

Explore the next frontier of AI-driven development with Adaptive Agentic SDLC. Discover how intelligent, autonomous systems can proactively adapt to dynamic requirements and evolving environments, minimizing rework and technical debt.

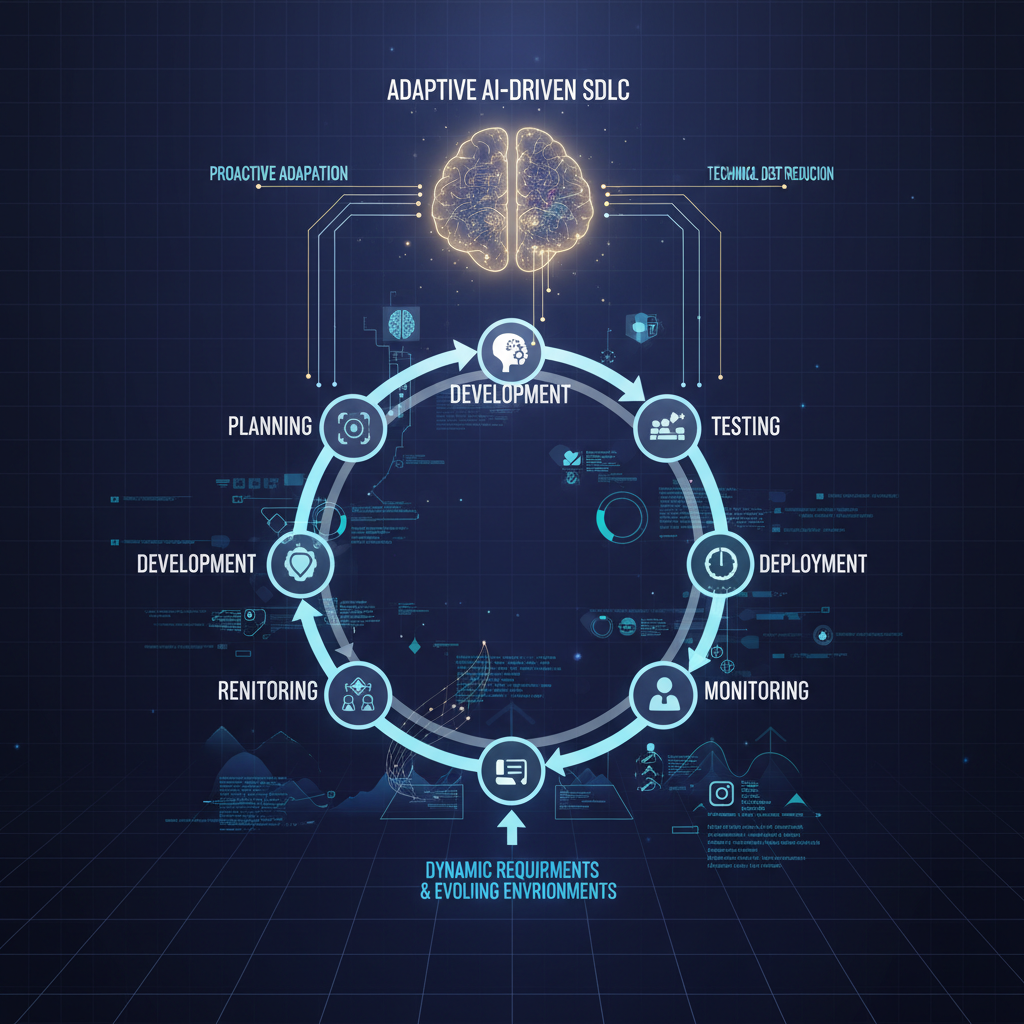

The landscape of software development is in a perpetual state of flux. Requirements shift, user feedback introduces new directions, and the underlying technological environment evolves at a dizzying pace. Traditional Software Development Life Cycles (SDLCs), even agile ones, often struggle to keep pace with this inherent dynamism, leading to rework, delays, and an accumulating pile of technical debt. Enter the next frontier of AI-driven development: Adaptive Agentic SDLC for Dynamic Requirements and Evolving Environments.

This isn't just about agents writing code from a static prompt; it's about intelligent, autonomous systems that can understand, interpret, and even negotiate changes, then proactively refactor, re-plan, and re-implement solutions. It's a vision where software systems evolve organically, driven by continuous feedback and intelligent adaptation, mirroring the very environments they operate within.

Why Adaptive Agentic SDLC is the Next Big Leap

The concept of agents in software development has rapidly matured beyond simple code generation. We're now witnessing the emergence of multi-agent systems capable of orchestrating complex workflows. This evolution is particularly timely and impactful for several reasons:

- Real-World Agility: Software rarely adheres to a static blueprint. User needs change, market demands pivot, and external APIs are updated. Adaptive Agentic SDLC addresses this challenge directly: agents can re-plan and re-implement as changes arrive, reducing the friction associated with change.

- Beyond Static Generation: The true power lies in agents that don't just execute instructions but reason about them. They can identify implications of a change, propose solutions, and autonomously manage the development lifecycle from ideation to deployment, making intelligent decisions along the way.

- Multi-Agent Orchestration: Tackling dynamic requirements often necessitates a collaborative approach. Imagine a specialized "Requirement Analyst Agent" working with an "Architect Agent," a "Developer Agent," and a "Tester Agent." This multi-agent paradigm, where specialized agents communicate and coordinate, is a cornerstone of adaptive systems.

- Feedback-Driven Evolution: For agents to adapt effectively, they need continuous, real-time feedback. This involves deep integration with observability tools, user behavior analytics, and automated testing frameworks, creating a closed-loop system for continuous improvement.

- Ethical and Control Considerations: As agents gain more autonomy, the discussion around human oversight, control, and alignment with high-level objectives becomes paramount. This field is actively exploring how to build robust human-in-the-loop mechanisms and ensure responsible AI development.

Key Concepts Driving Adaptive Agentic SDLC

The foundation of adaptive agentic SDLC rests on several interconnected concepts, each powered by advancements in AI, particularly Large Language Models (LLMs) and sophisticated planning algorithms.

1. Continuous Requirement Elicitation & Refinement Agents

Concept: These agents are the eyes and ears of the development process, constantly monitoring, analyzing, and interpreting signals from the environment to understand evolving requirements. They don't just take a prompt; they actively seek out and refine needs.

Technical Details & Developments: Imagine an agent continuously scanning various data sources:

- User Feedback Platforms: Analyzing sentiment from support tickets (e.g., Zendesk, Intercom), app store reviews, and social media mentions.

- Usage Analytics: Monitoring user interaction patterns within the application (e.g., Google Analytics, Mixpanel, custom telemetry) to identify pain points, underutilized features, or new usage paradigms.

- Product Management Tools: Integrating with Jira, GitHub Issues, or Trello to track feature requests and bug reports.

- Market Intelligence: Even scanning industry news, competitor updates, and regulatory changes.

Using advanced LLMs, these agents can:

- Summarize & Categorize: Condense vast amounts of unstructured text feedback into actionable insights. For example, grouping multiple user complaints about "slow loading times" into a single performance optimization requirement.

- Identify Themes & Gaps: Pinpoint recurring themes or areas where the current system falls short of user expectations.

- Draft User Stories & Acceptance Criteria: Based on identified needs, an agent could draft preliminary user stories complete with acceptance criteria, ready for human product owner review. This might involve querying a knowledge base for existing patterns or suggesting new ones.

- Active Learning & Clarification: A more advanced agent could engage in "active learning," where it identifies ambiguities in requirements and proactively asks clarifying questions to users or product owners through a chat interface or automated survey.

Example: A "Feedback Analyst Agent" observes a surge in support tickets related to "difficulty exporting data to CSV." It analyzes the tickets, identifies common frustrations (e.g., "missing columns," "incorrect formatting"), and cross-references them with existing feature requests. It then drafts a user story: "As a data analyst, I want to export report data to CSV with customizable columns and correct data types, so I can easily integrate it with my spreadsheets." It might also suggest specific acceptance criteria like "The export should include all selected columns," "Date fields should be formatted as YYYY-MM-DD," and "Large exports should not time out." This draft is then presented to a human product owner for approval.
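To make the Feedback Analyst example a little more concrete, here is a minimal Python sketch of the grouping step. Everything in it is an assumption made for illustration: the `THEME_KEYWORDS` table, the `RequirementDraft` shape, and the `min_tickets` threshold are hypothetical, and plain keyword matching stands in for the LLM-based summarization described above. The one design point it tries to preserve is that every draft starts in a pending-review state and is never auto-approved.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical theme keywords. A real agent would more likely use an LLM or
# embedding-based clustering than a fixed keyword table.
THEME_KEYWORDS = {
    "csv_export": ("csv", "export"),
    "performance": ("slow", "timeout", "loading"),
}


@dataclass
class RequirementDraft:
    theme: str
    evidence: List[str]            # the tickets that support this draft
    status: str = "pending_review"  # a human product owner decides next


def classify(ticket: str) -> Optional[str]:
    """Return the first theme whose keywords appear in the ticket text."""
    text = ticket.lower()
    for theme, keywords in THEME_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return theme
    return None


def draft_requirements(tickets: List[str], min_tickets: int = 2) -> List[RequirementDraft]:
    """Group tickets by theme and draft one requirement per recurring theme."""
    grouped = defaultdict(list)
    for ticket in tickets:
        theme = classify(ticket)
        if theme is not None:
            grouped[theme].append(ticket)

    drafts = [
        RequirementDraft(theme=theme, evidence=evidence)
        for theme, evidence in grouped.items()
        if len(evidence) >= min_tickets  # ignore one-off complaints
    ]
    # Themes with the most supporting tickets come first.
    drafts.sort(key=lambda draft: len(draft.evidence), reverse=True)
    return drafts
```

Keeping the supporting tickets on the draft as `evidence` means the product owner can see why the agent raised the requirement, not just what it proposes.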

2. Dynamic Planning and Re-Planning Agents

Concept: Once new or changed requirements are identified, these agents take charge of adapting the project plan, architectural design, and task breakdown. They are the strategic thinkers of the adaptive SDLC.

Technical Details & Developments: This is where sophisticated LLM planning algorithms come into play:

- Tree of Thought (ToT) / Graph of Thought (GoT): Instead of a linear thought process, these agents explore multiple reasoning paths, evaluating potential solutions and their consequences. For a requirement change, they can explore different architectural modifications, refactoring strategies, or task dependencies.

- Self-Correction Loops: Agents can identify potential flaws in their own plans, simulate outcomes, and iteratively refine their approach. For instance, if a proposed change introduces a conflict with an existing module, the agent can detect this and adjust its plan.

- Impact Analysis: Leveraging knowledge graphs of the existing codebase and architecture, agents can identify all components potentially affected by a requirement change. This includes not just direct code changes but also dependencies, integrations, and potential performance implications.

- Resource Estimation & Scheduling: Based on the identified tasks and their complexity, agents can estimate effort, identify required resources (e.g., specific developer skills), and suggest revised timelines or reallocate tasks among developer agents.
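The impact analysis step in particular lends itself to a short sketch. Assuming the architecture knowledge graph can be reduced to a "depends on" mapping between components (the component names below are hypothetical), finding everything affected by a change is a breadth-first walk over the reversed edges:

```python
from collections import deque
from typing import Dict, List

# Hypothetical component graph: each key depends on the components it lists.
DEPENDS_ON: Dict[str, List[str]] = {
    "export_ui": ["export_api"],
    "export_api": ["serializer", "report_queries"],
    "scheduled_reports": ["serializer"],
    "serializer": [],
    "report_queries": ["database_schema"],
    "database_schema": [],
    "billing": ["database_schema"],
}


def impacted_by(changed: str, depends_on: Dict[str, List[str]]) -> List[str]:
    """Return every component that directly or transitively depends on `changed`."""
    # Invert the edges so we can walk from a component to its dependents.
    dependents: Dict[str, List[str]] = {name: [] for name in depends_on}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(component)

    seen = {changed}
    queue = deque([changed])
    impacted: List[str] = []
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                impacted.append(dependent)
                queue.append(dependent)
    return impacted
```

With this graph, a change to `serializer` reaches `export_api`, `scheduled_reports`, and `export_ui`, but not `billing`. A production knowledge graph would carry richer edge types (API calls, shared tables, configuration), so treat this as the simplest possible version of the idea.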

Example: Following the "customizable CSV export" requirement, a "Planning Agent" receives the approved user story. It queries the codebase's architecture graph, identifying the existing data export module, database schema, and UI components. It determines that adding customizable columns requires:

- Modifying the UI to allow column selection.

- Updating the backend API to accept column parameters.

- Adjusting the data serialization logic.

- Potentially optimizing database queries for specific column selections.

The agent then generates a detailed plan, breaking these down into sub-tasks, estimating their complexity, and identifying potential conflicts or dependencies. It might suggest refactoring the existing export service to be more modular, anticipating future export format changes. This plan, along with an updated timeline, is then presented for human review.
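One way a Planning Agent might turn the sub-tasks into an executable order is a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+). The task names and, importantly, the prerequisite relationships between them are assumptions for illustration; the example above lists the tasks but does not say how they depend on one another.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical prerequisites: each task maps to the tasks that must finish first.
EXPORT_TASKS: Dict[str, Set[str]] = {
    "adjust_serialization": set(),
    "update_api_column_params": {"adjust_serialization"},
    "optimize_queries": {"adjust_serialization"},
    "add_ui_column_picker": {"update_api_column_params"},
}


def plan_waves(prerequisites: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; tasks within a wave can run in parallel.

    Raises graphlib.CycleError if the prerequisites contain a cycle, which
    is itself a useful signal that the plan needs rework.
    """
    sorter = TopologicalSorter(prerequisites)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

Grouping into waves rather than a single flat order shows which tasks could be handed to separate developer agents at the same time. When a requirement changes mid-flight, re-planning amounts to editing the prerequisite map and calling `plan_waves` again.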

3. Self-Healing and Self-Optimizing Agents (Runtime Adaptation)

Concept: These agents extend adaptation beyond development into the operational phase, monitoring deployed applications for performance, stability, and security, and autonomously proposing or even applying fixes and optimizations.

Technical Details & Developments: This area heavily integrates with AIOps (Artificial Intelligence for IT Operations) principles:

- Continuous Monitoring: Agents connect to monitoring tools (e.g., Prometheus, Grafana, Datadog) to collect real-time metrics such as CPU usage, memory, latency, and error rates, and to pick up security alerts.

- Anomaly Detection: Using machine learning, they can detect deviations from normal behavior, indicating potential issues before they escalate.

- Root Cause Analysis: When an anomaly is detected, agents can leverage RAG (Retrieval-Augmented Generation) to access internal documentation, past incident reports, knowledge bases, and best practices to diagnose the root cause.

- Automated Remediation: For well-understood issues, agents can propose and even automatically apply fixes. This could be a code patch, a configuration change (e.g., scaling up resources, adjusting a database parameter), or restarting a service.

- Proactive Optimization: Based on usage patterns and performance metrics, agents can suggest or implement optimizations, such as caching strategies, database index improvements, or code refactoring for hot paths.
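As a rough illustration of the anomaly detection step, the sketch below flags latency samples that deviate sharply from a rolling baseline using a z-score. This is a deliberately simple statistical stand-in for the machine learning models mentioned above, and the `window`, `threshold`, and `min_sigma` values are assumptions rather than recommendations.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_anomalies(
    samples: Sequence[float],
    window: int = 5,
    threshold: float = 3.0,
    min_sigma: float = 1.0,
) -> List[int]:
    """Return indices of samples far outside the preceding window's baseline.

    A sample is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` samples.
    """
    anomalies: List[int] = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        # Floor the deviation so a perfectly flat baseline does not cause
        # a division by zero or flag trivial jitter.
        sigma = max(stdev(baseline), min_sigma)
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

For a latency series that hovers around 100 ms and then jumps to 480 ms, only the spike is flagged. Note that the spike then contaminates the next few baselines, which is one reason real systems tend to use more robust estimators or seasonal models.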

Example: A "DevOps Agent" monitors a production e-commerce application. It detects a sudden spike in latency for the product search API, coinciding with increased traffic.

- Anomaly Detection: It flags the latency increase.

- Diagnosis: It cross-references the latency with database query logs and finds a complex join query that is running slowly. It also checks recent code deployments and finds a new feature that might have introduced this query.

- RAG for Solutions: It queries its knowledge base for "slow database queries" and "indexing strategies." It identifies that a new index on the product_tags column could significantly speed up the query.

- Proposed Fix: The agent generates a SQL script to add the new index and a small code patch to ensure the application utilizes it.

- Deployment (Controlled): It proposes this change to a human operator, explaining the problem, the proposed solution, and the expected impact. In a more autonomous setup, it might deploy this to a canary environment first, monitor its effect, and then roll it out more broadly if successful.
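The controlled deployment step can be sketched as a small decision function that compares canary latency against the current baseline. The function name, the three outcomes, and the default thresholds are all hypothetical; comparing medians is just one reasonable choice among several.

```python
from statistics import median
from typing import Sequence


def canary_decision(
    baseline_ms: Sequence[float],
    canary_ms: Sequence[float],
    max_regression: float = 0.05,
    min_samples: int = 5,
) -> str:
    """Decide whether a canary should be promoted, rolled back, or held.

    Returns "hold" when there is too little data, "promote" when the canary's
    median latency is within `max_regression` of the baseline median (or
    better), and "rollback" otherwise.
    """
    if len(baseline_ms) < min_samples or len(canary_ms) < min_samples:
        return "hold"  # not enough evidence either way

    allowed = median(baseline_ms) * (1 + max_regression)
    return "promote" if median(canary_ms) <= allowed else "rollback"
```

In the search API scenario, a canary whose median latency drops from roughly 500 ms to roughly 50 ms would be promoted, while one that got noticeably slower would be rolled back. In a human-in-the-loop setup, "promote" would more likely mean "recommend promotion to the operator" than an automatic rollout.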

4. Adaptive Testing and Validation Agents

Concept: As requirements and code evolve, the testing strategy must also adapt. These agents ensure that the system remains robust and correct by dynamically generating, modifying, and executing test cases.

Technical Details & Developments:

- LLM-driven Test Case Generation: When a requirement changes or new code is introduced, agents can use LLMs to generate new unit, integration, or end-to-end test cases based on the updated specifications and code changes. They can infer edge cases, error conditions, and success paths.

- Test Suite Refinement: Agents can analyze existing test suites, identify redundant tests, or suggest modifications to existing tests to cover new functionality or changed behavior.

- Exploratory Testing: More advanced agents can simulate realistic user behavior, navigating the application, inputting data, and reporting anomalies or unexpected behavior, mimicking human exploratory testers.

- Regression Impact Analysis: When code changes, agents can identify which existing tests are most likely to be affected and prioritize their execution, ensuring that new changes don't break existing functionality.
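Regression impact analysis can be approximated with a coverage map. Assuming we already know which source files each test exercises (the test names and file paths below are hypothetical), an agent could rank tests by how many changed files they touch:

```python
from typing import Dict, List, Set


def prioritize_tests(coverage: Dict[str, Set[str]], changed: Set[str]) -> List[str]:
    """Rank tests that touch changed files, most overlap first.

    Tests with no overlap are omitted from the priority list; they would
    still run later as part of the full regression suite.
    """
    scored = [(len(files & changed), name) for name, files in coverage.items()]
    affected = [(score, name) for score, name in scored if score > 0]
    # Highest overlap first; ties are broken alphabetically for stable output.
    affected.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in affected]


# Hypothetical coverage map: test name -> files it exercises.
COVERAGE = {
    "test_export_api": {"api/export.py", "core/serializer.py"},
    "test_serializer": {"core/serializer.py"},
    "test_login": {"auth/login.py"},
    "test_export_e2e": {"ui/export.js", "api/export.py", "core/serializer.py"},
}
```

Overlap count is a crude signal. A more capable agent might also weight tests by historical failure rate or by how recently the covered code changed.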

Example: When the "customizable CSV export" feature is developed, an "Adaptive Testing Agent" comes into play:

- New Test Generation: Based on the new user story and acceptance criteria, it generates new unit tests for the updated data serialization logic, integration tests for the API endpoint, and end-to-end tests that simulate a user selecting various columns and verifying the exported CSV content.

- Existing Test Modification: It identifies existing tests related to data export and modifies them to account for the new customizable options, ensuring backward compatibility or graceful degradation.

- Regression Prioritization: It runs a full regression suite, prioritizing tests related to data integrity, performance, and security, given the sensitive nature of data export. It might even generate negative test cases, such as attempting to export with invalid column names, to ensure robust error handling.
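To give a feel for what such generated tests might look like, here is a small, self-contained sketch: a toy `export_csv` function (hypothetical, not taken from any real codebase) plus one positive and one negative test of the kind described above. The tests use plain `assert` statements so the block runs without a test framework.

```python
import csv
import io
from datetime import date
from typing import Dict, Iterable, List, Set


def export_csv(rows: Iterable[Dict], columns: List[str], allowed: Set[str]) -> str:
    """Export the selected columns as CSV text, rejecting unknown columns."""
    unknown = [name for name in columns if name not in allowed]
    if not columns or unknown:
        raise ValueError(f"invalid column selection: {unknown or 'none selected'}")

    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)
    for row in rows:
        writer.writerow([_format(row.get(name, "")) for name in columns])
    return buffer.getvalue()


def _format(value):
    """Format dates as YYYY-MM-DD; leave other values to the csv module."""
    if isinstance(value, date):
        return value.strftime("%Y-%m-%d")
    return value


ALLOWED = {"id", "name", "created"}
ROWS = [{"id": 1, "name": "Widget", "created": date(2024, 3, 5)}]


def test_export_includes_only_selected_columns():
    assert export_csv(ROWS, ["id", "created"], ALLOWED) == "id,created\n1,2024-03-05\n"


def test_export_rejects_invalid_column_names():
    try:
        export_csv(ROWS, ["id", "password"], ALLOWED)
    except ValueError:
        return
    raise AssertionError("expected ValueError for an unknown column")
```

The first test maps to the "all selected columns" and "YYYY-MM-DD" acceptance criteria from the user story; the second is the negative case for invalid column names. The "large exports should not time out" criterion would need a performance test, which is out of scope for a sketch like this.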

5. Human-in-the-Loop (HITL) and Explainability for Adaptive Agents

Concept: While autonomy is a goal, human oversight and intervention are critical, especially for complex or critical systems. This involves mechanisms for agents to explain their decisions, seek approval, and allow humans to guide or override their actions.

Technical Details & Developments:

- Explanation Engines: Agents are being developed with the ability to articulate their reasoning. For instance, an agent proposing a code change wouldn't just present the code but also explain why it made that change, what problem it's solving, and what impact it expects.

- Interactive Dashboards: User interfaces are designed to visualize agent plans, proposed code modifications (e.g., diffs with explanations), test results, and impact analyses. This allows human operators to quickly grasp the agent's intent and potential consequences.

- Approval Workflows: Critical decisions (e.g., deploying to production, making significant architectural changes) are routed through explicit human approval steps.

- Safe Exploration: Agents might first test proposed changes in isolated, sandboxed environments or use A/B testing in production with a small user segment before full rollout, providing a safety net for adaptive actions.

- Policy-Based Control: Humans can define high-level policies or constraints that agents must adhere to, such as "never deploy a change that increases latency by more than 5%" or "always seek approval for database schema modifications."
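The two example policies above translate fairly directly into a gate that every proposed change passes through before anything is applied. The sketch below is one possible shape for that gate; the `ProposedChange` fields, the three outcomes, and the extra rule that production deployments need approval (borrowed from the approval workflow bullet) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

MAX_LATENCY_INCREASE_PCT = 5.0  # from the example policy above


@dataclass(frozen=True)
class ProposedChange:
    description: str
    expected_latency_change_pct: float  # positive means slower
    touches_db_schema: bool
    target: str  # e.g. "staging" or "production"


def evaluate(change: ProposedChange) -> Tuple[str, List[str]]:
    """Return (decision, reasons) for a proposed change.

    Decisions: "reject" for hard policy violations, "needs_approval" when a
    human must sign off, and "auto_apply" when no policy is triggered.
    """
    if change.expected_latency_change_pct > MAX_LATENCY_INCREASE_PCT:
        return "reject", ["expected latency increase exceeds the 5% limit"]

    reasons: List[str] = []
    if change.touches_db_schema:
        reasons.append("database schema modification")
    if change.target == "production":
        reasons.append("production deployment")

    if reasons:
        return "needs_approval", reasons
    return "auto_apply", []
```

Returning the reasons alongside the decision keeps the gate explainable: a reviewer sees which policy routed the change to them. Treating an index addition as a schema modification is a judgment call that a real policy would need to spell out.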

Example: When the "DevOps Agent" proposes the database index and code patch, it doesn't just apply it. It generates a detailed report:

- Problem: "High latency on product search API due to inefficient query on product_tags."

- Proposed Solution: "Add B-tree index on product_tags column and update query to utilize it."

- Reasoning: "Analysis of query plans shows current full table scan. Index will reduce query time from 500ms to ~50ms based on test environment simulations."

- Impact: "Expected 90% reduction in search API latency. Minimal downtime for index creation (non-blocking). Code change is localized to search service."

- Code Diff: Presents the SQL and code changes with inline comments explaining each modification.

This report is sent to a human SRE or developer for review and approval before any changes are applied to production.

Practical Applications and Value for AI Practitioners

The promise of Adaptive Agentic SDLC is transformative, offering tangible benefits across the software development lifecycle:

- Accelerated Feature Development: Imagine incorporating user feedback and rolling out new features or modifications in days, not weeks or months. Agents can drastically reduce the time-to-market for innovations.

- Reduced Technical Debt: By continuously adapting, refactoring, and optimizing, agents can proactively prevent the accumulation of technical debt, which often arises from rushed changes or evolving requirements in traditional SDLCs.

- Enhanced System Resilience: Self-healing and self-optimizing agents lead to more reliable and performant deployed systems, often requiring minimal human intervention for routine issues. This means fewer outages and happier users.

- Improved Developer Experience: Developers can offload repetitive, mundane, or adaptive coding tasks to agents, freeing them to focus on higher-level problem-solving, innovation, and complex architectural challenges.

- Proactive Problem Solving: Agents can identify potential issues, bottlenecks, or security vulnerabilities before they impact users or become critical, leading to more stable and robust applications.

- Personalized Software: The ultimate vision includes software that adapts its features, behavior, and even UI based on individual user feedback and usage patterns, creating truly personalized experiences.

Challenges and Future Directions

While the potential is immense, several significant challenges must be addressed before Adaptive Agentic SDLC can deliver on it:

- Trust and Control: How much autonomy can we safely grant agents, especially for critical systems like financial applications or medical devices? Establishing clear boundaries, robust human-in-the-loop mechanisms, and effective override capabilities is paramount.

- Cost of Adaptation: The computational resources required for continuous re-planning, re-generating code, running extensive test suites, and monitoring systems can be substantial. Optimizing these processes for efficiency and cost-effectiveness is a key research area.

- Emergent Behavior: As multi-agent systems become more complex and interact in nuanced ways, predicting and controlling their emergent behaviors becomes increasingly challenging. Ensuring alignment with human intent and preventing unintended consequences is vital.

- Data Quality for Adaptation: Agents are only as good as the data they consume. High-quality, real-time data from user feedback, system metrics, code changes, and architectural knowledge bases is crucial for informed adaptive decisions.

- Formal Verification for Adaptive Systems: How can we formally verify the correctness, safety, and security of systems that are constantly changing and adapting? Traditional verification methods struggle with dynamic systems, necessitating new approaches.

- Standardization and Interoperability: As various agentic frameworks and tools emerge, there will be a need for standardization and interoperability protocols to enable seamless collaboration between different types of agents and systems.

Conclusion

The shift from static code generation to Adaptive Agentic SDLC for Dynamic Requirements and Evolving Environments represents a profound leap forward in software engineering. It promises to unlock truly agile and resilient software development, where systems can autonomously evolve alongside user needs and environmental changes. For AI practitioners, this area offers rich research opportunities in multi-agent orchestration, advanced planning and reasoning, human-AI collaboration, and robust, explainable AI systems. It's where the rubber meets the road for AI in real-world software engineering, moving us closer to a future where software isn't just built, but grows. The journey is complex, but the destination (software that learns, adapts, and thrives) is undeniably worth pursuing.