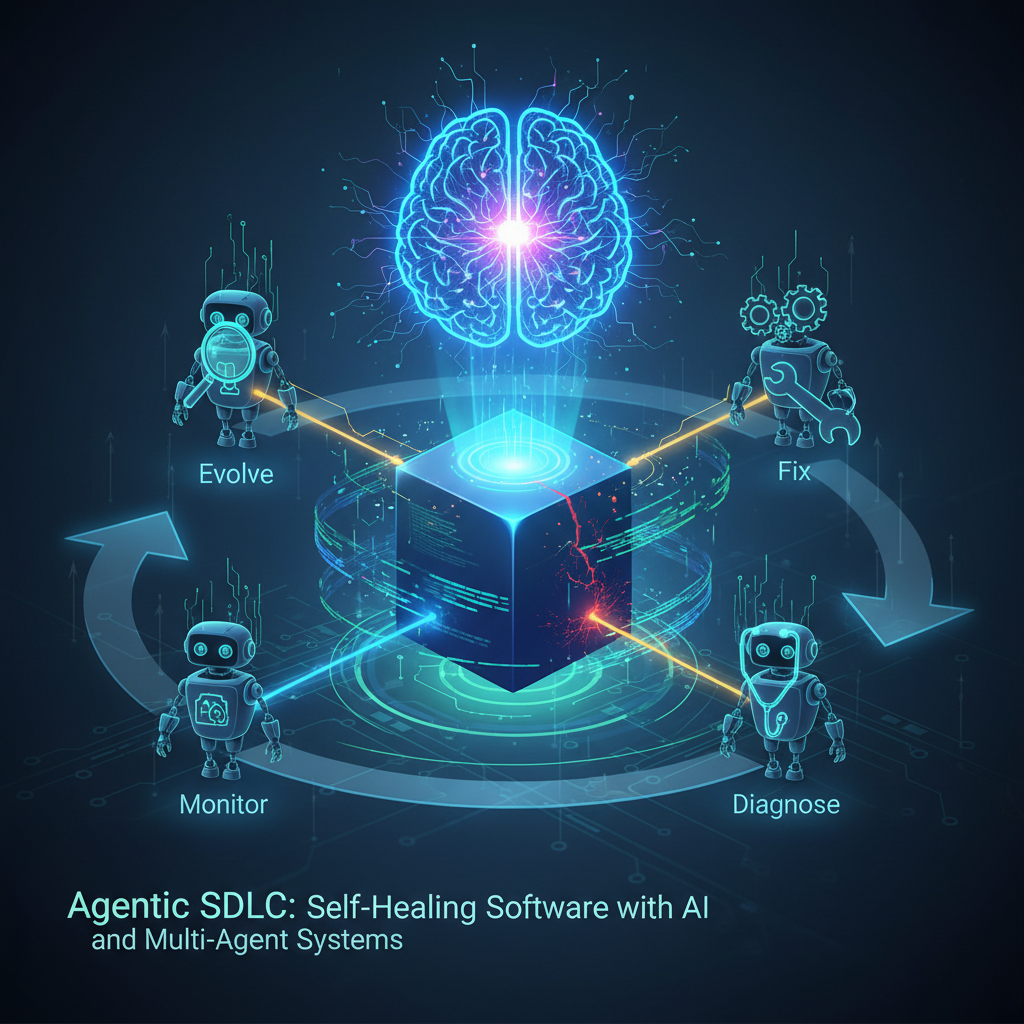

Agentic SDLC: Self-Healing Software with AI and Multi-Agent Systems

Explore the revolutionary shift towards an Agentic SDLC, where AI and multi-agent systems enable software to intelligently monitor, diagnose, and fix itself. Discover how this paradigm moves beyond mere code generation to fundamentally alter software reliability and evolution.

The software development lifecycle (SDLC) has long been a human-centric endeavor, characterized by iterative cycles of planning, coding, testing, deployment, and maintenance. While automation has steadily permeated various stages, the core intellectual tasks of problem-solving, debugging, and adaptation have largely remained the domain of human engineers. However, with the rapid advancements in artificial intelligence, particularly large language models (LLMs) and multi-agent systems, we are on the cusp of a revolutionary shift: the emergence of a self-healing and adaptive Agentic SDLC. This paradigm envisions software systems that don't just get built by AI, but also intelligently monitor, diagnose, and even fix themselves, fundamentally altering how we approach software reliability and evolution.

The Evolution Beyond Code Generation

The initial wave of AI in software development focused heavily on code generation. Tools leveraging LLMs demonstrated impressive capabilities in writing functions, generating boilerplate, and even translating between programming languages. While undeniably valuable, this only addressed a fraction of the SDLC. The real challenge, and often the most resource-intensive part, lies in ensuring that generated code is robust, maintainable, and resilient in dynamic production environments. This is where the concept of "self-healing" comes into play, pushing the boundaries beyond mere creation to encompass operational integrity and continuous adaptation.

Imagine an SDLC where the system itself can detect a performance degradation, pinpoint the root cause, propose a code fix or configuration change, validate it through automated testing, and even deploy it, all with minimal human intervention. This isn't science fiction; it's the trajectory of the Agentic SDLC, driven by the collaborative power of specialized AI agents.

The Multi-Agent Architecture for Autonomous SDLC

The cornerstone of a self-healing and adaptive SDLC is a sophisticated multi-agent system. Instead of a single monolithic AI attempting to solve all problems, this approach leverages a collective of specialized agents, each designed with specific expertise and responsibilities, mirroring a human development team. These agents communicate, collaborate, and orchestrate their actions to achieve complex goals, such as detecting an anomaly, diagnosing its cause, and implementing a remediation.

Let's break down the typical roles and interactions within such a system:

1. Advanced Observability & Monitoring Integration

At the foundation of any self-healing system is the ability to perceive its environment. This is handled by Observability Agents.

- Role: Continuously monitor the health and performance of software systems in real time. They are the "eyes and ears" of the autonomous SDLC.

- Technical Details: These agents integrate deeply with existing observability tooling such as Prometheus, Grafana, Datadog, Splunk, or OpenTelemetry. They ingest vast streams of data: logs, metrics (CPU usage, memory, latency, error rates), traces (request paths across services), and events.

- Functionality: Beyond simple data ingestion, Observability Agents are trained to interpret this data. They use advanced pattern recognition, anomaly detection algorithms (e.g., statistical process control, machine learning models like Isolation Forests or autoencoders), and causal inference techniques to identify deviations from normal behavior. They can correlate events across different microservices or infrastructure components to identify potential issues that might otherwise be missed.

- Example: An Observability Agent detects a sudden spike in 5xx errors from a specific API endpoint, coupled with an increase in database connection timeouts. It flags this as a critical incident and routes it to the next stage.
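
The detection step in the example above can be sketched with a rolling z-score, a simple form of statistical process control. This is a minimal illustration, not a production detector: the class name, window size, threshold, and sample values are all assumptions made for the example, and a real agent would read its metrics from an observability backend rather than from in-memory lists.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flags a sample that sits far outside the recent window (hypothetical helper)."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 5):
        self.samples = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the samples kept so far."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma == 0:
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > self.threshold
        # Only learn from normal samples, so a sustained incident
        # does not quietly become the new baseline.
        if not is_anomaly:
            self.samples.append(value)
        return is_anomaly


def correlated_incidents(error_rates, db_timeouts):
    """Return the indices where both signals are anomalous in the same interval."""
    errors = RollingZScoreDetector()
    timeouts = RollingZScoreDetector()
    hits = []
    for i, (error_rate, timeout_count) in enumerate(zip(error_rates, db_timeouts)):
        error_spike = errors.observe(error_rate)
        timeout_spike = timeouts.observe(timeout_count)
        if error_spike and timeout_spike:
            hits.append(i)
    return hits


# Illustrative data: a 5xx spike that coincides with a jump in database timeouts.
error_rates = [0.010, 0.012, 0.009, 0.011, 0.010, 0.011, 0.350]
db_timeouts = [1, 2, 1, 2, 1, 2, 40]
incidents = correlated_incidents(error_rates, db_timeouts)
```

Requiring both signals to deviate in the same interval is one cheap way to approximate the cross-signal correlation described above; it trades recall for fewer false alarms.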

2. Specialized Remediation Agents: The Autonomous Debugging Team

Once an issue is detected, a specialized team of agents swings into action, mimicking the process a human developer would follow to debug and fix a problem.

Diagnosis Agent:

- Role: Receives anomaly alerts from Observability Agents and is responsible for pinpointing the root cause.

- Technical Details: This agent queries various knowledge bases, including the project's codebase, documentation, commit history, past incident reports, and even external forums like Stack Overflow. It uses LLMs to analyze logs and error messages, generate hypotheses about potential causes (e.g., "database connection pool exhaustion," "malformed API request," "logic error in `UserService.java`"), and prioritize them based on probability and impact. It might interact with the Observability Agent to request more specific metrics or logs.

- Example: Given the 5xx error and database timeouts, the Diagnosis Agent analyzes recent code changes, checks database connection pool metrics, and identifies a recent deployment that introduced a new, inefficient database query.
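
Prioritizing hypotheses "based on probability and impact" can be as simple as sorting by their product. The sketch below assumes the scores already exist (for instance, estimated by an LLM or derived from past incidents); the `Hypothesis` type and the numbers are illustrative placeholders, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    cause: str
    probability: float  # assumed likelihood in [0, 1]
    impact: float       # assumed severity in [0, 1]

    @property
    def priority(self) -> float:
        # Expected-severity score: likely and damaging causes rank first.
        return self.probability * self.impact


def rank_hypotheses(hypotheses):
    """Order hypotheses from most to least worth investigating."""
    return sorted(hypotheses, key=lambda h: h.priority, reverse=True)


# Illustrative scores only.
ranked = rank_hypotheses([
    Hypothesis("malformed API request", probability=0.2, impact=0.5),
    Hypothesis("database connection pool exhaustion", probability=0.6, impact=0.9),
    Hypothesis("logic error in UserService.java", probability=0.3, impact=0.7),
])
```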

Planning Agent:

- Role: Based on the diagnosis, this agent formulates a remediation strategy.

- Technical Details: It considers various options: a code fix, a configuration change (e.g., increasing connection pool size), a rollback to a previous version, or even scaling up resources. It assesses the potential impact and risks of each strategy, potentially consulting with the Dependency Agent (described below) to understand downstream effects. For critical issues, it might prioritize immediate mitigation (e.g., rollback) over a full fix.

- Example: The Planning Agent proposes two options: 1) Rollback the recent deployment, or 2) Generate a code fix for the inefficient query and increase the database connection pool size temporarily.
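
One way to encode "mitigate first for critical issues" is a small ordering rule over the candidate options. The following is a sketch under assumed inputs: the `Option` fields, the risk scores, and the time estimates are hypothetical and would in practice come from the diagnosis and from historical data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Option:
    name: str
    risk: float              # assumed score: 0 = safe, 1 = very risky
    minutes_to_apply: int    # assumed time until the option takes effect
    fixes_root_cause: bool


def choose_plan(options: List[Option], critical: bool) -> List[Option]:
    """Return the options in the order they should be attempted."""
    if critical:
        # Mitigate first: the fastest option wins, ties broken by lower risk.
        mitigation = min(options, key=lambda o: (o.minutes_to_apply, o.risk))
        follow_ups = [o for o in options if o is not mitigation and o.fixes_root_cause]
        return [mitigation] + sorted(follow_ups, key=lambda o: o.risk)
    # Non-critical: prefer real fixes over workarounds, lowest risk first.
    return sorted(options, key=lambda o: (not o.fixes_root_cause, o.risk))


rollback = Option("rollback recent deployment", 0.1, 5, False)
code_fix = Option("fix query and raise pool size", 0.4, 45, True)

critical_plan = choose_plan([rollback, code_fix], critical=True)
routine_plan = choose_plan([rollback, code_fix], critical=False)
```

For a critical incident the rollback is applied first and the code fix follows; for a routine one the code fix is preferred outright.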

Code Generation/Modification Agent:

- Role: If a code fix is required, this agent generates or modifies the necessary code.

- Technical Details: Leveraging advanced LLMs, this agent takes the diagnosis and planning instructions to generate precise code snippets. It can fix bugs, refactor inefficient code, or even add new error handling logic. It operates within the context of the existing codebase, understanding its structure, coding conventions, and architectural patterns.

- Example: The Code Generation Agent, guided by the Planning Agent, generates a more optimized SQL query and updates the relevant repository method in `UserService.java`.

Testing Agent:

- Role: Crucially, this agent ensures the proposed fix doesn't introduce new bugs and actually resolves the original issue.

- Technical Details: It automatically generates and executes a comprehensive suite of tests: unit tests for the modified code, integration tests to ensure inter-service compatibility, and even end-to-end tests to validate the user flow. It might also replay production traffic or synthetic load tests to verify performance improvements. The Testing Agent provides feedback to the Code Generation Agent if tests fail, initiating an iterative refinement loop.

- Example: The Testing Agent generates new unit tests for the optimized query, runs existing integration tests, and performs a load test to confirm the database connection issue is resolved and performance is restored.
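
The iterative refinement loop between the Testing Agent and the Code Generation Agent can be sketched as a bounded retry in which each failure report becomes the input to the next attempt. The two callables are stand-ins for the agents (an assumption of this example), and the attempt budget is arbitrary.

```python
from typing import Callable, Optional, Tuple


def refine_until_green(
    generate_fix: Callable[[str], str],
    run_tests: Callable[[str], Tuple[bool, str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Request fixes until one passes the tests or the attempt budget runs out."""
    feedback = ""
    for _ in range(max_attempts):
        candidate = generate_fix(feedback)
        passed, report = run_tests(candidate)
        if passed:
            return candidate
        feedback = report  # the failure report drives the next attempt
    return None  # budget exhausted: escalate to a human instead of looping forever


# Stub agents for illustration: the first attempt fails, the second passes.
def fake_generate_fix(feedback: str) -> str:
    return "patch-v2" if feedback else "patch-v1"


def fake_run_tests(candidate: str) -> Tuple[bool, str]:
    if candidate == "patch-v2":
        return True, "all tests passed"
    return False, "integration test failed: query still times out"


accepted = refine_until_green(fake_generate_fix, fake_run_tests)
```

Capping the number of attempts matters: without it, a fix that can never pass would keep the agents cycling indefinitely.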

Deployment Agent:

- Role: Manages the safe and controlled rollout of the validated fix.

- Technical Details: This agent integrates with CI/CD pipelines and infrastructure-as-code (IaC) tools. It can orchestrate canary deployments, A/B testing, or phased rollouts to minimize risk. It monitors the system post-deployment, collaborating with the Observability Agent to ensure the fix is effective and no new issues arise.

- Example: The Deployment Agent initiates a canary deployment of the patched `UserService` to 10% of production traffic, closely monitoring metrics for any regressions before a full rollout.
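
A phased rollout with a health gate at each step might look like the sketch below. The traffic percentages, the error-rate tolerance, and the `is_healthy` callback are assumptions for illustration; a real Deployment Agent would drive a CI/CD system and query live metrics instead.

```python
from typing import Callable, Sequence


def within_tolerance(baseline: float, canary: float, max_increase: float = 0.005) -> bool:
    """True if the canary's error rate is not meaningfully worse than the baseline's."""
    return canary - baseline <= max_increase


def staged_rollout(steps: Sequence[int], is_healthy: Callable[[int], bool]) -> int:
    """Walk through the traffic steps; return the final percentage served.

    Returns 0 if any step looks unhealthy, meaning the change was rolled back.
    """
    served = 0
    for percent in steps:
        if not is_healthy(percent):
            return 0  # roll back on the first regression
        served = percent
    return served


# Illustrative runs: one clean rollout, one that regresses at 50% traffic.
clean = staged_rollout([10, 50, 100], lambda percent: True)
regressed = staged_rollout([10, 50, 100], lambda percent: percent < 50)
```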

3. Reinforcement Learning for Adaptation

Beyond simply fixing known issues, an adaptive SDLC learns and improves over time. This is where Strategy Agents powered by Reinforcement Learning (RL) shine.

- Role: These agents learn which remediation strategies are most effective for specific types of errors or system states.

- Technical Details: RL agents operate in an environment where their "actions" are remediation strategies (e.g., "rollback," "apply code fix," "scale up resources"), and their "rewards" are positive outcomes (e.g., "incident resolved," "MTTR reduced," "performance improved") or negative outcomes (e.g., "new bug introduced," "system crash"). Over time, they learn optimal policies for different scenarios, adapting to evolving system architectures or novel failure modes.

- Example: An RL-powered Strategy Agent observes that for transient network errors, a simple retry mechanism is often more effective and less disruptive than a full service restart. It learns to prioritize this action for similar future incidents, improving the system's responsiveness and stability.
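
The learning behavior in this example can be illustrated with an epsilon-greedy bandit keyed by incident type. This is deliberately the simplest relative of reinforcement learning, not a full RL setup with states and long-term returns; the action names, reward values, and class name are assumptions made for the sketch.

```python
import random
from collections import defaultdict


class StrategyBandit:
    """Epsilon-greedy choice of a remediation action per incident type (hypothetical)."""

    def __init__(self, actions, epsilon: float = 0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (incident_type, action) -> times tried
        self.values = defaultdict(float)  # (incident_type, action) -> mean reward

    def choose(self, incident_type: str) -> str:
        # Explore occasionally; otherwise exploit the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[(incident_type, a)])

    def update(self, incident_type: str, action: str, reward: float) -> None:
        key = (incident_type, action)
        self.counts[key] += 1
        # Incremental mean: new = old + (reward - old) / n
        self.values[key] += (reward - self.values[key]) / self.counts[key]


# epsilon=0.0 disables exploration so the illustration is deterministic.
bandit = StrategyBandit(["retry", "restart_service", "rollback"], epsilon=0.0)
bandit.update("transient_network_error", "retry", reward=1.0)             # incident resolved
bandit.update("transient_network_error", "restart_service", reward=-0.5)  # disruptive
preferred = bandit.choose("transient_network_error")
```

In practice a small nonzero `epsilon` keeps the agent trying alternatives, which is what lets it adapt when the system's failure modes change.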

4. Semantic Code Understanding & Graph Databases

For agents to make intelligent decisions, they need a deep understanding of the software itself, not just its runtime behavior.

- Role: Provide agents with a semantic understanding of the codebase and its architecture.

- Technical Details: This involves integrating with knowledge graphs or code-aware databases. These systems map out functions, classes, modules, their interdependencies, data flows, and architectural patterns. This allows a Dependency Agent to understand the impact of a proposed change before it's made. For instance, modifying a shared utility function could have ripple effects across numerous services.

- Example: When the Code Generation Agent proposes a fix, the Dependency Agent consults the code graph to identify all downstream services or modules that might be affected. It then instructs the Testing Agent to create additional integration tests specifically for these affected areas, ensuring comprehensive validation.
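
The impact analysis a Dependency Agent performs is, at its core, a graph traversal. The sketch below uses a plain dictionary in place of a graph database, and the module names are invented for illustration; it shows a breadth-first search over "is depended on by" edges to collect everything a change could affect.

```python
from collections import deque


def impacted_by(changed: str, dependents: dict) -> set:
    """Return every module that directly or transitively depends on `changed`."""
    seen = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in dependents.get(node, ()):
            # The `seen` check also protects against dependency cycles.
            if dependent not in seen and dependent != changed:
                seen.add(dependent)
                queue.append(dependent)
    return seen


# Hypothetical code graph: key = module, value = modules that depend on it.
dependents = {
    "string_utils": ["user_service", "order_service"],
    "user_service": ["api_gateway"],
    "order_service": ["api_gateway", "billing"],
}

affected = impacted_by("string_utils", dependents)
```

The resulting set is what the Testing Agent would receive as the list of areas needing extra integration tests.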

5. Human-in-the-Loop & Explainable AI (XAI) for Trust

Full autonomy, especially in critical systems, can be risky. Trust and transparency are paramount.

- Role: Ensure human oversight, provide clear explanations, and allow for intervention.

- Technical Details: Review Agents are designed to provide clear, concise, and explainable rationales for their diagnoses and proposed remediations. They can generate summaries of the problem, the proposed solution, the expected impact, and the results of automated tests. For high-risk changes or critical systems, agents can operate in "advisory mode," presenting their findings and recommendations to a human developer for final review and approval before taking autonomous action. XAI techniques are employed to make the agents' decision-making process transparent.

- Example: Before deploying a critical patch, a Review Agent presents a markdown summary to the on-call engineer, detailing: "Problem: High latency on `OrderService` due to inefficient database query in `OrderRepository.java`. Diagnosis: Recent commit `XYZ` introduced N+1 query. Proposed Fix: Optimized query generated by Code Agent. Test Results: All unit and integration tests passed; latency reduced by 70% in staging. Human approval required for production deployment."
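
The advisory-mode gate and the summary from the example can be sketched as two small functions. The `Proposal` fields, the risk score, and the 0.3 threshold are assumptions chosen for illustration; the summary text reuses the wording of the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    problem: str
    diagnosis: str
    proposed_fix: str
    test_results: str
    risk: float            # assumed score in [0, 1]
    critical_system: bool


def requires_approval(p: Proposal, risk_threshold: float = 0.3) -> bool:
    """Advisory mode applies to critical systems and to risky changes."""
    return p.critical_system or p.risk >= risk_threshold


def render_summary(p: Proposal) -> str:
    """Build the markdown summary shown to the on-call engineer."""
    lines = [
        f"**Problem:** {p.problem}",
        f"**Diagnosis:** {p.diagnosis}",
        f"**Proposed fix:** {p.proposed_fix}",
        f"**Test results:** {p.test_results}",
    ]
    if requires_approval(p):
        lines.append("**Action:** Human approval required for production deployment.")
    else:
        lines.append("**Action:** Eligible for autonomous deployment.")
    return "\n".join(lines)


proposal = Proposal(
    problem="High latency on OrderService",
    diagnosis="Recent commit XYZ introduced N+1 query",
    proposed_fix="Optimized query generated by Code Agent",
    test_results="All unit and integration tests passed",
    risk=0.2,
    critical_system=True,
)
summary = render_summary(proposal)
```

Keeping the approval rule in one small function makes the autonomy policy easy to audit and to tighten or relax per system.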

Practical Applications and Value for AI Practitioners

The self-healing and adaptive Agentic SDLC offers transformative value across the software development and operations landscape:

Automated Bug Fixing in Production (Reduced MTTR):

- Value: Dramatically reduces the Mean Time To Recovery (MTTR) for critical production issues. This translates to higher system availability, improved customer satisfaction, and reduced financial impact of outages.

- Practitioner Insight: Focus on building robust feedback loops for agent learning. Establish clear thresholds and policies for autonomous action versus human intervention. Start with low-risk, well-understood issues before granting full autonomy for critical systems.

Proactive Technical Debt Management:

- Value: Agents can continuously scan codebases for "code smells," potential vulnerabilities, or architectural inconsistencies before they become critical issues. They can then propose and even implement refactorings or improvements, preventing technical debt from accumulating.

- Practitioner Insight: Develop agents that can analyze code quality metrics, architectural patterns, and security best practices. Integrate them with static analysis tools and vulnerability scanners.

Adaptive System Configuration & Optimization:

- Value: Beyond code, agents can autonomously adjust infrastructure configurations, such as scaling cloud resources (VMs, containers), optimizing database parameters, or dynamically adjusting API rate limits in response to changing load, performance metrics, or cost considerations.

- Practitioner Insight: Explore integrating agents with Infrastructure as Code (IaC) tools (Terraform, Ansible), Kubernetes APIs, and cloud provider SDKs for dynamic infrastructure management.

Accelerated Feature Development & Iteration:

- Value: By offloading repetitive and time-consuming tasks like debugging, maintenance, and test case generation, human developers can dedicate more time to innovation, designing complex features, and solving higher-level architectural challenges.

- Practitioner Insight: Design agents to handle mundane coding tasks, generate comprehensive test suites for new features, and even update documentation automatically based on code changes, streamlining the entire development process.

Enhanced Security Posture:

- Value: Security agents can continuously scan for vulnerabilities, detect anomalous behavior indicative of attacks, and even autonomously deploy patches or mitigation strategies (e.g., firewall rule changes, isolating compromised services).

- Practitioner Insight: Integrate security agents with threat intelligence feeds, vulnerability databases (CVEs), and security information and event management (SIEM) systems to enable proactive defense and rapid response.

Challenges and Future Directions

While the promise of a self-healing and adaptive Agentic SDLC is immense, several significant challenges must be addressed:

- Trust and Explainability: Building systems that developers implicitly trust to make critical, autonomous changes requires robust XAI capabilities and clear human-in-the-loop mechanisms.

- Complexity Management: Orchestrating numerous specialized agents, managing their interactions, and ensuring their collective behavior is coherent and predictable can quickly become a complex engineering problem.

- Hallucinations & Incorrect Fixes: LLM-powered agents can "hallucinate" or generate incorrect solutions. Mitigating the risk of agents deploying harmful fixes requires rigorous testing, validation, and fallback mechanisms.

- Ethical Implications: Defining the boundaries for autonomous action, especially in sensitive domains (e.g., financial systems, healthcare), and addressing job displacement concerns are crucial ethical considerations.

- Generalization: Ensuring agents can adapt to novel problems, diverse codebases, and evolving technologies beyond their initial training data remains a significant hurdle.

- Data Scarcity: Training robust agents often requires vast amounts of high-quality data on bugs, fixes, system behavior, and human debugging processes, which can be difficult to acquire.

- Security of the Agents Themselves: The agents become critical infrastructure. Securing them from adversarial attacks or unintended malicious behavior is paramount.

Conclusion

The self-healing and adaptive Agentic SDLC, powered by multi-agent systems, represents a profound paradigm shift in how software is developed, maintained, and operated. It moves us beyond simple automation to genuine autonomy, promising unprecedented levels of reliability, efficiency, and continuous adaptation. For AI practitioners, this field offers fertile ground for innovation, demanding expertise in multi-agent orchestration, reinforcement learning, semantic code analysis, and explainable AI. As we navigate the complexities and address the challenges, the vision of software systems that intelligently monitor, diagnose, and repair themselves is not just a futuristic aspiration, but a rapidly approaching reality that will redefine the future of software engineering.