Democratizing AI: The Race for Efficient and Accessible Large Language Models

Large Language Models (LLMs) offer incredible power but demand vast computational resources. This post explores cutting-edge techniques making LLMs more efficient, accessible, and sustainable, moving beyond well-funded labs to a broader community.

The era of Large Language Models (LLMs) has ushered in unprecedented capabilities in natural language understanding and generation. From writing code to composing poetry, LLMs like GPT-4, Claude, and Llama have demonstrated a remarkable ability to tackle complex tasks. However, this power comes at a significant cost: immense computational resources. Training and deploying these colossal models demand vast amounts of memory, processing power, and energy, creating a formidable barrier to entry for many researchers, developers, and organizations.

This challenge has ignited a fervent race to make LLMs more efficient, accessible, and sustainable. The goal is clear: democratize access to this transformative technology, allowing it to move beyond the confines of well-funded research labs and into the hands of a broader community. This blog post will dive deep into the cutting-edge techniques that are making efficient LLMs a reality, exploring how optimization, clever deployment strategies, and new architectural paradigms are reshaping the landscape of AI.

The Imperative for Efficiency: Why It Matters

Before we explore the "how," let's understand the "why." The drive for LLM efficiency is multi-faceted and critical for several reasons:

- Cost Reduction: Running a massive LLM can be prohibitively expensive, especially for inference at scale. Reducing resource requirements directly translates to lower operational costs, making LLMs viable for more businesses and applications.

- Democratization: High resource demands limit who can build, fine-tune, and deploy LLMs. Efficiency techniques empower smaller teams, startups, and individual developers to leverage these powerful models without needing supercomputing infrastructure.

- Faster Inference & Real-time Applications: Latency is a critical factor for interactive applications like chatbots, virtual assistants, and real-time content generation. Optimized models can respond faster, enhancing user experience.

- Edge AI & Mobile Deployment: Bringing LLM capabilities to devices with limited resources (smartphones, IoT devices, embedded systems) requires highly efficient models that can run locally.

- Environmental Sustainability: The energy consumption associated with training and running large models contributes to a significant carbon footprint. Efficiency improvements are crucial for more environmentally responsible AI development.

- Innovation & Customization: Cheaper and faster fine-tuning allows for more experimentation and the creation of highly specialized LLMs tailored to specific domains or tasks, unlocking new applications.

The pursuit of efficiency isn't just about saving money; it's about expanding the reach and impact of LLMs, transforming them from niche tools into ubiquitous, everyday technologies.

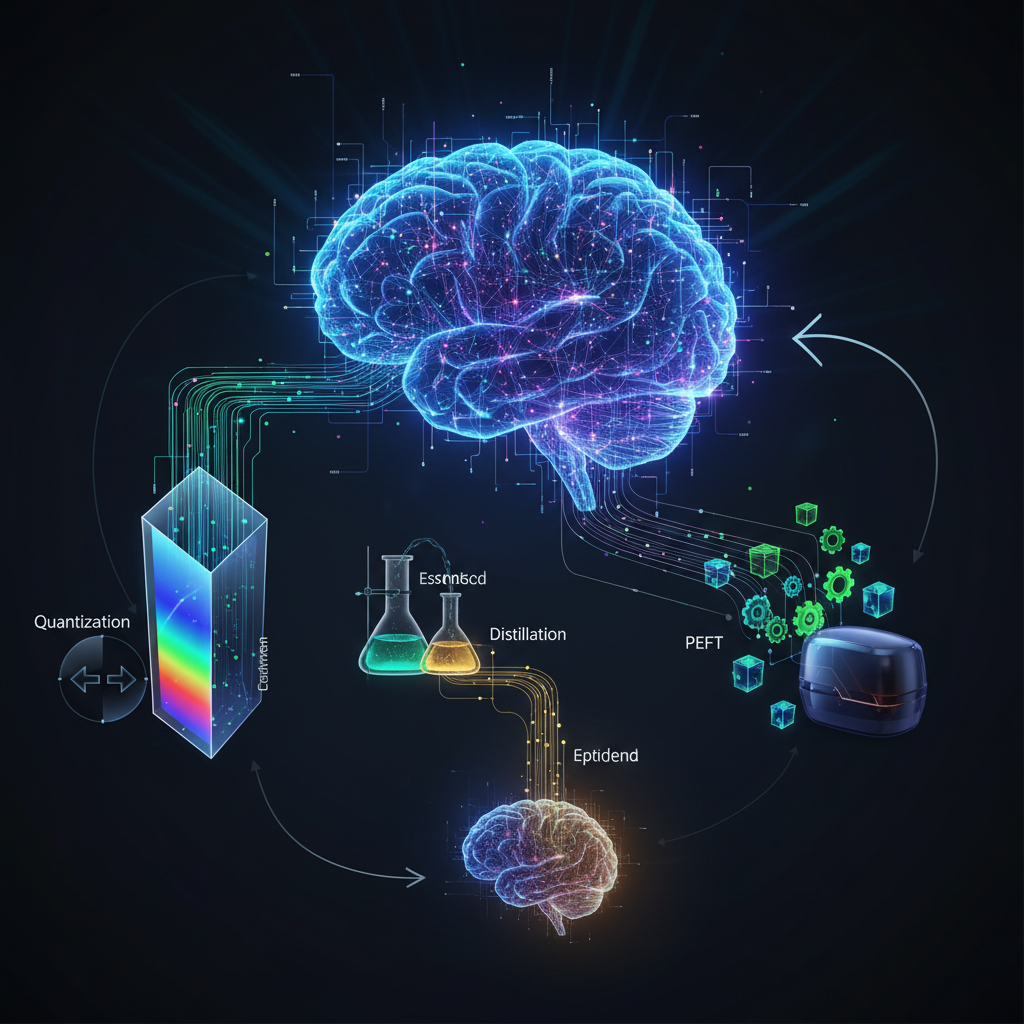

Core Techniques for Efficient LLMs

The field of LLM efficiency is a vibrant ecosystem of interconnected techniques. Let's break down the most impactful ones.

1. Quantization: Shrinking Models Without Losing Their Minds

Concept: Quantization is the process of reducing the numerical precision of a model's weights and activations. LLM weights are typically trained and stored as 32-bit or 16-bit floating-point numbers (FP32, FP16, or BF16). Quantization converts them to lower-precision formats, such as 8-bit integers (INT8), 4-bit integers (INT4), or, more experimentally, 2-bit integers (INT2).
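To make the idea concrete, here is a minimal sketch of symmetric "absmax" quantization in plain Python. It is an illustration only, not the scheme used by any particular library; the function names and the sample weights are made up for this example.

```python
def quantize_absmax(weights, bits=8):
    """Symmetric (absmax) quantization of a list of floats to signed integers."""
    qmax = 2 ** (bits - 1) - 1               # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Divide by the scale, round to the nearest integer, and clamp to the valid range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.12, -0.53, 0.98, -1.27, 0.004]   # hypothetical weights

q8, s8 = quantize_absmax(weights, bits=8)
q4, s4 = quantize_absmax(weights, bits=4)

# Worst-case reconstruction error for each bit width.
err8 = max(abs(w - r) for w, r in zip(weights, dequantize(q8, s8)))
err4 = max(abs(w - r) for w, r in zip(weights, dequantize(q4, s4)))

print("INT8:", q8, "max error:", err8)
print("INT4:", q4, "max error:", err4)
```

Fewer bits mean a coarser grid and a larger rounding error, which is the trade-off the more sophisticated methods discussed in this section try to manage.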

Why it works:

- Reduced Memory Footprint: Lower-precision numbers require less memory to store. An INT4 model is roughly one-eighth the size of an FP32 model (slightly more in practice, since quantization scales must be stored too).

- Faster Computation: Processors can often perform operations on lower-precision integers much faster than on floating-point numbers, especially on specialized hardware.

- Lower Bandwidth: Less data needs to be moved between memory and processing units, which is a major bottleneck in LLM inference.
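The memory savings are simple arithmetic. The sketch below estimates weight storage only, assuming a model with exactly 7 billion parameters and ignoring quantization scales, activations, and the KV cache, so real numbers will be somewhat higher.

```python
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


num_params = 7_000_000_000   # a "7B" model, rounded for illustration

for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: {weight_memory_gb(num_params, bits):.1f} GB")
```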

Recent Developments & Examples:

- Post-Training Quantization (PTQ): This is the most common approach, applied after a model has been fully trained.

  - GPTQ (Generative Pre-trained Transformer Quantization): A highly effective PTQ method that quantizes weights to INT4 or INT8 with minimal accuracy loss. It works by quantizing weights layer by layer, minimizing the impact on the model's output. GPTQ is particularly popular for making large models like Llama-2 runnable on consumer GPUs.

  - AWQ (Activation-aware Weight Quantization): Focuses on quantizing weights based on the distribution of activations, identifying "salient" weights that are more critical to performance and treating them differently during quantization. This helps preserve accuracy.

- Quantization-Aware Training (QAT): Involves simulating the effects of quantization during the training process itself. This often leads to higher accuracy than PTQ but requires re-training.

- GGML/GGUF: Not strictly a quantization method, but a file format and library (developed by the creator of llama.cpp) that has revolutionized local LLM deployment. GGML (now superseded by GGUF) enables efficient quantization (e.g., to Q4_0, Q5_K, Q8_0) and CPU inference for LLMs. This is how you can run powerful Llama-2 models on your laptop without a dedicated GPU.
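Many practical 4-bit schemes store one scale per small block of weights rather than one per tensor, so that a single outlier does not wipe out the precision of everything else. The sketch below illustrates that idea only; the block size, helper names, and sample weights are assumptions for the example, and real formats such as the GGUF quantization types differ in their details.

```python
def quantize_blockwise(weights, block_size=4, bits=4):
    """Quantize each block of weights with its own absmax scale."""
    qmax = 2 ** (bits - 1) - 1
    blocks = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        max_abs = max(abs(w) for w in block)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        q = [max(-qmax, min(qmax, round(w / scale))) for w in block]
        blocks.append((scale, q))
    return blocks


def dequantize_blockwise(blocks):
    """Rebuild approximate floats from (scale, integers) blocks."""
    return [v * scale for scale, q in blocks for v in q]


def mean_abs_error(original, restored):
    return sum(abs(a - b) for a, b in zip(original, restored)) / len(original)


# Hypothetical weights with one large outlier (8.0).
weights = [0.02, -0.05, 0.03, 0.07, 0.01, -0.04, 8.0, 0.06]

# One scale for the whole tensor versus one scale per block of four weights.
per_tensor = dequantize_blockwise(quantize_blockwise(weights, block_size=len(weights)))
per_block = dequantize_blockwise(quantize_blockwise(weights, block_size=4))

print("per-tensor error:", mean_abs_error(weights, per_tensor))
print("per-block error: ", mean_abs_error(weights, per_block))
```

With a single scale, the outlier forces every small weight to round to zero. With per-block scales, only the block containing the outlier suffers.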

Example Use Case (GGML/GGUF): Imagine you want to run a 7B parameter Llama-2 model on your MacBook. An FP32 version would require ~28GB of RAM, likely exceeding your laptop's capacity. A Q4_0 quantized GGUF version might only need ~4GB, making it perfectly runnable on a standard laptop CPU.

# Example command using llama.cpp to run a 4-bit (Q4_0) quantized Llama-2 model
# First, you'd download a Llama-2-7B-chat.Q4_0.gguf file into models/
# Note: recent llama.cpp builds name this binary llama-cli instead of main
./main -m models/Llama-2-7B-chat.Q4_0.gguf -p "Tell me a short story about a space-faring cat." -n 256

2. Pruning: Trimming the Fat

Concept: Pruning involves removing redundant or less important connections (weights), neurons, or even entire layers from a neural network without significantly impacting its performance. The idea is that many parameters in over-parameterized LLMs contribute little to the model's overall function.

Why it works:

- Reduced Model Size: Directly decreases the number of parameters, saving memory.

- Faster Inference: Fewer computations are needed during forward passes.

Recent Developments & Challenges:

- Unstructured Pruning: Removes individual weights, leading to sparse models. While effective in reducing parameters, it often doesn't translate to speedups on standard hardware because sparse matrix operations can be slower than dense ones unless specialized hardware is used.

- Structured Pruning: Removes entire neurons, channels, or layers. This results in smaller, dense models that are more amenable to acceleration on existing hardware.

- Dynamic Pruning: Pruning applied during the training process, allowing the model to adapt to the pruned structure.

Pruning in LLMs is challenging because of their intricate interdependencies. While promising, it's often combined with other techniques like quantization for maximum effect.
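The difference between unstructured and structured pruning is easy to see on a toy weight matrix. This is a minimal sketch with made-up weights and helper names, using weight magnitude and row norm as the importance criteria; real pruning methods for LLMs use more careful criteria and usually some retraining.

```python
def magnitude_prune(matrix, sparsity):
    """Unstructured pruning: zero out the smallest-magnitude fraction of weights."""
    flat = sorted(abs(w) for row in matrix for w in row)
    k = int(len(flat) * sparsity)            # number of weights to remove
    if k == 0:
        return [row[:] for row in matrix]
    threshold = flat[k - 1]                  # ties at the threshold are pruned too
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in matrix]


def prune_rows(matrix, keep):
    """Structured pruning: keep only the `keep` rows (neurons) with the largest L2 norm."""
    norms = [sum(w * w for w in row) ** 0.5 for row in matrix]
    ranked = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])             # preserve the original row order
    return [matrix[i][:] for i in kept]


# Hypothetical 4x3 weight matrix: each row is one neuron.
W = [
    [0.90, -0.02, 0.40],
    [0.01, 0.03, -0.02],
    [-0.70, 0.05, 0.60],
    [0.04, -0.80, 0.02],
]

sparse = magnitude_prune(W, sparsity=0.5)    # same shape, half the entries zeroed
dense_small = prune_rows(W, keep=3)          # smaller but still dense matrix

print(sparse)
print(dense_small)
```

The unstructured result keeps its 4x3 shape, so a standard dense matrix multiply does the same amount of work. The structured result is a genuinely smaller 3x3 matrix, which is why it speeds up on ordinary hardware.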

3. Knowledge Distillation: Learning from the Master

Concept: Knowledge distillation is a technique where a smaller, more efficient "student" model is trained to mimic the behavior of a larger, more powerful "teacher" model. The student learns not just from the hard labels (e.g., correct answer) but also from the "soft targets" (e.g., probability distributions over all possible answers) provided by the teacher.
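One common way to combine hard labels and soft targets is a weighted sum of a cross-entropy term and a temperature-softened KL divergence term. The sketch below follows that widely used formulation in plain Python; the logits, the temperature, and the weighting factor alpha are illustrative values chosen for this example.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                              # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * cross-entropy on the hard label."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    ce = -math.log(softmax(student_logits)[true_label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


teacher = [4.0, 1.0, 0.5]          # hypothetical teacher logits over three classes
student_good = [3.5, 1.2, 0.4]     # student that roughly agrees with the teacher
student_bad = [0.5, 1.0, 4.0]      # student that disagrees

loss_good = distillation_loss(student_good, teacher, true_label=0)
loss_bad = distillation_loss(student_bad, teacher, true_label=0)
print(loss_good, loss_bad)
```

The soft-target term rewards the student for matching the teacher's full distribution, including how it ranks the wrong answers, which a hard label alone cannot convey.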

Why it works:

- Transfer of Knowledge: The student model gains the generalization capabilities of the teacher without needing to be as large or complex.

- Reduced Size & Cost: The student model is significantly smaller and faster to train and deploy.

Recent Developments:

- Task-Specific Distillation: Distilling specific capabilities (e.g., summarization, question answering, code generation) from a large LLM into a smaller, specialized model. This creates highly efficient models for particular tasks.

- Progressive Distillation: Gradually distilling knowledge from a larger model to a sequence of progressively smaller student models.

- Self-Distillation: A single model acts as both teacher and student, often by using an ensemble of its own predictions or different training stages.

Example Use Case: Imagine you have access to a powerful, proprietary LLM (the teacher) for text summarization. You can train a much smaller, open-source model (the student) on a dataset of documents and their summaries generated by the teacher. The student learns to summarize effectively, but with far fewer parameters, making it cheaper and faster to deploy.

4. Parameter-Efficient Fine-Tuning (PEFT): Adapting with Finesse

Concept: Fine-tuning a full LLM (e.g., 70B parameters) for a specific task is incredibly resource-intensive. PEFT methods address this by only updating a small fraction of the model's parameters, or by adding a small number of new, trainable parameters, while keeping the majority of the pre-trained weights frozen.

Why it works:

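- Massive Memory Savings: The frozen base weights still have to be loaded, but they need no gradients or optimizer states, which normally dominate training memory. Combined with a quantized base model, this makes fine-tuning feasible on consumer-grade GPUs.

- Faster Training: Fewer parameters to update means less gradient and optimizer work per training step.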

- Reduced Storage: Storing multiple fine-tuned versions of a model only requires storing the small, task-specific adapters, not entire copies of the base model.

Recent Developments & Examples:

- LoRA (Low-Rank Adaptation): One of the most popular and effective PEFT methods. LoRA injects small, trainable matrices (adapters) into each layer of the transformer architecture. These adapters learn low-rank updates to the original weight matrices. During inference, these adapters can be merged back into the original weights, incurring no additional latency.

- QLoRA (Quantized LoRA): Takes LoRA a step further by quantizing the base pre-trained model (e.g., to 4-bit precision) and then applying LoRA adapters. This drastically reduces the memory footprint during fine-tuning: the QLoRA authors report fine-tuning a 65B-parameter model on a single 48GB GPU, and smaller models fit on consumer GPUs with 24GB VRAM.

- Prefix-Tuning: Prepends a small set of trainable "prefix" vectors to the model's internal activations (the attention keys and values at each layer), which guides the model's generation without modifying its core weights.

- P-Tuning / Prompt-Tuning: Optimizes a set of "soft prompts" (continuous vectors) that are prepended to the input. These soft prompts learn to steer the LLM towards desired outputs for specific tasks.
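The LoRA idea of a low-rank update that can be merged back into the original weights can be sketched with tiny matrices. This is a plain-Python illustration under assumed toy dimensions (a 4x4 weight, rank 1); the helper names and all the values are made up for this example, and this is not the PEFT library's implementation.

```python
def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def lora_forward(W, A, B, x, alpha, r):
    """Frozen path W x plus the scaled low-rank path B (A x)."""
    scale = alpha / r
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


def merge_lora(W, A, B, alpha, r):
    """Fold the adapter into the weights: W' = W + (alpha / r) * B A."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


r, alpha = 1, 2
W = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0],
     [0.0, 0.0, 0.0, 1.0]]             # frozen 4x4 base weight
A = [[0.1, 0.2, 0.3, 0.4]]             # trainable, r x d_in
B = [[0.5], [0.0], [-0.5], [1.0]]      # trainable, d_out x r
x = [1.0, 2.0, 3.0, 4.0]

adapter_out = lora_forward(W, A, B, x, alpha, r)
merged_out = matvec(merge_lora(W, A, B, alpha, r), x)
print(adapter_out)
print(merged_out)
```

Both paths give the same output up to floating-point rounding, which is why a merged adapter adds no inference latency. The adapter here has 8 trainable values against 16 in the full matrix; at realistic layer sizes the gap is several orders of magnitude.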

Example Use Case (LoRA): You have a Llama-2 13B model and want to fine-tune it for a customer support chatbot. Instead of fine-tuning all 13 billion parameters, you apply LoRA. With the base model loaded in reduced precision (for example 8-bit or 4-bit, since 13B parameters in FP16 already take about 26GB), this allows you to train the model on your customer support dialogues using a single GPU (e.g., an RTX 3090 with 24GB VRAM), generating a small adapter file (e.g., 50MB) that can be loaded alongside the base model.

# Conceptual example using the Hugging Face PEFT library with LoRA
from peft import LoraConfig, get_peft_model
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "meta-llama/Llama-2-7b-hf"
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

lora_config = LoraConfig(
    r=8,  # Rank of the low-rank update matrices
    lora_alpha=16,  # Alpha parameter for LoRA scaling (update is scaled by lora_alpha / r)
    target_modules=["q_proj", "v_proj"],  # Modules to apply LoRA to
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
)

peft_model = get_peft_model(model, lora_config)
peft_model.print_trainable_parameters()
# Output (approximately): trainable params: 4,194,304 || all params: ~6.74B || trainable%: ~0.062
# Only a tiny fraction of parameters are trainable!
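The trainable-parameter count of 4,194,304 in that output can be checked by hand. The sketch below assumes the publicly documented Llama-2-7B shape (hidden size 4096, 32 transformer layers) and the configuration above (rank 8 on q_proj and v_proj, both 4096x4096 projections).

```python
hidden_size = 4096        # Llama-2-7B hidden dimension (assumed from the public model config)
num_layers = 32           # number of transformer layers (same assumption)
rank = 8                  # LoRA rank r from the config above
targets_per_layer = 2     # q_proj and v_proj

# Each adapted module adds A (rank x d_in) and B (d_out x rank).
params_per_module = rank * (hidden_size + hidden_size)
trainable = params_per_module * targets_per_layer * num_layers

base_params = 6_738_415_616   # base model size quoted in the text
print(f"{trainable:,} trainable parameters")
print(f"{100 * trainable / base_params:.4f}% of the base model")
```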

5. Efficient Inference Frameworks & Architectures: Speed Demons

Concept: Beyond model-level optimizations, specialized software frameworks and architectural innovations are crucial for maximizing LLM inference speed and throughput.

Why it works:

- Optimized Memory Access: LLM inference is often bottlenecked by memory bandwidth (moving data to/from GPU memory). These frameworks optimize how data is stored and accessed.

- Parallelism & Batching: Efficiently processing multiple requests simultaneously.

- Hardware Acceleration: Leveraging specific features of GPUs or other accelerators.
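To see why memory is the bottleneck, it helps to estimate the size of the KV cache, which grows with every generated token. The sketch below assumes the Llama-2-7B shape (32 layers, 32 key/value heads, head dimension 128) and FP16 storage; the function name is made up for this example.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for ONE token across all layers."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value   # 2x: keys and values


# Assumed Llama-2-7B shape, FP16 cache (2 bytes per value).
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=32, head_dim=128)
full_context = per_token * 4096        # one sequence at a 4096-token context

print(f"{per_token / 1024:.0f} KiB per token")
print(f"{full_context / 1024**3:.1f} GiB for one 4096-token sequence")
```

At roughly half a mebibyte per token, a handful of long concurrent requests can consume as much GPU memory as the model weights themselves.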

Recent Developments & Examples:

- vLLM: An open-source library for fast LLM inference. Key innovations include:

  - PagedAttention: Optimizes the KV (Key-Value) cache, which stores intermediate attention states. Instead of allocating contiguous memory for each sequence, PagedAttention uses a paged memory management system (similar to virtual memory in operating systems), allowing for more efficient memory utilization and avoiding fragmentation. This significantly boosts throughput.

  - Continuous Batching: Processes incoming requests continuously, rather than waiting for a full batch, reducing latency and maximizing GPU utilization.

- FlashAttention: A highly optimized attention mechanism that reorders the computation of attention to reduce the number of memory reads and writes (I/O operations) to high-bandwidth memory (HBM). This results in significant speedups for both training and inference, especially on long sequences.

- Speculative Decoding (or Assisted Generation): Uses a smaller, faster "draft" model to propose several tokens ahead. The larger, more accurate "target" model then verifies these tokens in a single parallel pass. Tokens that match what the target would have produced are accepted; at the first mismatch, the target model's own token is used and the rest of the draft is discarded. This can provide significant speedups (2-3x is commonly reported) without changing the quality of the output.

- Triton Inference Server: NVIDIA's flexible, open-source inference serving software that can deploy models from various frameworks (TensorFlow, PyTorch, ONNX, etc.). It provides features like dynamic batching, concurrent model execution, and model ensembles to optimize throughput and latency.
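The draft-and-verify loop of speculative decoding can be sketched with toy "models" that are just functions from a token list to the next token. This is the greedy special case only, and both toy models are invented for illustration; real systems verify the whole draft in one batched forward pass and use a rejection-sampling rule when sampling.

```python
def greedy_decode(next_token, prompt, max_new_tokens):
    """Baseline: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(next_token(tokens))
    return tokens


def speculative_decode(target_next, draft_next, prompt, max_new_tokens, k=4):
    """Greedy speculative decoding; returns (tokens, number of target verification passes)."""
    tokens = list(prompt)
    target_passes = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1) The cheap draft model proposes k tokens autoregressively.
        draft = []
        for _ in range(k):
            draft.append(draft_next(tokens + draft))
        # 2) The target checks every drafted position (one parallel pass in a real system).
        target_passes += 1
        accepted = []
        for i in range(k):
            expected = target_next(tokens + draft[:i])
            if draft[i] == expected:
                accepted.append(draft[i])
            else:
                accepted.append(expected)      # target's correction replaces the bad token
                break
        else:
            accepted.append(target_next(tokens + draft))   # all accepted: one bonus token
        tokens.extend(accepted)
    return tokens[:len(prompt) + max_new_tokens], target_passes


def target_next(ctx):
    """Toy target model: next token depends only on the last token."""
    return (ctx[-1] * 3 + 1) % 10


def draft_next(ctx):
    """Toy draft model: agrees with the target except after token 3."""
    return 9 if ctx[-1] == 3 else target_next(ctx)


out, passes = speculative_decode(target_next, draft_next, [1], max_new_tokens=8, k=4)
print(out, "target passes:", passes)
print(greedy_decode(target_next, [1], 8))
```

The output matches plain greedy decoding with the target model, but the target is consulted in far fewer passes than there are generated tokens.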

Example Use Case (vLLM):

If you're building an API service that needs to handle hundreds or thousands of concurrent LLM inference requests, vLLM can dramatically increase your throughput and reduce average latency compared to a naive implementation, thanks to PagedAttention and continuous batching.
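The paging idea behind PagedAttention can be illustrated with a toy block allocator. This is a simplified sketch of the bookkeeping only, not vLLM's actual implementation; the class and method names are made up for this example.

```python
class PagedKVCache:
    """Toy allocator: each sequence maps to a list of fixed-size physical blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}    # seq_id -> list of physical block ids
        self.seq_lens = {}        # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve a slot for one more token; allocate a block only when needed."""
        length = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:          # first token, or last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV cache blocks")
            table.append(self.free_blocks.pop(0))
        self.seq_lens[seq_id] = length + 1
        return table[-1], length % self.block_size  # (physical block, offset in block)

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=2)
slots_a = [cache.append_token("a") for _ in range(3)]   # sequence "a" stores 3 tokens
slot_b = cache.append_token("b")                        # sequence "b" stores 1 token

print(cache.block_tables)    # blocks are handed out on demand, not reserved up front
cache.free("a")
print(cache.free_blocks)     # freed blocks are immediately reusable by other requests
```

Because memory is claimed one small block at a time, a short request never holds a reservation sized for the maximum context length, which is what leaves room for more concurrent sequences.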

# Conceptual example of using vLLM
from vllm import LLM, SamplingParams

# Load a model
llm = LLM(model="meta-llama/Llama-2-7b-hf")

# Prepare prompts and sampling parameters
prompts = [
    "Hello, my name is",
    "The capital of France is",
    "Write a Python function to compute the Fibonacci sequence.",
]
sampling_params = SamplingParams(temperature=0.7, top_p=0.95, max_tokens=100)

# Generate texts
outputs = llm.generate(prompts, sampling_params)

for output in outputs:
    prompt = output.prompt
    generated_text = output.outputs[0].text
    print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

6. Smaller, Specialized Architectures: Built for Purpose

Concept: Instead of always scaling up, this approach focuses on designing inherently smaller, more efficient LLM architectures from the ground up. The insight here is that "smaller" doesn't necessarily mean "less capable," especially when models are trained on high-quality, curated data or for specific tasks.

Why it works:

- Reduced Resource Needs: By design, these models require less memory and computational power.

- Faster Training & Inference: Fewer parameters and layers directly translate to speed.

- Targeted Capabilities: Can be highly effective for specific domains without the overhead of a general-purpose giant.

Recent Developments & Examples:

- Microsoft's Phi-2: A 2.7 billion parameter model that Microsoft describes as achieving state-of-the-art performance among base language models with fewer than 13 billion parameters. It achieves this by training on carefully curated, "textbook-quality" data.

- Orca 2 (Microsoft): A series of smaller models (7B and 13B parameters) that excel at reasoning tasks. They are trained by imitating the step-by-step reasoning process of larger, more capable models, rather than just matching final outputs.

- TinyLlama: An open-source project aiming to pre-train a 1.1B Llama-like model on 3 trillion tokens. The goal is to create a compact, powerful base model that can be easily fine-tuned for various applications.

- Mistral 7B: A 7.3B parameter model that, according to Mistral AI's reported results, outperforms Llama 2 13B on all benchmarks tested and Llama 1 34B on many. Its strong performance for its size is partly due to architectural choices like Grouped-Query Attention (GQA) and Sliding Window Attention (SWA).
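The two attention variants named for Mistral 7B can be sketched in a few lines. This is a conceptual illustration with toy sizes and made-up function names; the exact window semantics and head counts of any given model should be taken from its own configuration.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j."""
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]


def kv_head_for_query(query_head, num_query_heads, num_kv_heads):
    """Grouped-query attention: consecutive query heads share one key/value head."""
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


mask = sliding_window_mask(seq_len=5, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

# With 32 query heads and 8 KV heads, four query heads share each KV head,
# so the KV cache is four times smaller than with one KV head per query head.
print([kv_head_for_query(h, 32, 8) for h in range(8)])
```

Sliding-window attention caps how many past positions each token looks at, and grouped-query attention shrinks the KV cache, so both reduce memory and compute at long sequence lengths.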

These models challenge the "bigger is always better" paradigm, proving that intelligent architectural design and data curation can yield highly capable models with significantly fewer parameters.

Practical Applications and Impact

The cumulative effect of these efficiency techniques is profound, opening up a world of possibilities:

- Local LLMs on Consumer Hardware: Running sophisticated LLMs like Llama-2 on a MacBook, Raspberry Pi, or even a smartphone is no longer a dream but a reality, thanks to GGML/GGUF and quantization.

- Cost-Effective Cloud Deployment: Businesses can deploy custom LLM solutions on cloud platforms without incurring exorbitant GPU costs, making advanced AI accessible to a wider range of companies.

- Real-time AI Assistants: Faster inference enables truly interactive chatbots, code completion tools, and intelligent search engines that respond instantly.

- Edge AI for Specialized Tasks: Imagine an LLM running on an industrial robot to understand voice commands, or on a smart camera to describe complex scenes, all without constant cloud connectivity.

- Democratized Customization: Researchers and developers can fine-tune open-source LLMs for niche domains (e.g., legal, medical, scientific research) with modest computational resources, fostering innovation and creating highly specialized AI tools.

- Sustainable AI Development: By reducing the energy footprint of LLMs, these techniques contribute to a more environmentally conscious approach to AI, aligning with global sustainability goals.

The Road Ahead

The pursuit of efficient LLMs is an ongoing journey. Research continues at a rapid pace, with new techniques and optimizations emerging constantly. Hybrid approaches, combining multiple methods (e.g., QLoRA for fine-tuning a quantized base model, then serving it with vLLM), are becoming the norm.

The future of LLMs is not just about building bigger models, but about building smarter, more accessible, and more sustainable ones. By making these powerful technologies available to everyone, we unlock their true potential to drive innovation across every industry and aspect of our lives. The era of efficient LLMs is here, and it's transforming the landscape of artificial intelligence for the better.