Retrieval-Augmented Generation (RAG): Enhancing LLMs with External Knowledge

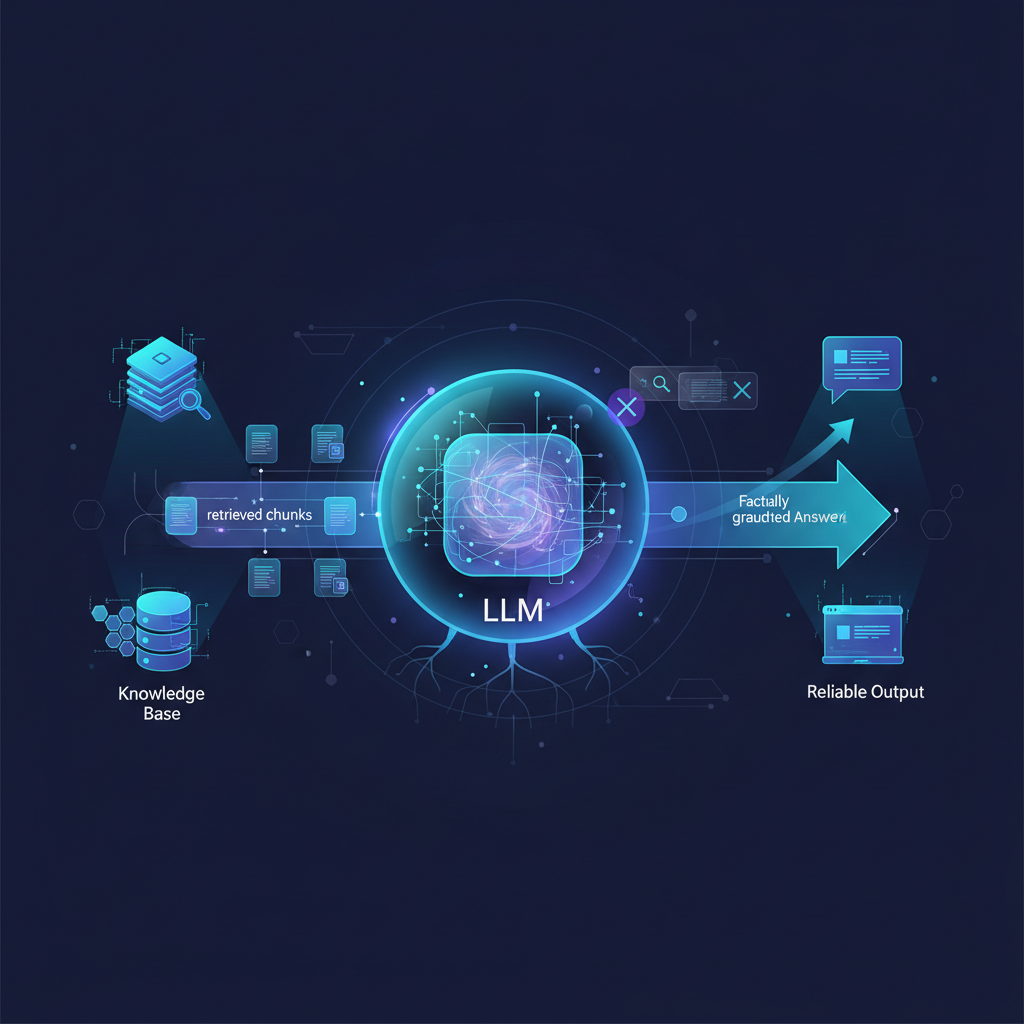

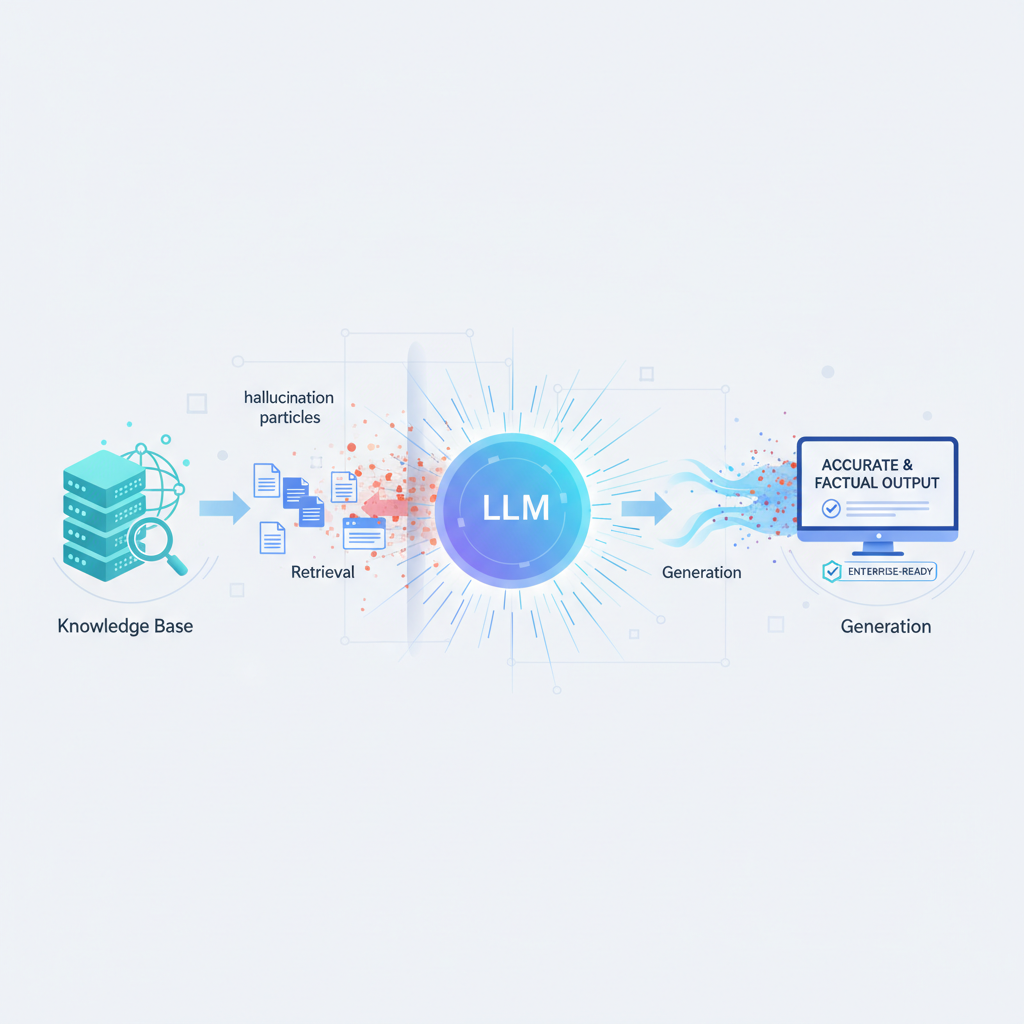

Explore Retrieval-Augmented Generation (RAG), a revolutionary technique that combines the generative power of Large Language Models (LLMs) with external knowledge retrieval. Discover how RAG addresses LLM limitations like hallucination and outdated information, making AI systems more accurate and trustworthy.

The landscape of Artificial Intelligence is evolving at an unprecedented pace, with Large Language Models (LLMs) at the forefront of this revolution. These models have demonstrated incredible capabilities in understanding, generating, and manipulating human language. However, their power comes with inherent limitations: they can "hallucinate" facts, their knowledge is capped at their training data, and they often lack domain-specific expertise. Enter Retrieval-Augmented Generation (RAG) – a paradigm shift that marries the generative power of LLMs with the precision of external knowledge retrieval.

RAG has rapidly moved from a theoretical concept to a cornerstone of practical LLM applications, addressing these core limitations head-on. It's not just a buzzword; it's a critical technique for building reliable, accurate, and trustworthy AI systems in the real world.

Why RAG is a Game-Changer for LLMs

The enthusiasm surrounding RAG isn't accidental. It directly tackles the most pressing challenges faced by "vanilla" LLMs, unlocking new levels of performance and applicability:

- Combating Hallucinations: LLMs, by their nature, are probabilistic models. They predict the next most likely token, which can sometimes lead to generating plausible but factually incorrect information. RAG grounds their responses in verifiable, external knowledge sources, drastically reducing the incidence of hallucinations.

- Overcoming Knowledge Cut-offs: Pre-trained LLMs have a knowledge cut-off date, meaning they aren't aware of events or information that occurred after their last training cycle. RAG allows them to access and incorporate the most up-to-date information from external databases, ensuring currency and relevance.

- Achieving Domain Specificity: General-purpose LLMs are, well, generalists. For specialized tasks in fields like law, medicine, or internal corporate policies, they lack the necessary depth. RAG transforms them into domain experts by providing access to curated, domain-specific documents without the expensive and time-consuming process of continuous fine-tuning.

- Enhancing Explainability and Trust: One of the major hurdles for AI adoption is the "black box" problem. RAG provides source attribution, allowing users to verify the information presented by the LLM. By citing the documents from which information was retrieved, RAG significantly increases trust and transparency.

- Cost-Effectiveness and Agility: For many applications, continuously fine-tuning an LLM with new data is prohibitively expensive and slow. RAG offers a more agile and cost-effective solution, allowing knowledge bases to be updated independently of the LLM itself.

The Anatomy of a RAG System: Core Components

Implementing an effective RAG system is more than just plugging an LLM into a database. It involves a sophisticated pipeline of data engineering, natural language processing, and information retrieval techniques. Let's break down the core components:

1. Data Ingestion & Preprocessing

Before any retrieval can happen, your knowledge base needs to be prepared. This initial step is often the most challenging due to the diversity of data sources.

- Challenge: Handling various data formats (PDFs, HTML, Markdown, database records, images), extracting meaningful text, and cleaning it of irrelevant artifacts (headers, footers, boilerplate).

- Insight: Robust parsing libraries are crucial. Tools like

Unstructured.ioorLlamaParsecan intelligently extract text from complex documents, including handling embedded tables and images (via OCR). For structured data, standard ETL processes apply. The goal is to transform raw data into clean, searchable text.

2. Chunking Strategy

Documents are often too large to fit into an LLM's context window or to be efficiently searched. They need to be broken down into smaller, semantically meaningful units called "chunks."

- Challenge: How to segment documents without losing crucial context or creating chunks that are too large or too small. Too small, and context is fragmented; too large, and it exceeds the LLM's input limit.

- Insight:

- Fixed-size Chunking with Overlap: A common starting point, where documents are split into fixed-length segments (e.g., 500 tokens) with some overlap between consecutive chunks to preserve context.

- Semantic Chunking: Utilizes LLMs or sentence transformers to identify natural breaks in text, such as paragraph or section boundaries, ensuring each chunk represents a coherent idea.

- Hierarchical Chunking: Creates chunks at different granularities (e.g., a document summary, section summaries, individual paragraphs). Retrieval can then happen at multiple levels, providing both broad and specific context.

- Parent-Child Chunking: A sophisticated technique where small, semantically rich "child" chunks are retrieved, but then a larger "parent" chunk (e.g., the full paragraph or section) containing the child is passed to the LLM. This provides more context for generation while keeping retrieval efficient.

3. Embedding Models

Once chunks are prepared, they need to be converted into numerical representations called "embeddings." These dense vectors capture the semantic meaning of the text, allowing for similarity searches.

- Challenge: Choosing an embedding model that accurately captures the semantic nuances of your domain and data.

- Insight:

- Open-source Options: Models like

BAAI/bge-large-en-v1.5orall-MiniLM-L6-v2(from the Sentence-BERT family) offer excellent performance and are ideal for self-hosting. - Proprietary Services: OpenAI's

text-embedding-ada-002ortext-embedding-3-largeoffer high quality and ease of use, often at a cost. - Evaluation & Fine-tuning: The quality of your embeddings directly impacts retrieval relevance. Evaluate models on your specific data. For highly specialized domains, fine-tuning an existing embedding model on your own corpus can significantly boost performance.

- Open-source Options: Models like

4. Vector Database (Vector Store)

Embeddings need a place to live and be efficiently queried. Vector databases are specialized databases designed for this purpose.

- Challenge: Storing and querying millions or even billions of high-dimensional vectors with low latency.

- Insight: Key players include Pinecone, Weaviate, Milvus, Qdrant, and Chroma. For local or smaller-scale applications, Faiss is a powerful option. When choosing, consider:

- Scalability: Can it handle your expected data volume and query load?

- Latency: How quickly can it return results?

- Cost: Cloud-hosted solutions have associated costs.

- Filtering Capabilities: Can you filter results based on metadata (e.g., document type, author, date)? This is crucial for precise retrieval.

5. Retrieval Strategy

This is where the "R" in RAG truly shines. Given a user query, how do you find the most relevant chunks from your vector store?

- Challenge: Ensuring the retrieved chunks are highly relevant to the user's intent, even with ambiguous or complex queries.

- Insight:

- Vector Similarity Search (VSS): The most common method. The user's query is also embedded, and the vector database finds chunks whose embeddings are "closest" (e.g., using cosine similarity) to the query embedding.

- Hybrid Search: Combines VSS with traditional keyword search (like BM25). VSS captures semantic similarity, while keyword search ensures exact matches for specific terms, often leading to more robust retrieval.

- Re-ranking: After an initial retrieval of, say, 50 candidate chunks, a smaller, more powerful model (a "cross-encoder" or a dedicated re-ranker like Cohere Rerank) can re-order the top-N results. This deeper semantic understanding helps surface the truly best chunks.

- Query Transformation/Expansion: An LLM can be used to rephrase the user's initial query, break it into sub-questions, or add relevant keywords before performing retrieval. This can significantly improve results for complex or vague queries.

6. Generation (LLM Integration)

With the most relevant chunks retrieved, they are then passed to the LLM as context for generating a response.

- Challenge: Effectively integrating the retrieved context into the LLM's prompt and ensuring the LLM uses only this context.

- Insight:

- Prompt Engineering: Crafting clear, explicit instructions for the LLM is paramount. For example: "You are an expert assistant. Answer the following question based only on the provided context. If the answer is not in the context, state that you don't know. Cite your sources."

- Context Window Management: LLMs have finite context windows. If too many chunks are retrieved, summarization of the chunks or more aggressive filtering might be necessary to fit within the limit.

- Generation Parameters: Adjusting

temperature(controls randomness) andtop-p(controls token sampling) can influence how creative or factual the LLM's response is. For RAG, lowertemperaturevalues are often preferred to encourage factual adherence.

7. Evaluation

How do you know if your RAG system is actually working well? Evaluation is critical for iteration and improvement.

- Challenge: Quantifying the performance of a RAG system, which involves both retrieval and generation quality.

- Insight:

- Retrieval Metrics:

- Recall@k: How often are the relevant documents among the top

kretrieved? - Precision@k: Of the top

kretrieved, how many are relevant? - Mean Reciprocal Rank (MRR): Measures the ranking of the first relevant document.

- Recall@k: How often are the relevant documents among the top

- Generation Metrics:

- Faithfulness: Is the generated answer supported by the retrieved context? (Crucial for hallucination reduction)

- Answer Relevance: Is the answer relevant to the user's query?

- Context Relevance: Is the retrieved context actually relevant to the query?

- Answer Correctness: Is the answer factually correct? (Often requires human review or LLM-based evaluation against ground truth.)

- Tools: Frameworks like Ragas, TruLens, and DeepEval provide structured ways to automate and analyze RAG evaluation.

- Retrieval Metrics:

Practical Applications and Use Cases

RAG is being adopted across diverse industries, powering intelligent applications that were previously difficult to achieve:

- Enterprise Search & Q&A: Imagine a chatbot that can answer complex questions about internal HR policies, technical documentation, or customer support logs, all grounded in your company's private knowledge base. This reduces employee onboarding time and improves customer service.

- Personalized Content Generation: Generating summaries of lengthy reports, creating personalized marketing copy based on customer data, or drafting legal briefs by pulling relevant precedents from vast legal databases.

- Medical & Legal Research: Assisting doctors and lawyers by providing accurate, source-backed information from vast databases of medical literature, clinical trials, or legal statutes and case law.

- Code Generation & Documentation: Helping developers by retrieving relevant code snippets, API documentation, or best practices from internal repositories, accelerating development cycles.

- Data Analysis & Reporting: Summarizing complex financial reports, generating insights from large datasets, or creating executive summaries based on specific data points and external market context.

Challenges and Future Directions

While RAG is powerful, it's not without its challenges, and the field is continuously innovating:

- Scalability & Cost: Managing massive document corpora and high query volumes efficiently, especially for real-time applications, remains an engineering challenge.

- Latency: For interactive applications, minimizing the time taken for retrieval and generation is critical.

- Multimodality: Extending RAG to incorporate images, audio, and video as retrieval sources (e.g., "Show me the part of the video where the CEO talks about Q3 earnings") is an exciting frontier.

- Security & Privacy: Handling sensitive data within vector stores and ensuring robust access controls and data governance are paramount for enterprise adoption.

- Dynamic Knowledge: Keeping the knowledge base up-to-date with continuous changes in information requires robust synchronization and indexing pipelines.

- Complex Reasoning: Enabling RAG to handle multi-hop questions (questions requiring information from multiple disparate sources and synthesizing them) and more sophisticated reasoning remains an active research area.

- Agentic RAG: Integrating RAG seamlessly into autonomous AI agents, allowing them to intelligently decide when to retrieve information, formulate queries, and use the retrieved context to plan and execute complex tasks.

Conclusion

Retrieval-Augmented Generation (RAG) stands as one of the most impactful and actively developed practical applications of LLMs today. It provides a robust framework for overcoming the inherent limitations of large language models, transforming them from impressive but sometimes unreliable generalists into trustworthy, domain-specific experts.

For AI practitioners and enthusiasts, mastering RAG involves navigating a complex yet rewarding landscape of data engineering, advanced NLP techniques, vector databases, and sophisticated prompt engineering. Its ability to ground LLMs in verifiable, up-to-date, and domain-specific knowledge makes it an indispensable tool for building reliable and trustworthy AI applications. As innovations continue in retrieval algorithms, chunking strategies, and evaluation methodologies, RAG will undoubtedly remain at the forefront of practical AI development, continuously pushing the boundaries of what LLMs can achieve in the real world.