Multi-Modal Generative AI & Autonomous Agents: A Paradigm Shift in AI

Explore the revolutionary convergence of multi-modal generative AI and autonomous agents, moving beyond narrow tasks to embodied intelligence. This synergy redefines technology interaction and complex problem-solving.

The landscape of artificial intelligence is undergoing a profound transformation, moving beyond specialized, narrow tasks to embrace systems capable of understanding, reasoning, and acting across diverse modalities and complex environments. At the heart of this revolution lies the potent synergy between multi-modal generative AI and autonomous agents. This convergence isn't merely an incremental step; it represents a paradigm shift towards embodied intelligence and unprecedented levels of complex task automation, promising to redefine how we interact with technology and solve real-world problems.

The Genesis: From Isolated AI to Integrated Intelligence

For years, AI development often proceeded along distinct tracks. We had powerful natural language processing (NLP) models, impressive computer vision systems, and sophisticated robotics. Each excelled in its domain but struggled to integrate seamlessly with others. Generative AI, initially popularized by text-to-text models like GPT, began to bridge these gaps by demonstrating an ability to create content rather than just analyze it. Simultaneously, the concept of autonomous agents, systems designed to perceive, plan, and execute actions to achieve goals, gained traction.

The real breakthrough, however, emerges when these two powerful concepts intertwine. Imagine an agent that doesn't just process text, but sees the world, hears instructions, generates novel solutions, and acts upon them. This is the promise of multi-modal generative AI empowering autonomous agents.

Multi-Modal Generative AI: Beyond Text and Pixels

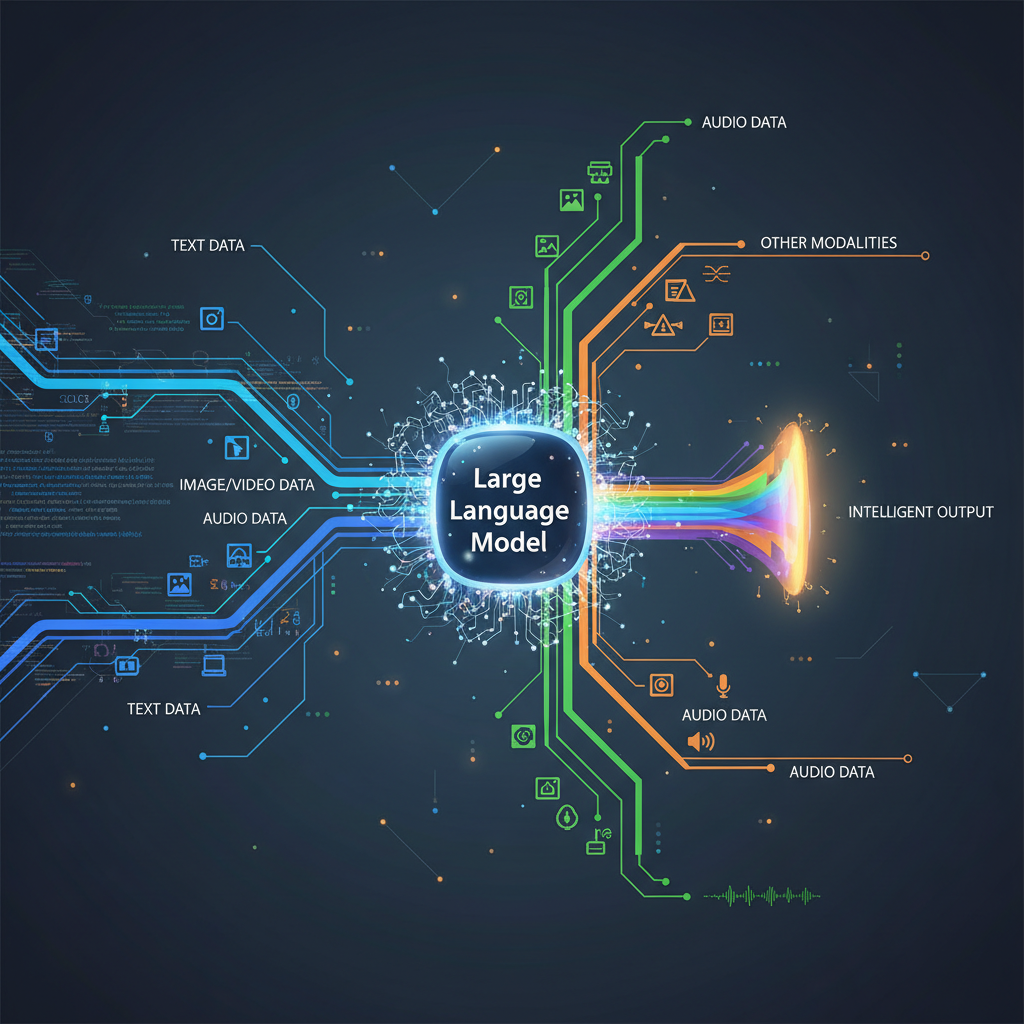

Multi-modal generative AI refers to models that can process and generate content across multiple data types – text, images, audio, video, 3D models, code, and even haptic feedback. Unlike earlier models that might translate text to image or vice versa, true multi-modal models can understand the interconnections and context across these modalities.

Key Characteristics:

- Unified Representation: These models often learn a shared, high-dimensional latent space where different modalities are represented in a semantically consistent manner. This allows for seamless translation and generation across modalities.

- Cross-Modal Understanding: They can infer relationships between different data types. For example, a model can learn that the text description "red car" corresponds to a specific visual representation, or that the sound of splashing water relates to a video of a swimming pool.

- Diverse Generation: Given an input in one or more modalities, they can generate coherent and contextually relevant output in another or multiple modalities. For example, a text prompt could generate an image, a descriptive caption, and even a short video clip.
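The idea of a shared latent space can be illustrated with a small Python sketch. The embedding vectors below are hand-written toy values, not outputs of a real encoder; in practice they would come from jointly trained text and image encoders. The file names and the helpers `cosine_similarity` and `best_match` are hypothetical names chosen for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy embeddings (assumed values): both modalities live in the same 3-d space.
text_embeddings = {
    "a red car": [0.9, 0.1, 0.0],
    "splashing water": [0.0, 0.2, 0.9],
}
image_embeddings = {
    "car_photo.png": [0.8, 0.2, 0.1],
    "pool_video_frame.png": [0.1, 0.1, 0.95],
}

def best_match(query_vec, candidates):
    """Return the candidate key whose embedding is closest to the query."""
    return max(candidates, key=lambda k: cosine_similarity(query_vec, candidates[k]))

for caption, vec in text_embeddings.items():
    print(caption, "->", best_match(vec, image_embeddings))
```

Because both modalities share one space, cross-modal retrieval reduces to a nearest-neighbour search; the same comparison would work in the other direction, from image to text.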

Technical Underpinnings: Modern multi-modal models often leverage transformer architectures, which have proven exceptionally effective for sequential data. For multi-modal inputs, techniques like cross-attention mechanisms allow different modalities to "attend" to each other, learning dependencies. For instance, in a vision-language model, visual tokens (patches of an image) can attend to text tokens, and vice versa, enabling a rich, joint understanding.
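As a rough illustration of cross-attention, the sketch below implements scaled dot-product attention in plain Python, with text tokens as queries and image patches as keys and values. It is a simplification under stated assumptions: the token vectors are toy values, and the learned query, key, and value projection matrices that a real transformer layer applies are omitted.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values,
    weighted by how well it matches each key."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-d tokens (assumed values). Text tokens act as queries;
# image patches supply the keys and values.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

attended, weights = cross_attention(text_tokens, image_patches, image_patches)
for tok, w in zip(text_tokens, weights):
    print(tok, "attends to patches with weights", [round(x, 3) for x in w])
```

Swapping the arguments, so that image patches are the queries and text tokens the keys and values, gives the "vice versa" direction described above.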

Example: GPT-4o (Omni)

- Input: User speaks, "Tell me about this chart," while showing a complex financial graph.

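- Processing: The model processes the spoken request and the visual input (the graph's data, labels, and trends) together, drawing on the knowledge it learned during training. OpenAI describes GPT-4o as handling audio natively within a single model rather than through a separate speech-to-text stage.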

- Output: The model might respond verbally, explaining key trends, and simultaneously generate a text summary or even suggest a visual modification to highlight a specific data point.

This ability to fluidly move between modalities provides agents with a significantly richer perception of their environment and a broader palette for action.

Autonomous Agents: From Scripted Bots to Intelligent Decision-Makers

Autonomous agents are AI systems designed to operate independently within an environment to achieve specific goals. Unlike simple scripts or chatbots, true autonomous agents exhibit characteristics like:

- Perception: Gathering information from their environment.

- Planning: Formulating strategies and sequences of actions.

- Reasoning: Making decisions based on perceived information and goals.

- Action: Executing plans in the environment.

- Learning/Adaptation: Modifying their behavior based on experience.

- Memory: Storing and retrieving past experiences and knowledge.

The recent explosion of "AI agents" (e.g., AutoGPT and GPT-Engineer, along with open-source frameworks such as LangChain, LlamaIndex, and CrewAI) has largely been driven by Large Language Models (LLMs) acting as the "brain." These LLMs provide the reasoning and planning capabilities, allowing agents to break down complex tasks, generate code, interact with APIs, and even self-correct.

Agent Architecture (Simplified):

- Perception Module: Gathers data from the environment (sensors, APIs, user input).

- Memory Module: Stores short-term (context window) and long-term (vector databases, knowledge graphs) information.

- Planning/Reasoning Module (LLM Core): Interprets goals, generates plans, breaks down tasks, and reflects on outcomes.

- Action Module: Executes plans by interacting with tools, APIs, or physical actuators.

- Feedback Loop: Observes the outcome of actions and updates perception/memory for future planning.
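The modules above can be wired together in a few lines. The following is a minimal sketch, not a production design: the environment is a plain dictionary holding a number, the goal is to reach a target value, and the `plan` method is a hard-coded stub standing in for the LLM core. All class, method, and field names are assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Agent:
    goal: int
    # Short-term memory keeps only the most recent outcomes (like a context window).
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term memory keeps everything (a stand-in for a vector database).
    long_term: list = field(default_factory=list)

    def perceive(self, environment):
        """Perception module: read the current state."""
        return environment["value"]

    def plan(self, observation):
        """Planning module. A real agent would call an LLM here;
        this stub just picks a step toward the goal."""
        if observation < self.goal:
            return "increment"
        if observation > self.goal:
            return "decrement"
        return "stop"

    def act(self, action, environment):
        """Action module: apply the chosen action to the environment."""
        if action == "increment":
            environment["value"] += 1
        elif action == "decrement":
            environment["value"] -= 1

    def run(self, environment, max_steps=20):
        for _ in range(max_steps):
            observation = self.perceive(environment)
            action = self.plan(observation)
            if action == "stop":
                break
            self.act(action, environment)
            # Feedback loop: record the outcome for future planning.
            outcome = (observation, action, environment["value"])
            self.short_term.append(outcome)
            self.long_term.append(outcome)
        return environment["value"]

env = {"value": 0}
agent = Agent(goal=5)
print(agent.run(env), len(agent.long_term), len(agent.short_term))
```

The `max_steps` cap is a deliberate choice: an autonomous loop should have a hard stop in case the planner never decides it is done.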

The Convergence: Multi-Modal Generative Agents

The true power emerges when multi-modal generative AI becomes the core intelligence of autonomous agents. Here, the generative capabilities enhance every aspect of an agent's lifecycle:

- Richer Perception: Instead of just reading text logs, an agent can see a dashboard, hear a user's frustrated tone, read a technical manual, and interpret a 3D model of a faulty component – all simultaneously. The generative model helps it synthesize this disparate information into a coherent understanding.

- Sophisticated Reasoning & Planning: When faced with a problem, the agent can generate not just text-based plans, but also visual diagrams of proposed solutions, simulated outcomes, or even code snippets for new tools it needs. It can "imagine" different scenarios across modalities.

- Diverse Action Capabilities: An agent isn't limited to text-based responses or API calls. It can generate a new image, compose a piece of music, design a 3D object, write a complex program, or even synthesize a human-like voice response, directly impacting the multi-modal environment.

- Embodied Intelligence: This convergence is critical for embodied AI. For an agent to truly interact with the physical world (e.g., a robot), it needs to perceive it multi-modally (vision, touch, sound), understand it generatively (predicting physical interactions, generating novel movements), and act multi-modally (manipulating objects, communicating verbally).

How it Works (Conceptual Example):

Consider a "Creative Design Agent" tasked with designing a new product based on a vague user brief.

Input (Multi-Modal Perception):

- Text: "Design a comfortable, futuristic chair for a small apartment."

- Image: User provides a mood board of desired aesthetics (minimalist, organic shapes).

- Audio: User describes preferred materials and textures ("soft, warm, but durable").

- 3D Scan: User provides a scan of their apartment space.

Reasoning & Planning (Generative Core):

- The multi-modal generative model (acting as the agent's brain) synthesizes all inputs.

- It generates initial design concepts (3D models) based on "futuristic," "minimalist," "organic," and "small apartment" constraints, while also considering "comfortable," "soft," and "durable" properties.

- It might generate text descriptions of material choices, explaining why certain fabrics fit the "soft, warm, durable" criteria.

- It could generate simulated stress tests for the 3D models to check durability.

- The agent might identify conflicting requirements (e.g., "small apartment" vs. "very comfortable" potentially needing larger dimensions) and generate a question back to the user.

Action (Multi-Modal Generation):

- Presents several 3D chair designs (visual).

- Provides detailed material specifications (text).

- Generates a simulated rendering of the chair within the user's scanned apartment (image/video).

- Generates an audio narration explaining the design choices and trade-offs.

- If the user requests, it could even generate CAD files for manufacturing.

Feedback & Iteration:

- User provides feedback (text, voice, drawing annotations on the 3D model).

- The agent incorporates this feedback, refining its internal models and generating new iterations.

This iterative, multi-modal process is far more powerful than a text-only or image-only design tool.
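The control flow of such a design agent (check for conflicting requirements, generate candidates, fold in feedback, repeat) can be sketched in Python. Everything here is a simplifying assumption: the brief is reduced to two numbers, `generate_candidates` is a stand-in for the generative model that only proposes chair widths, and each round of user feedback is modeled as a function that returns an updated brief.

```python
from dataclasses import dataclass

@dataclass
class Brief:
    max_width_cm: float       # assumed to come from the 3D scan of the apartment
    min_seat_width_cm: float  # assumed numeric proxy for "comfortable"

def find_conflicts(brief):
    """Return clarifying questions when requirements cannot all be met."""
    questions = []
    if brief.min_seat_width_cm > brief.max_width_cm:
        questions.append(
            "The seat width needed for comfort exceeds the available space. "
            "Which should take priority?"
        )
    return questions

def generate_candidates(brief, count=3):
    """Stand-in for the generative model: propose `count` (>= 2) widths
    spread evenly across the allowed range."""
    span = brief.max_width_cm - brief.min_seat_width_cm
    return [brief.min_seat_width_cm + span * i / (count - 1) for i in range(count)]

def design_loop(brief, feedback_rounds):
    """Generate designs, then regenerate after each round of feedback.
    Stops early with questions if the brief becomes contradictory."""
    candidates = []
    for feedback in [None, *feedback_rounds]:
        if feedback is not None:
            brief = feedback(brief)  # user feedback updates the brief
        conflicts = find_conflicts(brief)
        if conflicts:
            return {"status": "needs_clarification", "questions": conflicts}
        candidates = generate_candidates(brief)
    return {"status": "ok", "candidates": candidates}

brief = Brief(max_width_cm=80.0, min_seat_width_cm=50.0)
# Hypothetical feedback: the user decides the chair must be narrower.
narrower = lambda b: Brief(max_width_cm=70.0, min_seat_width_cm=b.min_seat_width_cm)

result_ok = design_loop(brief, [narrower])
result_conflict = design_loop(Brief(max_width_cm=40.0, min_seat_width_cm=50.0), [])
print(result_ok)
print(result_conflict["status"])
```

Returning a question instead of a design when the constraints conflict mirrors the step above in which the agent asks the user to resolve "small apartment" versus "very comfortable".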

Practical Applications and Use Cases

The implications of multi-modal generative agents span nearly every industry, promising to automate complex workflows and unlock new possibilities.

1. Advanced Robotics and Automation

- Autonomous Manufacturing & Assembly: Robots equipped with multi-modal perception (vision, tactile sensors, audio) can interpret complex, natural language instructions ("Assemble the engine block using these components," pointing to parts), generate novel assembly sequences on the fly, adapt to variations in component placement or tool availability, and even self-diagnose issues by analyzing sensor data and generating repair instructions.

- Service Robots: Imagine a hospital robot that understands a patient's spoken request ("I need my water bottle from the nightstand"), visually navigates a dynamic environment (avoiding obstacles, identifying the nightstand), uses its manipulators to grasp the bottle, and provides verbal confirmation. If it encounters an unexpected object, it can generate a query to a human operator, perhaps with an image of the obstruction.

- Agricultural Automation: Drones and ground robots can analyze multi-spectral imagery (visual), soil composition data (sensor input), and weather forecasts (text), then generate optimal irrigation patterns, pest control strategies, or harvesting routes, adapting to real-time conditions.

2. Intelligent Content Creation and Design

- Automated Game Development: Agents can interpret a high-level creative brief ("Create a fantasy RPG level with a dark forest and an ancient ruin"), generate 3D models for assets (trees, rocks, ruins), textures, sound effects (forest ambiance, crumbling stone), write dialogue for NPCs, and even generate basic game logic or questlines. This significantly accelerates game prototyping and asset creation.

- Personalized Media Production: A system could take a user's preferred news topics (text), their viewing history (video), and their emotional state (inferred from biofeedback or interaction patterns), then generate a personalized news digest comprising text summaries, relevant video clips, and even a synthesized audio narration in a preferred voice and tone.

- Architectural and Product Design: Architects can provide sketches (image), material preferences (text), budget constraints (numerical), and environmental data (text/numerical). The agent generates multiple 3D building designs, simulates energy efficiency, structural integrity, and material costs, and provides detailed blueprints and material lists.

3. Enhanced Software Development and IT Operations

- Autonomous Code Generation and Debugging: An agent can be given a complex software requirement (text, mockups), generate a multi-file codebase across different languages, create unit tests, identify and fix bugs (by analyzing error logs and generating patches), and even deploy the application to a cloud environment. It can translate a visual UI design into functional front-end code.

- Intelligent IT Support: An agent can analyze system logs (text), interpret network topology diagrams (images), understand user voice complaints ("My internet is slow and I can't access the shared drive"), and autonomously diagnose the root cause, generating a fix (e.g., restarting a service, reconfiguring a network device) or escalating to a human with a detailed multi-modal summary of the problem.

4. Scientific Discovery and Research

- Drug Discovery: Agents can analyze vast biological datasets (genomic sequences, protein structures, chemical properties), hypothesize novel drug compounds (generating molecular structures), simulate their interactions with target proteins, and even generate experimental protocols for synthesis and testing, accelerating the R&D cycle.

- Materials Science: Given desired properties (e.g., strength, conductivity, transparency), agents can generate novel material compositions at the atomic level, simulate their behavior under various conditions, and predict their performance, leading to the discovery of advanced materials.

5. Personalized Learning and Assistance

- Adaptive Tutors: An agent can observe a student's learning style (e.g., visual learner, auditory learner), analyze their responses to questions (text, spoken), and generate personalized explanations (diagrams, analogies, audio explanations), custom exercises, and real-time feedback, adapting the curriculum dynamically.

- Proactive Personal Assistants: Beyond scheduling, these assistants could anticipate needs. For example, on noticing a flight delay (text alert), an assistant could proactively re-route travel (generating new flight/train options), inform contacts (text/email), and even generate a personalized audio message to explain the situation to family.

Challenges and Future Directions

While the potential is immense, several challenges must be addressed before multi-modal generative agents can deliver on it:

- Computational Cost: Training and running these models are extremely resource-intensive.

- Data Scarcity for Multi-Modal Alignment: Creating truly aligned multi-modal datasets is complex and expensive.

- Robustness and Reliability: Ensuring agents behave predictably and reliably in diverse, real-world scenarios is critical, especially when dealing with physical actions.

- Safety and Ethics: As agents become more autonomous and capable of generating diverse content, concerns around bias, misuse, "hallucinations," and alignment with human values intensify. Robust safety protocols, explainable AI (XAI) for agents, and mechanisms for human oversight are paramount.

- Generalization and Transfer Learning: Agents need to generalize well to unseen environments and tasks, and effectively transfer knowledge between different domains.

- Long-Term Memory and Learning: Current models struggle with truly long-term, continuous learning and memory retention beyond their immediate context window.

- Human-Agent Collaboration: Designing intuitive interfaces and interaction paradigms for seamless human-agent teamwork is crucial.
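One simple form the human-oversight mechanism mentioned under Safety and Ethics could take is an approval gate in front of the action module. The sketch below is illustrative only: the risk scores, the threshold, the action names, and the `approve` callback (standing in for a human reviewer) are all assumptions.

```python
def execute_with_oversight(actions, approve, risk_threshold=0.5):
    """Run low-risk actions automatically; route the rest to a human reviewer.

    `actions` is a list of (name, risk) pairs with risk in [0, 1].
    `approve` is a callable standing in for a human decision.
    """
    executed, blocked = [], []
    for name, risk in actions:
        if risk < risk_threshold or approve(name):
            executed.append(name)
        else:
            blocked.append(name)
    return executed, blocked

proposed = [("restart_service", 0.2), ("reconfigure_router", 0.7), ("wipe_disk", 0.95)]
# Hypothetical reviewer policy: approve everything except destructive actions.
reviewer = lambda name: name != "wipe_disk"

executed, blocked = execute_with_oversight(proposed, reviewer)
print("executed:", executed, "blocked:", blocked)
```

Where the risk scores come from is the hard part and is left open here; the gate only shows where a human decision would sit in the loop.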

The future will likely see continued advancements in:

- Smaller, More Efficient Multi-Modal Models: Enabling deployment on edge devices and reducing computational overhead.

- Enhanced Reasoning and Planning Architectures: Integrating more sophisticated symbolic reasoning, causal inference, and hierarchical planning with generative capabilities.

- Advanced Simulation Environments: Providing realistic, scalable platforms for training and testing embodied agents in complex scenarios.

- Standardized Agent Frameworks and Protocols: Facilitating interoperability and collaborative development.

- Focus on Human-Centric AI: Ensuring that these powerful agents augment human capabilities and align with societal values.

Conclusion

The convergence of multi-modal generative AI and autonomous agents is not just a fascinating research area; it's a foundational shift that will power the next generation of intelligent systems. From automating complex design processes to enabling robots that can understand and interact with our world in nuanced ways, the range of possibilities is broad. For AI practitioners and enthusiasts, this domain offers both challenges and opportunities: to build, innovate, and shape a future where AI systems are not just smart, but adaptive, creative, and capable of tackling intricate problems across many forms of information. The journey towards embodied intelligence and comprehensive task automation has begun.