The Convergence of LLMs, Multi-modal AI, and Embodied Agents: A New Era of AI

Explore the pivotal shift in AI as Large Language Models merge with multi-modal capabilities and embodied agents. Discover how this synergy is redefining human-AI interaction and unlocking unprecedented applications, moving beyond isolated AI advancements to integrated intelligent systems.

The realm of artificial intelligence is undergoing a profound transformation, turning what we once considered science fiction into tangible reality. For years, AI advancements often specialized in singular domains: a vision model for image recognition, a language model for text generation, or a robotics controller for specific physical tasks. However, the cutting edge of AI is no longer about isolated capabilities; it's about intelligent systems that can perceive, understand, reason, and act across multiple modalities and within dynamic environments. This pivotal shift marks the convergence of Large Language Models (LLMs) with multi-modal AI and embodied agents – a synergy poised to redefine human-AI interaction and unlock unprecedented applications.

Beyond Text: The Rise of Multi-modal LLMs (MLLMs)

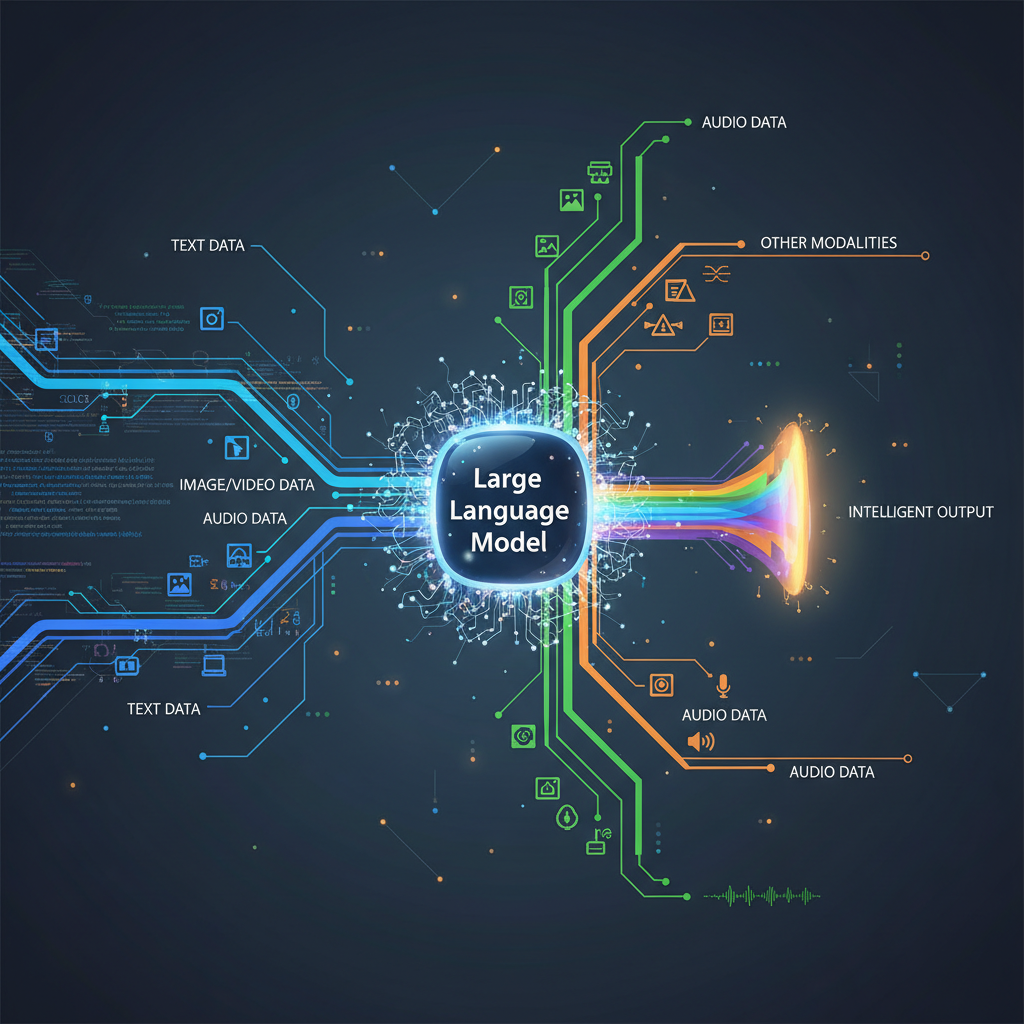

The past few years have been dominated by the spectacular capabilities of Large Language Models. From generating coherent articles to writing code and answering complex questions, LLMs like GPT-4, Llama 3, and Claude 3 have showcased an astonishing grasp of language and abstract reasoning. Yet, the real world is not just text. It's a rich tapestry of sights, sounds, and physical interactions. This realization has spurred the development of Multi-modal Large Language Models (MLLMs), which extend the linguistic prowess of LLMs to encompass other data types, primarily images, audio, and video.

What are MLLMs? At their core, MLLMs are neural networks designed to process and integrate information from multiple modalities. Unlike traditional models that might process an image and then describe it with a separate language model, MLLMs are trained to understand the relationships between these different data types. This allows them to perform tasks that require cross-modal reasoning, such as:

- Image Captioning: Describing the content of an image in natural language.

- Visual Question Answering (VQA): Answering questions about an image, requiring both visual understanding and linguistic comprehension.

- Audio-Visual Speech Recognition: Transcribing speech while also considering visual cues from the speaker's mouth movements.

- Video Understanding: Summarizing video content, identifying events, or answering questions about actions taking place.

- Generative Tasks: Creating images from text descriptions (text-to-image), generating video from text, or even synthesizing speech that matches a given emotion or speaker's voice.

How do MLLMs work? The architecture of MLLMs typically involves several key components:

- Modality-Specific Encoders: Each modality (text, image, audio) first passes through its own specialized encoder. For images, this might be a Vision Transformer (ViT) or a Convolutional Neural Network (CNN). For audio, it could be a specialized audio transformer or recurrent neural network. These encoders transform the raw input into a high-dimensional vector representation (embeddings) that captures the salient features of that modality.

```python
# Conceptual example: Modality encoders
import torch
import torch.nn as nn
from transformers import AutoModel, AutoTokenizer


class ImageEncoder(nn.Module):
    def __init__(self, output_dim):
        super().__init__()
        # Simplified: In reality, this would be a pre-trained ViT or ResNet
        self.conv = nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1)
        self.pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(64, output_dim)

    def forward(self, x):
        x = self.conv(x)
        x = self.pool(x)
        x = x.view(x.size(0), -1)  # Flatten to (batch, 64)
        return self.fc(x)


class TextEncoder(nn.Module):
    def __init__(self, model_name="bert-base-uncased", output_dim=768):
        super().__init__()
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)
        self.model = AutoModel.from_pretrained(model_name)
        self.projection = nn.Linear(self.model.config.hidden_size, output_dim)

    def forward(self, text):
        inputs = self.tokenizer(text, return_tensors="pt", padding=True, truncation=True)
        # Unpack the tokenizer output (input_ids, attention_mask, ...) as keyword arguments
        outputs = self.model(**inputs)
        # Use the [CLS] token embedding for sentence representation
        cls_embedding = outputs.last_hidden_state[:, 0, :]
        return self.projection(cls_embedding)


# Example usage (conceptual); TextEncoder loads pre-trained BERT weights on first use
image_input = torch.randn(1, 3, 224, 224)  # Batch, Channels, Height, Width
text_input = "A cat sitting on a mat."

img_encoder = ImageEncoder(output_dim=768)
txt_encoder = TextEncoder(output_dim=768)

img_embedding = img_encoder(image_input)
text_embedding = txt_encoder(text_input)

print(f"Image embedding shape: {img_embedding.shape}")
print(f"Text embedding shape: {text_embedding.shape}")
```

- Cross-Modal Alignment: The embeddings from different modalities need to be aligned into a common latent space. This is often achieved through contrastive learning, where the model learns to pull embeddings of semantically related multi-modal pairs closer together and push unrelated pairs apart. For example, an image of a cat and the text "a cat" should have similar embeddings.

- Fusion Mechanism: Once aligned, the embeddings are fed into a central fusion mechanism, often a large transformer-based decoder or a multi-modal transformer. This component processes the combined information, allowing the model to reason across modalities and generate the desired output (e.g., text, image, or another modality).
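The contrastive alignment step described above can be illustrated with a small, dependency-free sketch. It computes a symmetric cross-entropy loss over cosine similarities, which is one common way to set up a contrastive objective; the function names, the toy 2-D embeddings, and the temperature of 0.07 are assumptions chosen for illustration, not details taken from any particular model.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def similarity_matrix(image_embs, text_embs):
    """Cosine similarity between every image embedding and every text embedding."""
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(img, txt)) for txt in texts] for img in images]


def diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct match for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for numerical stability
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Average of the image-to-text and text-to-image losses (temperature is assumed)."""
    sims = similarity_matrix(image_embs, text_embs)
    logits = [[s / temperature for s in row] for row in sims]
    logits_transposed = [list(column) for column in zip(*logits)]
    return 0.5 * (diagonal_cross_entropy(logits) + diagonal_cross_entropy(logits_transposed))


# Toy batch: image i is paired with text i
image_embeddings = [[1.0, 0.0], [0.0, 1.0]]
matching_texts = [[0.9, 0.1], [0.1, 0.9]]
mismatched_texts = [[0.1, 0.9], [0.9, 0.1]]

loss_aligned = symmetric_contrastive_loss(image_embeddings, matching_texts)
loss_swapped = symmetric_contrastive_loss(image_embeddings, mismatched_texts)
print(f"Loss with matching pairs:   {loss_aligned:.4f}")
print(f"Loss with mismatched pairs: {loss_swapped:.4f}")
```

The loss is low when each image is most similar to its own caption and high when the pairs are swapped, which is the pull-together, push-apart behavior the alignment step relies on.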

Examples of MLLMs:

- OpenAI's GPT-4o: Accepts text, audio, and image inputs and can generate text, audio, and image outputs. It can understand spoken commands, analyze images, and respond with synthesized speech, all within a single model.

- Google's Gemini: Designed from the ground up to be multi-modal, handling text, code, audio, image, and video inputs.

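- LLaVA (Large Language and Vision Assistant): An open-source MLLM, developed by researchers at the University of Wisconsin-Madison, Microsoft Research, and Columbia University, that combines a vision encoder with an LLM for visual instruction following.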

- Stable Diffusion (and variants): While primarily text-to-image, these models demonstrate multi-modal generation by translating text prompts into visual outputs.

The advent of MLLMs signifies a crucial step towards AI that can perceive the world more holistically, mirroring human perception where sight, sound, and language are intrinsically linked.

Embodied AI: Giving LLMs a Body

While MLLMs grant AI the ability to perceive and generate across modalities, the next frontier is to equip these intelligent systems with the capacity to act within the physical or simulated world. This is the essence of Embodied AI: giving AI models "bodies" – whether in robotics, virtual reality, or simulation – and allowing them to interact with their environment.

Why Embodied AI? Traditional AI often operates in a disembodied, abstract space. It processes data, makes predictions, and generates outputs, but it doesn't directly experience the consequences of its actions in a dynamic environment. Embodied AI addresses this limitation by:

- Grounding: Providing a physical grounding for abstract concepts. An LLM might know the definition of "heavy," but an embodied agent can learn what "heavy" truly means by attempting to lift an object.

- Interaction: Enabling active exploration and learning through interaction. Agents can manipulate objects, navigate spaces, and learn from trial and error.

- Situational Awareness: Developing a deeper understanding of context and causality by experiencing the world directly.

- Real-world Problem Solving: Moving beyond theoretical solutions to practical applications in robotics, virtual assistants, and more.

LLMs as the "Brain" for Embodied Agents: The convergence of LLMs and embodied AI is particularly exciting because LLMs can serve as the high-level "brain" for these agents. They bring powerful capabilities to embodiment:

- Task Planning and Reasoning: LLMs can take high-level natural language instructions (e.g., "Make me coffee") and break them down into a sequence of executable sub-tasks (e.g., "Go to kitchen," "Get mug," "Fill with water," "Insert coffee pod," "Press brew"). They can reason about preconditions, effects, and potential obstacles.

- Natural Language Interaction: LLMs enable agents to understand complex, open-ended commands from humans, ask clarifying questions, and provide detailed explanations of their actions or intentions. This makes human-robot interaction (HRI) far more intuitive and natural.

- Knowledge Integration: LLMs are pre-trained on vast amounts of text data, giving them a broad understanding of the world, common sense, and how objects and actions relate. This knowledge can guide an embodied agent's decisions, even in novel situations.

- Learning from Experience: While LLMs themselves don't directly "learn" motor skills, they can process observations and feedback from the environment (e.g., "The robot failed to grasp the bottle") and use this information to refine their internal plans or strategies for future attempts. They can also generate code or instructions for lower-level control policies based on observed successes or failures.
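The feedback-driven refinement described in the last point can be sketched as a plan, execute, replan loop. This is a minimal illustration under stated assumptions: `mock_llm_plan`, `mock_llm_replan`, and `execute_step` are hypothetical stand-ins for real LLM calls and a real robot controller, and the failure model is hard-coded.

```python
def mock_llm_plan(instruction):
    """Hypothetical stand-in for an LLM call that returns an initial plan."""
    return ["locate bottle", "grasp bottle", "hand bottle to user"]


def mock_llm_replan(plan, failed_step, feedback):
    """Hypothetical stand-in for an LLM call that revises a plan from feedback.

    The 'revision' is hard-coded here: insert a preparation step before
    the step that failed, then retry it.
    """
    index = plan.index(failed_step)
    return plan[:index] + ["reposition gripper", failed_step] + plan[index + 1:]


def execute_step(step, completed):
    """Toy executor: grasping fails unless the gripper was repositioned first."""
    if step == "grasp bottle" and "reposition gripper" not in completed:
        return False, "The robot failed to grasp the bottle"
    return True, "ok"


def run_with_replanning(instruction, max_replans=3):
    """Execute a plan step by step, asking the planner to revise it on failure."""
    plan = mock_llm_plan(instruction)
    completed = []
    replans = 0
    position = 0
    while position < len(plan):
        step = plan[position]
        success, feedback = execute_step(step, completed)
        if success:
            completed.append(step)
            position += 1
        elif replans < max_replans:
            print(f"Feedback: {feedback}; replanning")
            plan = mock_llm_replan(plan, step, feedback)
            replans += 1
            # position is unchanged, so the newly inserted step runs next
        else:
            raise RuntimeError(f"Giving up on step: {step}")
    return completed, replans


completed, replans = run_with_replanning("Hand me the bottle")
print(completed)
print(f"Replans needed: {replans}")
```

The LLM never learns a motor skill in this loop; it only turns textual feedback into a revised step sequence, which matches the division of labor described above.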

Architectural Approaches for Embodied LLMs:

- Hierarchical Control: A common paradigm where the LLM acts as a high-level planner, generating abstract plans or code. These plans are then translated into low-level motor commands by specialized robotic control policies or skill modules. The LLM might monitor execution and replan if necessary.

```python
# Conceptual example: LLM as a high-level planner
def llm_plan_task(instruction):
    # In a real scenario, this would be an API call to an LLM
    # that generates a sequence of actions or Python code.
    text = instruction.lower()
    # Match on keywords so that phrasings such as
    # "Please make me a cup of coffee." are recognized.
    if "coffee" in text:
        return [
            "navigate_to_kitchen()",
            "find_object('mug')",
            "grasp_object('mug')",
            "navigate_to_coffee_machine()",
            "place_object('mug', 'coffee_machine_tray')",
            "find_object('coffee_pod')",
            "grasp_object('coffee_pod')",
            "insert_object('coffee_pod', 'coffee_machine_slot')",
            "press_button('coffee_machine_brew')",
            "wait(60)",  # Wait for brewing
            "retrieve_object('mug')",
            "navigate_to_user()",
        ]
    elif "clean" in text and "table" in text:
        return [
            "navigate_to_table()",
            "detect_objects_on_table()",
            "for obj in detected_objects: pick_and_place_in_bin(obj)",
            "wipe_surface('table')",
        ]
    else:
        return ["I don't understand that instruction yet."]


# Example of a low-level skill module (simplified)
class RobotSkills:
    def navigate_to_kitchen(self):
        print("Robot navigating to kitchen...")

    def find_object(self, obj):
        print(f"Robot finding {obj}...")

    def grasp_object(self, obj):
        print(f"Robot grasping {obj}...")

    # ... other skills


# Execution flow
# user_instruction = "Please make me a cup of coffee."
# plan = llm_plan_task(user_instruction)
#
# robot = RobotSkills()
# for action_code in plan:
#     try:
#         # eval is used for illustration only; steps that are not a single
#         # call on a defined skill raise and are reported below
#         eval(f"robot.{action_code}")  # Execute the skill
#     except Exception as e:
#         print(f"Error executing {action_code}: {e}")
#         # LLM could be prompted to replan here
```

- Vision-Language-Action (VLA) Models: These models directly map multi-modal observations (visuals, language instructions) to actions. They often leverage MLLMs that are fine-tuned on large datasets of robot trajectories with corresponding visual observations and language commands.

- Foundation Models for Robotics: Researchers are exploring how pre-trained LLMs and MLLMs can act as "foundation models" for robotics. Just as foundation models generalize across many NLP tasks, the goal is to have a single model that can adapt to a wide range of robotic tasks with minimal fine-tuning, providing high-level reasoning, task planning, and even low-level control policies.
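For the VLA approach above, one way a language-model backbone can emit actions is to discretize each continuous action dimension into bins, so that an action becomes a short sequence of integer tokens. The sketch below assumes actions normalized to [-1, 1] and 256 bins; those values and the function names are illustrative assumptions, not the scheme of any specific model.

```python
def discretize_action(action, low=-1.0, high=1.0, num_bins=256):
    """Map each continuous action dimension to an integer bin id in [0, num_bins - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)
        fraction = (clipped - low) / (high - low)
        # The upper bound itself would land in bin num_bins, so clamp it
        tokens.append(min(int(fraction * num_bins), num_bins - 1))
    return tokens


def undiscretize_action(tokens, low=-1.0, high=1.0, num_bins=256):
    """Map bin ids back to the centre value of each bin."""
    width = (high - low) / num_bins
    return [low + (token + 0.5) * width for token in tokens]


# Hypothetical 3-dimensional action, e.g. (dx, dy, gripper)
action = [0.25, -0.5, 1.0]
tokens = discretize_action(action)
recovered = undiscretize_action(tokens)

print(f"Action tokens: {tokens}")
print(f"Recovered action: {recovered}")
```

The round trip loses at most half a bin width per dimension, which is the precision cost of treating actions as tokens.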

Practical Applications and Use Cases

The convergence of MLLMs and embodied AI isn't just a theoretical concept; it's driving innovation across numerous sectors:

- Enhanced Robotics:

  - Household Robots: Imagine a robot that can understand complex, open-ended instructions like "Tidy up the living room and put the books back on the shelf," and then execute this task by identifying objects, navigating obstacles, and performing manipulation. MLLMs provide the understanding, and embodied AI provides the physical capability.

  - Industrial Automation: Robots in factories can be programmed and reconfigured using natural language, adapting to new product lines or tasks with greater flexibility.

  - Exploration & Disaster Response: Autonomous robots equipped with MLLM brains can interpret sensory data from unfamiliar environments, plan exploration routes, identify points of interest, and communicate findings in natural language, making them invaluable for hazardous missions.

- Advanced Virtual Assistants & Avatars:

  - Context-Aware Assistants: Virtual assistants that can not only understand your spoken commands but also interpret your gestures, facial expressions, and the objects in your environment (via camera input) to provide more relevant and empathetic assistance.

  - Immersive Gaming & VR: NPCs (Non-Player Characters) in games or virtual reality environments can become incredibly intelligent and responsive, understanding player actions, verbal cues, and the virtual world around them, leading to more dynamic and engaging experiences.

  - Digital Twins & Simulation: Embodied AI agents can interact within digital twins of real-world systems (e.g., factories, cities) to test scenarios, optimize processes, and train human operators in a safe, virtual environment.

- Creative Content Generation:

  - Multi-modal Storytelling: MLLMs can generate not just text, but also accompanying images, video clips, or even 3D models based on a single narrative prompt. This empowers artists, designers, and content creators with powerful new tools for rapid prototyping and creative expression.

  - Personalized Experiences: Generating dynamic, multi-modal content that adapts in real-time to user preferences, context, and interaction.

- Scientific Discovery & Engineering:

  - Autonomous Labs: Embodied AI robots can conduct experiments, analyze results using MLLMs, formulate new hypotheses, and iterate on experimental designs in chemistry, materials science, or biology labs, accelerating discovery.

  - Drug Discovery: Simulating molecular interactions and protein folding in virtual environments with embodied agents can help identify promising drug candidates faster.

- Accessibility:

  - Developing AI agents that can better assist individuals with disabilities by interpreting complex sensory information (e.g., reading sign language, describing visual scenes for the visually impaired) and responding in a multi-modal fashion (e.g., haptic feedback, synthesized speech, visual cues).

Technical Depth & Challenges Ahead

While the promise is immense, the path to fully realized multi-modal, embodied AI is fraught with significant technical challenges:

- Data Integration and Alignment: Training MLLMs requires vast, high-quality datasets where different modalities are closely aligned in time and semantic meaning. Creating such datasets (e.g., video clips with synchronized audio, text descriptions, and robot actions) is incredibly expensive and time-consuming. Techniques like self-supervised learning and synthetic data generation are crucial here.

- Robust Reasoning and Grounding: LLMs excel at linguistic reasoning, but grounding this reasoning in the physical world remains a hurdle. An LLM might know that "a cup holds liquid," but an embodied agent needs to understand the physics of pouring, the stability of the cup, and the potential for spills. Ensuring robust common-sense reasoning and physical grounding is paramount for reliable embodied agents.

- Safety, Ethics, and Control: As embodied agents become more autonomous and capable, concerns around safety, ethical decision-making, bias propagation, and accountability escalate. How do we ensure these agents operate safely in human environments? How do they handle moral dilemmas? Establishing clear control mechanisms, transparency, and ethical guidelines is critical.

- Computational Resources: Training and deploying these large multi-modal, embodied models demand immense computational power. The sheer scale of parameters and the complexity of processing diverse data types push the limits of current hardware and infrastructure. Efficiency in model architecture and training is an active research area.

- Real-time Interaction and Low Latency: For seamless human-agent interaction, embodied MLLMs need to perceive, process, reason, and respond with very low latency. Delays can break immersion in virtual environments or hinder effective collaboration with robots. Optimizing models for real-time performance is a key challenge.

- Sim-to-Real Transfer: Training embodied agents in simulation is safer and more efficient, but transferring skills learned in a perfect, simplified simulation to the messy, unpredictable real world is notoriously difficult. The "sim-to-real gap" requires robust domain randomization, advanced transfer learning techniques, and continuous learning in the real environment.

- Long-Term Memory and Continuous Learning: Current LLMs have limited context windows, hindering long-term memory and continuous learning over extended interactions or lifetimes. Embodied agents need to remember past experiences, adapt to new situations, and continuously improve their skills without catastrophic forgetting.
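The domain randomization mentioned under sim-to-real transfer can be sketched as sampling a fresh simulator configuration for every training episode, so that a policy never sees exactly the same physics or visuals twice. The parameter names and ranges below are hypothetical and chosen only for illustration; a real setup would pass these values to whatever simulator is in use.

```python
import random


def sample_sim_params(rng):
    """Sample one randomized simulator configuration (names and ranges are assumed)."""
    return {
        "friction": rng.uniform(0.4, 1.2),
        "object_mass_kg": rng.uniform(0.1, 2.0),
        "light_intensity": rng.uniform(0.3, 1.5),
        "camera_jitter_deg": rng.gauss(0.0, 2.0),
    }


def randomized_episodes(num_episodes, seed=0):
    """Return one parameter set per episode, reproducible for a given seed."""
    rng = random.Random(seed)
    return [sample_sim_params(rng) for _ in range(num_episodes)]


episodes = randomized_episodes(5)
for index, params in enumerate(episodes):
    formatted = ", ".join(f"{name}={value:.2f}" for name, value in params.items())
    print(f"Episode {index}: {formatted}")
```

Seeding the generator keeps experiments reproducible while still exposing the agent to varied conditions across episodes.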

The Next Frontier of Intelligence

The convergence of Large Language Models with multi-modal AI and embodied agents represents a monumental leap in the quest for truly intelligent systems. It moves AI beyond abstract computation into the realm of situated, interactive intelligence – where agents can perceive, reason, act, and communicate within dynamic environments, much like humans do.

For AI practitioners, understanding this convergence is not merely academic; it's crucial for designing the next generation of AI systems that are more capable, versatile, and seamlessly integrated into our physical and digital worlds. For enthusiasts, it offers a thrilling glimpse into a future where AI is not just a tool on a screen, but an active, intelligent participant in our daily lives, capable of understanding our world in all its rich, multi-modal complexity. The challenges are significant, but the potential rewards – from revolutionizing robotics and human-computer interaction to accelerating scientific discovery – are truly transformative. We are witnessing the dawn of a new era of AI, one where intelligence is not just about thinking, but about experiencing and acting.