Unlocking AI's Senses: The Rise of Multimodal Large Language Models

Explore the transformative power of multimodal LLMs, moving beyond text to integrate sight, sound, and other senses. This new era of AI promises to mirror human perception and interaction more closely than ever before.

The world of artificial intelligence is undergoing a profound transformation, driven by the relentless progress of large language models (LLMs). These textual behemoths have redefined what's possible in natural language understanding and generation, from crafting compelling narratives to summarizing complex documents. Yet, as powerful as they are, LLMs traditionally operate within the confines of text. But what if AI could not only read and write but also see, hear, and even interpret the nuances of our physical world? This is the promise of multimodality, and its convergence with LLMs is ushering in a new era of AI, one that mirrors human perception and interaction more closely than ever before.

Beyond Text: Why Multimodality Matters

Humans don't experience the world through text alone. We perceive and process information through a rich tapestry of senses: sight, sound, touch, and more. A simple conversation isn't just about the words spoken; it's about facial expressions, tone of voice, gestures, and the surrounding environment. Text-only AI, while incredibly sophisticated, inherently misses these crucial contextual cues, leading to a semantic gap between AI understanding and human experience.

The recent explosion in multimodal AI development aims to bridge this gap. By integrating different data types – images, audio, video, and text – into a unified understanding framework, AI can begin to grasp the richness and complexity of the real world. This isn't merely an incremental improvement; it's a paradigm shift towards more robust, contextually aware, and truly intelligent systems.

The Rise of Multimodal LLMs: A Technical Deep Dive

The core idea behind multimodal LLMs is to enable a single model to process and reason across multiple data modalities. This often involves extending the architectural principles that made LLMs so successful – primarily the transformer architecture – to handle diverse input types.

Vision-Language Models (VLMs)

This is arguably the most prominent and rapidly advancing area of multimodal AI. VLMs combine visual information (images, video) with textual understanding.

How they work: At a high level, VLMs typically employ separate encoders for each modality, which then project these different data types into a shared latent space.

- Visual Encoder: Often a Convolutional Neural Network (CNN) or a Vision Transformer (ViT) processes images or video frames, extracting visual features.

- Textual Encoder: A standard transformer-based LLM processes the text, producing contextualized token embeddings.

- Fusion Mechanism: The magic happens here. Various techniques are used to combine the visual and textual representations:

- Cross-Attention: Text tokens can attend to visual patches, and vice versa, allowing the model to learn relationships between specific words and visual elements.

- Concatenation & Projection: Features from both modalities are concatenated and then passed through additional layers to learn joint representations.

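- Contrastive Learning: Strictly speaking, this is an alignment objective rather than a fusion step. Models like CLIP (Contrastive Language-Image Pre-training) train the image and text encoders so that matching image-caption pairs land close together in the shared latent space, while mismatched pairs are pushed further apart. The resulting alignment enables zero-shot transfer, such as classifying an image by comparing it against text descriptions of candidate labels.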
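To make the contrastive objective concrete, here is a minimal NumPy sketch of a CLIP-style symmetric loss. It assumes a batch in which row i of the image embeddings and row i of the text embeddings form a matching pair. The function names and the random toy embeddings are illustrative and not taken from any library. The temperature of 0.07 mirrors the initial value reported for CLIP, where the temperature is learned rather than fixed.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_style_loss(image_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss; row i of each array is assumed to be a matching pair."""
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature  # (batch, batch) scaled cosine similarities
    idx = np.arange(len(logits))        # matching pairs sit on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image should pick its caption
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each caption should pick its image
    return float((loss_i2t + loss_t2i) / 2)


rng = np.random.default_rng(0)
text = rng.normal(size=(4, 8))                     # toy caption embeddings
aligned = text + 0.01 * rng.normal(size=(4, 8))    # image embeddings close to their own captions
shuffled = aligned[[1, 2, 3, 0]]                   # same images, paired with the wrong captions
print(clip_style_loss(aligned, text), clip_style_loss(shuffled, text))
```

The aligned batch should yield a much lower loss than the shuffled one, because each image's most similar caption is its own. In real training, the embeddings come from the two encoders, and the gradients of this loss update both.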

Examples and Capabilities:

- Image Captioning: Given an image, the model generates a descriptive text caption. Early models like Show and Tell (Google) used CNNs for vision and LSTMs for text. Modern approaches leverage transformers end-to-end.

- Example: Input image of a cat sleeping on a keyboard -> Output: "A fluffy cat is asleep on a computer keyboard."

- Visual Question Answering (VQA): The model answers natural-language questions about the content of an image. Depending on the question, this can require not just recognition but also reasoning.

- Example: Input image of a kitchen with a person cooking, Question: "What color is the person's shirt?" -> Output: "Blue."

- Visual Grounding: Identifying specific objects or regions in an image based on a textual query. Linking words to exact image regions is crucial whenever a system must refer to or act on one particular object.

- Example: Input image of a park with several people, Query: "Locate the person wearing a red hat." -> Output: Bounding box around the specified person.

- Image-to-Text with LLMs (e.g., GPT-4V, Gemini): These advanced models take an image and a text prompt, then perform complex analysis. They can not only describe but also interpret, explain, and reason about visual content.

- Example: Input: Image of a meme, Prompt: "Explain this meme." -> Output: A detailed explanation of the meme's cultural context and humor.

- Instruction Following: Models can follow complex instructions that involve both text and visual inputs.

- Example: "Find the yellow car in this parking lot and tell me its license plate number."
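Grounding outputs like the bounding box in the visual grounding example are commonly scored by intersection-over-union (IoU) against a human-annotated box. The sketch below assumes boxes are given as (x_min, y_min, x_max, y_max) in pixels. Both boxes are made-up values, and the 0.5 threshold is a common convention rather than a universal rule.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes get an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (40, 30, 120, 200)  # hypothetical box returned for "the person wearing a red hat"
reference = (50, 20, 130, 210)  # hypothetical human-annotated box
score = iou(predicted, reference)
print(f"IoU = {score:.3f}, counted correct at 0.5: {score >= 0.5}")
# IoU = 0.704, counted correct at 0.5: True
```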

Audio-Language Models

While VLMs dominate the current discourse, integrating audio is equally vital for a holistic understanding of human interaction and the environment.

How they work: Similar to VLMs, audio-language models use dedicated encoders for audio data.

- Audio Encoder: Processes raw audio waveforms or spectrograms (time-frequency representations showing how a signal's energy at each frequency changes over time) using CNNs or specialized audio transformers, learning features that capture properties such as pitch, timbre, and rhythm.

- Textual Encoder: Standard LLM for text.

- Fusion: Cross-attention or other fusion mechanisms combine audio and text features.
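The cross-attention fusion named here, which also appears among the VLM fusion mechanisms, can be sketched in a few lines of NumPy. This is a single-head illustration under simplifying assumptions. Random weights and features stand in for trained parameters and real encoder outputs. The text states supply the queries, while the audio frames supply the keys and values.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(text_states, other_states, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    Queries come from the text tokens; keys and values come from the other
    modality (audio frames here, image patches in a VLM).
    """
    q = text_states @ w_q                    # (n_text, d_k)
    k = other_states @ w_k                   # (n_other, d_k)
    v = other_states @ w_v                   # (n_other, d_k)
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (n_text, n_other)
    weights = softmax(scores, axis=-1)       # each text token's distribution over frames
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model, d_k = 16, 8
text_tokens = rng.normal(size=(5, d_model))    # 5 hypothetical text-token states
audio_frames = rng.normal(size=(40, d_model))  # 40 hypothetical audio-frame features
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

fused, attn = cross_attention(text_tokens, audio_frames, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (5, 8) (5, 40)
```

Each row of `attn` sums to 1, so every text token receives a weighted average of the audio-frame values. Production models use multiple heads, project the result back to the model width, and add it to the text stream through a residual connection. Those steps are omitted here.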

Examples and Capabilities:

- Speech Recognition & Synthesis: Although both are mature fields, integrating them with LLMs allows for more context-aware transcription and more natural, emotionally nuanced speech generation.

- Audio Event Detection & Description: Identifying and describing non-speech sounds in an audio clip (e.g., "dog barking," "car horn," "music playing").

- Multimodal Speech Understanding: Understanding not just the literal words spoken, but also the speaker's emotional state, intent, and even linking it to visual cues (e.g., detecting sarcasm from tone and facial expression).
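The spectrogram input mentioned for the audio encoder can be computed with a short-time Fourier transform. The sketch below is a plain magnitude STFT in NumPy. It assumes 16 kHz audio with 25 ms frames (400 samples) and a 10 ms hop (160 samples), a common speech setup. Many models additionally apply mel filtering and a logarithm, which are omitted here. The input is a synthetic 440 Hz tone rather than real speech.

```python
import numpy as np


def magnitude_spectrogram(signal: np.ndarray, frame_len: int = 400, hop: int = 160) -> np.ndarray:
    """Magnitude STFT: slice the waveform into overlapping frames, window them, take |FFT|."""
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)  # tapers frame edges to reduce spectral leakage
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # (n_frames, frame_len // 2 + 1)


sr, frame_len = 16_000, 400
t = np.arange(sr) / sr                 # one second of sample times
tone = np.sin(2 * np.pi * 440 * t)     # synthetic 440 Hz test signal
spec = magnitude_spectrogram(tone, frame_len=frame_len)
peak_bin = int(spec.mean(axis=0).argmax())
print(spec.shape, peak_bin * sr / frame_len)  # (98, 201) 440.0
```

With a 400-sample frame at 16 kHz, each frequency bin spans 40 Hz, so the 440 Hz tone peaks in bin 11.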

Foundation Models for Multimodality

A significant trend is the development of "foundation models" that are pre-trained on massive, diverse multimodal datasets. Examples include Google's Gemini, the open-source LLaVA, and research models such as DeepMind's Flamingo and Google's CoCa. These models aim to be generalists, capable of performing a wide array of tasks across modalities with little or no task-specific fine-tuning (zero-shot or few-shot learning). They learn robust, transferable representations that can be applied to new tasks and domains.
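With an aligned model, zero-shot use often reduces to a similarity lookup. You embed a text description for each candidate label, embed the image, and pick the closest description. The sketch below assumes the embeddings have already been produced by aligned image and text encoders. The three-dimensional vectors are hand-made placeholders, and the "a photo of a ..." phrasing follows the prompt style popularized by CLIP.

```python
import numpy as np


def zero_shot_classify(image_emb, label_embs, labels):
    """Return the label whose text embedding has the highest cosine similarity to the image."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = label_embs / np.linalg.norm(label_embs, axis=1, keepdims=True)
    sims = txt @ img  # one cosine similarity per candidate label
    return labels[int(np.argmax(sims))], sims


labels = ["a photo of a cat", "a photo of a dog", "a photo of a car"]
# Hypothetical embeddings standing in for the outputs of aligned image and text encoders.
label_embs = np.array([[0.9, 0.1, 0.0],
                       [0.2, 0.9, 0.1],
                       [0.0, 0.1, 0.95]])
image_emb = np.array([0.8, 0.3, 0.05])

best, sims = zero_shot_classify(image_emb, label_embs, labels)
print(best)  # a photo of a cat
```

Adding a new class only requires writing a new text description, with no retraining. That property is what makes this approach zero-shot.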

Practical Applications: Transforming Industries and Experiences

The convergence of LLMs and multimodality isn't just an academic pursuit; it's a catalyst for groundbreaking applications across virtually every sector.

1. Enhanced Customer Service & Support

Imagine an AI agent that can not only understand your text query but also analyze a screenshot of an error message, a product image, or even a short video clip showing the issue. This leads to:

- Faster Resolution: AI can quickly diagnose problems by "seeing" what the customer sees.

- Reduced Frustration: Customers don't need to struggle to describe visual issues verbally.

- Proactive Support: Analyzing customer sentiment from voice, text, and even facial expressions in video calls can allow for more empathetic and tailored responses.

2. Content Creation & Marketing

Multimodal LLMs are revolutionizing how content is generated and consumed.

- Automated Asset Generation: From a single text prompt, AI can draft the building blocks of a marketing campaign, including text copy, relevant images, and even short video clips.

- Personalized Content: AI can tailor content (visuals, text, audio) based on a user's inferred preferences from their past interactions across different media.

- Creative Augmentation: Designers and marketers can use these tools to rapidly prototype ideas, explore visual styles, and generate variations, significantly accelerating the creative process.

3. Accessibility

This technology holds immense potential for making the digital and physical worlds more accessible.

- Context-Aware Image Descriptions: For visually impaired users, AI can generate rich, detailed, and contextually relevant descriptions of images and video content, going beyond simple object recognition to explain scenes and actions.

- Real-time Multimodal Translation: Imagine a system that can not only translate spoken language but also interpret sign language from video feeds, providing a more comprehensive communication bridge.

4. Robotics and Autonomous Systems

For robots to truly interact with the world, they need to understand it like humans do.

- Natural Language Instruction: Robots can interpret complex natural language commands ("Pick up the red apple from the counter and put it in the bowl") by visually identifying objects and understanding spatial relationships.

- Environmental Awareness: Autonomous vehicles can interpret traffic signs, road conditions, human gestures, and even the intent of other drivers, leading to safer and more efficient navigation.

- Human-Robot Collaboration: Robots can better anticipate human actions and respond appropriately by analyzing visual cues and verbal commands in real-time.

5. Healthcare

The medical field stands to benefit significantly from multimodal AI.

- Enhanced Diagnostics: AI can assist doctors by analyzing medical images (X-rays, MRIs, CT scans) alongside patient notes, electronic health records, and even symptoms described verbally, potentially supporting faster and more accurate diagnoses.

- Patient Monitoring: Combining vital signs data with speech patterns, video feeds (e.g., monitoring gait or facial expressions), and sensor data can provide a holistic view of patient well-being, enabling early detection of issues.

- Scientific Document Understanding: AI can analyze complex scientific papers, understanding not just the text but also diagrams, graphs, and formulas, accelerating research and discovery.

6. Education

Multimodal LLMs can transform learning experiences.

- Interactive Learning Platforms: AI tutors can explain complex diagrams, graphs, and visual information in textbooks, responding to student questions whether they point to an image, describe a concept, or type a query.

- Personalized Learning: AI can adapt teaching methods and content based on a student's learning style, which can be inferred from their interactions across different modalities (e.g., visual learners might get more diagrams, auditory learners more spoken explanations).

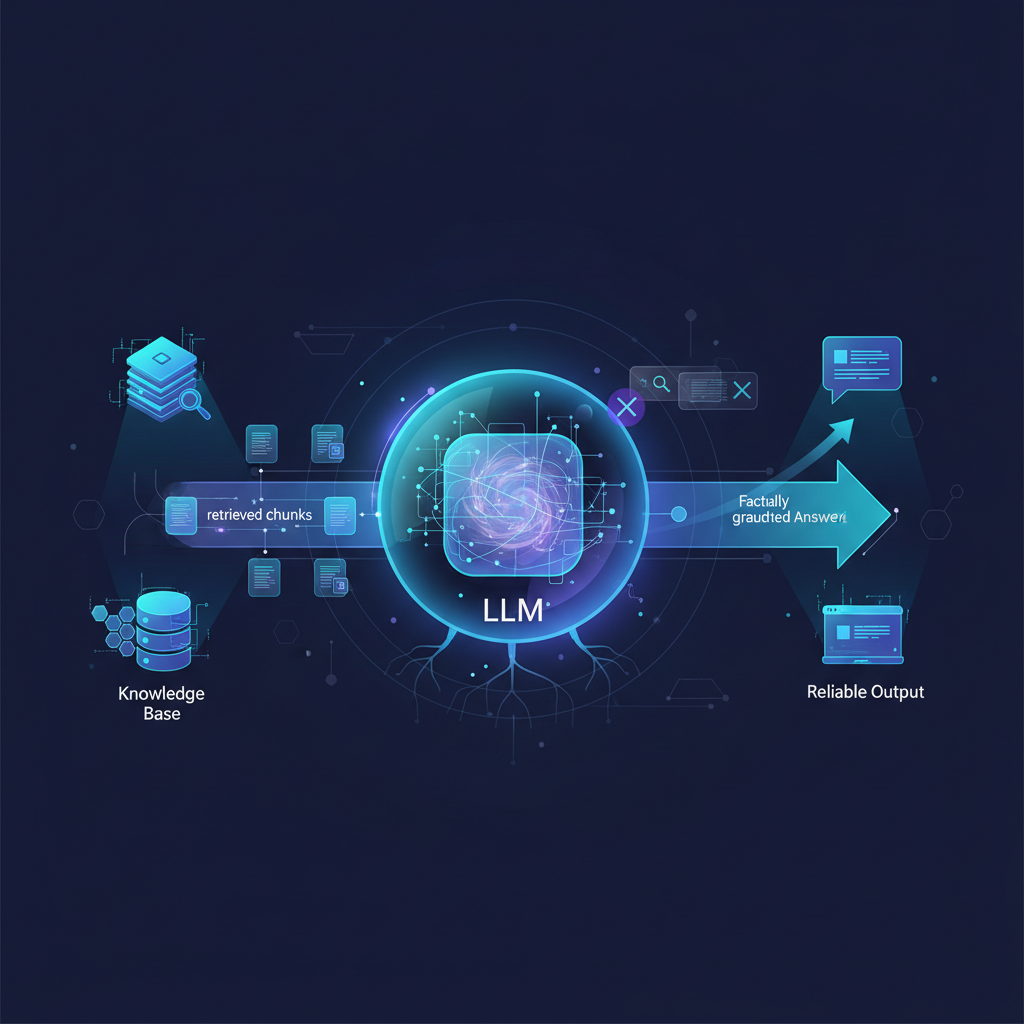

7. Search and Information Retrieval

The future of search is multimodal.

- Universal Search: Users can query with text, images ("Find me recipes that use these ingredients [image] and are quick to make [text]"), or voice, and receive results that span all modalities (e.g., relevant articles, videos, product images).

- Visual Search: Upload an image of an item and find similar products, information, or locations.
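Some queries mix an image with a text constraint, like the recipe example. In the simplest case, you can blend the two query embeddings and rank an index by cosine similarity. This is a hedged late-fusion baseline, not a description of how any particular search product works. The weighting parameter `alpha`, the item titles, and the toy embeddings are all hypothetical. The embedding dimensions loosely stand for "uses the pictured ingredients", "is quick", and "other".

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def composed_search(image_query, text_query, index, k=2, alpha=0.5):
    """Rank index rows against a weighted blend of an image query and a text query.

    Late-fusion baseline: normalize each query, mix them with weight alpha,
    renormalize, then score every item by cosine similarity.
    """
    query = normalize(alpha * normalize(image_query) + (1 - alpha) * normalize(text_query))
    scores = normalize(index) @ query
    top = np.argsort(-scores)[:k]  # indices of the k highest-scoring items
    return top, scores[top]


# Hypothetical embeddings; dimensions loosely mean [uses pictured ingredients, is quick, other].
titles = ["slow braise with these ingredients", "quick stir-fry with these ingredients",
          "quick salad with other ingredients", "unrelated article"]
index = np.array([[1.0, 0.0, 0.2],
                  [0.8, 0.8, 0.0],
                  [0.0, 1.0, 0.3],
                  [0.0, 0.0, 1.0]])
image_query = np.array([1.0, 0.0, 0.0])  # stands in for the photo of ingredients
text_query = np.array([0.0, 1.0, 0.0])   # stands in for "quick to make"

top, scores = composed_search(image_query, text_query, index)
print([titles[i] for i in top])
# ['quick stir-fry with these ingredients', 'slow braise with these ingredients']
```

Real systems typically learn how to combine the modalities rather than fixing a single blend weight. Still, the sketch shows why a shared embedding space makes mixed-modality queries possible at all.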

Technical Challenges and Future Directions

Despite the incredible progress, the path to truly human-like multimodal AI is fraught with challenges.

- Data Scarcity and Alignment: Creating truly massive, high-quality multimodal datasets where different modalities are perfectly aligned and semantically rich is incredibly difficult and expensive. The sheer diversity of real-world data makes this a monumental task.

- Architectural Design and Fusion: Designing efficient and effective architectures that can seamlessly process, fuse, and reason across diverse data types (pixels, audio waveforms, text tokens) without losing information or becoming computationally prohibitive remains a key research area. How do we optimally combine information from disparate sources?

- Cross-Modal Reasoning: Moving beyond simple recognition or description to complex inference that requires deep integration of information from multiple modalities is a significant hurdle. For example, understanding sarcasm requires combining linguistic, auditory (tone), and visual (facial expression) cues.

- Evaluation Metrics: How do we accurately evaluate the performance of a multimodal model, especially for subjective tasks like creative generation, complex reasoning, or nuanced understanding of human emotion? Traditional metrics often fall short.

- Interpretability and Explainability: Understanding why a multimodal model made a particular decision, especially when multiple inputs are involved, is even harder than with unimodal models. This is crucial for trust and debugging.

- Computational Cost: Training and deploying these models are extremely resource-intensive, demanding massive computational power, energy, and specialized hardware. This limits accessibility and scalability.

- Catastrophic Forgetting: Ensuring that models trained on new modalities don't forget capabilities learned on previous ones (e.g., a VLM should still be an excellent text-only LLM) is an ongoing research challenge.

- Ethical Considerations: Multimodal AI amplifies existing ethical concerns. Biases present in training data can propagate and even be exacerbated across modalities. The potential for misuse (e.g., deepfakes, sophisticated misinformation, surveillance) necessitates careful development and robust safeguards.
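Simple arithmetic makes the computational-cost challenge concrete. A ViT-style encoder splits an image into non-overlapping square patches, so the number of visual tokens is (H / p) × (W / p), and a full self-attention layer scores every pair of tokens. The sketch assumes a 14-pixel patch, as in CLIP's ViT-L/14. The helper names are illustrative.

```python
def vit_patch_tokens(height: int, width: int, patch: int) -> int:
    """Number of non-overlapping patch tokens a ViT-style encoder yields for one image."""
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    return (height // patch) * (width // patch)


def attention_scores(n_tokens: int) -> int:
    """Entries in one full self-attention score matrix (one head, one layer)."""
    return n_tokens * n_tokens


for side in (224, 336, 448):
    n = vit_patch_tokens(side, side, patch=14)
    print(f"{side}px -> {n} visual tokens, {attention_scores(n):,} attention scores")
# 224px -> 256 visual tokens, 65,536 attention scores
# 336px -> 576 visual tokens, 331,776 attention scores
# 448px -> 1024 visual tokens, 1,048,576 attention scores
```

Doubling the side length from 224 to 448 pixels quadruples the number of visual tokens (256 to 1,024). It also multiplies the attention-score entries by 16, and that is before any text tokens or additional video frames are added.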

Conclusion: A New Frontier for AI

The convergence of large language models and multimodality marks a pivotal moment in the history of artificial intelligence. It's a leap towards creating AI systems that don't just process data but genuinely understand and interact with the world in a more human-like, intuitive way. For AI practitioners and enthusiasts, this field offers an unparalleled opportunity to engage with cutting-edge research, develop truly innovative applications, and contribute to shaping the ethical and technological landscape of the future. The journey is complex, but the destination—a world where AI can truly see, hear, and comprehend alongside us—is profoundly exciting.