Unlocking On-Device AI: The Rise of Efficient LLMs and Edge NLP

Large Language Models offer immense potential but face high computational costs. This post explores the 'LLM paradox' and how efficient models and on-device NLP are democratizing AI, bringing powerful capabilities to personal devices while reducing costs and enhancing privacy.

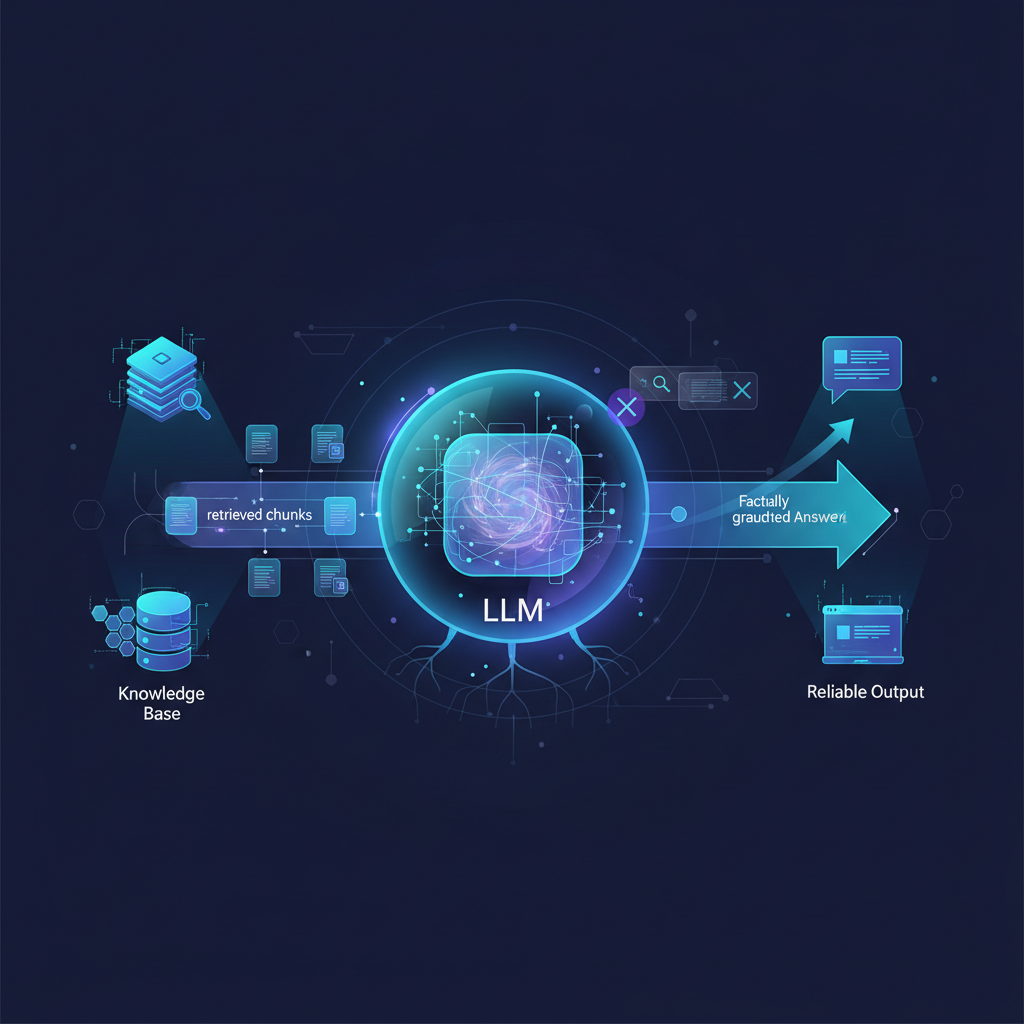

The era of Large Language Models (LLMs) has ushered in unprecedented capabilities in natural language processing. From sophisticated chatbots to advanced content generation, these models have redefined what's possible with AI. However, this power comes at a significant cost: immense computational resources, substantial memory footprints, and often, a hefty price tag for deployment and inference. This "LLM paradox" — incredible potential versus prohibitive practicalities — has created a critical bottleneck for widespread, democratized AI.

But what if we could have the best of both worlds? What if we could harness the power of LLMs not just in massive data centers, but on our smartphones, embedded devices, and personal computers, all while maintaining privacy and reducing costs? This is the promise of efficient LLMs and on-device NLP, a rapidly evolving field that is bridging the gap between cutting-edge research and real-world applicability. The past 12-18 months have seen an explosion of innovation in techniques designed to shrink, speed up, and streamline LLMs without sacrificing their core capabilities. Understanding these advancements is no longer optional; it's essential for anyone looking to build scalable, cost-effective, and user-friendly NLP applications in today's AI landscape.

The Imperative for Efficiency: Why On-Device NLP Matters

The drive for efficient LLMs stems from several compelling factors:

- Cost Reduction: Running large models in the cloud incurs significant operational expenses, especially for high-traffic applications. Efficient models can drastically lower these costs.

- Data Privacy & Security: Processing sensitive user data locally on a device eliminates the need to transmit it to external servers, enhancing privacy and compliance.

- Low Latency & Offline Functionality: On-device processing provides instantaneous responses, crucial for real-time applications like voice assistants or gaming. It also enables full functionality even without an internet connection.

- Scalability & Democratization: By making powerful models accessible on commodity hardware, we empower a broader range of developers and organizations to innovate, fostering a more inclusive AI ecosystem.

- Environmental Impact: Smaller, more efficient models require less energy, contributing to more sustainable AI practices.

This isn't just a theoretical pursuit; it's a practical necessity that's reshaping how we think about deploying AI. Let's dive into the core techniques making this possible.

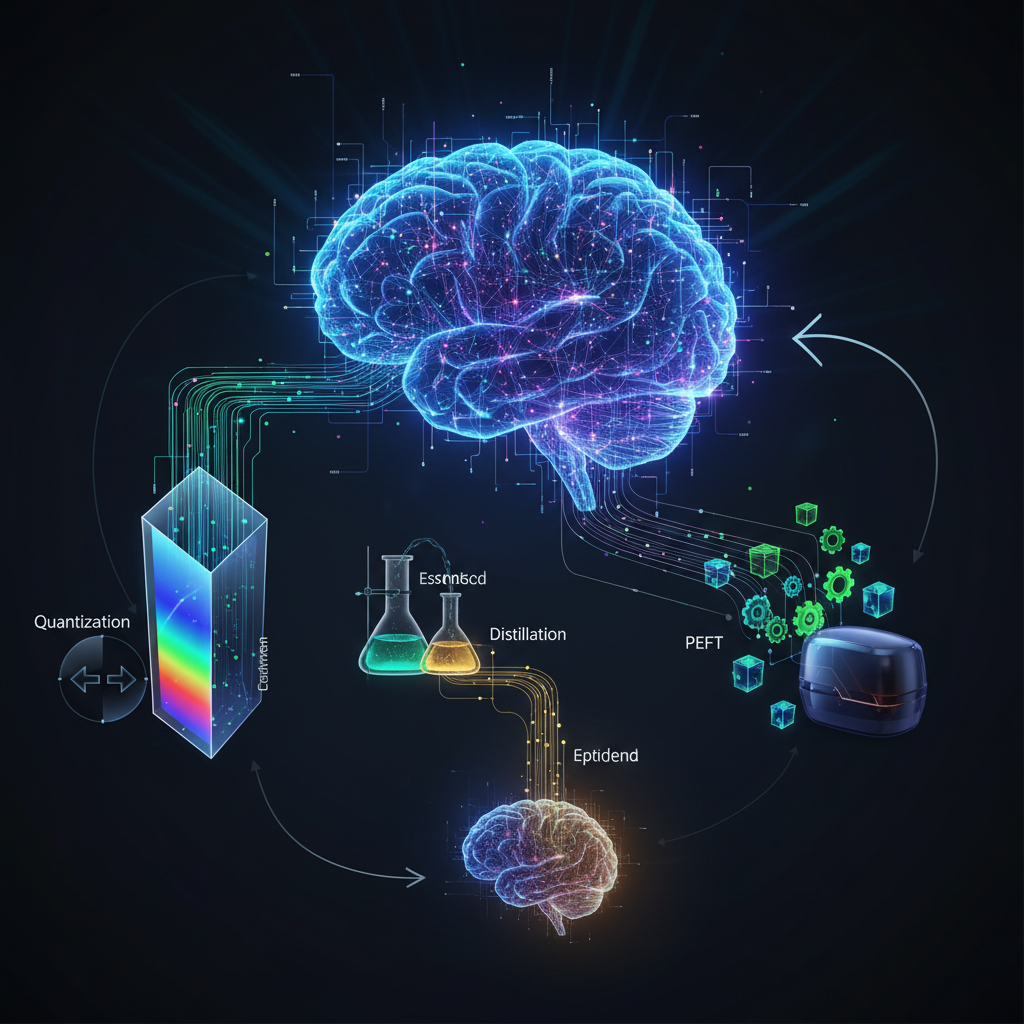

1. Model Quantization: Shrinking the Precision Footprint

At its core, quantization is about reducing the numerical precision of a model's weights and activations. LLMs are typically trained and stored in 32-bit or 16-bit floating point (FP32, FP16, or BF16). Quantization aims to represent these numbers with fewer bits, such as 8-bit integers (INT8), 4-bit integers (INT4), or even binary (1-bit) values.

Concept: Imagine representing a number like 3.14159 with fewer decimal places, say 3.14. You lose some precision, but the number takes up less space. Similarly, in a neural network, reducing the bit-width of weights and activations means:

- Smaller Model Size: The model file itself becomes significantly smaller, requiring less storage and faster loading times.

- Faster Inference: Operations on lower-precision numbers are computationally cheaper and can be executed more quickly by specialized hardware.

- Reduced Memory Bandwidth: Less data needs to be moved between memory and processing units, a common bottleneck.
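To make the idea concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization using NumPy. The function names and the toy weight matrix are illustrative assumptions, not part of any particular framework; real toolkits use finer-grained scales and handle edge cases such as all-zero tensors.

```python
import numpy as np

def quantize_int8(weights: np.ndarray):
    """Symmetric per-tensor quantization: one scale maps floats to int8 codes."""
    scale = np.abs(weights).max() / 127.0  # largest magnitude maps to +/-127
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float values from the int8 codes."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)  # toy weight matrix

q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print("FP32 bytes:", w.nbytes)  # 4 bytes per weight
print("INT8 bytes:", q.nbytes)  # 1 byte per weight
print("max abs error:", float(np.abs(w - w_hat).max()))
```

The storage drops by 4x, and the rounding error of any single weight is bounded by roughly half the scale.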

Methods:

- Post-Training Quantization (PTQ): This is the most straightforward approach. A fully trained FP32 model is converted to a lower precision after training.

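- Dynamic Quantization: A PTQ variant in which weights are quantized to INT8 ahead of time, while activation scaling factors are computed on the fly during inference. It needs no calibration data, but the runtime scale computation limits the speedup.

- Static Quantization: A PTQ variant in which both weights and activations are quantized to INT8 using fixed scaling factors. These are determined in advance from a small calibration dataset, which removes the runtime overhead and typically makes inference faster than dynamic quantization; accuracy depends on how representative the calibration data is.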

- Quantization-Aware Training (QAT): The model is trained from the start with the knowledge that it will be quantized. Fake quantization operations are inserted into the training graph, simulating the effects of quantization. This often yields the best accuracy but requires modifying the training pipeline.
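The difference between static and dynamic activation quantization, and the "fake quantization" op used in QAT, can be sketched in a few lines of NumPy. This is an illustrative toy under simple assumptions (symmetric INT8, per-tensor scales, random data standing in for activations); the helper names are hypothetical.

```python
import numpy as np

def scale_for(x: np.ndarray) -> float:
    """Symmetric INT8 scale that maps the largest magnitude in x to 127."""
    return float(np.abs(x).max()) / 127.0

def fake_quant(x: np.ndarray, scale: float) -> np.ndarray:
    """Quantize to INT8 levels and immediately dequantize (a fake-quantization op)."""
    return np.clip(np.round(x / scale), -127, 127) * scale

rng = np.random.default_rng(0)
calibration_batches = [rng.normal(size=(8, 16)) for _ in range(10)]
new_batch = rng.normal(size=(8, 16))

# Static: one scale, fixed ahead of time from calibration data.
static_scale = max(scale_for(b) for b in calibration_batches)

# Dynamic: a fresh scale computed from each batch at inference time.
dynamic_scale = scale_for(new_batch)

err_static = np.abs(new_batch - fake_quant(new_batch, static_scale)).mean()
err_dynamic = np.abs(new_batch - fake_quant(new_batch, dynamic_scale)).mean()
print(f"static  scale={static_scale:.4f}  mean error={err_static:.5f}")
print(f"dynamic scale={dynamic_scale:.4f}  mean error={err_dynamic:.5f}")
```

With a static scale, any activation larger than what the calibration data covered is clipped, which is why the choice of calibration set matters. In QAT, an op like `fake_quant` sits in the forward pass so the model learns weights that tolerate the rounding.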

Challenges:

- Accuracy Degradation: The primary challenge is maintaining model performance. Reducing precision can lead to information loss and a drop in accuracy, especially for sensitive models like LLMs.

- Hardware Support: Efficient execution of quantized models often relies on hardware accelerators (e.g., TPUs, NPUs, GPUs with INT8 support).

- Calibration: For static PTQ, selecting an appropriate calibration dataset is crucial for determining optimal quantization parameters.

Recent Developments for LLMs:

LLMs are particularly sensitive to quantization due to their vast number of parameters and complex interactions. Recent breakthroughs have focused on mitigating accuracy loss:

- Mixed-Precision Quantization: Different layers or parts of the model can be quantized to different bit-widths (e.g., some layers to INT8, others to FP16) based on their sensitivity.

- GPTQ: A highly effective PTQ method designed for generative pre-trained Transformers. It quantizes weights layer by layer, building on the Optimal Brain Quantization (OBQ) approach and using approximate second-order information to minimize the error that each layer's quantized weights introduce in its output. It often achieves INT4 quantization with minimal perplexity loss.

- Example: Running a 7B parameter Llama model in INT4 using GPTQ can reduce its memory footprint from ~14GB (FP16) to ~3.5GB, making it runnable on consumer GPUs.

- AWQ (Activation-aware Weight Quantization): This method observes that not all weights are equally important for quantization. It uses activation statistics to identify a small fraction (around 1%) of "salient" weight channels that are critical for performance, and protects them by rescaling those channels before quantization rather than keeping them in higher precision. This leads to better accuracy than uniform quantization at the same bit-width.

- SmoothQuant: Addresses the issue of activation outliers in LLMs. It shifts the quantization difficulty from activations to weights by applying a per-channel scaling factor to activations and absorbing it into the weights. This makes both activations and weights easier to quantize to INT8 without significant accuracy drops.
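The SmoothQuant rescaling described above can be checked numerically. The sketch below is a toy under stated assumptions: the per-channel smoothing factor follows the form max|X_j|^alpha / max|W_j|^(1 - alpha), with alpha = 0.5 picked for illustration, and the activation outlier is injected by hand.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))        # activations: (tokens, input channels)
X[:, 3] *= 50.0                    # one channel with large outliers
W = rng.normal(size=(8, 5)) * 0.1  # weights: (input channels, output channels)

alpha = 0.5                        # migration strength (illustrative choice)
act_max = np.abs(X).max(axis=0)    # per-input-channel activation range
w_max = np.abs(W).max(axis=1)      # per-input-channel weight range
s = act_max**alpha / w_max**(1 - alpha)

X_smooth = X / s                   # activations become easier to quantize
W_smooth = W * s[:, None]          # the scale is absorbed into the weights

# The layer output is mathematically unchanged by the rescaling.
print("same output:", np.allclose(X @ W, X_smooth @ W_smooth))
print("largest activation before:", float(np.abs(X).max()))
print("largest activation after: ", float(np.abs(X_smooth).max()))
```

Because the product is unchanged, the rescaling can be applied offline; only the quantization difficulty moves from activations to weights.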

These techniques, especially GPTQ and AWQ, have been instrumental in enabling the deployment of large LLMs like Llama 2 on consumer-grade hardware and even some high-end mobile devices.
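The memory figures quoted for the 7B example follow from simple arithmetic, sketched below. The helper name is hypothetical, and the calculation covers weights only; real quantized files are somewhat larger because they also store scales, and inference needs extra memory for activations and the key/value cache.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

params = 7e9  # a 7B-parameter model
for name, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{name}: {weight_memory_gb(params, bits):.1f} GB")
```

This reproduces the ~14GB (FP16) and ~3.5GB (INT4) numbers mentioned earlier.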

2. Model Pruning: Trimming the Fat

Pruning is like sculpting a model by removing redundant or less important connections (weights), neurons, or even entire layers, without significantly impacting its overall performance.

Concept: Neural networks are often overparameterized. Many weights might contribute very little to the final output, or multiple neurons might learn similar features. Pruning identifies and removes these inefficiencies.

Methods:

- Unstructured Pruning: Individual weights are set to zero, creating sparse connections. This can achieve high compression ratios but often requires specialized hardware or software to exploit the sparsity for speedups.

- Example: Removing weights below a certain magnitude threshold.

- Structured Pruning: Entire neurons, channels, or layers are removed. This results in a smaller, denser network, which is easier to accelerate on standard hardware.

- Example: Removing an entire attention head in a Transformer if its contribution to performance is low.

- Sparsity-Aware Training: The model is trained with a regularization term that encourages sparsity, making it easier to prune after training.
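The contrast between unstructured and structured pruning can be sketched with NumPy. Both functions are illustrative assumptions: the structured variant ranks attention heads by weight norm as a simple stand-in, whereas practical methods usually estimate importance from gradients or from the effect on the loss.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Unstructured pruning: zero out the smallest-magnitude fraction of weights."""
    k = int(weights.size * sparsity)  # number of weights to remove
    if k == 0:
        return weights.copy()
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)

def prune_heads(head_weights: np.ndarray, keep: int) -> np.ndarray:
    """Structured pruning: keep only the `keep` heads with the largest L2 norm.

    head_weights has shape (num_heads, head_dim, model_dim).
    """
    norms = np.linalg.norm(head_weights.reshape(head_weights.shape[0], -1), axis=1)
    kept = np.sort(np.argsort(norms)[-keep:])  # preserve original head order
    return head_weights[kept]

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))
w_sparse = magnitude_prune(w, sparsity=0.5)
print("shape unchanged:", w_sparse.shape, "zeros:", int((w_sparse == 0).sum()))

heads = rng.normal(size=(8, 16, 128))
print("heads after pruning:", prune_heads(heads, keep=6).shape)
```

Note the practical difference: the unstructured result keeps its shape and only gains zeros, so it needs sparse kernels to run faster, while the structured result is a genuinely smaller dense tensor.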

Challenges:

- Determining Pruning Criteria: Deciding which parts to prune without harming performance is complex. Magnitude-based pruning is common, but more sophisticated methods exist.

- Maintaining Sparsity: After unstructured pruning, the weight matrices are sparse. Storing and computing with them efficiently requires specialized libraries and hardware, and the model usually needs to be fine-tuned after pruning to recover lost accuracy.

- Irregular Memory Access: Unstructured sparsity can lead to irregular memory access patterns, which can sometimes negate the theoretical speedup.

Recent Developments for LLMs:

Pruning LLMs is challenging due to their scale and the intricate dependencies between parameters. However, research is progressing:

- Transformer-Specific Pruning: Techniques are emerging to prune specific components of Transformer architectures, such as attention heads or feed-forward network dimensions, based on their importance.

- Dynamic Pruning: Pruning can be applied iteratively during training, allowing the model to adapt and recover from the removal of parameters.

- Combined with Quantization: Pruning and quantization are often used together to achieve maximum efficiency gains.

While pruning for LLMs is still an active research area, it holds immense promise for creating truly compact models.

3. Knowledge Distillation: Learning from a Master

Knowledge distillation is a technique where a smaller, more efficient "student" model is trained to mimic the behavior of a larger, more powerful "teacher" model. The student learns not just from the hard labels (e.g., the correct answer) but also from the "soft targets" (the probability distribution over all possible answers) provided by the teacher.

Concept: The teacher model, having learned complex patterns, provides a rich source of information beyond just the final prediction. By training the student on these nuanced outputs, it can absorb much of the teacher's knowledge without needing the same architectural complexity or parameter count.

Methods:

- Soft Targets: The most common method trains the student to match the teacher's output probability distribution for each input, obtained by applying a (usually temperature-softened) softmax to the teacher's logits. This provides more information than the one-hot encoded ground truth alone.

- Intermediate Representations: The student can also be trained to mimic the teacher's internal representations (e.g., hidden states, attention distributions) at various layers.

- Ensemble Distillation: Multiple teacher models can be used to distill knowledge into a single student.
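A soft-target distillation loss might look like the following NumPy sketch. The temperature, the mixing weight `alpha`, and the toy logits are assumptions chosen for illustration; the T² factor on the soft term is a common convention that keeps its gradient magnitude comparable to the hard-label term.

```python
import numpy as np

def softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=2.0, alpha=0.5):
    """alpha * soft-target KL term + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_t = softmax(student_logits, temperature)
    # KL(teacher || student) on temperature-softened distributions.
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student_t)), axis=-1)
    soft = temperature**2 * kl.mean()

    # Standard cross-entropy against the ground-truth labels (temperature 1).
    p_student = softmax(student_logits)
    hard = -np.log(p_student[np.arange(len(labels)), labels]).mean()
    return alpha * soft + (1 - alpha) * hard

teacher = np.array([[4.0, 1.0, 0.5], [0.2, 3.0, 0.1]])
student = np.array([[2.0, 1.5, 0.5], [0.5, 1.0, 0.3]])
labels = np.array([0, 1])
print("loss:", float(distillation_loss(student, teacher, labels)))
```

Tuning `alpha` is exactly the balancing act between soft targets and hard labels: `alpha=1.0` trains purely on the teacher's distribution, `alpha=0.0` ignores the teacher.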

Challenges:

- Designing Effective Loss Functions: Balancing the student's learning from soft targets and hard labels.

- Selecting Appropriate Student Architectures: The student model needs to be capable enough to learn from the teacher but small enough to be efficient.

- Computational Cost: Running the teacher model to generate soft targets can still be computationally intensive during the distillation process.

Recent Developments for LLMs:

Distillation is highly relevant for LLMs, where the teacher might be a massive proprietary model (like GPT-4) or a very large open-source model (like Llama 2 70B).

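- Example: DistilBERT was trained by distilling BERT, yielding a model about 40% smaller and 60% faster that retains roughly 97% of BERT's language-understanding performance. The same idea carries over to generative LLMs, where the student learns to generate text that aligns with the teacher's style and factual knowledge.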

- Specialized Distillation: Distilling specific skills or knowledge from a large LLM into a smaller, domain-specific model. For instance, distilling a code generation LLM into a smaller model for Python code completion.

- Data Augmentation: The teacher model can generate synthetic data, which is then used to train the student, effectively expanding the training dataset.

Distillation allows organizations to leverage the power of state-of-the-art LLMs without the prohibitive costs of running them directly, creating smaller, specialized, and more deployable models.

4. Parameter-Efficient Fine-Tuning (PEFT) / Adapter-based Methods

Fine-tuning a massive LLM (e.g., 70 billion parameters) for a specific downstream task requires enormous computational resources and storage. Parameter-Efficient Fine-Tuning (PEFT) techniques address this by only updating a tiny fraction of the model's parameters, drastically reducing memory footprint and computational cost during fine-tuning.

Concept: Instead of modifying all billions of parameters in the pre-trained LLM, PEFT methods introduce a small number of new, trainable parameters (e.g., adapter layers, low-rank matrices) while keeping the original LLM weights frozen. Only these new parameters are updated during fine-tuning.

Methods:

- LoRA (Low-Rank Adaptation): One of the most popular and effective PEFT methods. LoRA injects small, trainable rank-decomposition matrices into the Transformer layers. When fine-tuning, only these low-rank matrices are updated, while the original pre-trained weights remain frozen. This significantly reduces the number of trainable parameters (by up to 10,000x in the original paper's GPT-3 experiments) and memory usage.

- Example: Fine-tuning a Llama 2 7B model with LoRA might only require training a few million parameters instead of 7 billion, enabling fine-tuning on a single consumer GPU.

- Prefix-Tuning: Prepends a small, trainable sequence of vectors (the "prefix") to the keys and values of the attention layers, at every layer of the model. This prefix is learned during fine-tuning and guides the LLM's behavior without modifying its core weights.

- Prompt-Tuning: Similar to prefix-tuning but learns a soft prompt (a sequence of embedding vectors) that is prepended only to the input embeddings. It's even simpler than prefix-tuning because it leaves the Transformer's internal layers untouched.

- Adapters: Small neural network modules inserted between layers of the pre-trained model. Only the adapter modules are trained, allowing for modular and efficient fine-tuning for multiple tasks.
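The LoRA idea can be sketched for a single linear layer in NumPy. The layer sizes, rank, and scaling value are illustrative assumptions, and no training loop is shown; the point is the shape of the update and the parameter count.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank, lora_alpha = 512, 512, 8, 16  # illustrative sizes

W = rng.normal(size=(d_out, d_in)) * 0.02  # frozen pre-trained weight
A = rng.normal(size=(rank, d_in)) * 0.01   # trainable, small random init
B = np.zeros((d_out, rank))                # trainable, zero init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """y = x W^T + (alpha / r) * x A^T B^T; only A and B would be trained."""
    return x @ W.T + (lora_alpha / rank) * (x @ A.T) @ B.T

x = rng.normal(size=(4, d_in))
# With B initialised to zero, the adapted layer starts identical to the base layer.
print("matches base layer at init:", np.allclose(lora_forward(x), x @ W.T))

full, lora = W.size, A.size + B.size
print(f"trainable params: {lora:,} vs {full:,} ({full / lora:.0f}x fewer)")

# After training, the update can be merged so inference has no extra cost.
W_merged = W + (lora_alpha / rank) * B @ A
```

In this toy layer the adapter has 8,192 trainable values against 262,144 frozen ones. Swapping tasks amounts to swapping `A` and `B`, which is why adapter weights are so cheap to store per task.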

Challenges:

- Performance Trade-offs: While highly efficient, PEFT methods might sometimes achieve slightly lower performance compared to full fine-tuning, depending on the task and chosen method.

- Optimal Configuration: Selecting the right rank for LoRA, the length of the prefix, or the placement of adapters can require experimentation.

Practical Impact:

PEFT has been a game-changer for the democratization of LLMs. It allows:

- Individuals and Smaller Teams: To fine-tune massive LLMs on commodity hardware (e.g., a single GPU with 12-24GB VRAM).

- Rapid Experimentation: Faster fine-tuning cycles enable quicker iteration and model development.

- Reduced Storage: Storing only the small adapter weights for each task, rather than a full copy of the fine-tuned LLM, saves significant disk space.

- Multi-Tasking: Easily swap out adapter weights to make a single base LLM perform different tasks.

5. Efficient Architectures and Training Paradigms

Beyond optimizing existing models, researchers are also designing inherently smaller or more efficient architectures from the ground up, or optimizing the training process itself.

Concept: This involves rethinking the fundamental building blocks of LLMs to reduce computational complexity, memory requirements, or the number of parameters.

Examples:

- Mobile-friendly Transformers: Architectures like MobileBERT, TinyLlama, and Phi-2 are designed with efficiency in mind. They rely on choices such as bottleneck layers (MobileBERT), smaller embedding dimensions, fewer layers, and carefully curated training data to achieve a compact size while retaining strong performance.

- Example: Phi-2 (2.7B parameters) from Microsoft demonstrates impressive reasoning and language understanding capabilities for its size; Microsoft reports that it matches or outperforms considerably larger models on several benchmarks.

- Example: TinyLlama (1.1B parameters) is trained on 3 trillion tokens, aiming to be a compact, high-quality base model for various applications.

- Sparse and Approximate Attention Mechanisms: The standard self-attention mechanism in Transformers has quadratic computational complexity with respect to sequence length, making it a bottleneck for very long inputs. Efficient variants reduce this to linear or nearly linear complexity: Longformer attends only to a local window plus a few global tokens, Reformer uses locality-sensitive hashing to attend within buckets of similar tokens, and Performer approximates softmax attention with kernel feature maps.

- FlashAttention: A highly optimized attention algorithm that reorders the computation and leverages GPU memory hierarchies (SRAM) to significantly speed up attention and reduce memory usage, especially for long sequences. It's not a new architecture but an optimization of the existing attention mechanism.

- Multi-Query Attention (MQA) / Grouped-Query Attention (GQA): Instead of each attention head having its own key and value projections, MQA shares a single key/value head across all query heads, while GQA shares each key/value head among a group of query heads. This shrinks the key/value cache and the memory bandwidth needed during inference, leading to faster generation, especially for larger batch sizes and long contexts. Falcon-7B uses MQA, and the 70B variant of Llama 2 uses GQA.
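To see why MQA and GQA matter at inference time, it helps to compute the size of the key/value cache. The sketch below uses a hypothetical model shape (32 layers, 32 query heads of dimension 128, a 4096-token context, FP16 cache entries) chosen purely for illustration.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch=1, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer and position."""
    # The leading 2 accounts for storing both a key and a value vector.
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value

# Hypothetical model shape, not taken from any specific released model.
layers, query_heads, head_dim, seq_len = 32, 32, 128, 4096

for name, kv_heads in [("MHA", query_heads), ("GQA (8 KV heads)", 8), ("MQA", 1)]:
    gib = kv_cache_bytes(layers, kv_heads, head_dim, seq_len) / 2**30
    print(f"{name}: {gib:.4f} GiB per sequence")
```

With these assumed dimensions the full multi-head cache is 2 GiB per sequence, and it shrinks in direct proportion to the number of key/value heads, which is what frees memory for larger batches.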

Challenges:

- Architectural Expertise: Designing new efficient architectures requires deep understanding of neural network principles and computational bottlenecks.

- Specialized Hardware: Some architectural innovations might benefit most from custom hardware or highly optimized kernels.

These efforts are crucial for pushing the boundaries of what's possible with efficient LLMs, creating models that are "born" efficient rather than just "made" efficient post-training.

6. Inference Optimization Frameworks and Hardware

Even with highly optimized models, their real-world performance depends heavily on the software and hardware used for inference. This ecosystem is rapidly evolving to support efficient LLM deployment.

Software Frameworks:

- ONNX Runtime: An open-source inference engine that can accelerate machine learning models across various frameworks (PyTorch, TensorFlow) and hardware. It optimizes models for deployment on different platforms.

- OpenVINO (Open Visual Inference & Neural Network Optimization): Intel's toolkit for optimizing and deploying AI inference, particularly on Intel hardware (CPUs, integrated GPUs, VPUs). It supports quantization and model compilation.

- TensorRT: NVIDIA's SDK for high-performance deep learning inference on NVIDIA GPUs. It optimizes models by performing graph optimizations, layer fusion, and precision calibration.

- 🤗 Optimum (Hugging Face): A library that provides a unified API to optimize models from the Hugging Face Transformers library using various backend tools like ONNX Runtime, OpenVINO, and TensorRT. It simplifies the process of applying quantization, pruning, and other optimizations.

- GGML / GGUF: GGML is a C library for machine learning that enables efficient inference of LLMs on CPUs, typically with quantized weights (e.g., 4-bit, 5-bit, or 8-bit). GGUF is the model file format that succeeded the original GGML format.

- llama.cpp: A popular project built on GGML that allows running Llama and other open models on CPUs with surprisingly good performance.

- Practical Impact: This is what allows many users to run Llama 2, Mistral, and other open-source LLMs locally on their personal computers without a dedicated GPU.
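CPU-oriented runtimes typically quantize weights in small blocks, each with its own scale, rather than with one scale per tensor. The NumPy sketch below illustrates that general idea only: the block size of 32, the FP16 scale, and the nibble packing are assumptions for illustration and do not reproduce the actual GGUF on-disk layout.

```python
import numpy as np

BLOCK = 32  # weights per block (assumed for illustration)

def quantize_blocks_4bit(w: np.ndarray):
    """Quantize a 1-D float array in blocks, each with its own FP16 scale."""
    blocks = w.reshape(-1, BLOCK)
    scales = (np.abs(blocks).max(axis=1, keepdims=True) / 7.0).astype(np.float16)
    scales[scales == 0] = 1.0  # avoid divide-by-zero for all-zero blocks
    q = np.clip(np.round(blocks / scales.astype(np.float32)), -8, 7).astype(np.int8)
    # Pack two 4-bit codes per byte (offset by 8 so each code is unsigned).
    u = (q + 8).astype(np.uint8)
    packed = u[:, 0::2] | (u[:, 1::2] << 4)
    return packed, scales

def dequantize_blocks_4bit(packed: np.ndarray, scales: np.ndarray) -> np.ndarray:
    """Unpack the 4-bit codes and rescale each block back to floats."""
    low = (packed & 0x0F).astype(np.int8) - 8
    high = (packed >> 4).astype(np.int8) - 8
    q = np.empty((packed.shape[0], BLOCK), dtype=np.int8)
    q[:, 0::2], q[:, 1::2] = low, high
    return (q * scales.astype(np.float32)).reshape(-1)

rng = np.random.default_rng(0)
w = rng.normal(0, 0.02, size=4096).astype(np.float32)
packed, scales = quantize_blocks_4bit(w)
w_hat = dequantize_blocks_4bit(packed, scales)

bits_per_weight = 8 * (packed.nbytes + scales.nbytes) / w.size
print("bits per weight:", bits_per_weight)
print("mean abs error:", float(np.abs(w - w_hat).mean()))
```

Under these assumptions each weight costs 4.5 bits once the per-block scale is counted, which is why "4-bit" model files come out slightly larger than a naive 4-bits-per-weight estimate.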

Hardware Accelerators:

- Neural Processing Units (NPUs): Dedicated AI accelerators found in modern smartphones, laptops (e.g., Apple Neural Engine, Qualcomm AI Engine, Intel Core Ultra NPUs), and embedded systems. They are designed for highly efficient execution of neural network operations, often supporting INT8 or even INT4 natively.

- Specialized AI Accelerators: Beyond general-purpose GPUs, there are custom chips designed specifically for AI inference (e.g., Google's Edge TPUs, various startups).

- Optimized GPU Kernels: Continuous improvements in GPU drivers and low-level kernels ensure that operations like matrix multiplications and attention mechanisms run as fast as possible, especially for lower precision data types.

The synergy between these optimized models and specialized hardware/software is what truly unlocks the potential for fast, on-device NLP.

Practical Applications and Use Cases

The ability to run efficient LLMs locally opens up a plethora of exciting applications:

- On-Device Assistants: Imagine a voice assistant on your smartphone that can answer complex queries, draft emails, or summarize documents, all without sending your data to the cloud. This ensures privacy and provides instant responses.

- Edge AI for IoT: Smart cameras that can analyze spoken commands or text in real-time, industrial sensors that understand natural language instructions, or smart home devices that offer personalized interactions, all operating autonomously without continuous cloud connectivity.

- Privacy-Preserving NLP: Healthcare applications where patient data is processed locally, financial services that analyze sensitive transactions on-device, or secure communication platforms that offer advanced NLP features without compromising user privacy.

- Cost-Effective Enterprise Solutions: Businesses can deploy powerful internal knowledge bases, customer support chatbots, or content generation tools with significantly reduced operational costs compared to cloud-based LLM APIs.

- Democratization of LLMs: Researchers, students, and developers with limited budgets can experiment, fine-tune, and deploy state-of-the-art language models on their own hardware, fostering innovation and reducing barriers to entry.

- Real-time Interactive Experiences: Low-latency responses for gaming NPCs, interactive storytelling, or creative tools that generate content on the fly, enhancing user engagement.

Conclusion

The journey towards efficient LLMs and on-device NLP is not just about making models smaller; it's about making AI more accessible, private, cost-effective, and ultimately, more integrated into our daily lives. The techniques discussed – quantization, pruning, distillation, PEFT, and architectural innovations – are not isolated concepts but often work in concert to achieve impressive gains in efficiency.

For AI practitioners and enthusiasts, this field represents a golden opportunity. Mastering these techniques means moving beyond merely consuming LLM APIs to actively shaping how AI is deployed in the real world. It means building applications that respect user privacy, operate reliably offline, and are economically viable for a wider range of use cases. As LLMs continue their exponential growth, the demand for efficiency will only intensify, making expertise in this domain a critical skill for the future of AI. The democratization of powerful language models is no longer a distant dream; it's an unfolding reality, powered by the relentless pursuit of efficiency.