AI in QA: Revolutionizing Test Case Generation with Generative Models

Discover how Artificial Intelligence, particularly generative models and reinforcement learning, is transforming software quality assurance. Learn why intelligent testing is crucial for modern development and how AI streamlines test case creation.

The relentless pace of modern software development demands not just speed, but unwavering quality. Yet, the traditional approach to quality assurance (QA) and quality control (QC)—heavily reliant on manual test case creation—often struggles to keep up. It's a labor-intensive, error-prone process that can become a bottleneck, especially as software systems grow in complexity. Enter Artificial Intelligence. The convergence of advanced AI techniques, particularly generative models and reinforcement learning, is ushering in a new era for QA, transforming how we design, generate, and optimize test cases. This isn't just an incremental improvement; it's a paradigm shift towards intelligent, proactive quality management.

The Imperative for Intelligent Testing

Why now? Several factors underscore the critical need for AI in test case generation and optimization:

- The Generative AI Revolution: Recent breakthroughs in Large Language Models (LLMs) like GPT-4, Llama, and Gemini have demonstrated an unprecedented ability to understand, interpret, and generate human-like text and code. This capability is a game-changer for automating tasks that previously required human cognitive effort, including test design.

- Exploding Software Complexity: From intricate microservices architectures to distributed systems and AI/ML models themselves, modern software is inherently complex. Manually ensuring comprehensive test coverage for such systems is a Herculean, often impossible, task. AI-driven generation can explore far more input and state combinations than a manual effort can.

- Shift-Left and Continuous Testing: The industry's move towards integrating testing earlier and continuously throughout the CI/CD pipeline necessitates faster, more intelligent test generation and execution. AI can significantly accelerate these processes, making continuous quality a reality.

- Economic Pressures: Organizations are under constant pressure to reduce development costs and accelerate time-to-market. AI-powered test generation directly addresses these by automating mundane tasks and improving efficiency without compromising quality.

- Data-Driven Insights: AI thrives on data. By leveraging vast amounts of historical data—bug reports, user interactions, code changes, test results—AI can learn patterns, predict high-risk areas, and intelligently guide the testing process.

This confluence of factors makes AI-powered test case generation and optimization not just an academic curiosity, but a practical necessity for any organization serious about software quality.

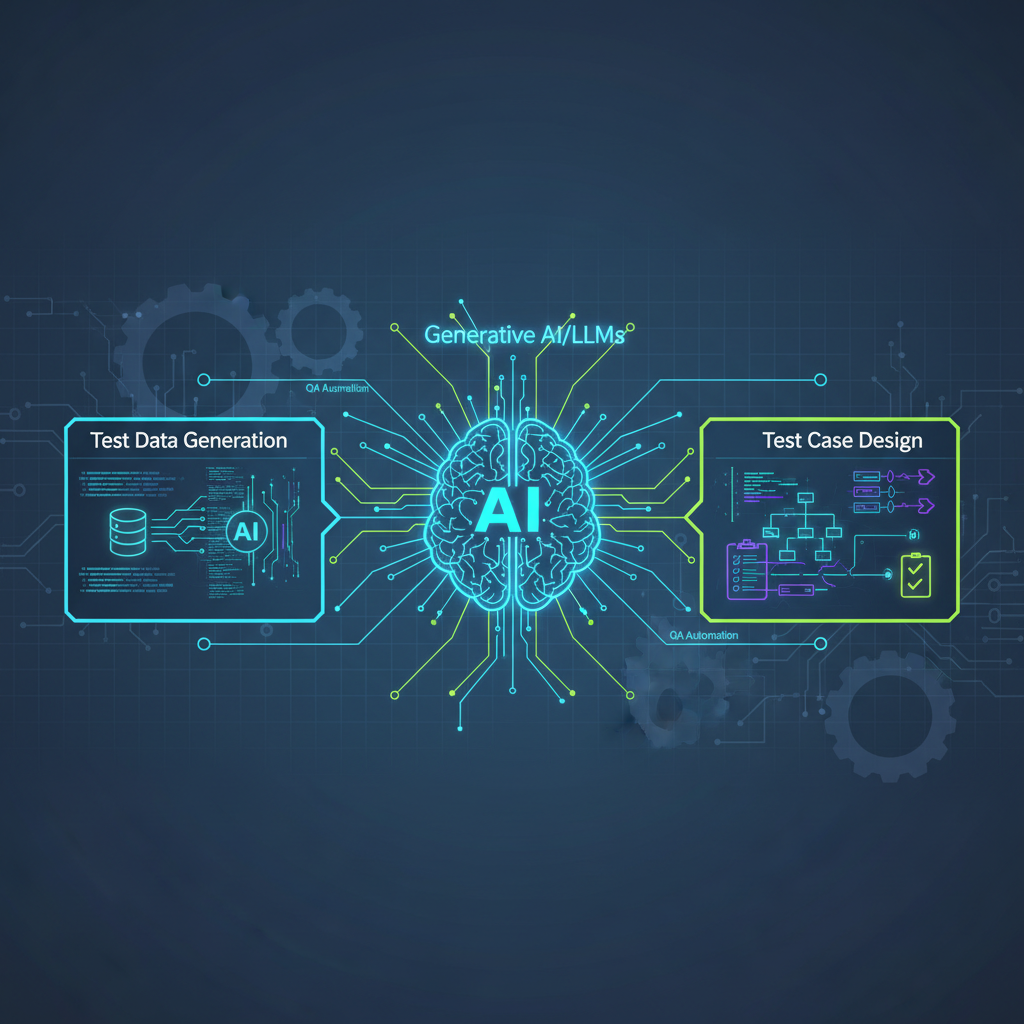

Generative AI for Intelligent Test Case Creation

The most visible and exciting application of recent AI advancements in testing comes from generative models. These models are moving us beyond simple script execution to truly intelligent test design.

Concept

Generative AI, particularly LLMs, can understand natural language requirements, user stories, code, or existing documentation. Based on this understanding, they can automatically generate diverse test assets, from natural language test descriptions and Gherkin scenarios (Given-When-Then) to executable test code in frameworks like Selenium or Playwright.
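As a rough illustration of this flow, the sketch below builds a prompt from a user story and parses Given-When-Then text back into structured scenarios. The prompt wording, the `Scenario` class, and both function names are assumptions made for this example; no real LLM API is called, and the sample output is hand-written to stand in for a model response.

```python
from dataclasses import dataclass

# Hypothetical prompt template; the wording is illustrative, not a recommended standard.
PROMPT_TEMPLATE = """You are a QA engineer. Write test scenarios for the user story below.
Cover positive, negative, edge-case, and security behavior.
Format each scenario exactly as:
Scenario: <title>
Given <precondition>
When <action>
Then <expected result>

User story: {story}
"""


@dataclass
class Scenario:
    title: str
    given: str
    when: str
    then: str


def build_prompt(user_story: str) -> str:
    """Fill the prompt template with a user story."""
    return PROMPT_TEMPLATE.format(story=user_story.strip())


def parse_scenarios(model_output: str) -> list[Scenario]:
    """Parse Given-When-Then text into Scenario objects, dropping incomplete ones."""
    scenarios = []
    fields = {}
    for raw in model_output.splitlines():
        keyword, _, rest = raw.strip().partition(" ")
        key = keyword.rstrip(":").lower()
        if key == "scenario":
            fields = {"title": rest.strip()}
        elif key in ("given", "when", "then") and fields:
            fields[key] = rest.strip()
            if key == "then" and len(fields) == 4:
                scenarios.append(Scenario(**fields))
                fields = {}
    return scenarios


# Hand-written stand-in for what a model might return.
SAMPLE_OUTPUT = """
Scenario: Reset password with a registered email
Given a registered user on the password reset page
When the user submits their registered email address
Then a password reset email is sent

Scenario: Reset password with an unregistered email
Given a visitor on the password reset page
When the visitor submits an email that is not registered
Then an error message is shown
"""

if __name__ == "__main__":
    for scenario in parse_scenarios(SAMPLE_OUTPUT):
        print(scenario.title)
```

Parsing the response into a fixed structure, rather than trusting free text, gives a natural place to reject malformed or incomplete scenarios before they reach a test suite.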

Recent Developments & Technical Deep Dive

- LLM-driven Scenario Generation:

- Mechanism: Feed an LLM a user story, a feature description, or even a section of a requirements document. The LLM processes this input, identifies key entities, actions, and conditions, and then generates a comprehensive set of test scenarios.

- Example: Given a user story: "As a registered user, I want to be able to reset my password via email so that I can regain access to my account," an LLM might generate:

- Positive: "Verify user can reset password with valid email."

- Negative: "Verify error message when resetting password with unregistered email."

- Edge Case: "Verify password reset link expires after a set time."

- Security: "Verify rate limiting on password reset requests to prevent brute force."

- Advantage: LLMs can infer implicit requirements, identify edge cases, and even suggest negative test scenarios that human testers might overlook, significantly improving test coverage and accelerating the test design phase.

- Code-to-Test Generation:

- Mechanism: LLMs, often fine-tuned on code generation tasks, can analyze application source code (e.g., a Python function, a Java class, an API endpoint definition). They infer the functionality, potential inputs, and expected outputs, then generate corresponding unit, integration, or API tests.

- Example: For a Python function `def calculate_discount(price, discount_percentage):`, an LLM could generate pytest cases:

```python
import pytest

# calculate_discount is assumed to be imported from the module under test.


def test_calculate_discount_positive():
    assert calculate_discount(100, 10) == 90


def test_calculate_discount_zero_discount():
    assert calculate_discount(50, 0) == 50


def test_calculate_discount_full_discount():
    assert calculate_discount(200, 100) == 0


def test_calculate_discount_invalid_percentage():
    # A percentage above 100 is expected to be rejected.
    with pytest.raises(ValueError):
        calculate_discount(100, 110)
```

- Advantage: Automates the creation of foundational tests, ensuring immediate feedback on code changes and reducing the burden on developers.

- Data Synthesis for Test Data:

- Mechanism: Generative models can create realistic, synthetic test data (e.g., user profiles, transaction data, sensor readings) that adheres to specified constraints, covers various edge cases, and mimics real-world distributions. This is crucial for privacy-sensitive applications where real data cannot be used.

- Example: For an e-commerce application, an LLM could generate a JSON object representing a customer:

```json
{
  "customer_id": "CUST-789012",
  "first_name": "Eleanor",
  "last_name": "Vance",
  "email": "[email protected]",
  "address": {
    "street": "45 Oakwood Lane",
    "city": "Springfield",
    "state": "IL",
    "zip_code": "62704"
  },
  "purchase_history": [
    {"order_id": "ORD-1001", "total_amount": 125.50, "items": ["Book A", "Pen B"]},
    {"order_id": "ORD-1002", "total_amount": 30.00, "items": ["Notebook C"]}
  ],
  "account_status": "active",
  "loyalty_points": 750
}
```

And then generate variations for edge cases like inactive accounts, customers with no purchase history, or international addresses.

- Advantage: Overcomes data scarcity, ensures privacy compliance, and allows for thorough testing of data-dependent logic across a wide range of scenarios.
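The edge-case variations mentioned for the customer record can be sketched with a small, seeded generator. This is a rule-based stand-in written with the standard library, not a generative model; the name lists, field subset, and function names are assumptions made for illustration.

```python
import json
import random

# Hypothetical name pools; a generative model would produce more varied values.
FIRST_NAMES = ["Eleanor", "Marcus", "Priya", "Jonas"]
LAST_NAMES = ["Vance", "Okafor", "Nakamura", "Lindqvist"]


def make_customer(rng, *, status="active", order_count=2):
    """Build one synthetic customer record with a subset of the fields shown above."""
    first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
    orders = [
        {
            "order_id": f"ORD-{rng.randint(1000, 9999)}",
            "total_amount": round(rng.uniform(5, 500), 2),
            "items": [f"Item {rng.randint(1, 50)}"],
        }
        for _ in range(order_count)
    ]
    return {
        "customer_id": f"CUST-{rng.randint(100000, 999999)}",
        "first_name": first,
        "last_name": last,
        "email": f"{first}.{last}@example.com".lower(),
        "purchase_history": orders,
        "account_status": status,
        # Customers without orders cannot have earned loyalty points.
        "loyalty_points": rng.randint(0, 1000) if orders else 0,
    }


def edge_case_customers(seed=0):
    """Return a typical record plus two edge cases; the seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [
        make_customer(rng),                     # typical active customer
        make_customer(rng, status="inactive"),  # inactive account
        make_customer(rng, order_count=0),      # no purchase history
    ]


if __name__ == "__main__":
    print(json.dumps(edge_case_customers(), indent=2))
```

Seeding the generator matters for testing: a failing test can be reproduced with exactly the same synthetic records.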

Practical Applications

- Accelerated Test Design: Dramatically reduce the time and effort spent writing test cases from scratch, freeing human testers for more complex exploratory testing.

- Improved Coverage: LLMs can identify implicit requirements or edge cases that human testers might miss, leading to more comprehensive test suites.

- Automated Test Scripting: Generating ready-to-run test scripts in popular frameworks streamlines the transition from test design to execution.

Model-Based Testing (MBT) with AI Enhancements

Model-Based Testing (MBT) is a powerful approach where test cases are automatically generated from an abstract model of the System Under Test (SUT). AI significantly amplifies MBT's capabilities by automating model creation and optimizing test paths.

Concept

Instead of directly writing test cases, testers build models (e.g., state-transition diagrams, activity diagrams) that describe the SUT's behavior. AI then enhances this process by automating the discovery of these models and intelligently exploring them to generate optimal test sequences.

AI Enhancements

- Automated Model Discovery:

- Mechanism: AI, using techniques like process mining or machine learning, can learn system behavior and automatically construct state-transition diagrams or behavioral models. This can be done by analyzing system logs, user interaction data, API call sequences, or even network traffic.

- Example: An AI algorithm could analyze web server logs, identifying sequences of user actions (login -> browse product -> add to cart -> checkout) and constructing a state machine representing the user journey, including error states for failed transactions.

- Advantage: Eliminates the manual, often tedious, task of model creation, making MBT more accessible and adaptable to rapidly changing systems.

- Path Exploration & Optimization:

- Mechanism: Once a model exists, AI algorithms like genetic algorithms or reinforcement learning can explore the model's state space efficiently. They prioritize paths most likely to expose defects, cover critical functionalities, or achieve specific coverage goals (e.g., all states, all transitions).

- Example: For an e-commerce checkout model, a genetic algorithm might generate test paths that combine various payment methods, shipping options, and discount codes, iteratively refining paths that lead to unexpected system states or errors.

- Advantage: Works toward the chosen coverage goal while keeping the number of test cases small and avoiding redundant tests.
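Both enhancements can be sketched in a few lines: mine a state-transition model from logged user sessions, then derive test paths that cover every observed transition. The session data and function names are hypothetical, and the path search is a plain greedy walk with breadth-first search, a much simpler stand-in for the genetic or reinforcement learning approaches described above.

```python
from collections import defaultdict, deque

# Hypothetical user sessions, as might be extracted from web server logs.
SESSIONS = [
    ["login", "browse", "add_to_cart", "checkout", "confirmation"],
    ["login", "browse", "add_to_cart", "checkout", "payment_failed", "checkout"],
    ["login", "browse", "logout"],
]


def mine_model(sessions):
    """Build a state-transition model: state -> set of observed next states."""
    model = defaultdict(set)
    for session in sessions:
        for src, dst in zip(session, session[1:]):
            model[src].add(dst)
    return dict(model)


def shortest_path(model, start, goal):
    """Breadth-first search; returns the list of states from start to goal, or None."""
    queue, seen = deque([[start]]), {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(model.get(path[-1], ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def transition_coverage_paths(model, start):
    """Greedy all-transitions coverage; every generated path begins at `start`."""
    uncovered = {(src, dst) for src, dsts in model.items() for dst in dsts}
    paths = []
    while uncovered:
        # Find the closest state that still has an uncovered outgoing transition.
        best = None
        for src in sorted({s for s, _ in uncovered}):
            route = shortest_path(model, start, src)
            if route is not None and (best is None or len(route) < len(best)):
                best = route
        if best is None:
            break  # the remaining transitions cannot be reached from start
        path, state = list(best), best[-1]
        # Keep taking uncovered transitions until the current state has none left.
        while True:
            nxt = next(
                (d for d in sorted(model.get(state, ())) if (state, d) in uncovered),
                None,
            )
            if nxt is None:
                break
            uncovered.discard((state, nxt))
            path.append(nxt)
            state = nxt
        paths.append(path)
    return paths


if __name__ == "__main__":
    for test_path in transition_coverage_paths(mine_model(SESSIONS), "login"):
        print(" -> ".join(test_path))
```

Each returned path is a candidate test sequence; a search-based optimizer would additionally weigh paths by risk or cost instead of stopping at plain transition coverage.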

Practical Applications

- Complex System Testing: Ideal for systems with numerous states and transitions, such as financial transaction systems, telecommunications protocols, or embedded software.

- Regression Testing: When the model is updated, test cases for the changed parts of the system can be regenerated automatically, helping confirm that new features don't break existing functionality.

Reinforcement Learning (RL) for Test Sequence Optimization

Reinforcement Learning (RL) brings a dynamic, adaptive approach to test case generation, treating it as a sequential decision-making problem.

Concept

In RL, an "agent" learns to perform actions in an environment to maximize a cumulative "reward." For testing, the agent selects test steps (actions) to achieve a reward (e.g., finding a bug, increasing code coverage, reaching a specific system state).
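To make the agent, action, and reward vocabulary concrete, here is a minimal tabular Q-learning sketch on a toy application. The screen graph, the reward values (+10 for reaching an error screen, a small cost per step), and the hyperparameters are all assumptions chosen for illustration; a real setup would drive an actual UI or API instead of a dictionary.

```python
import random

# Hypothetical app model: screen -> {action: next screen}. "error" stands for a 500 page.
APP = {
    "home": {"open_search": "search", "open_cart": "cart"},
    "search": {"back": "home", "add_item": "cart"},
    "cart": {"back": "home", "checkout": "checkout"},
    "checkout": {"pay_valid_card": "home", "pay_empty_card": "error"},
}
TERMINAL = "error"


def step(state, action):
    """Apply an action; reaching the error screen pays +10, any other step costs 0.1."""
    nxt = APP[state][action]
    return nxt, (10.0 if nxt == TERMINAL else -0.1)


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in acts} for s, acts in APP.items()}
    for _ in range(episodes):
        state = "home"
        for _step in range(20):  # cap the episode length
            actions = list(q[state])
            if rng.random() < epsilon:
                action = rng.choice(actions)             # explore
            else:
                action = max(actions, key=q[state].get)  # exploit
            nxt, reward = step(state, action)
            future = 0.0 if nxt == TERMINAL else max(q[nxt].values())
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            if nxt == TERMINAL:
                break
            state = nxt
    return q


def greedy_path(q, start="home", max_steps=10):
    """Follow the highest-valued action from each screen."""
    path = [start]
    while path[-1] != TERMINAL and len(path) <= max_steps:
        state = path[-1]
        action = max(q[state], key=q[state].get)
        path.append(APP[state][action])
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

After training, the greedy policy takes the shortest route to the defect: home, cart, checkout, then the failing payment action. The per-step cost is what pushes the agent toward short reproduction paths rather than wandering.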

Recent Developments & Technical Deep Dive

- Fuzzing with RL:

- Mechanism: Traditional fuzzing often relies on random input mutation. RL can guide fuzzing engines by learning which input mutations are most effective at exploring new program states, triggering crashes, or uncovering vulnerabilities. The "reward" could be reaching a new code path or causing a program crash.

- Example: An RL agent could learn to intelligently mutate fields in a network packet header, observing the system's response and prioritizing mutations that lead to unique error codes or unexpected behavior, thus discovering vulnerabilities more efficiently than purely random fuzzing.

- Advantage: Significantly enhances the effectiveness of fuzzing, leading to the discovery of more subtle and critical bugs, especially in security testing.

- UI/API Test Path Exploration:

- Mechanism: RL agents can be trained to navigate complex User Interfaces (UIs) or API sequences. The agent interacts with the application (clicks buttons, enters data, makes API calls) and receives rewards for reaching new screens, triggering specific events, or uncovering errors. This mimics exploratory testing but with systematic exploration.

- Example: An RL agent could explore a dynamic web application, learning to fill out forms, interact with AJAX elements, and navigate through different sections. A reward could be given for reaching a previously unvisited page, and a larger reward for triggering a 500 error, since that signals a likely defect.

- Advantage: Automates exploratory testing, discovers hidden states, and uncovers bugs in highly dynamic applications or complex microservice interactions that are difficult to model explicitly.

- Test Case Prioritization:

- Mechanism: RL can learn to prioritize existing test cases or suites based on their historical effectiveness in finding bugs, their coverage of critical code paths, or their execution cost. The agent learns which tests to run first to maximize the chances of early defect detection or to achieve optimal coverage within a time budget.

- Example: Given a large regression suite, an RL agent could analyze past test runs, code change impact, and bug reports. It might learn that tests related to the `Payment` module are frequently failing after changes to the `Inventory` module and prioritize those tests accordingly.

- Advantage: Optimizes testing resources by running only the most impactful tests, saving time and computing resources, especially crucial in CI/CD pipelines.
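The prioritization idea can be approximated with a bandit-style value update, which is a much-simplified stand-in for a full RL agent. In this sketch each test keeps a running estimate of how often it fails, and tests are ranked by estimated failures per second of runtime within a time budget. The test names, durations, and run history are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CaseStats:
    name: str
    duration_s: float
    value: float = 0.0  # running estimate of how likely this test is to fail


def update(stats, failed, alpha=0.3):
    """Move the estimate toward the latest outcome: reward 1 for a failure, 0 for a pass."""
    stats.value += alpha * ((1.0 if failed else 0.0) - stats.value)


def prioritize(tests, time_budget_s):
    """Rank by estimated failures per second, then keep the tests that fit the budget."""
    ranked = sorted(tests, key=lambda t: t.value / t.duration_s, reverse=True)
    selected, used = [], 0.0
    for test in ranked:
        if used + test.duration_s <= time_budget_s:
            selected.append(test.name)
            used += test.duration_s
    return selected


# Hypothetical history of past runs: True means the test failed in that run.
HISTORY = {
    "test_payment_refund": [True, True, False, True],
    "test_inventory_sync": [False, False, True, False],
    "test_profile_update": [False, False, False, False],
}
DURATIONS = {
    "test_payment_refund": 20.0,
    "test_inventory_sync": 10.0,
    "test_profile_update": 5.0,
}


def build_stats():
    """Replay the recorded outcomes to produce current value estimates."""
    tests = [CaseStats(name, DURATIONS[name]) for name in HISTORY]
    for test in tests:
        for failed in HISTORY[test.name]:
            update(test, failed)
    return tests


if __name__ == "__main__":
    print(prioritize(build_stats(), time_budget_s=30))
```

With a 30-second budget the payment and inventory tests are selected and the never-failing profile test is dropped. A fuller agent would also condition on which modules changed, as in the `Payment` and `Inventory` example above.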

Practical Applications

- Automated Exploratory Testing: AI agents can "explore" an application like a human tester, but with greater speed, systematic coverage, and tireless execution.

- Security Testing: Enhancing vulnerability detection through intelligent fuzzing and guided exploration of attack surfaces.

- Resource Optimization: Running only the most impactful tests to save time and computing resources, crucial for rapid feedback loops.

Predictive Analytics for Test Selection and Prioritization

Beyond generating tests, AI excels at making intelligent decisions about which tests to run and where to focus testing efforts.

Concept

Machine learning models analyze vast amounts of historical data—code changes, bug reports, test results, user feedback, static analysis reports—to predict which areas of the software are most likely to contain defects or which tests are most likely to fail.

AI Techniques

- Supervised Learning: Classification models can predict whether a code module is "bug-prone" or "stable." Regression models can predict the number of defects likely to be found.

- Clustering: Grouping similar code changes or bug reports to identify common risk patterns.
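As a small illustration of the supervised case, the sketch below trains a logistic regression classifier in plain Python to estimate whether a module is bug-prone. The two features (normalized code churn and normalized past defect count), the six training rows, and the function names are assumptions; a production setup would more likely use an established ML library and far more data.

```python
import math

# Hypothetical training data: (code churn, past defect count), both scaled to 0..1.
FEATURES = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.6), (0.2, 0.1), (0.1, 0.2), (0.3, 0.1)]
LABELS = [1, 1, 1, 0, 0, 0]  # 1 = module turned out bug-prone, 0 = stable


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Batch gradient descent on log loss; returns (weights, bias)."""
    n_features = len(rows[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def defect_probability(model, features):
    """Predicted probability that a module with these features is bug-prone."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, features)) + bias)


if __name__ == "__main__":
    model = train_logistic(FEATURES, LABELS)
    print(defect_probability(model, (0.85, 0.85)))  # high churn, many past defects
    print(defect_probability(model, (0.15, 0.15)))  # low churn, few past defects
```

The predicted probability can feed directly into risk-based decisions, for example by flagging modules above a chosen threshold for more intensive testing.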

Practical Applications

- Smart Regression Suites: Dynamically selecting a subset of regression tests most relevant to recent code changes. If only the `User Profile` module was changed, the AI might recommend running only tests related to `User Profile` and its immediate dependencies, significantly reducing execution time.

- Risk-Based Testing: Focusing testing efforts on high-risk components or features identified by AI. For example, if a module has a high churn rate in its code and a history of frequent defects, AI can flag it for more intensive testing.

- Early Defect Prediction: Identifying potential defect-prone code modules even before testing begins, allowing developers to proactively review and refactor code, shifting quality left even further.
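The smart regression suite idea reduces, in its simplest form, to a lookup over a dependency graph. The sketch below selects tests for the changed modules plus the modules that import them directly. The module names, the test mapping, and the reading of "immediate dependencies" as direct dependents are assumptions; a learned model would refine this selection with historical failure data.

```python
# Hypothetical project metadata: which modules each module imports,
# and which module each test primarily targets.
DEPENDS_ON = {
    "user_profile": [],
    "notifications": ["user_profile"],
    "checkout": ["payment", "inventory"],
    "payment": [],
    "inventory": [],
}
TEST_TARGETS = {
    "test_profile_edit": "user_profile",
    "test_email_digest": "notifications",
    "test_checkout_flow": "checkout",
    "test_refund": "payment",
}


def impacted_modules(changed):
    """Changed modules plus the modules that depend on them directly (one hop)."""
    changed = set(changed)
    impacted = set(changed)
    for module, deps in DEPENDS_ON.items():
        if changed.intersection(deps):
            impacted.add(module)
    return impacted


def select_tests(changed):
    """Return the tests whose target module is impacted, in a stable order."""
    impacted = impacted_modules(changed)
    return sorted(name for name, target in TEST_TARGETS.items() if target in impacted)


if __name__ == "__main__":
    print(select_tests(["user_profile"]))
```

For a change to `user_profile`, only the profile and notification tests are selected, while the checkout and refund tests are skipped.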

Challenges and Future Directions

While the promise of AI in QA is immense, several challenges must be addressed for widespread adoption:

- Explainability and Trust: AI-generated tests, especially from LLMs, can sometimes be opaque. Testers need to understand why a particular test was generated and trust its relevance. Ensuring transparency and interpretability of AI's decisions is crucial.

- Hallucinations and Irrelevant Tests: Generative AI can occasionally produce nonsensical or irrelevant test cases, requiring human oversight and refinement. Developing better guardrails and validation mechanisms for AI output is an active research area.

- Integration Complexity: Seamlessly integrating AI tools into existing CI/CD pipelines, test management systems, and development workflows can be complex. Standardized APIs and robust tooling are essential.

- Data Requirements: Many AI approaches, especially supervised learning and deep learning, require significant amounts of high-quality historical data for training. Organizations with limited historical data might face initial hurdles.

- Handling Non-Determinism: Testing AI-powered systems themselves, which can exhibit non-deterministic behavior, poses unique challenges for test generation and for the test oracle problem, that is, deciding what the correct output should be.

- Ethical Considerations: Ensuring fairness and mitigating bias when using AI to prioritize tests, especially in critical systems (e.g., healthcare, finance), is paramount. AI models trained on biased data could inadvertently perpetuate or amplify those biases in testing.

Future directions will likely focus on more sophisticated hybrid approaches combining different AI techniques, developing self-healing tests, and creating AI agents that can learn and adapt to evolving system behaviors with minimal human intervention.

Conclusion for AI Practitioners and Enthusiasts

AI-powered test case generation and optimization represent a pivotal moment in how software quality is assured. For AI practitioners, this field offers exciting opportunities to apply cutting-edge techniques like generative models, reinforcement learning, and predictive analytics to solve real-world, high-impact problems. The direct impact on product quality, development speed, and cost efficiency is tangible and measurable.

For enthusiasts, it demonstrates how AI can move beyond "cool demos" into practical, enterprise-level applications that directly contribute to business value. It's a testament to AI's capability to augment human intelligence, allowing testers to focus on critical thinking, complex problem-solving, and exploratory testing, rather than repetitive, manual test case creation.

Staying abreast of developments in this area, particularly the practical applications of LLMs and RL in testing, will be crucial for anyone looking to leverage AI in the software development lifecycle. The future of software quality is intelligent, automated, and driven by AI. Embracing these advancements is not just an option; it's a strategic imperative for building robust, reliable software in an increasingly complex world.