AI-Powered Generative Test Cases & Self-Healing Tests: Redefining Software Quality

Discover how AI is revolutionizing QA and QC by automating test case generation and enabling self-healing test suites. This paradigm shift promises to boost agility, efficiency, and unwavering software quality, moving from manual to autonomous testing.

The relentless pace of modern software development demands agility, efficiency, and unwavering quality. Yet, two persistent challenges often slow down even the most optimized teams: the monumental effort required to craft comprehensive and effective test cases, and the frustrating fragility of test suites that crumble with every UI or API change. Enter the transformative power of AI, ushering in a new era of QA & QC automation with AI-Powered Generative Test Case Generation and Self-Healing Tests. This isn't just an incremental improvement; it's a paradigm shift, promising to redefine how we ensure software quality.

The Evolution of QA: From Manual to Autonomous

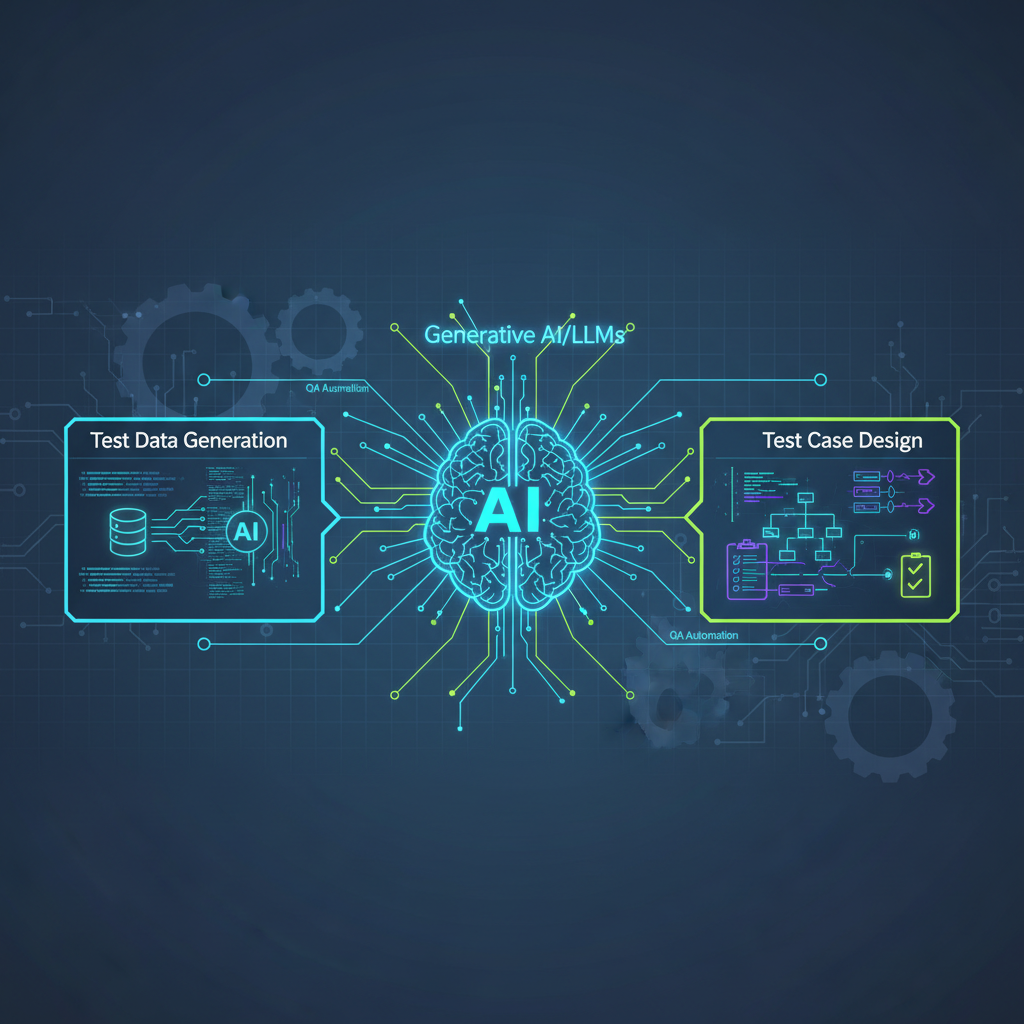

For decades, software testing has been a critical but often resource-intensive bottleneck. Manual testing is slow and prone to human error, while traditional automation, though faster, requires significant upfront investment and constant maintenance. The vision of truly intelligent QA has long been a holy grail, and with recent advancements in Artificial Intelligence, particularly Generative AI, Large Language Models (LLMs), and Reinforcement Learning (RL), that vision is rapidly becoming a reality. We're moving beyond simple test execution and defect prediction towards systems that can intelligently create and adapt tests, fundamentally altering the economics and effectiveness of quality assurance.

Generative Test Case Generation (GTCG): Crafting Tests with AI Intelligence

The burden of creating exhaustive test cases for every new feature, every edge case, and every potential user interaction is immense. GTCG aims to alleviate this by leveraging AI to automatically generate new, diverse, and highly effective test cases from various inputs.

LLMs for Intelligent Test Scenario and Data Generation

Powerful LLMs such as GPT-4 and Claude, along with specialized code models, have made GTCG practical. Because these models can interpret natural-language requirements and generate structured text, they can draft test scenarios, test data, and test code directly from a description of the application.

- How it Works: LLMs are prompted with application context – this could be natural language requirements documents, user stories, design specifications, or even existing code comments. They can then infer user intent, identify potential interactions, and generate detailed test scenarios.

- Example: Imagine feeding an LLM a user story like: "As a registered user, I want to be able to reset my password securely so I can regain access to my account." The LLM can then generate Gherkin-style scenarios:

```gherkin
Feature: Password Reset Functionality

  Scenario: Successful password reset with valid email
    Given I am on the "Forgot Password" page
    And I enter a registered email address "[email protected]"
    When I click the "Reset Password" button
    Then I should see a message "Password reset link sent to your email."
    And an email with a reset link should be sent to "[email protected]"

  Scenario: Attempt password reset with unregistered email
    Given I am on the "Forgot Password" page
    When I enter an unregistered email address "[email protected]"
    And I click the "Reset Password" button
    Then I should see an error message "Email address not found."
    And no email should be sent

  Scenario: Password reset link expiration
    Given I requested a password reset link for "[email protected]"
    And 61 minutes have passed since the link was sent
    When I click the password reset link from the email
    Then I should be redirected to the "Forgot Password" page
    And I should see a message "Your password reset link has expired. Please request a new one."
```


- Beyond Scenarios: LLMs can also generate synthetic test data (e.g., valid/invalid inputs for forms, diverse user profiles, boundary values) and even initial code snippets for popular automation frameworks like Playwright, Selenium, or Cypress, significantly accelerating test development.

- Practical Impact: This capability drastically reduces the manual effort involved in writing initial test cases, especially for complex business logic or elusive edge cases. It also helps achieve higher test coverage earlier in the development lifecycle, shifting quality left.
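The prompting workflow described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the prompt wording, the function names, and the `fake_llm` stand-in are all assumptions made for the example, and in practice `complete` would wrap whichever model API a team actually uses.

```python
import re
from typing import Callable, List

# Hypothetical prompt wording; real prompts are usually tuned per project.
PROMPT_TEMPLATE = """You are a QA engineer. Write Gherkin scenarios for the user story below.
Cover the happy path, invalid input, and at least one edge case.
Return only Gherkin text.

User story:
{story}
"""


def build_prompt(user_story: str) -> str:
    """Embed the user story in the instruction template."""
    return PROMPT_TEMPLATE.format(story=user_story.strip())


def extract_scenario_titles(gherkin_text: str) -> List[str]:
    """Pull scenario names out of the model reply so a human can review them."""
    pattern = r"^\s*Scenario(?: Outline)?:\s*(.+)$"
    return [m.group(1).strip() for m in re.finditer(pattern, gherkin_text, re.MULTILINE)]


def generate_scenarios(user_story: str, complete: Callable[[str], str]) -> List[str]:
    """Send the prompt through `complete` (any text-in, text-out model call)."""
    reply = complete(build_prompt(user_story))
    titles = extract_scenario_titles(reply)
    if not titles:
        raise ValueError("model reply contained no Gherkin scenarios")
    return titles


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to keep the sketch runnable."""
    return (
        'Feature: Password Reset Functionality\n'
        '  Scenario: Successful password reset with valid email\n'
        '    Given I am on the "Forgot Password" page\n'
        '  Scenario: Password reset link expiration\n'
        '    Given I requested a password reset link\n'
    )
```

Passing the model call in as a plain function keeps the generation logic testable offline and makes it easy to reject replies that contain no scenarios before they reach the test suite.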

Model-Based Testing (MBT) with AI Enhancements

Traditional MBT involves creating an abstract model of the system under test (SUT) and then generating test cases from that model. AI elevates MBT by automating or refining the model creation process itself.

- How it Works: AI algorithms analyze diverse data sources such as application logs, network traffic, API documentation (e.g., OpenAPI specifications), and even source code to construct a behavioral model of the application. Reinforcement Learning (RL) agents can actively explore the SUT, interacting with it to discover states, transitions, and potential paths, effectively building a dynamic model on the fly. From this inferred model, test cases are generated to cover identified paths, including boundary conditions, error states, and complex sequences.

- Example: For an e-commerce checkout flow, an AI might analyze user session logs and API calls to infer a state machine (Product Page -> Cart -> Shipping Info -> Payment -> Order Confirmation). It can then generate tests for each transition, and also for invalid transitions (e.g., trying to go from Payment directly to Product Page without completing the order).

- Practical Impact: This is particularly powerful for complex, stateful applications where manually building and maintaining a model is prohibitively expensive. It helps uncover hidden paths and potential deadlocks that human testers might overlook.
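Once a model like the checkout state machine has been inferred, deriving test targets from it is mechanical. The sketch below assumes the model is already available as a simple adjacency mapping (the inference step itself is out of scope here), and the function names are illustrative.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical inferred model: each state maps to the states reachable in one step.
CHECKOUT_MODEL: Dict[str, List[str]] = {
    "Product Page": ["Cart"],
    "Cart": ["Shipping Info"],
    "Shipping Info": ["Payment"],
    "Payment": ["Order Confirmation"],
    "Order Confirmation": [],
}


def valid_transitions(model: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Every edge in the model is a positive test: the step should succeed."""
    return [(src, dst) for src, targets in model.items() for dst in targets]


def invalid_transitions(model: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Every missing edge is a negative test: the app should refuse the jump."""
    allowed = set(valid_transitions(model))
    return [
        (src, dst)
        for src in model
        for dst in model
        if src != dst and (src, dst) not in allowed
    ]


def paths_from(model: Dict[str, List[str]], start: str,
               path: Optional[List[str]] = None) -> List[List[str]]:
    """Enumerate acyclic end-to-end paths with a depth-first search."""
    path = (path or []) + [start]
    next_states = [s for s in model[start] if s not in path]
    if not next_states:
        return [path]
    found: List[List[str]] = []
    for state in next_states:
        found.extend(paths_from(model, state, path))
    return found
```

For this five-state model the sketch yields 4 positive transition tests and 16 negative ones, including the Payment to Product Page jump mentioned above.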

Fuzz Testing with AI Guidance

Fuzz testing, a technique for finding software bugs by feeding programs with invalid, unexpected, or random data, gets a significant upgrade with AI.

- How it Works: Instead of purely random input generation, AI (especially evolutionary algorithms and machine learning) intelligently guides fuzzers towards vulnerable code paths or interesting application states. AI models learn from past crashes, unusual application behavior, or code coverage metrics to generate inputs that are more likely to expose new defects or security vulnerabilities. This transforms "dumb fuzzing" into "smart fuzzing."

- Example: An AI-guided fuzzer might learn that inputs containing specific character sequences or malformed JSON structures previously led to crashes in an API endpoint. It would then prioritize generating variations of these "interesting" inputs, rather than completely random strings, to explore similar vulnerabilities.

- Practical Impact: Highly effective for security testing and uncovering obscure bugs in parsers, network protocols, and input validation logic, providing a more efficient and targeted approach to vulnerability discovery.
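The feedback loop behind guided fuzzing can be illustrated with a toy example. The sketch below is a deliberately simplified, deterministic stand-in for a real fuzzer: it uses coverage feedback rather than a learned model, extends inputs one character at a time instead of mutating randomly, and targets a hypothetical `toy_target` function with a planted bug. The core idea is the same, though: inputs that reach new code are kept and used as the basis for further inputs.

```python
from typing import Callable, List, Set, Tuple

ALPHABET = '{}"a:1'  # tiny, hypothetical input alphabet


def toy_target(data: str) -> Set[str]:
    """Hypothetical system under test; returns the branch IDs it executed."""
    covered = {"entry"}
    if data.startswith("{"):
        covered.add("open_brace")
        if data.startswith('{"'):
            covered.add("key_quote")
            if data.startswith('{"a'):
                covered.add("key_char")
                if data.startswith('{"a}'):
                    raise ValueError("unterminated key")  # planted bug
    return covered


def guided_fuzz(target: Callable[[str], Set[str]], seeds: List[str],
                max_rounds: int = 10) -> Tuple[List[str], List[str]]:
    """Grow a corpus by keeping only inputs that reach previously unseen branches."""
    corpus = list(seeds)
    seen_coverage: Set[str] = set()
    crashes: List[str] = []
    for _ in range(max_rounds):
        discovered: List[str] = []
        for base in corpus:
            for ch in ALPHABET:
                candidate = base + ch
                try:
                    coverage = target(candidate)
                except Exception:
                    if candidate not in crashes:
                        crashes.append(candidate)
                    continue
                if not coverage <= seen_coverage:  # reached a new branch
                    seen_coverage |= coverage
                    discovered.append(candidate)
        if not discovered:
            break  # no input reached new code, so stop
        corpus.extend(discovered)
    return corpus, crashes
```

Starting from an empty seed, the loop builds up `{`, `{"`, and `{"a` step by step and then hits the planted crash at `{"a}`. Production fuzzers such as AFL++ apply the same keep-what-is-interesting principle with far richer mutation strategies and instrumentation.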

Self-Healing Tests: Building Resilient Automation

One of the biggest pain points in test automation is maintenance. Minor UI changes, API refactors, or even simple locator changes can cause a cascade of test failures, leading to significant time spent fixing tests rather than writing new ones. Self-healing tests leverage AI to automatically adapt and repair existing test scripts when the underlying application changes, dramatically reducing maintenance overhead.

Visual AI and Computer Vision for UI Element Detection

Traditional UI test automation relies heavily on brittle locators (XPath, CSS selectors, IDs). When these change, tests break. Visual AI offers a robust alternative.

- How it Works: Modern AI-powered testing tools use computer vision and deep learning models to identify UI elements (buttons, text fields, links) by their visual appearance, text content, and contextual relationships, rather than solely by their underlying technical properties. When an element's locator changes (e.g., a developer renames an ID or changes a CSS class), the tool scans the rendered screen, compares it to a baseline image, and looks for the element that best matches the original in appearance, text, and proximity to other elements. It can then automatically update the test script's locator or suggest a new one.

- Example: A test script might be looking for a "Submit" button using `id="submitButton"`. If a developer changes the ID to `id="sendForm"`, the test would fail. With visual AI, the system would still "see" a button labeled "Submit" in the same general area, understand its function, and automatically remap the action to the newly identified element, or even update the locator in the test script.

- Practical Impact: This drastically reduces test maintenance time caused by minor UI changes, a common occurrence in Agile/DevOps environments with frequent deployments. It frees testers to focus on validating new functionality rather than constantly fixing old tests.
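The fallback logic behind a self-healing locator can be sketched without any computer vision at all. The example below is an assumption-heavy simplification: elements are plain records rather than screenshots, and the `similarity` weights, the 200-pixel distance scale, and the 0.7 threshold are arbitrary values chosen for illustration. A real tool would replace the scoring function with a trained visual model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    element_id: str
    tag: str
    text: str
    x: int  # position on screen, in pixels
    y: int


def similarity(baseline: Element, candidate: Element) -> float:
    """Score how closely a candidate resembles the element recorded at baseline."""
    score = 0.0
    if candidate.tag == baseline.tag:
        score += 0.3
    if candidate.text.strip().lower() == baseline.text.strip().lower():
        score += 0.5
    distance = abs(candidate.x - baseline.x) + abs(candidate.y - baseline.y)
    score += 0.2 * max(0.0, 1.0 - distance / 200.0)  # nearby elements score higher
    return score


def find_with_healing(page: List[Element], baseline: Element,
                      threshold: float = 0.7) -> Optional[Element]:
    """Try the recorded ID first; if it is gone, fall back to the best look-alike."""
    for element in page:
        if element.element_id == baseline.element_id:
            return element
    best = max(page, key=lambda el: similarity(baseline, el), default=None)
    if best is not None and similarity(baseline, best) >= threshold:
        return best  # healed: the caller can update the stored locator
    return None


# The scenario from the example above: the ID changed, the button did not.
baseline = Element("submitButton", "button", "Submit", 400, 600)
page = [
    Element("cancelForm", "button", "Cancel", 300, 600),
    Element("sendForm", "button", "Submit", 410, 605),
]
healed = find_with_healing(page, baseline)  # resolves to the "sendForm" element
```

The threshold matters: set too low, the test silently clicks the wrong element; set too high, healing never triggers. Logging every healed locator for human review is a common safeguard.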

Semantic Understanding for API and Business Logic Resilience

Self-healing isn't limited to the UI. AI can extend this resilience to the backend by understanding the intent behind API calls or business processes.

- How it Works: Using Natural Language Processing (NLP) and graph neural networks, AI can map the functional purpose of a test step to the application's underlying logic. If an API endpoint changes (e.g., `/users/create` becomes `/api/v2/user_management/add_new_user`), the AI, understanding the semantic intent of "creating a user," can suggest or automatically update the API call within the test script. Similarly, if a sequence of business steps is altered (e.g., an extra confirmation step is added), the AI can infer the new correct path.

- Example: An API test might call `/api/v1/products/add` with a JSON payload. If the API is refactored to `/api/v2/inventory/items` and requires a slightly different payload structure, an AI with semantic understanding could recognize that both endpoints serve the purpose of "adding a product." It could then suggest or automatically apply the necessary changes to the endpoint URL and payload structure in the test script.

- Practical Impact: This extends self-healing beyond the UI to the backend, making API and integration tests significantly more robust and less prone to breaking due to refactors.
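Intent matching of this kind can be approximated very roughly with token overlap. The sketch below is not how a production system would do it (embeddings or a trained model would replace the Jaccard score), and the operation summaries in `NEW_SPEC` are invented for illustration, loosely in the shape of OpenAPI `summary` fields. It shows only the control flow: describe the intent of the test step, score each operation in the new spec, and suggest the best match above a threshold.

```python
import re
from typing import Dict, Optional, Set, Tuple

Operation = Tuple[str, str]  # (HTTP method, path)
STOPWORDS = {"a", "an", "the", "to", "in", "from", "of"}

# Hypothetical summaries for the refactored API.
NEW_SPEC: Dict[Operation, str] = {
    ("POST", "/api/v2/inventory/items"): "Add a product to inventory",
    ("GET", "/api/v2/inventory/items"): "List products in inventory",
    ("DELETE", "/api/v2/inventory/items/{id}"): "Remove a product from inventory",
}


def tokens(text: str) -> Set[str]:
    """Lowercase word set with filler words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two token sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_operation(intent: str, spec: Dict[Operation, str],
                      threshold: float = 0.5) -> Optional[Operation]:
    """Return the operation whose summary best matches the test step's intent."""
    intent_tokens = tokens(intent)
    best: Optional[Operation] = None
    best_score = 0.0
    for operation, summary in spec.items():
        score = jaccard(intent_tokens, tokens(summary))
        if score > best_score:
            best, best_score = operation, score
    return best if best_score >= threshold else None
```

With the intent "Add a product", the sketch suggests `POST /api/v2/inventory/items`. Returning `None` below the threshold is deliberate: an unhealed, failing test is safer than one silently rewired to the wrong endpoint.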

Reinforcement Learning for Adaptive Test Paths

For complex user journeys where the exact steps might vary or evolve, Reinforcement Learning (RL) offers a powerful solution for adaptive test paths.

- How it Works: RL agents can be trained to navigate an application, learning the optimal sequence of actions to achieve a certain test goal. The agent interacts with the application, receiving "rewards" for successful navigation and "penalties" for errors or dead ends. Over time, it learns a policy that allows it to adapt to changes in the application's flow or UI. If a path becomes blocked or changes, the agent can explore and find a new valid sequence of actions to reach its objective.

- Example: Consider a multi-step checkout process. If a new promotional pop-up appears or an intermediate confirmation screen is added, an RL agent, trained to complete a purchase, would learn to interact with or dismiss these new elements to continue its journey, rather than failing because of an unexpected UI element.

- Practical Impact: Excellent for critical, complex user journeys that are prone to frequent updates, ensuring that core workflows remain testable even as the application evolves.
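The reward-driven adaptation described above can be demonstrated with tabular Q-learning on a toy version of the checkout example. Everything in this sketch is an assumption made for illustration: the four-state flow, the two actions, the reward values, and the hyperparameters. Real applications have far larger state spaces and typically need function approximation rather than a lookup table.

```python
import random
from typing import Dict, Tuple

ACTIONS = ["continue", "dismiss_popup"]
STATES = ["cart", "shipping", "popup", "payment"]
GOAL = "confirmation"

# Hypothetical checkout flow after a promotional pop-up was added before payment.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("cart", "continue"): "shipping",
    ("shipping", "continue"): "popup",
    ("popup", "dismiss_popup"): "payment",
    ("payment", "continue"): GOAL,
}


def step(state: str, action: str) -> Tuple[str, float]:
    """Apply an action; actions with no effect leave the state unchanged."""
    next_state = TRANSITIONS.get((state, action), state)
    reward = 10.0 if next_state == GOAL else -1.0  # every wasted step costs 1
    return next_state, reward


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> Dict[Tuple[str, str], float]:
    """Learn action values with epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = "cart"
        for _ in range(50):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward = step(state, action)
            future = 0.0 if next_state == GOAL else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
            if state == GOAL:
                break
    return q


def greedy_policy(q: Dict[Tuple[str, str], float]) -> Dict[str, str]:
    """Pick the highest-valued action in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
```

After training, the greedy policy continues through the cart, shipping, and payment steps and dismisses the pop-up when it appears. The agent was never told the pop-up exists; it learned that dismissing it is the only way to reach the reward.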

Practical Applications for AI Practitioners and Enthusiasts

The implications of AI-powered generative and self-healing tests are profound, offering tangible benefits across the software development lifecycle.

- Accelerated Test Development:

  - Scenario: A new feature is being developed, involving complex user interactions and data validations. Instead of manually writing hundreds of test cases and generating diverse test data, an AI (LLM) can ingest the feature's requirements, user stories, and API documentation. It then generates a comprehensive suite of functional, integration, and even performance test scenarios, complete with Gherkin steps and synthetic data, in a fraction of the time.

  - Tooling: Explore integrating LLM APIs (e.g., OpenAI, Hugging Face models) with test frameworks like Playwright or Cypress. Prompt engineering becomes a key skill to guide the AI in generating high-quality, relevant test code.

- Reduced Test Maintenance Overhead:

  - Scenario: A development team frequently refactors UI components or API endpoints as part of continuous delivery. Traditionally, this leads to a cascade of failing tests and significant time spent updating locators or API calls. With self-healing tests, the automation suite automatically adapts to these changes. Testers can then focus their expertise on validating new features and exploring complex scenarios, rather than endlessly fixing brittle tests.

  - Tooling: Investigate commercial tools like Applitools Eyes, Testim.io, or open-source projects exploring visual testing with libraries like OpenCV or TensorFlow for visual element recognition and self-healing capabilities.

- Enhanced Test Coverage and Bug Discovery:

  - Scenario: AI-driven model inference and intelligent fuzzing can explore application states and input combinations that human testers or traditional automation might miss. This leads to the discovery of obscure bugs, performance bottlenecks, and security vulnerabilities that would otherwise remain hidden.

  - Tooling: Experiment with property-based testing libraries (e.g., Hypothesis in Python) and integrate AI-guided fuzzers (e.g., AFL++, libFuzzer with ML enhancements, or specialized commercial fuzzing solutions).

- Smart Test Prioritization:

  - Scenario: In large projects with thousands of tests, running the entire suite after every small code change is inefficient. AI can analyze code changes, commit history, and historical defect data to identify which tests are most relevant to the current changes and prioritize their execution. This ensures faster feedback loops and optimizes CI/CD pipeline efficiency.

  - Tooling: Implement machine learning models (e.g., classification algorithms like Random Forest or Gradient Boosting) to predict test failure likelihood based on factors like code churn, developer activity, and historical defect patterns. Integrate these models into your CI/CD system to dynamically select and prioritize tests.

- Automated Accessibility Testing:

  - Scenario: Ensuring software is accessible to all users is crucial but often overlooked. AI can significantly enhance accessibility testing by analyzing UI components for compliance with accessibility standards (e.g., contrast ratios, missing alt text, keyboard navigability). It can visually inspect the UI and analyze the DOM, going beyond simple static analysis to identify real-world accessibility issues.

  - Tooling: Explore the integration of visual AI with established accessibility checkers like axe-core or Lighthouse. AI can help automate the visual verification aspects that these tools might miss or that would otherwise require manual validation.
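To make the smart test prioritization idea above more concrete, here is a minimal ranking sketch. It uses a hand-weighted score instead of a trained classifier, so it should be read as a baseline a learned model would replace: the `HistoryRecord` fields, the 0.7/0.3 weights, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class HistoryRecord:
    name: str
    covered_files: Set[str]  # files the test is known to exercise
    recent_runs: int
    recent_failures: int


def priority(test: HistoryRecord, changed_files: Set[str],
             w_overlap: float = 0.7, w_history: float = 0.3) -> float:
    """Combine change relevance with historical flakiness or failure rate."""
    overlap = (
        len(test.covered_files & changed_files) / len(changed_files)
        if changed_files else 0.0
    )
    failure_rate = test.recent_failures / test.recent_runs if test.recent_runs else 0.0
    return w_overlap * overlap + w_history * failure_rate


def prioritize(tests: List[HistoryRecord], changed_files: Set[str]) -> List[HistoryRecord]:
    """Order tests so the most relevant ones run first."""
    return sorted(tests, key=lambda t: priority(t, changed_files), reverse=True)


# Hypothetical suite and a commit that touched only cart.py.
suite = [
    HistoryRecord("test_search", {"search.py"}, 20, 0),
    HistoryRecord("test_login", {"auth.py"}, 20, 10),
    HistoryRecord("test_checkout", {"cart.py", "payment.py"}, 20, 2),
]
ordered = [t.name for t in prioritize(suite, {"cart.py"})]
# ordered == ["test_checkout", "test_login", "test_search"]
```

A trained model would learn these weights from data and could add features such as code churn or author history, but the integration point in the CI/CD pipeline stays the same: score, sort, and run the top of the list first.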

Challenges and Future Directions

While the promise of AI in QA is immense, several challenges need to be addressed for widespread adoption and maximum impact.

- Explainability and Trust: One of the biggest hurdles is the "black box" nature of some AI models. Understanding why an AI generated a particular test case, suggested a specific repair, or flagged a certain defect is crucial for developer trust, effective debugging, and regulatory compliance. Future research needs to focus on more interpretable AI models.

- False Positives/Negatives: AI models, especially in their nascent stages, can still misinterpret UI changes, generate irrelevant test cases, or miss critical defects. Continual refinement of models, robust validation processes, and human oversight remain essential to mitigate false positives and negatives.

- Data Dependency: Training robust AI models for self-healing and generation requires large, diverse, and high-quality datasets of application states, test scripts, user interaction logs, and historical defect data. Acquiring and curating such data can be a significant undertaking for many organizations.

- Integration Complexity: Seamlessly integrating these advanced AI capabilities into existing CI/CD pipelines, test automation frameworks, and development workflows remains a significant engineering challenge. Standardized APIs and modular architectures will be key.

- Ethical Considerations: As AI takes on more responsibility, we must ensure that AI-generated tests don't inadvertently introduce biases, overlook critical user groups, or perpetuate existing flaws in the system. Ethical AI development principles must be applied to QA.

Conclusion

AI-Powered Generative Test Case Generation and Self-Healing Tests represent a pivotal moment in the evolution of QA & QC automation. By intelligently automating the traditionally tedious and error-prone tasks of test creation and maintenance, AI empowers development teams to deliver higher quality software faster, with greater confidence, and significantly reduce the technical debt associated with fragile test suites. This field is ripe with innovation, offering ample opportunities for AI practitioners and enthusiasts to research, develop new tools, and implement transformative solutions in modern software development lifecycles. The future of quality assurance is not just automated; it's intelligent, adaptive, and self-improving.