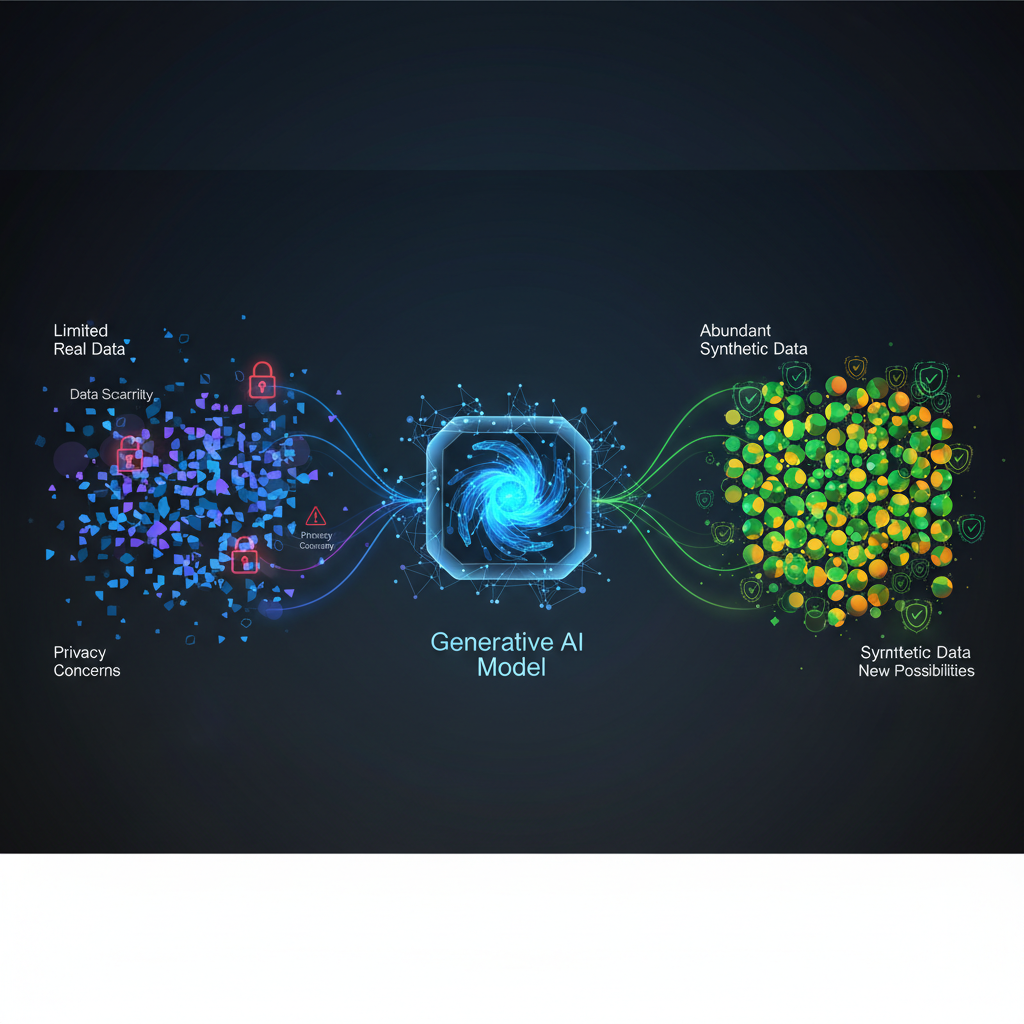

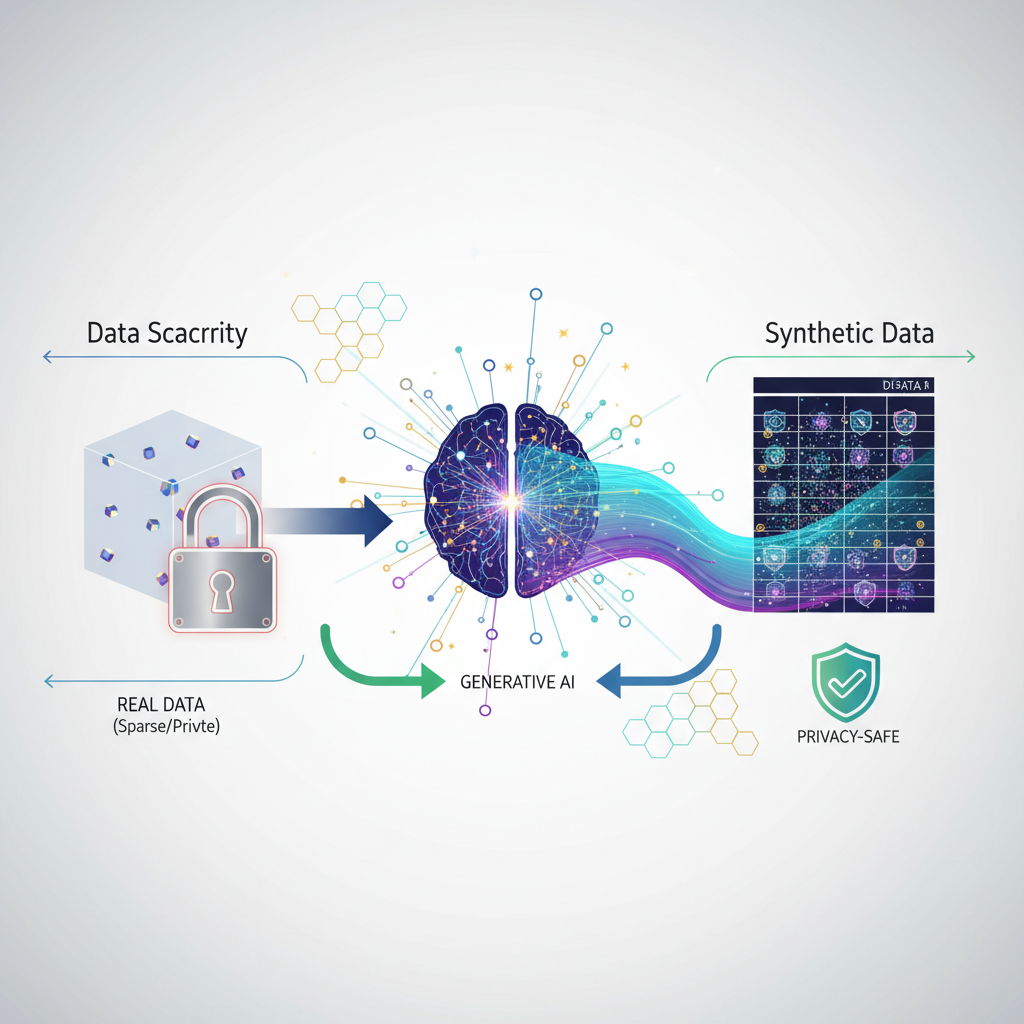

Generative AI for Synthetic Data: Solving Data Scarcity and Privacy Challenges

Discover how Generative AI is revolutionizing data creation by producing artificial datasets that mimic real-world data, addressing critical issues of data scarcity and privacy in AI development.

The world of artificial intelligence is hungry for data. From training groundbreaking large language models to refining computer vision systems, the mantra has always been: "more data, better models." Yet this appetite runs headlong into two significant modern challenges: data scarcity and stringent privacy regulations. High-quality, diverse, and representative real-world data is often hard to come by, expensive to acquire, or legally restricted because it contains sensitive information.

Enter generative AI for synthetic data generation. This burgeoning field uses advanced generative models to create artificial datasets that statistically resemble real-world data while aiming to contain none of the actual sensitive records they were learned from. It's not just a niche application; it's rapidly becoming a critical pillar for building robust, ethical, and scalable AI solutions in an increasingly data-constrained and privacy-conscious world.

The Data Dilemma: Scarcity Meets Privacy

The past few years have witnessed an unprecedented surge in the capabilities of AI, largely fueled by the development of sophisticated deep learning architectures and the availability of massive datasets. However, this progress has exposed several vulnerabilities:

- Data Scarcity: For many real-world applications – especially in specialized domains like rare disease detection, fraud prevention, or industrial anomaly detection – obtaining sufficient labeled data is a monumental task. Manual annotation is costly and time-consuming, and some events are simply too infrequent to capture adequately.

- Data Quality and Diversity: Even when data is available, it might be imbalanced, biased, or lack the diversity needed for a model to generalize effectively across various scenarios.

- Privacy Regulations: Laws like GDPR, CCPA, and HIPAA have fundamentally reshaped how organizations handle personal and sensitive data. Sharing, processing, and even storing real data for AI development now carries significant legal and ethical risks, often leading to data silos and hindering collaboration.

- Security Concerns: Real data, especially in testing and development environments, poses a security risk. A breach could expose sensitive information, leading to reputational damage and financial penalties.

Synthetic data generation offers a compelling solution to these challenges, providing a bridge between the need for data and the imperative for privacy and security.

The Generative AI Revolution: Powering Synthetic Data

The ability to create realistic synthetic data has been dramatically accelerated by breakthroughs in generative AI. These models learn the underlying patterns and distributions of real data and then use this learned knowledge to generate new, unseen examples that share those characteristics. Let's explore the key players:

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, consist of two neural networks, a generator and a discriminator, locked in a zero-sum game.

- Generator: Tries to create synthetic data that looks real enough to fool the discriminator.

- Discriminator: Tries to distinguish between real data and the generator's synthetic data.

Through this adversarial process, both networks improve. The generator learns to produce increasingly realistic data, while the discriminator becomes better at identifying fakes.
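The adversarial game above is usually written as a minimax objective over a value function V(D, G), where D(x) is the discriminator's estimated probability that x is real and G(z) maps a random noise vector z to a synthetic sample. This is the formulation from the original 2014 paper:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator pushes V up by scoring real samples near 1 and fakes near 0; the generator pushes it down by driving D(G(z)) toward 1. In practice the generator is often trained to maximize log D(G(z)) instead, which gives stronger gradients early in training.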

Technical Details:

- Architecture: Typically uses deep convolutional neural networks (CNNs) for image generation, but can adapt to other data types.

- Variants:

  - Conditional GANs (cGANs): Generate samples conditioned on extra information such as a class label (e.g., generating a specific digit in MNIST).

  - StyleGAN: Known for highly realistic image synthesis with fine-grained control over style attributes.

  - BigGAN: Capable of generating high-fidelity images at high resolutions.

- Strengths: Can produce incredibly sharp and realistic outputs, especially for images.

- Challenges: Prone to "mode collapse" (where the generator produces only a limited variety of outputs) and notoriously difficult to train stably.

Example (Tabular Data):

Consider a dataset of customer transactions. A GAN can learn the correlations between features like age, income, transaction_amount, and product_category. The generator would then produce new synthetic customer profiles and transactions that maintain these statistical relationships, making them useful for training fraud detection models without exposing real customer details.
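One way to check that a tabular generator has preserved relationships like these is to compare pairwise correlations in the real and synthetic tables. This is a minimal sketch, assuming numeric columns stored as dictionaries; the helper names and the toy rows are hypothetical, and a categorical column such as product_category would need a different measure (for example, comparing frequency tables).

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric lists (assumes neither is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def max_correlation_gap(real_rows, synth_rows, columns):
    """Largest absolute difference between real and synthetic pairwise correlations."""
    gap = 0.0
    for i, a in enumerate(columns):
        for b in columns[i + 1:]:
            r_real = pearson([r[a] for r in real_rows], [r[b] for r in real_rows])
            r_synth = pearson([r[a] for r in synth_rows], [r[b] for r in synth_rows])
            gap = max(gap, abs(r_real - r_synth))
    return gap


# Hypothetical toy rows; a real check would use the full tables
real = [
    {"age": 22, "income": 28_000, "transaction_amount": 35.0},
    {"age": 35, "income": 52_000, "transaction_amount": 80.0},
    {"age": 47, "income": 61_000, "transaction_amount": 95.0},
    {"age": 58, "income": 75_000, "transaction_amount": 120.0},
]
synth = [
    {"age": 25, "income": 31_000, "transaction_amount": 42.0},
    {"age": 33, "income": 49_000, "transaction_amount": 70.0},
    {"age": 50, "income": 66_000, "transaction_amount": 110.0},
    {"age": 61, "income": 72_000, "transaction_amount": 115.0},
]
columns = ["age", "income", "transaction_amount"]
print(f"max correlation gap: {max_correlation_gap(real, synth, columns):.3f}")
```

A gap near 0 means the synthetic rows reproduce the pairwise linear relationships; it says nothing about non-linear dependencies or privacy.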

2. Variational Autoencoders (VAEs)

VAEs are a type of generative model that learn a compressed, latent representation of the input data. They consist of an encoder and a decoder.

- Encoder: Maps input data to a lower-dimensional latent space, typically by outputting parameters (mean and variance) of a probability distribution.

- Decoder: Maps a latent vector sampled from this distribution back to data space, reconstructing the original input.

By sampling from the learned latent space, VAEs can generate new, diverse data points.
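The two pieces that make VAE sampling work can be sketched in plain Python. This is a minimal sketch, assuming the usual textbook setup of a diagonal Gaussian posterior (the encoder outputs a mean and log-variance per latent dimension) and a standard normal prior; the function names and encoder outputs are hypothetical, and the encoder and decoder networks themselves are omitted.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension.

    Writing the sample this way (the "reparameterization trick") keeps z a
    differentiable function of mu and log_var during training.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one record, 3-dimensional latent space
mu, log_var = [0.5, -1.0, 0.0], [0.0, -0.5, 0.2]
rng = random.Random(42)
z = reparameterize(mu, log_var, rng)  # during training, z is fed to the decoder
print(len(z), round(kl_to_standard_normal(mu, log_var), 4))

# Generating new data: draw z from the prior N(0, I) and decode it
z_new = [rng.gauss(0.0, 1.0) for _ in range(3)]
```

During training the KL term is added to a reconstruction loss; it pulls the latent distribution toward the prior, which is what makes decoding samples drawn from N(0, I) produce plausible new records.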

Technical Details:

- Architecture: Often uses fully connected layers or CNNs.

- Strengths: More stable to train than GANs, and they provide a well-structured latent space that can be used for interpolation and for understanding variations in the data.

- Challenges: Outputs can sometimes be blurrier or less sharp than GANs, particularly for complex data like high-resolution images.

Example (Healthcare Data): A VAE could be trained on electronic health records (EHRs) containing patient demographics, diagnoses, and treatment plans. The latent space would capture underlying patient characteristics. By sampling from this space and decoding, researchers could generate synthetic patient records for studying disease progression or testing new treatment algorithms without sharing real patient records, although the trained model should still be checked for memorization of individual patients.

3. Diffusion Models

Diffusion models are currently the state of the art for high-fidelity image generation and are widely used for audio. They work by progressively adding noise to data until it becomes pure noise, and then learning to reverse this process.

- Forward Diffusion: Gradually adds Gaussian noise to an image over many small steps, transforming it into random noise.

- Reverse Diffusion: A neural network (often a U-Net) learns to denoise the data step-by-step, starting from pure noise and gradually reconstructing a coherent image.
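The forward process has a convenient closed form: because every step adds independent Gaussian noise, a noisy version x_t can be drawn directly from the clean input x_0 without simulating the intermediate steps. A minimal sketch in plain Python, assuming the linear noise schedule used in the original DDPM paper (beta rising from 1e-4 to 0.02 over 1,000 steps); the function names and the four-value "image" are illustrative, and the learned reverse (denoising) network is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): the share of signal variance left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I) in one shot."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.8, -0.3, 0.5, 0.1]  # toy 4-pixel image scaled to [-1, 1]
x_early = noisy_sample(x0, 10, alpha_bars, rng)  # still close to x0
x_late = noisy_sample(x0, 999, alpha_bars, rng)  # almost pure Gaussian noise
print(round(alpha_bars[10], 4), f"{alpha_bars[999]:.2e}")
```

Training pairs produced this way (x_t, the step t, and the noise that was added) are what the denoising network learns from; generation then runs the learned reverse steps starting from pure noise.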

Technical Details:

- Architecture: The denoising network is typically a U-Net, although transformer-based denoisers are also used.

- Strengths: Produce exceptionally high-quality and diverse outputs, are stable to train, and are much less prone to mode collapse than GANs.

- Challenges: Can be computationally intensive during inference (generation), requiring many sequential denoising steps.

Example (Satellite Imagery): Diffusion models can generate synthetic satellite images for training land-use classification models. If real satellite data is scarce for certain regions or specific events (e.g., disaster zones), synthetic images can augment the training set, allowing models to better identify features like infrastructure damage or deforestation patterns.

4. Large Language Models (LLMs)

While primarily known for text generation, LLMs such as GPT-3/4 and Llama are versatile tools for synthetic data generation, especially for text, structured records, code, and dialogue. Because they understand context and follow instructions, practitioners can specify exactly what kind of data to produce.

Technical Details:

- Architecture: Transformer-based, leveraging self-attention mechanisms.

- Strengths: Excellent at generating coherent and contextually relevant text, can follow complex instructions, and generate diverse outputs.

- Challenges: Can "hallucinate" or generate factually incorrect information, may perpetuate biases present in their training data, and are computationally expensive.

Example (Synthetic Customer Reviews): An LLM can be prompted to generate synthetic customer reviews for a new product, specifying sentiment, length, and key features to mention. This synthetic data can then be used to train sentiment analysis models or product recommendation systems before the product even launches, providing early insights and reducing reliance on initial real reviews.

```python
# Example of using an LLM to generate a synthetic review.
# Assumes the official `openai` Python package (v1.x) is installed and the
# OPENAI_API_KEY environment variable is set; a similar client library works too.
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def generate_synthetic_review(product_name, sentiment, features_to_mention):
    prompt = (
        f"Write a {sentiment} customer review for the '{product_name}'. "
        f"Make sure to mention: {', '.join(features_to_mention)}. "
        f"The review should be realistic and about 100 words long."
    )
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # example model name; use any available chat model
        messages=[{"role": "user", "content": prompt}],
        max_tokens=150,  # ~100 words plus some headroom
        temperature=0.7,  # some randomness so repeated calls differ
    )
    return response.choices[0].message.content.strip()


# Generate a positive review for a new smartphone
review = generate_synthetic_review(
    "QuantumPhone X",
    "positive",
    ["camera quality", "battery life", "sleek design"],
)
print(review)

# Example output (the text varies from run to run): "I am absolutely thrilled with my new QuantumPhone X! The camera quality is simply breathtaking, capturing stunning details even in low light. Battery life is phenomenal, easily lasting me through two full days of heavy use. And let's not forget the sleek design – it feels premium in hand and turns heads wherever I go. Highly recommend this masterpiece!"
```
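A single fixed prompt tends to produce near-identical reviews, which limits the diversity of the resulting dataset. One way to push for variety is to enumerate prompts over a grid of attributes before calling the model. A minimal sketch, assuming the same prompt wording as generate_synthetic_review; the helper name, the extra features ("price", "customer support"), and the length options are hypothetical.

```python
import itertools
import random


def build_review_prompts(product_name, sentiments, features, per_review=2, seed=0):
    """Cross every sentiment with every combination of `per_review` features.

    Each entry keeps the requested sentiment as a label, so the generated
    reviews arrive pre-labeled for training a sentiment classifier.
    """
    rng = random.Random(seed)  # seeded so the prompt set is reproducible
    prompts = []
    for sentiment, combo in itertools.product(
        sentiments, itertools.combinations(features, per_review)
    ):
        words = rng.choice([50, 100, 150])  # vary the target length as well
        prompt = (
            f"Write a {sentiment} customer review for the '{product_name}'. "
            f"Make sure to mention: {', '.join(combo)}. "
            f"The review should be realistic and about {words} words long."
        )
        prompts.append({"label": sentiment, "features": list(combo), "prompt": prompt})
    return prompts


prompts = build_review_prompts(
    "QuantumPhone X",
    ["positive", "neutral", "negative"],
    ["camera quality", "battery life", "sleek design", "price", "customer support"],
)
print(len(prompts))  # 3 sentiments x C(5, 2) = 10 feature pairs -> 30
print(prompts[0]["prompt"])
```

Each prompt string can then be sent to the model in the same way as in the previous example; keeping the label next to the prompt avoids a separate annotation pass.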


Evaluating Synthetic Data: The Triad of Quality

Generating synthetic data is only half the battle; ensuring its utility is paramount. Evaluation typically focuses on three key aspects:

- Fidelity: How closely does the synthetic data statistically resemble the real data?

  - Metrics:

    - Statistical Similarity: Compare the distributions of individual features and the correlations between features (e.g., with the Kolmogorov-Smirnov test, Jensen-Shannon divergence, or visual inspection of histograms and scatter plots).

    - Machine Learning Efficacy: Train one downstream model on real data and another on synthetic data, then compare their performance on the same held-out real test set. This is often the most practical measure of utility.

    - FID (Fréchet Inception Distance) / KID (Kernel Inception Distance): Commonly used for image generation to measure how similar generated images are to real images in the feature space of a pretrained Inception network.

- Diversity: Does the synthetic data cover the full range of variability present in the real data, including rare events and edge cases?

  - Metrics:

    - Coverage Metrics: Check that every class or category present in the real data is also represented in the synthetic data.

    - Visual Inspection: For images, manually review a sample of synthetic outputs.

    - Clustering: Apply clustering algorithms to both real and synthetic data to see whether the synthetic data forms similar clusters and covers the same "modes."

- Privacy Guarantees: How well does the synthetic data protect the privacy of the individuals in the original dataset?

  - Metrics:

    - Differential Privacy (DP): A mathematical framework that quantifies privacy loss. Generating data with DP guarantees bounds how much any single individual's record can change the distribution of outputs, with the bound set by a privacy parameter ε.

    - Membership Inference Attacks: Test whether an attacker can determine if a specific record from the original dataset was used to train the generative model.

    - Reconstruction Attacks: Test whether an attacker can reconstruct original data points from the synthetic data.
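Several of the fidelity and diversity checks above can be computed without third-party libraries. This is a minimal sketch, assuming one numeric column (for the Kolmogorov-Smirnov statistic) and one categorical column (for Jensen-Shannon divergence and coverage) stored as plain Python lists; the function names and sample values are hypothetical.

```python
import math
from collections import Counter


def ks_statistic(real, synth):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical CDFs."""
    real, synth = sorted(real), sorted(synth)
    i = j = 0
    d = 0.0
    while i < len(real) and j < len(synth):
        x = min(real[i], synth[j])
        while i < len(real) and real[i] <= x:  # step past ties in each sample
            i += 1
        while j < len(synth) and synth[j] <= x:
            j += 1
        d = max(d, abs(i / len(real) - j / len(synth)))
    return d


def js_divergence(real_labels, synth_labels):
    """Jensen-Shannon divergence (base 2, so it lies in [0, 1]) between category frequencies."""
    p_counts, q_counts = Counter(real_labels), Counter(synth_labels)
    categories = set(p_counts) | set(q_counts)
    p = {c: p_counts[c] / len(real_labels) for c in categories}
    q = {c: q_counts[c] / len(synth_labels) for c in categories}
    m = {c: 0.5 * (p[c] + q[c]) for c in categories}

    def kl(a, b):
        return sum(a[c] * math.log2(a[c] / b[c]) for c in categories if a[c] > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def category_coverage(real_labels, synth_labels):
    """Fraction of categories seen in the real data that also appear in the synthetic data."""
    real_set = set(real_labels)
    return len(real_set & set(synth_labels)) / len(real_set)


real_amounts = [12.5, 40.0, 18.2, 95.0, 23.1]
synth_amounts = [14.0, 38.5, 20.0, 88.0, 25.5]
real_cats = ["grocery", "electronics", "grocery", "travel"]
synth_cats = ["grocery", "grocery", "electronics", "electronics"]
print("KS:", ks_statistic(real_amounts, synth_amounts))
print("JSD:", round(js_divergence(real_cats, synth_cats), 3))
print("coverage:", round(category_coverage(real_cats, synth_cats), 3))  # "travel" is missing
```

For production use, SciPy provides `scipy.stats.ks_2samp` (which also returns a p-value) and `scipy.spatial.distance.jensenshannon`; note that the latter returns the Jensen-Shannon distance, the square root of the divergence computed here.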

Practical Applications and Use Cases

The potential applications of generative AI for synthetic data are vast and transformative across industries:

- Privacy-Preserving Data Sharing:

  - Healthcare: Hospitals can generate synthetic patient records to share with researchers or pharmaceutical companies for drug discovery and clinical trial research, reducing the need to disclose real records protected under HIPAA.

  - Finance: Banks can create synthetic transaction data to share with fintech startups for developing fraud detection algorithms, avoiding the exposure of customer financial details.

  - Government: Agencies can release synthetic demographic data for public research while protecting individual privacy.

- Data Augmentation & Imbalance Mitigation:

  - Computer Vision: Generating additional images of rare objects (e.g., specific defects in manufacturing, rare medical conditions) to improve the robustness of object detection and classification models.

  - Autonomous Vehicles: Synthesizing rare driving scenarios (e.g., specific weather conditions, unusual road obstacles) to train self-driving car AI without needing to encounter them in the real world.

  - Fraud Detection: Creating synthetic examples of rare fraud patterns to balance highly skewed datasets, enabling models to better identify fraudulent activities.

- Testing & Development:

  - Software Testing: Generating synthetic user behavior data to stress-test applications, simulate peak loads, or identify edge-case bugs.

  - Robotics & IoT: Creating synthetic sensor data (e.g., temperature, pressure, lidar scans) for simulating complex environments, allowing robots to learn and adapt in virtual settings before real-world deployment.

- Bias Mitigation:

  - If a real dataset is biased against certain demographic groups (e.g., underrepresentation of specific ethnicities in medical images), synthetic data can be generated to rebalance these groups, which can lead to fairer and more equitable AI models.

- LLM Training and Fine-tuning:

  - Generating high-quality, diverse prompts and responses to fine-tune LLMs for specific tasks or domains, reducing the reliance on expensive human-annotated data. This is particularly useful for creating specialized chatbots or assistants.
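The imbalance-mitigation idea can be sketched end to end with a deliberately simple stand-in for a generative model. This sketch swaps in independent per-feature Gaussians, fitted only to the minority (e.g., fraud) class, in place of a GAN, VAE, or diffusion model; it ignores correlations between features, which is exactly what the deep generators described earlier are meant to capture. The helper names and toy rows (transaction amount, hour of day) are hypothetical.

```python
import random
import statistics


def fit_gaussian_generator(rows):
    """'Train' a toy generator: an independent (mean, stdev) per feature column."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def oversample_minority(majority, minority, seed=0):
    """Append synthetic minority rows until the minority class matches the majority in size."""
    params = fit_gaussian_generator(minority)  # needs at least 2 minority rows
    rng = random.Random(seed)
    needed = len(majority) - len(minority)
    synthetic = [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(needed)]
    return minority + synthetic


# Hypothetical rows: [transaction_amount, hour_of_day]
majority = [[12.0, 14.0], [25.5, 10.0], [8.2, 16.0], [40.0, 12.0],
            [15.3, 9.0], [22.8, 18.0], [30.1, 13.0], [9.9, 11.0]]
minority = [[480.0, 3.0], [515.0, 2.0], [450.0, 4.0]]

balanced_minority = oversample_minority(majority, minority)
print(len(majority), len(balanced_minority))
```

Swapping `fit_gaussian_generator` for a trained conditional generator keeps the same overall flow: fit on the rare class, sample until the classes are balanced, and evaluate the downstream model on real held-out data only.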

Challenges and Ethical Considerations

While promising, synthetic data generation is not without its hurdles:

- Fidelity vs. Privacy Trade-off: Achieving high data utility while maintaining strong privacy guarantees is a delicate balance. Overly aggressive privacy mechanisms can degrade the quality of synthetic data, making it less useful.

- Evaluation Complexity: Quantifying the "goodness" of synthetic data is non-trivial and often application-specific. There's no single metric that perfectly captures all aspects of quality and utility.

- Computational Cost: Training sophisticated generative models, especially diffusion models and large LLMs, can be very resource-intensive, requiring significant computational power and time.

- Mode Collapse (GANs): As mentioned, GANs can suffer from mode collapse, failing to capture the full diversity of the real data.

- Bias Amplification: If the real data contains biases, the generative model might learn and even amplify these biases in the synthetic data, requiring careful monitoring and mitigation strategies.

- Misinformation and Deepfakes: The same generative technologies that create useful synthetic data can also be misused to generate misleading content (deepfakes, fake news), raising significant ethical concerns about authenticity and trust.
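The fidelity vs. privacy trade-off can be made concrete with one of the simplest differentially private synthesizers: add Laplace noise to a histogram of a single categorical column, then sample synthetic records from the noisy histogram. A minimal sketch in plain Python; the function names and diagnosis codes are hypothetical, the category list is assumed to be public (fixed in advance, not derived from the data), and real systems use more elaborate mechanisms for multi-column data.

```python
import random
from collections import Counter


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample, built as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_synthetic_categories(records, categories, epsilon, n_out, seed=0):
    """Release an epsilon-DP noisy histogram, then sample synthetic records from it.

    Adding or removing one person's record changes one count by 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon makes the histogram epsilon-DP. Sampling
    from the released histogram is post-processing and costs no extra privacy.
    """
    rng = random.Random(seed)
    counts = Counter(records)
    noisy = {c: max(0.0, counts[c] + laplace_noise(1.0 / epsilon, rng)) for c in categories}
    total = sum(noisy.values())
    if total == 0:
        weights = [1.0] * len(categories)  # fall back to uniform if every count clips to 0
    else:
        weights = [noisy[c] / total for c in categories]
    return rng.choices(categories, weights=weights, k=n_out)


real = ["A"] * 50 + ["B"] * 30 + ["C"] * 5  # hypothetical diagnosis codes
categories = ["A", "B", "C"]  # public list, fixed in advance
for eps in (10.0, 0.1):
    synth = dp_synthetic_categories(real, categories, eps, n_out=85)
    print(eps, Counter(synth))
```

Smaller values of ε add more noise, so the synthetic frequencies drift further from the real ones, and the rare category "C" is the first to be distorted; that drift is the fidelity cost of a stronger privacy guarantee.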

The Future: Emerging Trends

The field is evolving rapidly, with several exciting trends on the horizon:

- Foundation Models for Synthetic Data: Leveraging large pre-trained generative models (like LLMs or large image models) as a base for synthetic data generation, potentially reducing the need for extensive domain-specific training.

- Hybrid Approaches: Combining synthetic data generation with other privacy-enhancing technologies like differential privacy (DP) or federated learning to achieve stronger privacy guarantees.

- Synthetic Data as a Service (SDaaS): The rise of commercial platforms offering specialized synthetic data generation solutions, making the technology more accessible to businesses without deep AI expertise.

- Human-in-the-Loop: Incorporating human feedback to refine and improve synthetic data quality, especially for subjective tasks or when dealing with complex edge cases.

- Beyond Data: Synthetic Environments: Generating entire synthetic environments for training autonomous agents, from virtual cities for self-driving cars to simulated biological systems for drug discovery.

Conclusion

Generative AI for synthetic data generation represents a paradigm shift in how we approach data for AI development. It offers a powerful solution to the twin challenges of data scarcity and privacy, enabling organizations to unlock the full potential of AI while adhering to ethical and regulatory standards. As generative models continue to advance, synthetic data will move from a niche solution to an indispensable tool in the AI practitioner's toolkit, paving the way for more robust, fair, and innovative AI systems across every sector. Understanding this domain is not just beneficial; it's becoming essential for anyone involved in building the next generation of intelligent applications.