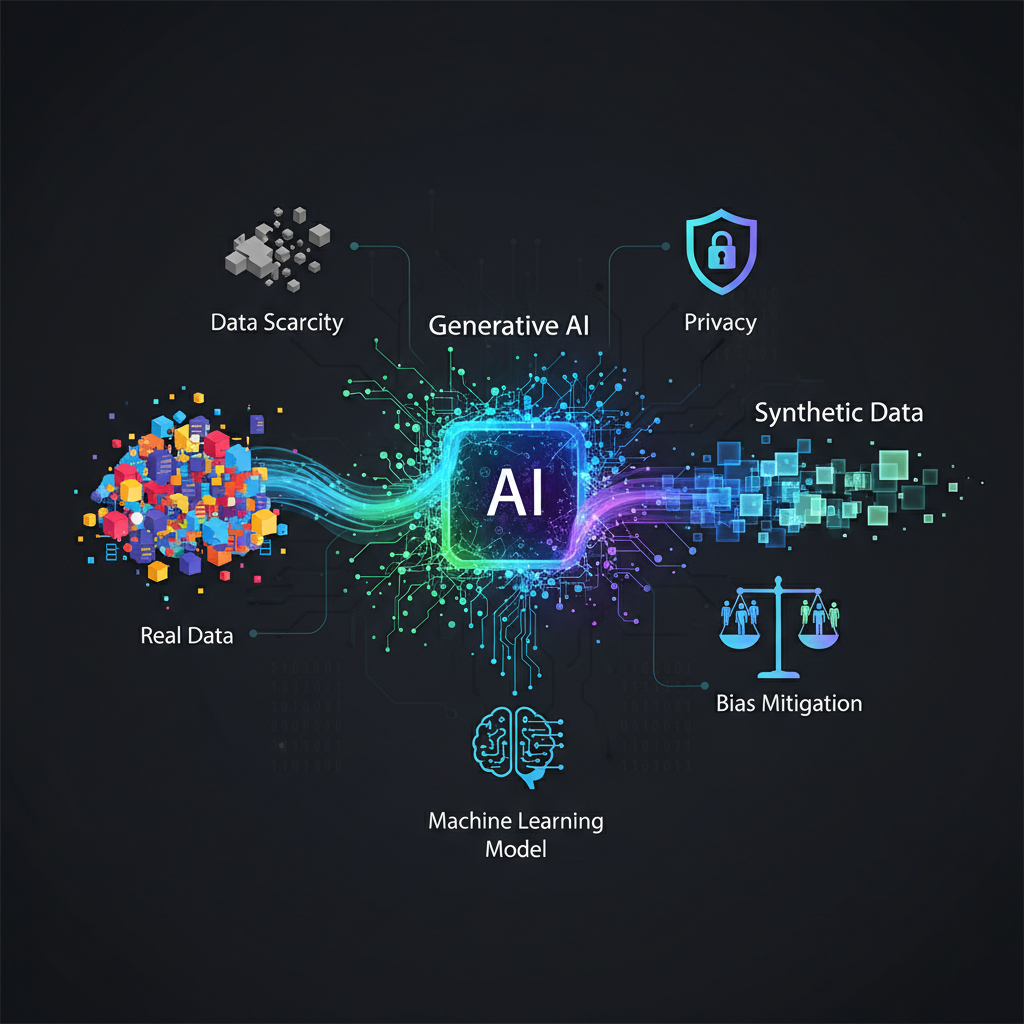

Generative AI: Overcoming Data Scarcity and Privacy with Synthetic Data

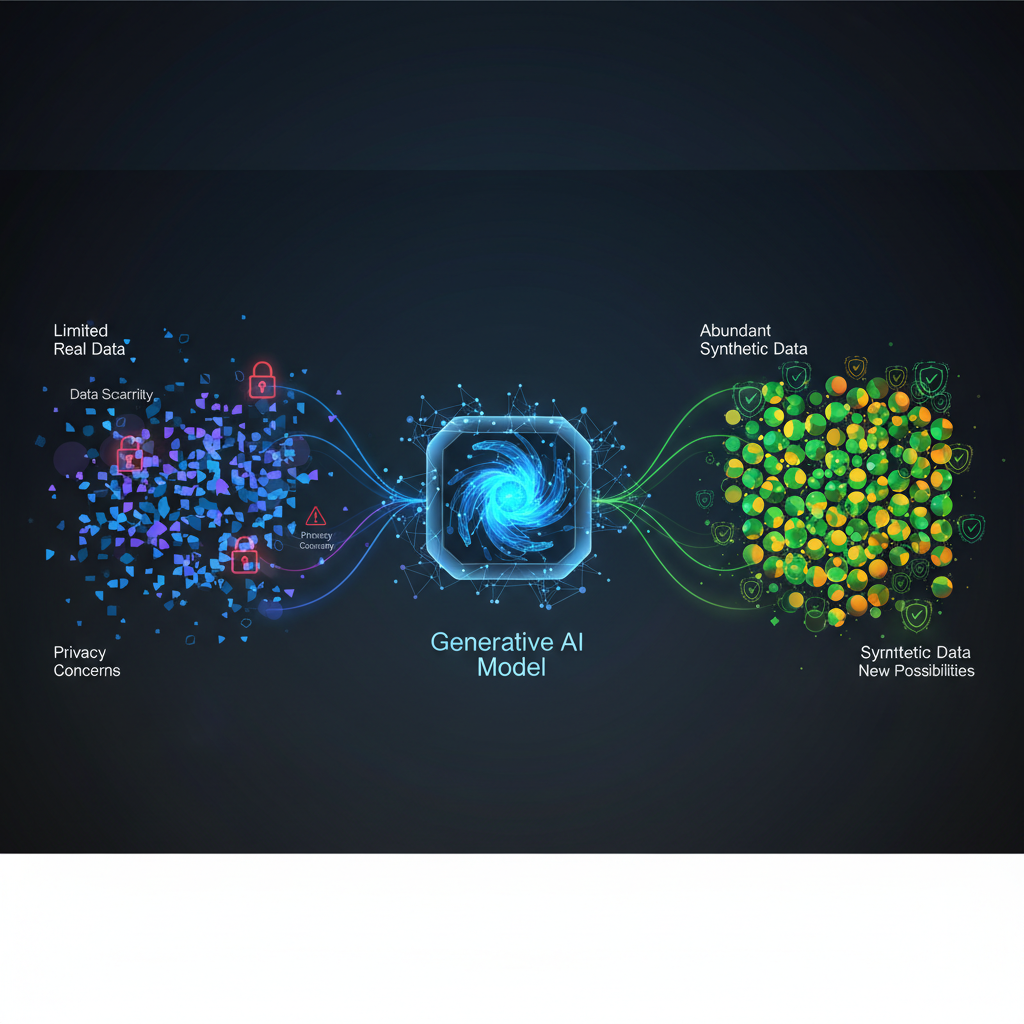

Generative AI is revolutionizing how we handle data challenges. This post explores how synthetic data, created by generative models, addresses issues like data scarcity, privacy concerns, and bias, unlocking new possibilities for machine learning.

In the rapidly evolving landscape of artificial intelligence, data remains the lifeblood of innovation. Yet data presents formidable challenges: it is often scarce, constrained by privacy rules, skewed by bias, and expensive to acquire and label. Generative AI, best known for creating art and text, also offers a way around these bottlenecks: synthetic data. This is no longer just a research idea; it is a practical tool that helps teams work around both data scarcity and privacy constraints, unlocking new possibilities for machine learning across industries.

The Persistent Data Conundrum

Before diving into the solution, let's clearly articulate the problems that synthetic data generation aims to solve:

- Data Scarcity: Many critical domains suffer from a severe lack of sufficient real-world data. Imagine training an AI to detect extremely rare diseases, predict failures in highly specialized industrial equipment, or develop new products where historical data simply doesn't exist. Traditional ML models falter without enough examples.

- Data Privacy and Security: Regulations like GDPR, HIPAA, and CCPA have rightly emphasized the importance of protecting sensitive personal information. This makes sharing, storing, and using real-world data, especially in healthcare, finance, or government, incredibly complex and risky. The fear of re-identification or data breaches can halt innovation.

- Data Bias: Real-world datasets often mirror societal inequalities and historical biases. An ML model trained on such data can perpetuate and even amplify these biases, leading to unfair or discriminatory outcomes, particularly in critical applications like credit scoring, hiring, or criminal justice.

- Data Labeling Costs: Acquiring raw data is one thing; transforming it into a usable format with accurate labels is another. This process is often manual, expensive, time-consuming, and a significant bottleneck in the ML development lifecycle.

These challenges collectively hinder the progress and deployment of AI, making the quest for robust, ethical, and accessible data more urgent than ever.

Generative AI: The Engine of Synthetic Data

Generative AI models are designed to learn the underlying patterns and distributions of a given dataset and then generate new, original samples that resemble the real data but are not identical copies. This capability is precisely what makes them ideal for synthetic data generation.

Let's explore the key generative models at play:

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, revolutionized generative modeling. They consist of two neural networks, a Generator and a Discriminator, locked in a zero-sum game:

- Generator (G): Takes random noise as input and tries to produce synthetic data that looks like real data.

- Discriminator (D): Takes samples from both the real dataset and the generator, and tries to distinguish between real and fake data.

The two networks are trained together, usually in alternating steps. The generator learns to fool the discriminator, while the discriminator learns to get better at identifying fakes. This adversarial process drives both networks to improve, ideally leading to a generator capable of producing highly realistic synthetic data.
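The tug-of-war described above can be made concrete by looking at the two losses. The following is a minimal sketch, assuming the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the function names and the example probabilities are illustrative, not taken from any particular library.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Cross-entropy the discriminator minimizes.

    d_real: D's estimated probability that a real sample is real.
    d_fake: D's estimated probability that a generated sample is real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D scores fakes as real."""
    return -math.log(d_fake)


# Early in training (illustrative numbers): D spots fakes easily,
# so D's loss is low and G's loss is high.
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))

# At the ideal equilibrium D cannot tell the difference and outputs 0.5.
balanced = (discriminator_loss(0.5, 0.5), generator_loss(0.5))

print("early    D/G loss:", early)
print("balanced D/G loss:", balanced)
```

As the generator improves, the discriminator's loss rises toward the value it has when it can only guess, which is the point where generated samples are hard to tell apart from real ones.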

Types of GANs for Synthetic Data:

- Conditional GANs (cGANs): Allow for generating data conditioned on specific attributes (e.g., generating a synthetic image of a "cat" or "dog"). This is crucial for targeted synthetic data generation.

- CTGAN (Conditional Tabular GAN): Specifically designed for tabular data, CTGAN addresses the challenges of mixed data types (continuous, discrete, categorical) and highly imbalanced distributions common in real-world tables. It uses a conditional generator and a mode-specific normalization technique to handle diverse column types effectively.
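To see why conditioning helps with imbalanced columns, here is a rough sketch of the idea behind CTGAN's conditional sampling: rare categories are picked as the training condition more often than their raw frequency would suggest. It assumes sampling weights proportional to log(count + 1); the helper names and the toy column are hypothetical, and this is a simplification rather than the library's actual code.

```python
import math
import random
from collections import Counter


def log_frequency_probs(values):
    """Sampling probability per category, proportional to log(count + 1)."""
    counts = Counter(values)
    weights = {cat: math.log(n + 1) for cat, n in counts.items()}
    total = sum(weights.values())
    return {cat: w / total for cat, w in weights.items()}


def sample_condition(values, rng):
    """Pick a category and return it with its one-hot condition vector."""
    probs = log_frequency_probs(values)
    categories = sorted(probs)
    chosen = rng.choices(categories, weights=[probs[c] for c in categories], k=1)[0]
    one_hot = [1.0 if c == chosen else 0.0 for c in categories]
    return chosen, one_hot


# Hypothetical, heavily imbalanced column: 990 legitimate rows, 10 fraud rows.
column = ["legit"] * 990 + ["fraud"] * 10
probs = log_frequency_probs(column)
chosen, condition = sample_condition(column, random.Random(0))

print(probs)              # "fraud" is sampled far more often than its 1% share
print(chosen, condition)  # the one-hot vector is fed to the generator with the noise
```

In a conditional generator, the one-hot vector is concatenated with the random noise so the network learns to produce rows that match the requested category.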

2. Variational Autoencoders (VAEs)

VAEs offer a different approach based on probabilistic modeling. They consist of an Encoder and a Decoder:

- Encoder: Maps input data to a lower-dimensional latent space, typically representing the data as a probability distribution (mean and variance).

- Decoder: Samples from this latent distribution and reconstructs the original data.

The VAE is trained to minimize the reconstruction error while keeping the latent representation well-structured (e.g., close to a standard normal distribution). To generate new data, we sample a latent vector from that prior distribution and pass it through the decoder. VAEs tend to produce smoother, more diverse samples than GANs, though sometimes at the cost of fidelity.
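The two ingredients of that training objective can be written down in a few lines. This is a minimal sketch, assuming a diagonal Gaussian encoder output and a standard normal prior; the encoder outputs below are made-up numbers, and a real VAE would compute them with neural networks.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def squared_error(x, x_hat):
    """Reconstruction term, suitable for continuous features."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


rng = random.Random(0)
mu, log_var = [0.5, -0.2], [0.0, -1.0]  # hypothetical encoder outputs for one row
z = reparameterize(mu, log_var, rng)    # latent sample passed to the decoder
kl = kl_to_standard_normal(mu, log_var)

print("latent sample:", z)
print("KL penalty:", kl)
```

The training loss is the reconstruction term plus the KL penalty. The KL term is zero only when the encoder outputs exactly the prior, which is what keeps the latent space close to a standard normal and makes sampling from it meaningful.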

3. Diffusion Models

Initially making waves in image generation (DALL-E 2, Stable Diffusion), Diffusion Models are now rapidly being adapted for structured data. They operate on a two-phase process:

- Forward Diffusion (Noising Process): Gradually adds Gaussian noise to the data over many steps, transforming it into pure noise.

- Reverse Diffusion (Denoising Process): A neural network is trained to reverse this process, predicting and removing the noise at each step, effectively learning to generate data from pure noise.

Diffusion models excel at generating high-fidelity and diverse samples due to their iterative denoising process. Their ability to model complex data distributions makes them promising for tabular, time-series, and even graph data, where they have been reported to outperform GANs on some fidelity and utility metrics.
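The forward (noising) phase has a convenient closed form: a noisy row at any step can be sampled directly from the clean row. Below is a minimal sketch, assuming a linear noise schedule with 1000 steps and the endpoint values often used for images; the schedule parameters and the example row are assumptions, and categorical columns would need separate handling.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_row(x0, alpha_bar_t, rng):
    """Sample x_t directly from x_0; return the noisy row and the noise used."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    scale_signal = math.sqrt(alpha_bar_t)
    scale_noise = math.sqrt(1.0 - alpha_bar_t)
    x_t = [scale_signal * v + scale_noise * e for v, e in zip(x0, eps)]
    return x_t, eps


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
row = [0.3, -1.2, 0.8]  # hypothetical standardized numeric row
x_t, eps = noise_row(row, alpha_bars[499], random.Random(0))

print("signal kept at step 1:   ", alpha_bars[0])
print("signal kept at step 1000:", alpha_bars[-1])
print("noisy row at step 500:   ", x_t)
```

During training, the denoising network receives `x_t` and the step index and is asked to predict `eps`. By the last step almost none of the original signal remains, which is why generation can start from pure noise.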

Example: Diffusion for Tabular Data

For tabular data, a diffusion model might learn to denoise a noisy version of a row of data. Each feature (column) could be diffused independently or jointly, with the model learning the dependencies between them. The iterative refinement process allows for capturing intricate relationships that might be harder for other models.

```python
# Conceptual sketch of a denoising network for diffusion on tabular data
import torch
import torch.nn as nn


class TabularDenoisingModel(nn.Module):
    def __init__(self, input_dim, hidden_dim, num_steps=1000):
        super().__init__()
        self.num_steps = num_steps
        # Simple MLP for denoising
        self.net = nn.Sequential(
            nn.Linear(input_dim + 1, hidden_dim),  # +1 for the time step feature
            nn.ReLU(),
            nn.Linear(hidden_dim, input_dim),
        )

    def time_embedding(self, t):
        # Simplest possible embedding: the step index scaled to [0, 1],
        # shaped (batch, 1) so it can be concatenated with the features
        return (t.float() / self.num_steps).unsqueeze(-1)

    def forward(self, x_t, t):
        # x_t: noisy rows at step t, shape (batch, input_dim)
        # t: integer time steps, shape (batch,)
        t_embed = self.time_embedding(t)
        input_vec = torch.cat([x_t, t_embed], dim=-1)
        predicted_noise = self.net(input_vec)
        return predicted_noise


# Sampling loop (simplified)
# x_t = torch.randn(batch_size, input_dim)  # Start with pure noise
# for step in range(T, 0, -1):  # Reverse diffusion
#     t = torch.full((batch_size,), step)
#     predicted_noise = model(x_t, t)
#     x_t = x_t - alpha * predicted_noise  # Denoise step (alpha is a placeholder)
#     ...
```

Note: This is a highly simplified conceptual representation. Actual diffusion models for tabular data involve more complex architectures, time embeddings, and sampling schedules.

Recent Developments & Emerging Trends

The field of synthetic data generation is dynamic, with several key trends shaping its future:

1. Privacy-Preserving Synthetic Data

Generating synthetic data is a powerful step towards privacy, but it's not inherently privacy-preserving. A synthetic dataset can still leak information about individual records if the original dataset is very small, contains unique outliers, or is partly memorized by the generative model. This is where Differential Privacy (DP) comes in.

- Differential Privacy (DP) Integration: DP provides a mathematical guarantee that the presence or absence of any single individual's data in the original dataset changes the outcome of an analysis or model training by at most a bounded amount, controlled by a privacy budget (commonly written ε). When the generative model is trained with DP, the guarantee carries over to the synthetic data it produces. Even an attacker with full knowledge of the synthetic data and the generation algorithm cannot confidently infer whether a specific individual's data was part of the original training set. This is typically achieved by adding carefully calibrated noise during the training of the generative model, for example by clipping and noising gradients as in DP-SGD.

- Federated Learning & Synthetic Data: Federated learning allows multiple parties to collaboratively train an ML model without sharing their raw data. Synthetic data can further enhance privacy in this context. Instead of sharing model updates (which can sometimes still leak information), parties could share synthetic versions of their local data, or use synthetic data to augment their local training sets, reducing the need for direct data exchange.
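One common way to add "carefully calibrated noise" during training is the DP-SGD recipe: clip each example's gradient, sum, add Gaussian noise scaled to the clipping bound, then average. The sketch below shows only that single step on made-up gradients. The function names and parameter values are illustrative, and it does not compute the resulting privacy budget, which requires a separate privacy accountant.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]  # made-up per-example gradients
update = private_mean_gradient(
    grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0)
)
print("noisy averaged gradient:", update)
```

Clipping bounds how much any single record can move the model, and the noise hides what is left of that influence. A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates and, usually, lower fidelity.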

2. Synthetic Data for Model Robustness and Fairness

Synthetic data isn't just about filling data gaps; it's also a potent tool for improving the quality and ethical standing of ML models.

- Data Augmentation: Beyond simple transformations (rotation, scaling), generative models can create entirely new, realistic variations of existing data. This expands the dataset, helps models generalize better to unseen data, and reduces overfitting, especially when real data is limited. For example, generating synthetic images of rare medical conditions from a few examples can significantly improve diagnostic model performance.

- Bias Mitigation: Real-world datasets often underrepresent certain demographic groups or contain historical biases. Synthetic data can be strategically generated to:

  - Rebalance Skewed Datasets: Create more samples for underrepresented classes or groups to achieve a more balanced dataset.

  - Generate Edge Cases: Simulate rare but important scenarios that might be missing from real data, making models more robust to unusual inputs.

  - Debias Datasets: Generate synthetic data that explicitly removes or reduces correlations between sensitive attributes (e.g., race, gender) and target variables, leading to fairer model predictions.
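As a small illustration of rebalancing, the sketch below tops up a minority class until it matches the majority class. To stay self-contained it uses simple interpolation between random pairs of minority rows (a simplified, SMOTE-style stand-in) rather than a trained generative model; in practice the new rows would come from a conditional generator. All names and the toy data are hypothetical.

```python
import random


def interpolate_minority(rows, n_new, rng):
    """Create n_new rows, each on the segment between two random minority rows."""
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(rows, 2)
        t = rng.random()
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic


def rebalance(majority, minority, rng):
    """Top up the minority class until both classes have the same size."""
    deficit = max(0, len(majority) - len(minority))
    return minority + interpolate_minority(minority, deficit, rng)


rng = random.Random(0)
majority = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(100)]
minority = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(8)]
balanced_minority = rebalance(majority, minority, rng)

print(len(majority), len(minority), len(balanced_minority))
```

Interpolation can only produce rows between points it has already seen, which is one reason generative models are attractive here: they can, in principle, learn the shape of the minority distribution instead of just filling in the gaps.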

3. Evaluation Metrics for Synthetic Data Utility

The true value of synthetic data lies in its ability to serve as a faithful and useful proxy for real data. Robust evaluation is critical. Beyond subjective assessment, quantitative metrics are essential to measure:

- Fidelity: How statistically similar is the synthetic data to the real data?

  - Distribution Matching: Comparing marginal and joint distributions of features (e.g., using statistical tests like KS-test, Wasserstein distance).

  - Correlation Preservation: Ensuring that the relationships between features are maintained (e.g., correlation matrices, mutual information).

  - Feature-wise Similarity: Metrics like Mean Squared Error (MSE) for continuous features or F1-score for categorical features.

- Utility: How well do ML models trained on synthetic data perform on real data? This is often the most critical metric.

  - Predictive Performance: Train a classifier or regressor on the synthetic data and evaluate its performance (accuracy, F1, RMSE, etc.) on a real test set. This directly assesses if the synthetic data captured the predictive signals.

  - Downstream Task Accuracy: For specific applications, evaluate the synthetic data's utility in that context (e.g., if synthetic customer data helps a recommendation engine perform better on real customers).

- Privacy: How well does the synthetic data protect the privacy of the original data?

  - Membership Inference Attacks: Testing if an attacker can determine if a specific record was part of the original training data.

  - Re-identification Risk: Assessing the probability of linking synthetic records back to real individuals using external information.

  - Attribute Disclosure Risk: Evaluating if sensitive attributes of individuals can be inferred from the synthetic data.
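Two of the fidelity checks listed above are easy to sketch by hand: the two-sample KS statistic for a single column and the gap in Pearson correlation for a pair of columns. The example below covers fidelity only, since utility and privacy checks need a trained model or a simulated attack. The toy tables are generated on the spot and all names are illustrative.

```python
import math
import random


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        gap = max(gap, abs(i / len(a) - j / len(b)))
    return gap


def pearson(x, y):
    """Pearson correlation coefficient of two equally long columns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((p - mx) * (q - my) for p, q in zip(x, y))
    sx = math.sqrt(sum((p - mx) ** 2 for p in x))
    sy = math.sqrt(sum((q - my) ** 2 for q in y))
    return cov / (sx * sy)


rng = random.Random(0)


def make_table(n, correlated):
    """Toy two-column table; y depends on x only when correlated is True."""
    xs = [rng.gauss(0, 1) for _ in range(n)]
    if correlated:
        ys = [2 * x + rng.gauss(0, 0.5) for x in xs]
    else:
        ys = [rng.gauss(0, 2) for _ in range(n)]
    return xs, ys


real_x, real_y = make_table(500, correlated=True)
good_x, good_y = make_table(500, correlated=True)   # stand-in for a faithful generator
bad_x, bad_y = make_table(500, correlated=False)    # similar marginals, lost relationship

real_corr = pearson(real_x, real_y)
good_gap = abs(real_corr - pearson(good_x, good_y))
bad_gap = abs(real_corr - pearson(bad_x, bad_y))
ks_good = ks_statistic(real_y, good_y)
ks_bad = ks_statistic(real_y, bad_y)

print("KS on y (good, bad):", ks_good, ks_bad)
print("correlation gap (good, bad):", good_gap, bad_gap)
```

The second synthetic table is built so that each column looks plausible on its own while the relationship between the columns is lost. That is the case correlation checks are meant to catch and that per-column tests alone can miss.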

4. Synthetic Data for Simulation and "Digital Twins"

Generative AI is also pushing the boundaries of simulation. By creating highly realistic synthetic environments or data streams, we can:

- Train Reinforcement Learning Agents: Generate endless variations of game states, robotic environments, or control scenarios without costly real-world trials.

- Test Autonomous Systems: Simulate diverse driving conditions, pedestrian behaviors, and sensor inputs for self-driving cars, allowing for rigorous testing of algorithms in a safe, controlled, and scalable manner, including rare and dangerous edge cases.

- Digital Twins: Create virtual replicas of physical assets, processes, or systems. Synthetic data can feed these digital twins, allowing for predictive maintenance, process optimization, and scenario planning without impacting real-world operations.

Practical Applications and Use Cases

The theoretical advancements in generative AI translate directly into tangible benefits across numerous industries:

- Healthcare:

  - Problem: Patient data is highly sensitive and regulated (HIPAA), and data for rare diseases is scarce.

  - Solution: Generate synthetic patient records (demographics, diagnoses, lab results, treatment plans) for drug discovery, clinical trial design, or training diagnostic AI models. This allows researchers to work with realistic data while reducing the exposure of real patient records.

  - Example: A pharmaceutical company uses synthetic data to simulate patient cohorts for a new drug, allowing them to optimize trial parameters before recruiting real patients.

- Finance:

  - Problem: Strict regulations and industry standards (GDPR, PCI DSS), high stakes for fraud detection, rare fraud patterns.

  - Solution: Create synthetic transaction data for training fraud detection models, risk assessment, or anti-money laundering (AML) systems. This is particularly useful for generating examples of rare fraud types that are scarce in real datasets.

  - Example: A bank generates synthetic credit card transactions, including rare fraudulent patterns, to improve the accuracy of its fraud detection algorithms without exposing real customer financial data.

- Autonomous Vehicles:

  - Problem: Training self-driving cars requires vast amounts of diverse, real-world driving data, including hazardous and rare scenarios, which are difficult and dangerous to collect.

  - Solution: Simulate diverse driving scenarios, weather conditions (rain, snow, fog), traffic patterns, and edge cases (e.g., sudden pedestrian appearance, unusual road obstacles) using generative models. This allows for safe and efficient training and testing of self-driving algorithms.

  - Example: Waymo uses synthetic data generated in simulated environments to train its autonomous driving system on millions of miles of virtual driving, including situations too dangerous or rare to encounter frequently on real roads.

- E-commerce and Retail:

  - Problem: Personalization requires user behavior data, but privacy concerns limit its direct use. A/B testing can be slow.

  - Solution: Generate synthetic user behavior data (browsing history, purchase patterns, clicks) to test recommendation engines, personalize user experiences, or optimize website layouts without using real customer data. This also enables rapid experimentation.

  - Example: An e-commerce platform generates synthetic customer journeys to test new recommendation algorithms, ensuring they perform well before deploying them to real users, thereby protecting customer privacy.

- Manufacturing and IoT:

  - Problem: Sensor data from industrial machinery can be proprietary, sensitive, or scarce for specific failure modes.

  - Solution: Create synthetic sensor data from industrial machinery to predict failures, optimize maintenance schedules, or improve quality control, especially for rare fault conditions that are critical but seldom observed.

  - Example: A factory uses synthetic sensor data to train predictive maintenance models for a critical machine, generating data for rare but catastrophic failure modes that would be too costly or dangerous to reproduce on real equipment.

The Road Ahead: Challenges and Opportunities

While the promise of synthetic data is immense, the journey isn't without its challenges:

- Fidelity vs. Diversity vs. Privacy Trade-offs: Achieving high fidelity, diverse samples, and strong privacy guarantees simultaneously remains a complex research area. Often, improving one might come at the cost of another.

- Evaluation Complexity: Quantitatively assessing the quality and utility of synthetic data is non-trivial, requiring sophisticated metrics and domain expertise.

- Generalization Beyond Training Data: While synthetic data can augment real data, it's crucial that it doesn't just replicate the biases or limitations of the data it was learned from. Generating truly novel, yet realistic, data that helps models generalize to entirely new scenarios is an ongoing goal.

- Computational Resources: Training state-of-the-art generative models, especially diffusion models, can be computationally intensive.

Despite these challenges, the opportunities presented by generative AI for synthetic data are transformative. For AI practitioners and enthusiasts, this field offers:

- A Solution to Real-World Data Bottlenecks: Directly address data scarcity and privacy in your projects.

- Enhanced Privacy Compliance: Navigate complex regulations with a practical, ethical approach to data usage.

- Accelerated Model Development: Reduce time and cost by bypassing traditional data acquisition and labeling hurdles.

- Improved Model Performance and Fairness: Build more robust, generalizable, and equitable AI systems.

- New Career Paths: The demand for expertise in synthetic data generation, validation, and deployment is rapidly growing, creating exciting new roles.

- A Deep Dive into Advanced Generative Models: Gain hands-on experience with the cutting edge of AI.

Conclusion

Generative AI for synthetic data generation is more than just a technological marvel; it's a paradigm shift in how we approach data in the age of AI. By offering a powerful means to overcome data scarcity, protect privacy, mitigate bias, and accelerate innovation, it is democratizing access to high-quality data and enabling the development of more robust, ethical, and impactful machine learning solutions across every sector. As these models continue to evolve, synthetic data will undoubtedly become an indispensable tool in the AI developer's toolkit, unlocking a future where data limitations no longer constrain the boundless potential of artificial intelligence.