Generative AI for Synthetic Data: Unlocking New Frontiers in Machine Learning

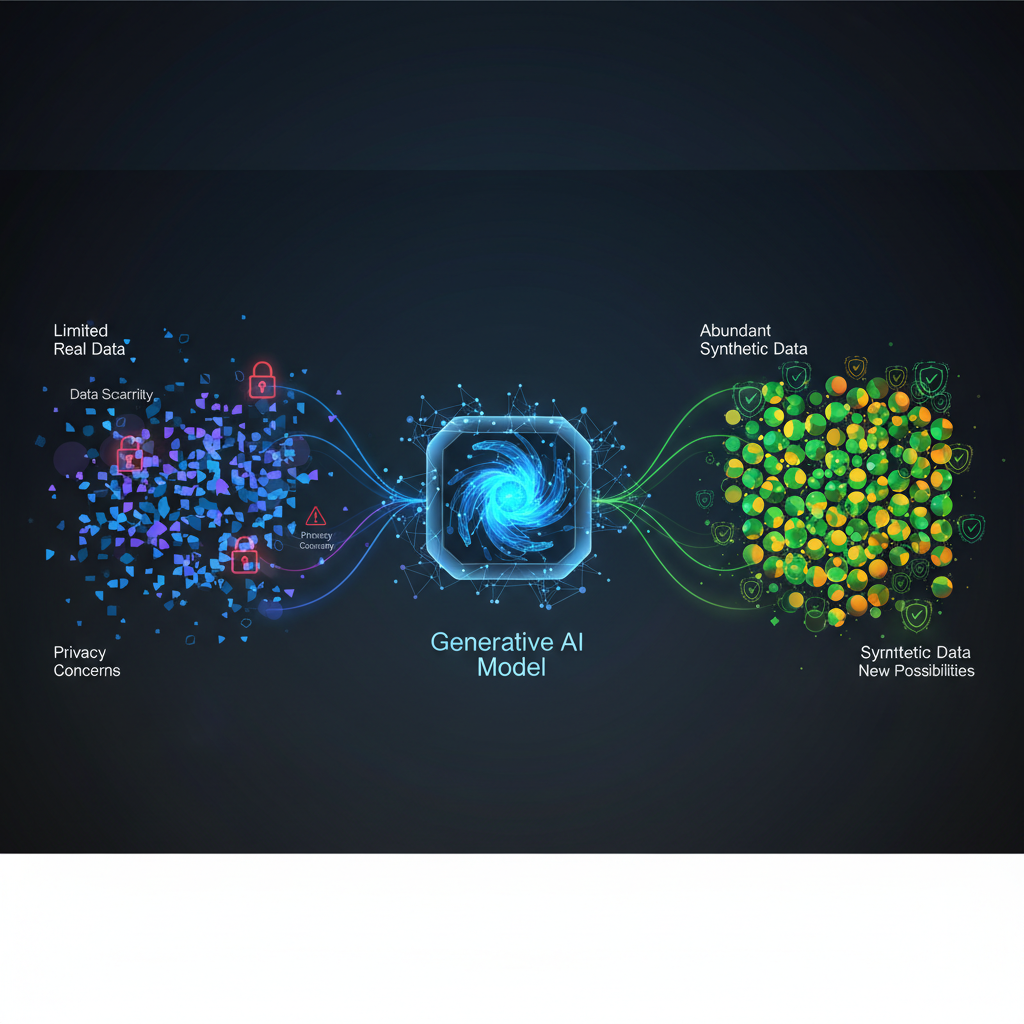

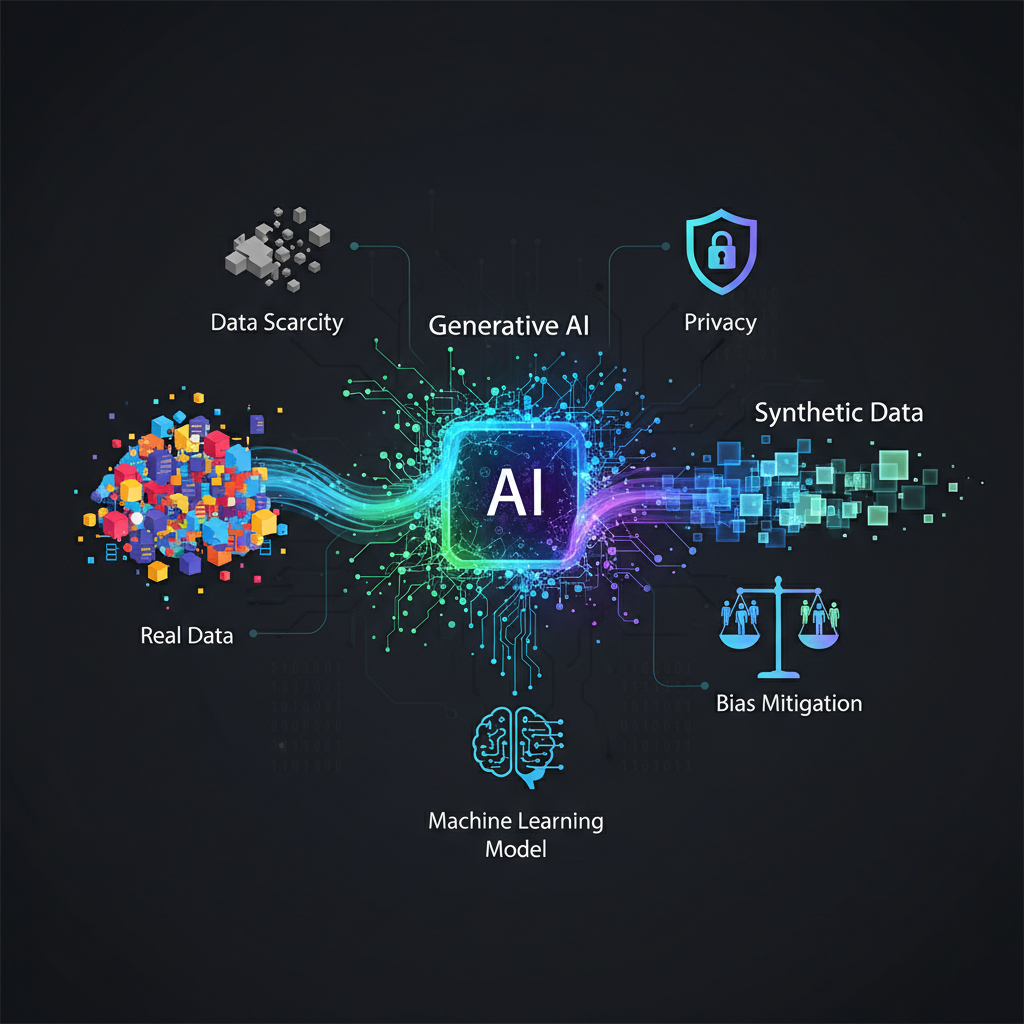

Explore how Generative AI is revolutionizing machine learning by creating artificial data that mimics real-world properties. This game-changing technology addresses data scarcity, privacy, and bias, offering a powerful solution for AI development.

The insatiable appetite of Artificial Intelligence for data is well-known. From training robust models to validating their performance, data is the lifeblood of AI. However, the real world often presents significant hurdles: data scarcity, stringent privacy regulations, inherent biases, and the sheer cost and effort of collection. Enter Generative AI for Synthetic Data Generation – a rapidly evolving field that promises to unlock new frontiers in machine learning by creating artificial data that mirrors the statistical properties of real data, without compromising sensitive information.

This isn't just about creating pretty pictures or clever text; it's about solving fundamental challenges in the AI lifecycle, making it an indispensable tool for practitioners and a fascinating area for enthusiasts.

The Data Dilemma: Why Synthetic Data is a Game-Changer

Before diving into the "how," let's understand the "why." The need for synthetic data stems from several critical pain points in modern AI development:

- Data Scarcity and Cold Start Problems: Many AI projects, especially in emerging domains or for niche applications, suffer from a lack of sufficient real-world data to train effective models. Synthetic data can provide a crucial initial dataset, bridging the "cold start" gap.

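- Privacy and Compliance (GDPR, CCPA, HIPAA): Handling sensitive personal, financial, or health data comes with immense regulatory burdens. Synthetic data lets developers build and test models without working directly on real, identifiable records, which can ease compliance and reduce privacy risk. It is not an automatic guarantee, though: a generator trained on real data can still leak information about individuals, so privacy has to be measured rather than assumed.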

- Bias Mitigation: Real-world datasets often reflect societal biases, leading to models that perform poorly or unfairly on under-represented groups. Synthetic data offers a powerful mechanism to generate balanced datasets, explicitly addressing and correcting these imbalances.

- Edge Cases and Anomaly Detection: Rare events, anomalies, or specific failure modes are critical for robust system performance (e.g., autonomous vehicles, industrial fault detection). However, these are, by definition, difficult to collect in sufficient quantities. Synthetic data can generate these crucial "black swan" scenarios.

- Cost and Time of Data Collection: Acquiring, labeling, and cleaning real-world data is often the most expensive and time-consuming part of an AI project. Synthetic data can significantly reduce this overhead.

- Data Augmentation Beyond Simple Transformations: While traditional data augmentation involves simple transformations (rotation, cropping), generative models can create truly novel, yet representative, data points, enhancing model generalization.

The ability to generate high-quality, diverse, and privacy-preserving data on demand is transforming how we approach AI development, making it more accessible, ethical, and robust.

The Evolution of Generative Models for Synthetic Data

The journey of synthetic data generation has been significantly propelled by advancements in generative AI. While early methods relied on statistical modeling, modern approaches leverage deep learning to capture complex data distributions.

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, revolutionized generative modeling. They operate on a fascinating adversarial principle:

- Generator (G): A neural network that takes random noise as input and tries to produce synthetic data that resembles real data.

- Discriminator (D): Another neural network that acts as a critic, trying to distinguish between real data and the synthetic data produced by the Generator.

The two networks are trained together in a zero-sum game, usually by alternating updates between them. The Generator aims to "fool" the Discriminator, while the Discriminator aims to accurately identify fakes. This competition drives both networks to improve, with the Generator eventually learning to produce highly realistic samples.
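
The zero-sum game described above is usually written as a minimax objective. As a brief sketch, with $p_{\text{data}}$ denoting the real data distribution and $p_z$ the noise prior (notation assumed here for illustration):

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The Discriminator pushes $V$ up by scoring real samples near 1 and fakes near 0, while the Generator pushes it down. In practice the Generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.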

Technical Details & Examples:

- Architecture: GANs often use convolutional layers (as in DCGAN) for images, or fully connected layers for tabular data. More advanced GANs such as StyleGAN build on progressive growing (introduced in the earlier Progressive GAN) and add a style-based generator with adaptive instance normalization, which brought a large jump in image realism.

- Conditional GANs (cGANs): Allow for controlled generation by providing additional information (e.g., class labels, text descriptions) to both the Generator and Discriminator. This is crucial for targeted synthetic data generation, such as "generate an image of a cat" or "generate a synthetic patient record for a 45-year-old male."

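- Challenges: GANs are notoriously difficult to train due to mode collapse (the generator producing limited varieties of output) and training instability. Evaluation is also hard because a GAN does not provide a tractable likelihood, so quality is usually judged with proxy metrics such as FID or downstream task performance.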
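
To make the conditioning idea behind cGANs concrete, here is a minimal sketch of one common way to attach a class label to the Generator input: the label is one-hot encoded and concatenated with the noise vector. The function names and the three-class setup are hypothetical, chosen only for illustration; a real cGAN would feed this vector into a neural network and give the Discriminator the same label.

```python
import random


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def conditional_generator_input(label, num_classes, latent_dim, rng):
    """Build a cGAN generator input: random noise followed by the condition."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return noise + one_hot(label, num_classes)


rng = random.Random(0)  # Seeded so the sketch is reproducible
# Hypothetical setup: 4 noise dimensions, 3 classes, requesting class 1
z_conditioned = conditional_generator_input(label=1, num_classes=3, latent_dim=4, rng=rng)
print(len(z_conditioned), z_conditioned[-3:])
```

The last three entries of the vector carry the requested class, so the same noise can be paired with different labels to ask for different kinds of samples.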

Code Snippet (Conceptual GAN training loop):

    # Simplified conceptual training loop for a GAN.
    # Assumes generator, discriminator, both optimizers, criterion (e.g. binary
    # cross-entropy), real_data_loader, num_epochs, and latent_dim are defined elsewhere.
    import torch

    for epoch in range(num_epochs):
        for batch in real_data_loader:
            batch_size = batch.size(0)  # The last batch may be smaller than the rest

            # 1. Train Discriminator
            discriminator_optimizer.zero_grad()

            # Real data
            real_labels = torch.ones(batch_size, 1)
            output_real = discriminator(batch)
            loss_real = criterion(output_real, real_labels)
            loss_real.backward()

            # Fake data
            noise = torch.randn(batch_size, latent_dim)
            fake_data = generator(noise).detach()  # Detach to stop gradients from flowing to Generator
            fake_labels = torch.zeros(batch_size, 1)
            output_fake = discriminator(fake_data)
            loss_fake = criterion(output_fake, fake_labels)
            loss_fake.backward()

            discriminator_optimizer.step()
            d_loss = loss_real + loss_fake

            # 2. Train Generator
            generator_optimizer.zero_grad()
            noise = torch.randn(batch_size, latent_dim)
            fake_data = generator(noise)
            output = discriminator(fake_data)
            g_loss = criterion(output, real_labels)  # Generator wants discriminator to think fakes are real
            g_loss.backward()
            generator_optimizer.step()

        # Report the losses from the last batch of the epoch
        print(f"Epoch {epoch}: D Loss = {d_loss.item()}, G Loss = {g_loss.item()}")

2. Variational Autoencoders (VAEs)

VAEs offer a different, more stable approach to generative modeling. They are built on the principles of autoencoders but introduce a probabilistic twist:

- Encoder: Maps input data to a latent space, but instead of a single point, it learns a distribution (mean and variance) for each input.

- Decoder: Samples from this learned latent distribution and reconstructs the input data.

The VAE loss function combines a reconstruction loss (how well the output matches the input) with a regularization term (Kullback-Leibler divergence) that forces the latent space to conform to a simple prior distribution, typically a standard Gaussian. This regularization ensures that the latent space is continuous and well-structured, allowing for meaningful interpolation and generation of new samples.
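
The two loss terms can be sketched in a few lines of plain Python. This is an illustrative sketch only: it assumes a diagonal Gaussian encoder output (`mu`, `logvar`), uses squared error as the reconstruction term, and the function names and the `beta` weight are hypothetical rather than taken from any particular library.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_reconstructed, mu, logvar, beta=1.0):
    """Squared-error reconstruction loss plus a beta-weighted KL regularizer."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))
    return reconstruction + beta * kl_to_standard_normal(mu, logvar)


rng = random.Random(0)
mu, logvar = [0.5, -0.2], [0.0, -1.0]  # Hypothetical encoder outputs for one input
z = reparameterize(mu, logvar, rng)    # Latent sample that would be fed to the decoder
print(len(z), kl_to_standard_normal(mu, logvar))
```

The KL term is zero only when the encoder outputs exactly the prior (`mu = 0`, `logvar = 0`), which is why it pulls the latent space toward a standard Gaussian while the reconstruction term pulls the other way.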

Technical Details & Examples:

- Strengths: More stable training than GANs, provide an interpretable latent space, excellent for generating diverse samples, and allow for easy interpolation between data points.

- Weaknesses: Generated samples can sometimes appear blurrier or less sharp compared to GANs, especially for complex images.

- Use Cases: Often used for generating synthetic tabular data, where smoothness and diversity are prioritized over photorealism.

3. Diffusion Models (DMs)

Diffusion Models are the current state-of-the-art for image generation, responsible for the breathtaking quality of models like DALL-E 2, Stable Diffusion, and Midjourney. Their mechanism is inspired by non-equilibrium thermodynamics:

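- Forward Diffusion Process: Gradually adds Gaussian noise to an image (or other data) over many steps until it becomes pure noise. This process is fixed in the sense that its noise schedule is set in advance and nothing is learned, but it is stochastic, since fresh noise is sampled at every step.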

- Reverse Diffusion Process: A neural network is trained to learn to reverse this noise-adding process, step by step. Starting from pure noise, it iteratively denoises the input to reconstruct a coherent, realistic data sample.
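
The forward process has a convenient closed form: a sample at any step t can be drawn directly from the original data without simulating every intermediate step. Below is a minimal sketch under assumed settings: a linear schedule from 1e-4 to 0.02 over 1000 steps is a common illustrative choice, and the function names are hypothetical.

```python
import math
import random


def linear_beta_schedule(timesteps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, evenly spaced between the two endpoints."""
    step = (beta_end - beta_start) / (timesteps - 1)
    return [beta_start + i * step for i in range(timesteps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all steps s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Draw x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, eps ~ N(0, 1)."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                        # Toy "data" vector
x_early = q_sample(x0, 10, alpha_bars, rng)  # Still close to x0
x_late = q_sample(x0, 999, alpha_bars, rng)  # Almost pure noise
print(alpha_bars[10], alpha_bars[999])
```

Because `alpha_bars` shrinks toward zero, the signal coefficient fades and the noise coefficient approaches one. The reverse process is trained to undo exactly this corruption.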

Technical Details & Examples:

- Strengths: High sample quality and diversity, stable training, and strong support for conditional generation (e.g., text-to-image). They tend to capture both fine details and global coherence well.

- Weaknesses: Computationally intensive during inference, as they require many sequential steps to generate a single sample. However, research is rapidly improving sampling speed.

- Impact: Diffusion models have overtaken GANs in many synthetic data applications, most clearly for images and audio, and are increasingly being adapted to tabular data.

Code Snippet (Conceptual Diffusion Model Inference):

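    # Simplified conceptual inference loop for a Diffusion Model (DDPM-style sampling).
    # Assumes model(x, t) returns the predicted noise; the linear schedule is illustrative.
    import torch

    @torch.no_grad()
    def generate_sample(model, timesteps, noise_shape, beta_start=1e-4, beta_end=0.02):
        # Noise schedule: beta_t, alpha_t = 1 - beta_t, and the cumulative product alpha_bar_t
        betas = torch.linspace(beta_start, beta_end, timesteps)
        alphas = 1.0 - betas
        alpha_bars = torch.cumprod(alphas, dim=0)

        x = torch.randn(noise_shape)  # Start with pure noise
        for t in reversed(range(timesteps)):
            # Predict the noise added at step t
            predicted_noise = model(x, t)
            # Reverse diffusion step: remove the scaled predicted noise, then rescale
            x = (x - betas[t] / torch.sqrt(1.0 - alpha_bars[t]) * predicted_noise) / torch.sqrt(alphas[t])
            # Add fresh Gaussian noise on every step except the last
            if t > 0:
                x = x + torch.sqrt(betas[t]) * torch.randn_like(x)
        return x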

4. Other Notable Models

- Autoregressive Models (e.g., Transformers): Excellent for sequential data like text or time series, generating data element by element based on previous ones.

- Flow-based Models: Learn an invertible transformation from a simple distribution to the complex data distribution, allowing for exact likelihood calculation.
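
Both families can be summarized by the way they write down the data likelihood. As a brief sketch, with notation assumed here for illustration: $x_{<i}$ denotes the elements before position $i$, $f$ is the invertible map from data $x$ to latent $z = f(x)$ (the inverse of the generating direction), and $p_Z$ is the simple base density.

```latex
% Autoregressive models: factorize the joint distribution element by element
p(x) = \prod_{i=1}^{n} p\big(x_i \mid x_{<i}\big)

% Flow-based models: exact log-likelihood via the change-of-variables formula
\log p_X(x) = \log p_Z\big(f(x)\big)
  + \log \left| \det \frac{\partial f(x)}{\partial x} \right|
```

The Jacobian determinant term is why flow architectures are designed around transformations whose determinants are cheap to compute.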

Evaluating Synthetic Data: Beyond Visual Inspection

Generating synthetic data is only half the battle; ensuring its utility and fidelity is crucial. Evaluation metrics are evolving to be more rigorous:

- Fidelity: How closely does the synthetic data resemble the real data in terms of statistical properties, distribution, and visual/semantic quality?

  - Metrics: FID (Fréchet Inception Distance) for images; for tabular data, statistical tests such as Kolmogorov-Smirnov, divergence measures such as Jensen-Shannon, and side-by-side distribution plots.

- Utility: How well does a model trained on synthetic data perform on real data? This is often the most critical metric.

  - Metrics: Downstream task performance in a train-on-synthetic, test-on-real setup (e.g., accuracy of a classifier trained on synthetic data and tested on real data), R-squared for regression models.

- Diversity: Does the synthetic data cover the full range of variations present in the real data, avoiding mode collapse?

  - Metrics: Coverage metrics, visual inspection for variety.

- Privacy: Does the synthetic data inadvertently reveal sensitive information from the real data?

  - Metrics: Membership inference attacks, differential privacy guarantees.
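
Two of the fidelity checks named above are simple enough to sketch with the standard library alone. The code below is illustrative rather than production-grade: it computes the two-sample Kolmogorov-Smirnov statistic for a numeric column and the Jensen-Shannon divergence for a categorical column. The function names and toy data are assumptions; in practice a statistics library would also supply p-values.

```python
import math
import random
from bisect import bisect_right
from collections import Counter


def ks_statistic(real, synthetic):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    real_sorted, synth_sorted = sorted(real), sorted(synthetic)
    gap = 0.0
    for value in real_sorted + synth_sorted:
        cdf_real = bisect_right(real_sorted, value) / len(real_sorted)
        cdf_synth = bisect_right(synth_sorted, value) / len(synth_sorted)
        gap = max(gap, abs(cdf_real - cdf_synth))
    return gap


def js_divergence(real, synthetic):
    """Jensen-Shannon divergence (log base 2, so in [0, 1]) between category frequencies."""
    p_counts, q_counts = Counter(real), Counter(synthetic)
    total = 0.0
    for category in set(p_counts) | set(q_counts):
        p = p_counts[category] / len(real)
        q = q_counts[category] / len(synthetic)
        m = 0.5 * (p + q)
        if p > 0:
            total += 0.5 * p * math.log2(p / m)
        if q > 0:
            total += 0.5 * q * math.log2(q / m)
    return total


rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(500)]      # Toy "real" numeric column
faithful = [rng.gauss(0.0, 1.0) for _ in range(500)]  # Synthetic column, same distribution
shifted = [rng.gauss(2.0, 1.0) for _ in range(500)]   # Synthetic column with a shifted mean
print(ks_statistic(real, faithful), ks_statistic(real, shifted))
```

Lower values mean the synthetic column tracks the real one more closely. Per-column checks like these say nothing about correlations between columns, so they complement rather than replace the utility and privacy metrics.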

Practical Applications and Use Cases

The impact of synthetic data generation spans across virtually every industry:

Healthcare:

- Privacy-Preserving Research: Generating synthetic patient records (demographics, diagnoses, treatments) for drug discovery, clinical trials, and epidemiological studies without exposing real patient identities.

- Medical Imaging: Creating synthetic X-rays, MRIs, or CT scans, especially for rare diseases, to augment datasets for diagnostic AI models.

- Drug Discovery: Simulating molecular structures or protein interactions to accelerate drug development.

Finance:

- Fraud Detection: Generating synthetic transaction data, including rare fraud patterns, to train more robust fraud detection systems.

- Credit Scoring: Creating synthetic customer profiles for credit risk modeling, adhering to strict privacy regulations.

- Market Simulation: Simulating stock market movements or financial instrument behavior for algorithmic trading strategy development.

Autonomous Vehicles:

- Scenario Generation: Creating diverse synthetic driving environments, including hazardous weather conditions, rare pedestrian behaviors, or unusual road incidents, to train and test self-driving algorithms safely and efficiently.

- Sensor Data Simulation: Generating synthetic LiDAR, radar, and camera data to complement real-world sensor streams.

E-commerce & Retail:

- Product Catalog Expansion: Generating synthetic product images for different variations (colors, textures, angles) without needing extensive photo shoots.

- Recommendation Systems: Creating synthetic customer behavior data (browsing history, purchases) to train personalized recommendation engines.

- Demand Forecasting: Simulating various market conditions and customer responses to predict demand for new products.

Manufacturing & Industrial IoT:

- Anomaly Detection: Generating synthetic sensor data that includes rare equipment failures or operational anomalies, crucial for predictive maintenance.

- Process Optimization: Simulating production line variations to identify bottlenecks and optimize manufacturing processes.

Cybersecurity:

- Intrusion Detection: Generating synthetic network traffic with embedded attack patterns to train and evaluate intrusion detection systems.

- Malware Analysis: Creating synthetic malware samples or phishing emails for security research and testing without risking real systems.

Fairness & Bias Mitigation:

- Debiasing Datasets: Identifying under-represented groups in real data and generating synthetic samples for those groups to create balanced datasets, leading to more equitable AI models. For example, generating synthetic images of diverse skin tones for facial recognition systems.
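
The first step of this workflow, working out how many synthetic samples each group needs, can be sketched as follows. The group labels are hypothetical, and balancing every group up to the size of the largest one is just one possible target. The resulting counts would then be requested from a conditional generator.

```python
from collections import Counter


def synthetic_samples_needed(group_labels):
    """Return how many synthetic rows each group needs to match the largest group."""
    counts = Counter(group_labels)
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}


# Hypothetical dataset: group A dominates, B and C are under-represented
labels = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
plan = synthetic_samples_needed(labels)
print(plan)
```

Balancing counts alone does not guarantee fairness: the synthetic samples for small groups are only as good as what the generator could learn from the few real examples it saw.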

The Road Ahead: Emerging Trends

The field is dynamic, with several exciting trends shaping its future:

- Conditional Generation Dominance: The ability to generate data based on specific attributes or conditions is becoming standard, making synthetic data highly customizable and targeted.

- Multi-Modal Synthesis: Generating synthetic data that spans multiple modalities (e.g., an image with a corresponding text description, or video with audio) is crucial for complex AI tasks.

- Privacy-Preserving Generative Models: Integrating differential privacy mechanisms directly into generative models to offer provable privacy guarantees for the synthetic output.

- Foundation Models for Synthetic Data: Leveraging large pre-trained generative models (like large language models or text-to-image diffusion models) as powerful bases for fine-tuning to generate highly specific, high-quality synthetic data for niche domains.

- Synthetic Data for Tabular Data: While image generation often grabs headlines, the generation of high-quality synthetic tabular data (e.g., customer databases, financial records) is a massive growth area, with specialized models like CTGAN, TVAE, and adapted diffusion models gaining traction.
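
One widely used way to build differential privacy into generative model training is DP-SGD, where each example's gradient is clipped and Gaussian noise is added before the update. The sketch below shows only that core step in plain Python. The function names, the toy gradients, and the parameter values are assumptions for illustration, and the privacy accounting that turns the noise level into a formal guarantee is not shown.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum them, add Gaussian noise, then average."""
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    dim = len(per_example_grads[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # Noise scale is tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # Toy per-example gradients; the first has norm 5
noisy_update = private_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng)
exact_update = private_gradient(grads, max_norm=1.0, noise_multiplier=0.0, rng=rng)
print(noisy_update, exact_update)
```

Clipping bounds how much any single record can influence the update, and the noise hides what influence remains. The cost is usually some loss in sample quality.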

Conclusion

Generative AI for Synthetic Data Generation is no longer a futuristic concept; it's a present-day necessity. It directly addresses some of the most pressing challenges in AI development: data access, privacy, bias, and cost. By leveraging the power of models like GANs, VAEs, and especially Diffusion Models, practitioners can unlock unprecedented opportunities to build more robust, ethical, and performant AI systems. For enthusiasts, it offers a fascinating intersection of cutting-edge AI research and tangible, real-world impact. As data continues to be the fuel for AI, synthetic data will be the engine that keeps it running, efficiently and responsibly.