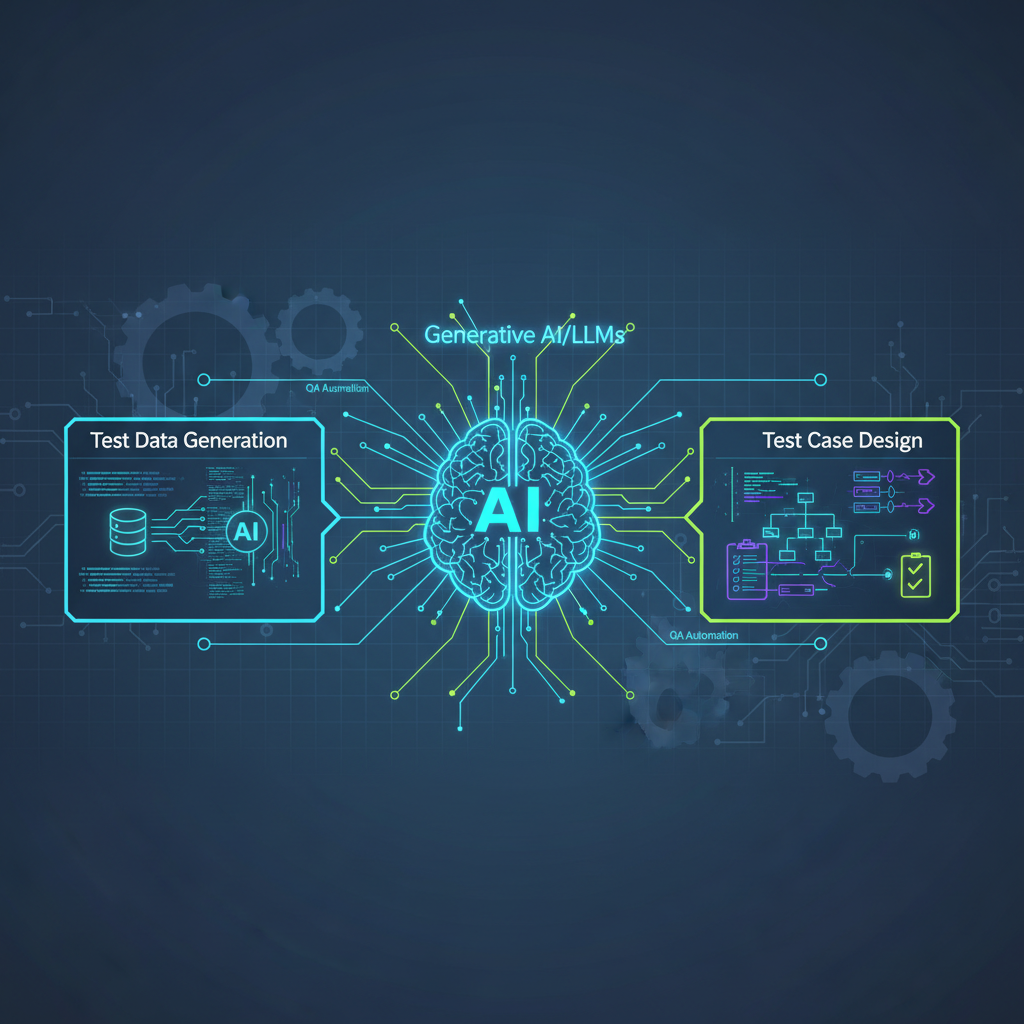

Generative AI in QA: Revolutionizing Test Data and Case Generation

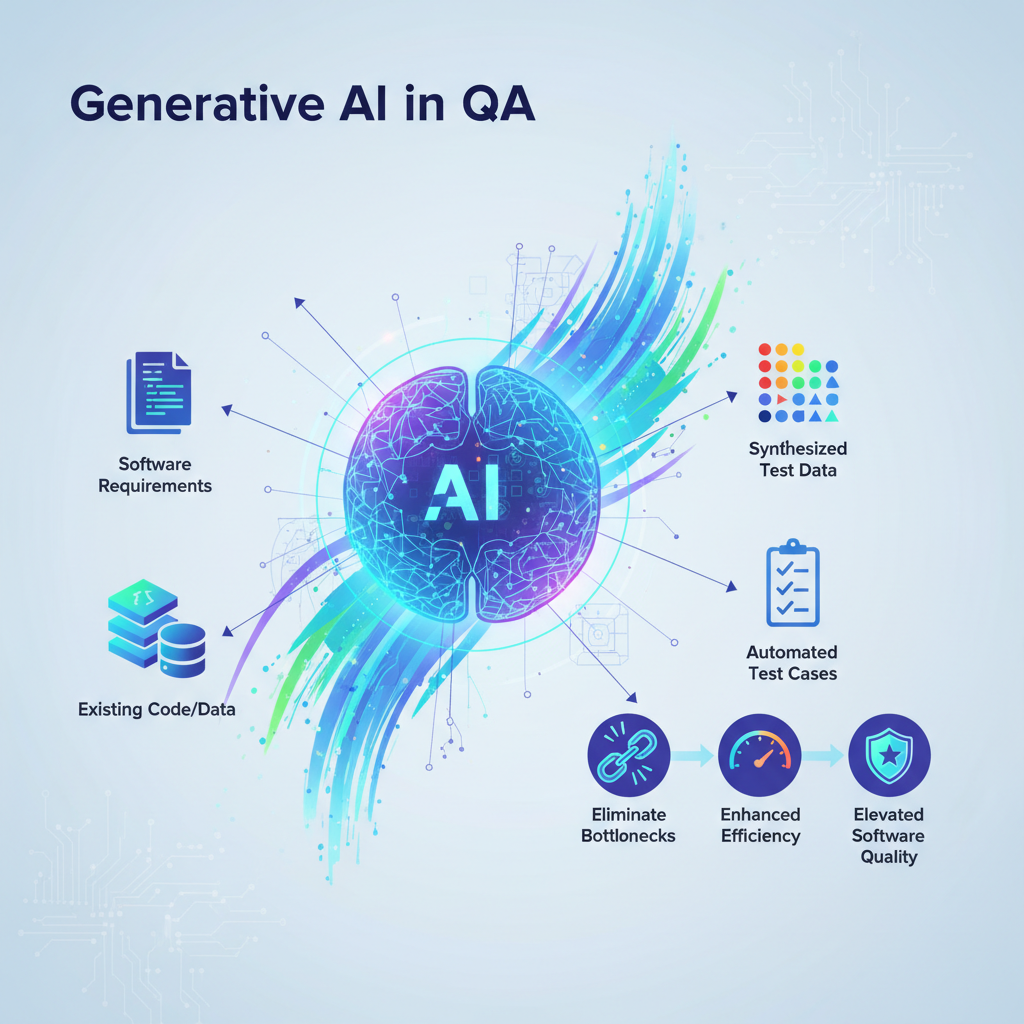

Discover how Generative AI, particularly Large Language Models (LLMs), is transforming Quality Assurance by automating the creation of realistic test data and comprehensive test cases. This innovation promises to eliminate traditional bottlenecks, enhance efficiency, and elevate software quality in the development lifecycle.

The landscape of software development is in perpetual motion, driven by demand for faster delivery and higher quality even as systems grow more complex. At the heart of ensuring this quality lie Quality Assurance (QA) and Quality Control (QC), disciplines that have traditionally been resource-intensive and often become bottlenecks in the development lifecycle. Among the most challenging and time-consuming aspects of QA are the creation of realistic test data and the generation of comprehensive test cases. These tasks demand not only meticulous attention to detail but also a deep understanding of system behavior, user interactions, and potential edge cases: a blend of analytical rigor and creative foresight.

Enter Generative AI. The recent explosion of powerful Large Language Models (LLMs) and other sophisticated generative techniques has ushered in a new era, promising to revolutionize these foundational QA activities. No longer are we confined to manual data entry, rigid scripting, or cumbersome anonymization processes. Instead, we can harness AI to synthesize complex, contextually relevant, and even novel scenarios that mirror real-world usage, pushing the boundaries of what automated testing can achieve. This isn't merely an incremental improvement; it's a paradigm shift, transforming QA from a reactive bottleneck into a proactive accelerator for innovation.

The Persistent Challenge of Test Data

Test data is the lifeblood of effective software testing. Without it, even the most meticulously crafted test cases are hollow. Yet, acquiring or creating suitable test data has always been fraught with challenges:

- Manual Creation: Time-consuming and error-prone, and it often fails to cover diverse scenarios or edge cases.

- Production Data Anonymization: While realistic, production data is hard to anonymize fully in a way that complies with privacy regulations (such as GDPR and HIPAA). It can also be difficult to modify for specific test scenarios.

- Scripted Generation: Requires significant upfront effort to write and maintain scripts, and often struggles with generating complex, interconnected data sets that reflect real-world relationships.

- Lack of Realism: Simplified or generic test data often misses subtle bugs that only manifest with nuanced, realistic inputs.

- Scalability Issues: As systems grow, the volume and variety of test data required can quickly become unmanageable.

These challenges collectively hinder test coverage, slow down release cycles, and ultimately impact software quality.

Generative AI's Answer: Intelligent Test Data Synthesis (TDS)

Generative AI offers a compelling solution to these long-standing problems by enabling the intelligent synthesis of test data. Instead of merely randomizing values, generative models can learn the underlying distributions, relationships, and constraints of real data to produce statistically similar, yet entirely synthetic, datasets.

1. LLMs for Structured and Unstructured Data

Large Language Models (LLMs) are proving to be incredibly versatile for generating various forms of test data. Given a schema, a data model, or even a natural language description, LLMs can produce highly realistic and contextually appropriate data.

How it works:

- Schema-driven Generation: Provide an LLM with a JSON Schema, OpenAPI specification, or a database schema, and it can generate valid data instances. It understands data types, constraints (e.g., `min_length`, `max_value`, `enum`), and relationships between fields.

- Natural Language Descriptions: Describe the desired data characteristics in plain English. For example, "Generate 10 customer profiles for an e-commerce site, including names, email addresses, realistic shipping addresses in different US states, and purchase histories for electronics." The LLM can infer common patterns and generate diverse, plausible entries.

- Contextual Inference: LLMs can infer relationships. If a `user_type` is 'premium', it might generate `discount_rate` values that are higher, or `support_tier` as 'priority'.

Example: Generating Customer Profiles with an LLM

Let's say we need test data for a new user registration flow. We want diverse users, some with specific characteristics.

    {
      "schema": {
        "type": "object",
        "properties": {
          "id": {"type": "string", "format": "uuid"},
          "firstName": {"type": "string", "description": "Common first name"},
          "lastName": {"type": "string", "description": "Common last name"},
          "email": {"type": "string", "format": "email"},
          "phoneNumber": {"type": "string", "pattern": "^\\+?[1-9]\\d{1,14}$", "description": "E.164 format"},
          "address": {
            "type": "object",
            "properties": {
              "street": {"type": "string"},
              "city": {"type": "string"},
              "state": {"type": "string", "enum": ["NY", "CA", "TX", "FL", "IL"]},
              "zipCode": {"type": "string", "pattern": "^\\d{5}(-\\d{4})?$"}
            },
            "required": ["street", "city", "state", "zipCode"]
          },
          "registrationDate": {"type": "string", "format": "date-time"},
          "userType": {"type": "string", "enum": ["standard", "premium", "admin"], "default": "standard"},
          "lastLogin": {"type": "string", "format": "date-time", "nullable": true}
        },
        "required": ["id", "firstName", "lastName", "email", "registrationDate", "userType"]
      },
      "instructions": "Generate 5 diverse user profiles. Ensure one user is 'premium' and registered within the last month. Another user should have an invalid email format. Include a user from California and one from New York. Vary the lastLogin dates, some null."
    }

An LLM given this input can produce JSON objects that follow the schema and instructions, including the requested edge case of an invalid email (which deliberately violates the schema's email format). This kind of controlled yet varied generation is what makes LLMs attractive for test data.
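Because generated output can drift from the schema, it helps to check each profile before using it. The following is a minimal sketch using only the standard library; the patterns and enums are copied from the schema above, while `check_profile` and the loose email regex are illustrative assumptions rather than a complete JSON Schema validator.

```python
import re

# Patterns and enums copied from the example schema above.
PHONE_RE = re.compile(r"^\+?[1-9]\d{1,14}$")
ZIP_RE = re.compile(r"^\d{5}(-\d{4})?$")
STATES = {"NY", "CA", "TX", "FL", "IL"}
USER_TYPES = {"standard", "premium", "admin"}
REQUIRED = ["id", "firstName", "lastName", "email", "registrationDate", "userType"]

# Assumption: a deliberately loose email check, not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def check_profile(profile: dict) -> list[str]:
    """Return a list of human-readable problems found in one generated profile."""
    problems = [f"missing required field: {name}" for name in REQUIRED if name not in profile]
    if "email" in profile and not EMAIL_RE.fullmatch(profile["email"]):
        problems.append("invalid email")
    if "phoneNumber" in profile and not PHONE_RE.fullmatch(profile["phoneNumber"]):
        problems.append("invalid phoneNumber")
    if "userType" in profile and profile["userType"] not in USER_TYPES:
        problems.append("invalid userType")
    address = profile.get("address")
    if address is not None:
        if address.get("state") not in STATES:
            problems.append("invalid state")
        if not ZIP_RE.fullmatch(address.get("zipCode", "")):
            problems.append("invalid zipCode")
    return problems
```

For the instructions in this example, a reasonable acceptance rule would be that exactly one of the five profiles reports `invalid email` and the other four report no problems.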

2. VAEs and GANs for High-Dimensional Data

For more complex data types like images, audio, or time-series data, where simple schema definitions aren't sufficient, Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) shine. These models learn the intricate underlying distributions of real data and can generate new, unseen, but statistically similar data points.

- VAEs: Learn a compressed, latent representation of the input data. By sampling from this latent space and decoding, VAEs can generate new, diverse data points. They are excellent for generating variations of existing data.

- GANs: Consist of two neural networks – a generator and a discriminator – locked in a continuous adversarial game. The generator tries to create realistic data, while the discriminator tries to distinguish real from fake. This competition results in a generator capable of producing highly realistic synthetic data.
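For readers who want the formal picture behind these two descriptions, the standard training objectives from the general literature can be written as follows. The notation is an assumption introduced here: an encoder $q_\phi$, a decoder $p_\theta$, a prior $p(z)$, a generator $G$, and a discriminator $D$.

```latex
% VAE: maximize the evidence lower bound (reconstruction term minus KL regularizer)
\[
\mathcal{L}_{\mathrm{VAE}}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
\]

% GAN: the generator and discriminator play a minimax game
\[
\min_G \max_D \;
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
\]
```

Sampling $z \sim p(z)$ and decoding it is what yields new VAE data points, while the GAN generator is pushed toward samples the discriminator cannot tell apart from real data.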

Use Cases:

- Computer Vision: Generating diverse image sets for testing object detection models under various lighting conditions, occlusions, or with rare objects. This is crucial for testing the robustness and fairness of AI models themselves.

- Audio Processing: Synthesizing different accents, background noises, or speech patterns to test speech recognition systems.

- Time-Series Data: Generating realistic sensor readings, financial market data, or IoT device logs to test anomaly detection systems or predictive maintenance algorithms.

3. Privacy-Preserving Synthesis

One of the most significant benefits of generative AI for test data is its ability to create synthetic data that retains the statistical properties and relationships of real data without copying the original sensitive records. This helps organizations meet stringent privacy regulations such as GDPR, HIPAA, and CCPA while still testing with realistic data.

Because the synthetic data is newly generated rather than a masked or anonymized copy of existing records, it carries far less re-identification risk. Generative models can still memorize and reproduce training examples, however, so generated datasets should be checked for leakage before being treated as privacy-safe.
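A basic sanity check on that claim is to confirm that no sensitive value from the real dataset reappears verbatim in the synthetic one. The sketch below assumes both datasets are lists of dictionaries; `leaked_values` and the field names in the usage note are hypothetical. It detects only exact matches, so it is a first filter and not a privacy guarantee.

```python
def leaked_values(real_rows, synthetic_rows, sensitive_fields):
    """Return (field, value) pairs where a synthetic row repeats a real value exactly."""
    leaks = set()
    for field in sensitive_fields:
        # Collect every real value observed for this sensitive field.
        real_values = {row[field] for row in real_rows if field in row}
        for row in synthetic_rows:
            if field in row and row[field] in real_values:
                leaks.add((field, row[field]))
    return leaks
```

Calling `leaked_values(real, synthetic, ["email", "ssn"])` returns an empty set when nothing was copied; any non-empty result is a reason to regenerate or filter the synthetic rows.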

The Art and Science of Test Case Generation

Beyond data, the creation of test cases themselves is a substantial undertaking. A good test case is a meticulously defined sequence of actions, preconditions, and expected outcomes designed to verify a specific piece of functionality or system behavior. This requires:

- Deep Understanding: Of requirements, user stories, and system architecture.

- Creativity: To envision various user flows, edge cases, and error conditions.

- Precision: To document steps and expected results unambiguously.

- Maintenance: Test cases need to evolve as the software changes.

This process is often manual, repetitive, and prone to human oversight, leading to gaps in test coverage and slower development cycles.

Generative AI's Answer: Intelligent Test Case Generation (TCG)

Generative AI, particularly LLMs, can automate and enhance the creation of test cases, transforming it from a manual craft into an intelligent, assisted process.

1. LLMs for Natural Language Test Cases

LLMs excel at understanding and generating human-readable text, making them ideal for creating test cases from various inputs.

How it works:

- From Requirements/User Stories: Given a user story like "As a registered user, I want to be able to reset my password so I can regain access to my account," an LLM can generate detailed test cases covering positive flows, negative flows (e.g., invalid email, non-existent user), boundary conditions, and security considerations.

- From API Specifications: Provide an OpenAPI (Swagger) definition, and an LLM can generate test cases for each endpoint, covering valid requests, invalid parameters, authentication failures, and various response scenarios.

- From Code Snippets/Documentation: Even with just code or internal documentation, LLMs can infer intended behavior and suggest tests.
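In practice, each of these inputs ends up inside a prompt. As a rough sketch of the requirements-driven case, the helper below assembles one from a user story and a list of coverage categories. The function name and the prompt wording are assumptions for illustration; the call to an actual model is intentionally left out because it depends on the provider.

```python
def build_test_case_prompt(user_story: str, categories: list[str]) -> str:
    """Assemble a prompt asking a model for Gherkin scenarios in each category."""
    lines = [
        "You are a QA engineer. Write Gherkin scenarios for the user story below.",
        f"User story: {user_story}",
        "Cover each of these categories with at least one scenario:",
    ]
    lines += [f"- {category}" for category in categories]
    lines.append("Return only Gherkin text, with no commentary.")
    return "\n".join(lines)
```

Listing the categories explicitly (positive flows, negative flows, boundary conditions, security) makes it easier to verify afterwards that the response covered each one.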

Example: Generating Gherkin Test Cases from a User Story

Consider the user story: "As a customer, I want to be able to add multiple items to my shopping cart so that I can purchase them together."

An LLM could generate:

    Feature: Shopping Cart Functionality

      Scenario: Add a single item to cart
        Given I am on the product details page for "Laptop X"
        When I click "Add to Cart"
        Then "Laptop X" should be added to my cart
        And the cart icon should show "1" item

      Scenario: Add multiple distinct items to cart
        Given I am on the product details page for "Laptop X"
        When I click "Add to Cart"
        And I navigate to the product details page for "Mouse Y"
        And I click "Add to Cart"
        Then "Laptop X" and "Mouse Y" should be in my cart
        And the cart icon should show "2" items

      Scenario: Add the same item multiple times to cart
        Given I am on the product details page for "Laptop X"
        When I click "Add to Cart"
        And I click "Add to Cart"
        Then "Laptop X" should be in my cart with quantity "2"
        And the cart icon should show "2" items

      Scenario: Attempt to add an out-of-stock item
        Given I am on the product details page for "Limited Edition Z" which is out of stock
        When I click "Add to Cart"
        Then I should see an error message "Item is currently out of stock"
        And "Limited Edition Z" should not be added to my cart

      Scenario: Add item with invalid quantity (e.g., zero or negative)
        Given I am on the product details page for "Headphones A"
        When I try to add "0" quantity of "Headphones A" to cart
        Then I should see an error message "Quantity must be at least 1"
        And "Headphones A" should not be added to my cart

This demonstrates the LLM's ability to not only generate the primary flow but also anticipate negative and edge cases, significantly boosting coverage.
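Generated Gherkin is still plain text, so a quick structural check can catch incomplete scenarios before anyone reviews them. The sketch below is a simplified assumption of what such a check might look like: it recognizes only `Scenario:` blocks (not `Scenario Outline` or `Background`), treats scenario names as unique, and `missing_steps` is a hypothetical helper name.

```python
REQUIRED_KEYWORDS = ("Given", "When", "Then")


def missing_steps(feature_text: str) -> dict[str, list[str]]:
    """Map each scenario name to the required step keywords it lacks."""
    seen: dict[str, set[str]] = {}
    current = None
    for raw_line in feature_text.splitlines():
        line = raw_line.strip()
        if line.startswith("Scenario:"):
            # Start tracking a new scenario by its name.
            current = line[len("Scenario:"):].strip()
            seen[current] = set()
        elif current is not None and line:
            # Record the leading keyword of each step line (Given, When, Then, And, ...).
            seen[current].add(line.split(maxsplit=1)[0])
    return {
        name: [keyword for keyword in REQUIRED_KEYWORDS if keyword not in keywords]
        for name, keywords in seen.items()
    }
```

A scenario that maps to an empty list has at least one Given, When, and Then step; anything else is a candidate for regeneration or manual repair.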

2. Test Scenario Exploration

LLMs can go beyond direct translation of requirements into test cases. They can analyze system descriptions, business rules, and even past bug reports to propose diverse and creative test scenarios, including:

- Positive Scenarios: Happy paths, expected user flows.

- Negative Scenarios: Invalid inputs, unauthorized access, error conditions.

- Boundary Conditions: Testing at the limits of input ranges (e.g., minimum/maximum values, empty strings).

- Performance/Load Scenarios: Suggesting types of load tests based on expected user traffic.

- Security Scenarios: Identifying potential vulnerabilities based on system description.

This proactive scenario exploration helps uncover potential issues much earlier in the development cycle.
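Boundary conditions are the easiest of these categories to generate deterministically, which makes them a useful cross-check on what an LLM proposes. A minimal sketch, assuming an inclusive integer range; the function name is hypothetical:

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return values just outside, on, and just inside each end of an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]
    # Deduplicate and sort so narrow ranges do not repeat values.
    return sorted(set(candidates))
```

For a cart quantity field that accepts 1 through 10 (the upper limit is an assumption here), `boundary_values(1, 10)` returns `[0, 1, 2, 9, 10, 11]`, where 0 and 11 should be rejected and the rest accepted.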

3. Automated Test Script Generation

The ultimate goal for many is to move beyond just human-readable test cases to actual executable test scripts. While challenging, this is an active area of research and development. LLMs can generate code snippets or even full test scripts in frameworks like Selenium, Playwright, Cypress, or Appium, given sufficient context.

Example: Generating a Playwright Script

Given the Gherkin scenario: "Add a single item to cart", an LLM could generate:

    from playwright.sync_api import Page, expect

    def test_add_single_item_to_cart(page: Page):
        # Given I am on the product details page for "Laptop X"
        page.goto("https://www.example.com/products/laptop-x")
        expect(page.locator("h1")).to_have_text("Laptop X")

        # When I click "Add to Cart"
        page.locator("button:has-text('Add to Cart')").click()

        # Then "Laptop X" should be added to my cart
        page.locator(".cart-items-list").wait_for()  # Wait for cart to update
        expect(page.locator(".cart-items-list >> text=Laptop X")).to_be_visible()

        # And the cart icon should show "1" item
        expect(page.locator(".cart-icon .item-count")).to_have_text("1")

This requires the LLM to understand not only the test logic but also the syntax and common practices of a specific testing framework and potentially the UI elements of the application under test (though this often requires additional context like UI component libraries or previous UI scans).

Practical Applications & Value for AI Practitioners

The implications of Generative AI in QA are profound, offering tangible benefits across the software development lifecycle:

- Accelerated Test Cycles: The most immediate impact is the drastic reduction in time and effort spent on manual test data and test case creation. This directly translates to faster feedback loops and quicker release cycles.

- Enhanced Test Coverage: By intelligently exploring scenarios and generating diverse data, AI can uncover edge cases and obscure bugs that human testers might miss, leading to more robust and reliable software. This is particularly valuable for complex systems with numerous interdependencies.

- Cost Reduction: Automating these labor-intensive tasks reduces the reliance on expensive manual effort, freeing up human testers to focus on more complex exploratory testing, test strategy, and AI validation.

- Privacy Compliance: Synthetic data generation allows organizations to test thoroughly with realistic data without compromising sensitive user information, critical for industries like healthcare, finance, and government.

- Testing AI Systems (AI for AI): This is a meta-application where generative AI becomes crucial for testing other AI models. For instance:

  - Generating adversarial examples to test the robustness of computer vision models.

  - Creating diverse conversational flows and edge-case queries to test chatbots and NLP models.

  - Synthesizing biased or unbiased datasets to evaluate fairness and mitigate bias in AI systems.

- Shift-Left Testing: Generative AI enables the creation of test data and cases much earlier in the development lifecycle, even before the application is fully built. Developers can use generated data for unit and integration tests, catching defects closer to the source and reducing the cost of fixing them.

- Self-Healing Tests (Future Vision): Imagine a future where AI not only generates tests but also observes system changes, adapts existing tests, and perhaps even suggests code fixes based on test failures. This moves towards a truly autonomous and adaptive testing ecosystem.

Challenges & Future Directions

While the promise is immense, the path to fully autonomous, AI-driven QA is not without its hurdles:

- Hallucinations and Accuracy: Generative models, especially LLMs, can sometimes produce plausible-sounding but incorrect or nonsensical data/test cases. Human oversight and validation remain critical to ensure the generated artifacts are accurate and relevant.

- Controllability and Constraints: Ensuring the generated data or tests strictly adhere to complex business rules, data integrity constraints, and specific testing objectives can be challenging. Advanced prompt engineering, fine-tuning, and integration with formal specification languages are needed.

- Contextual Understanding: While LLMs are powerful, deep domain-specific understanding (e.g., nuances of a highly regulated financial system) is still often required for truly intelligent and comprehensive test generation. Hybrid approaches combining human expertise with AI assistance are likely to prevail.

- Integration Complexity: Integrating generative AI tools into existing QA pipelines, CI/CD workflows, and requirement management systems can be complex, requiring robust APIs and flexible frameworks.

- Ethical Considerations: When generating synthetic data, especially for testing AI systems, it's crucial to ensure fairness and mitigate bias. If the training data for the generative AI itself is biased, it could perpetuate or even amplify those biases in the generated test data, leading to flawed evaluations of the target AI system.

- Explainability: Understanding why an AI generated a particular test case or data point can be difficult. For trust and effective debugging, more transparent and explainable generative models are needed.

- Cost of Inference and Training: Running powerful generative models, especially large LLMs, can be computationally expensive, impacting the economic viability for some organizations.

Future directions will focus on addressing these challenges through:

- Hybrid AI-Human Workflows: Tools that augment human testers rather than fully replacing them, allowing for human guidance and refinement of AI suggestions.

- Specialized Models: Fine-tuning smaller, domain-specific LLMs for particular industries or application types to improve accuracy and reduce computational overhead.

- Advanced Prompt Engineering & Guardrails: Developing more sophisticated techniques to guide generative models and enforce strict constraints.

- Self-Correction and Feedback Loops: Integrating mechanisms where the generative AI learns from human corrections or test execution results to improve future generations.

- Standardization: Developing industry standards for how generative AI integrates with QA tools and processes.
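The self-correction idea in this list can be sketched as a simple retry loop: generate, validate, and feed any problems back into the next prompt. Everything here is an assumption for illustration. `generate` stands in for whatever model call is used, `validate` for any checker that returns a list of problems, and the feedback wording is arbitrary.

```python
from typing import Callable


def generate_with_feedback(
    generate: Callable[[str], str],
    validate: Callable[[str], list[str]],
    prompt: str,
    max_attempts: int = 3,
) -> str:
    """Retry generation, appending validation problems to the prompt each time."""
    current_prompt = prompt
    for _ in range(max_attempts):
        output = generate(current_prompt)
        problems = validate(output)
        if not problems:
            return output
        # Rebuild the prompt from the original plus the latest feedback only.
        feedback = "\n".join(f"- {problem}" for problem in problems)
        current_prompt = f"{prompt}\nFix these problems from the previous attempt:\n{feedback}"
    raise ValueError(f"no valid output after {max_attempts} attempts")
```

Capping the number of attempts keeps inference cost bounded, and raising on failure hands the case back to a human reviewer instead of silently accepting bad output.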

Conclusion

Generative AI for test data synthesis and test case generation is not merely a futuristic concept; it's rapidly maturing into a practical, indispensable tool that is poised to redefine the landscape of software quality assurance. By automating some of the most tedious, time-consuming, and creativity-intensive aspects of testing, it promises to accelerate development cycles, enhance test coverage, reduce costs, and bolster privacy compliance.

For AI practitioners and enthusiasts, this field offers a fertile ground for innovation. Understanding the underlying models – be it the contextual prowess of LLMs or the data distribution learning capabilities of VAEs and GANs – alongside the practical integration strategies, will be a significant competitive advantage. This shift from manually crafting tests to intelligently generating them marks a pivotal moment, promising a future of more efficient, comprehensive, and resilient software development, where quality assurance becomes an enabler of speed and innovation, rather than a brake. The era of intelligent testing is here, and generative AI is leading the charge.