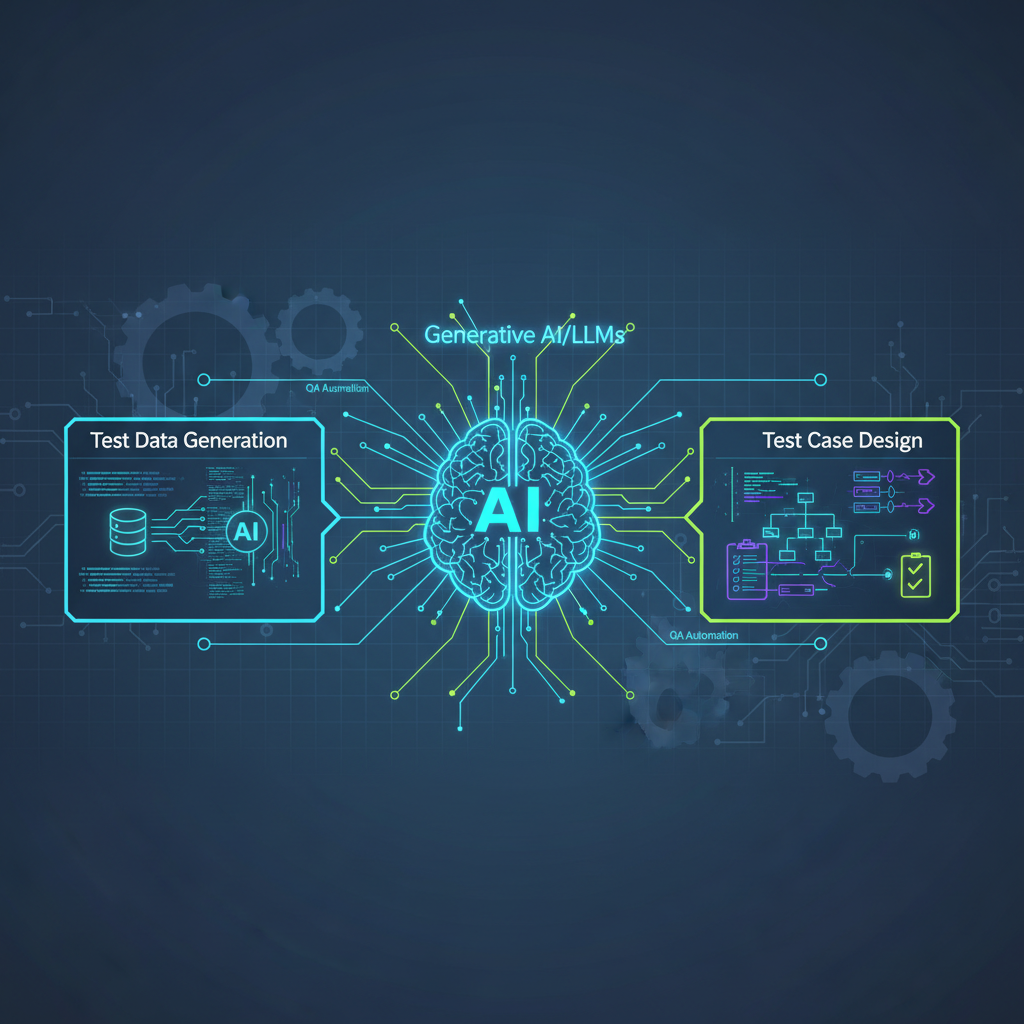

Generative AI in QA: Revolutionizing Test Data and Test Case Generation

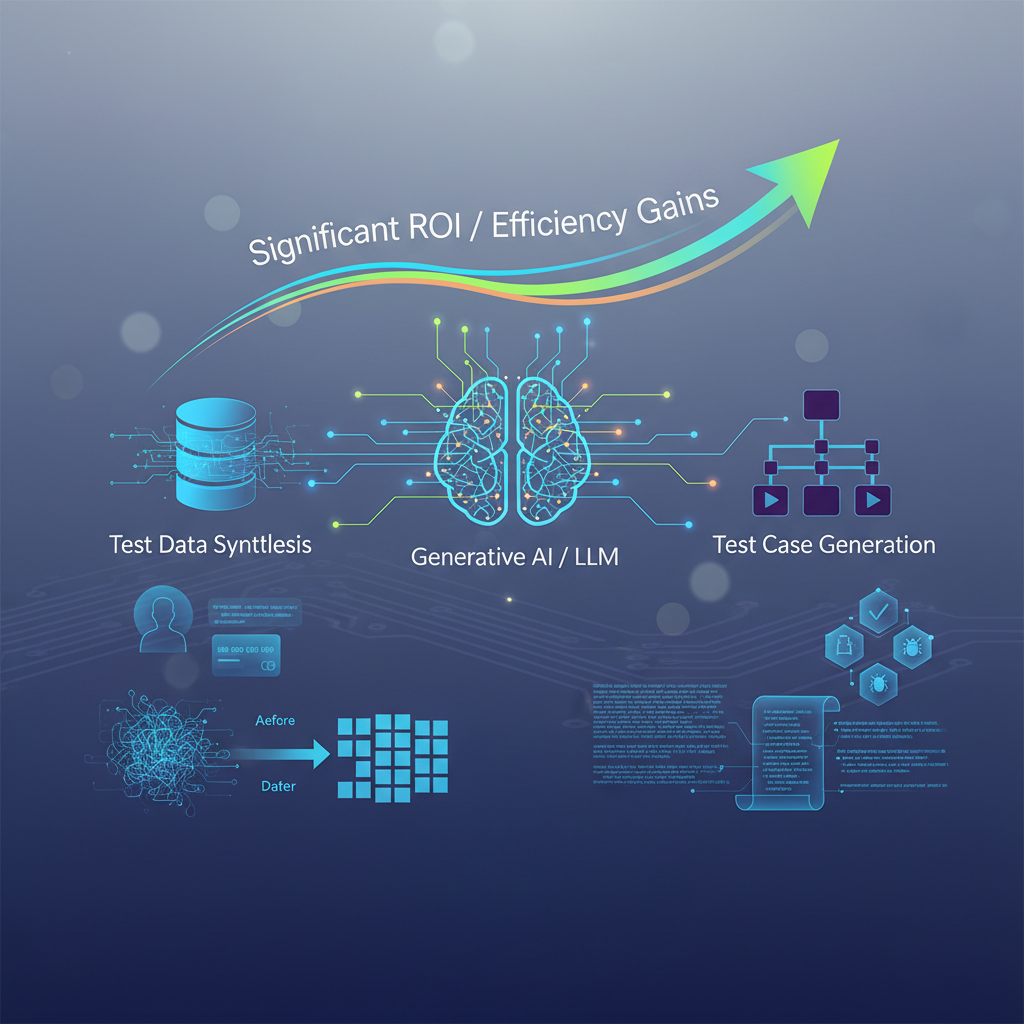

Discover how Generative AI, powered by large language models, is transforming software quality assurance. This paradigm shift offers significant ROI by addressing persistent pain points in test data synthesis and test case generation.

The landscape of software quality assurance (QA) and quality control (QC) is undergoing a profound transformation, driven by the relentless march of artificial intelligence. While AI has long been a valuable ally in areas like defect prediction, intelligent test selection, and UI automation through computer vision, a new frontier is rapidly emerging, promising to redefine how we approach software quality: Generative AI for Test Data Synthesis and Test Case Generation.

This isn't just another incremental improvement; it's a paradigm shift. Leveraging the power of cutting-edge large language models (LLMs) and other generative models, this application of AI directly addresses some of the most persistent and costly pain points in the software development lifecycle. For AI practitioners and enthusiasts, it represents a compelling intersection of advanced AI research with immediate, high-impact practical applications, offering significant return on investment (ROI) potential.

The Core Concept: What It Is and How It Works

At its heart, Generative AI for Test Data Synthesis and Test Case Generation involves employing sophisticated AI models to automatically create the essential ingredients for robust software testing:

- Synthetic Test Data: This refers to artificially generated data that meticulously mimics the statistical properties, patterns, and relationships found in real-world production data, without containing any actual sensitive information. Imagine needing customer profiles, financial transaction records, sensor readings, or log files for testing. Instead of using anonymized production data (which still carries risks) or manually crafting limited datasets, generative AI can conjure up vast, diverse, and realistic synthetic datasets. This can involve:

  - LLMs: Used for generating natural language data like names, addresses, comments, or even complex narratives that fit specific scenarios.

  - Generative Adversarial Networks (GANs): Particularly effective for generating structured data (e.g., tabular data, images, time series) that maintains complex correlations between features. A generator network learns to produce realistic data, while a discriminator network tries to distinguish between real and synthetic data, pushing the generator to improve.

  - Variational Autoencoders (VAEs): Excellent for learning the underlying distribution of complex data and then sampling from that distribution to create new, similar data points.

  - Rule-based Generators augmented by AI: AI can learn the rules and constraints from existing data or specifications and then apply them to generate new data.

- Test Cases/Scenarios: Beyond just data, generative AI can craft the actual instructions for testing. This can range from natural language descriptions of user interactions and expected system behaviors to fully executable test scripts.

  - Natural Language Descriptions: LLMs can take requirements, user stories, or API specifications and translate them into human-readable test scenarios, often in formats like Gherkin (Given/When/Then).

  - Executable Code: More advanced applications can generate code snippets for automated testing frameworks (e.g., Python Selenium scripts, Playwright, Cypress, JUnit tests) based on high-level descriptions or even by analyzing existing application code. This includes positive tests (happy paths), negative tests (invalid inputs, error conditions), edge cases (boundary conditions), and exploratory scenarios.

The magic lies in the models' ability to understand context, learn patterns, and then creatively generate new, relevant artifacts that adhere to specified constraints and objectives.
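To make the idea of "mimicking statistical properties" concrete, here is a minimal sketch in plain Python. It is deliberately far simpler than a GAN or VAE: it only learns the mean and spread of one numeric column and the frequency of one categorical column, then samples new rows from those statistics. The column names (`amount`, `country`), the `SAMPLE` rows, and the helpers `fit_profile` and `synthesize` are all hypothetical, and the columns are sampled independently, so correlations between them are not preserved.

```python
import random
import statistics
from collections import Counter


def fit_profile(rows):
    """Learn simple per-column statistics from example rows (a list of dicts)."""
    amounts = [row["amount"] for row in rows]
    country_counts = Counter(row["country"] for row in rows)
    return {
        "amount_mean": statistics.mean(amounts),
        "amount_stdev": statistics.stdev(amounts),
        "countries": list(country_counts.keys()),
        "country_weights": list(country_counts.values()),
    }


def synthesize(profile, n, seed=0):
    """Sample n synthetic rows from the learned profile (columns are independent)."""
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    rows = []
    for i in range(n):
        # Draw from a normal distribution and clamp to a minimum positive amount.
        amount = max(0.01, rng.gauss(profile["amount_mean"], profile["amount_stdev"]))
        country = rng.choices(profile["countries"], weights=profile["country_weights"])[0]
        rows.append({
            "customer_id": f"SYN-{i:05d}",  # clearly synthetic identifier
            "amount": round(amount, 2),
            "country": country,
        })
    return rows


# Hypothetical example rows standing in for a profiled production sample.
SAMPLE = [
    {"amount": 12.50, "country": "DE"},
    {"amount": 40.00, "country": "DE"},
    {"amount": 7.25, "country": "FR"},
    {"amount": 99.90, "country": "US"},
]

synthetic_rows = synthesize(fit_profile(SAMPLE), n=200)
```

No original record is copied into the output; only aggregate statistics are carried over. A real generative model would replace `fit_profile` and `synthesize` with something that also captures relationships between columns.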

Why Now? The Confluence of Factors Making This Timely and Important

The sudden prominence of generative AI in QA isn't accidental; it's the result of several converging trends and technological breakthroughs:

- The LLM Revolution: The unprecedented capabilities of models like GPT-3/4, LLaMA, Claude, and Gemini have democratized sophisticated text generation and understanding. These models can process vast amounts of information, reason about complex relationships, and produce coherent, contextually relevant output, making them ideal for interpreting requirements and generating test artifacts.

- Escalating Data Privacy Regulations: With GDPR, CCPA, and similar regulations worldwide, using production data for testing has become a legal and ethical minefield. Synthetic data offers a privacy-by-design solution, allowing developers and testers to work with realistic data without exposing sensitive information or incurring compliance risks.

- The Imperative of "Shift-Left" Testing: Modern development methodologies emphasize finding and fixing defects as early as possible. Generative AI facilitates this by enabling the creation of test data and cases even before the application is fully built, based on design documents or API contracts. This proactive approach drastically reduces the cost of defect remediation.

- Expanding Test Coverage and Diversity: Human testers, no matter how skilled, are prone to cognitive biases and can overlook obscure edge cases or unusual data combinations. AI can systematically explore a much wider parameter space, generate highly diverse data, and uncover scenarios that humans might not conceive, leading to more comprehensive test coverage.

- Addressing Cost and Time Bottlenecks: Manual test data creation and test case writing are notoriously time-consuming, tedious, and expensive. Automating these processes with generative AI promises significant efficiency gains, freeing up human testers to focus on more complex, exploratory, and value-added activities.

- Tackling Data Scarcity: For entirely new features, greenfield projects, or systems dealing with novel data types, real production data simply doesn't exist. Generative AI can bridge this gap by creating initial datasets that allow development and testing to proceed.

- Complexity of Modern Systems: The proliferation of microservices, intricate API ecosystems, and complex business logic makes manual data and scenario generation increasingly difficult to manage and scale. AI offers a scalable solution for these distributed and interconnected systems.

Recent Developments & Emerging Trends: Pushing the Boundaries

The field is evolving at a breakneck pace, with several exciting developments shaping its future:

- Advanced Contextual Understanding: Modern LLMs can ingest and synthesize information from diverse sources – user stories, API specifications (e.g., OpenAPI/Swagger), design documents, architectural diagrams, and even existing codebases. This allows them to generate test cases and data that are not just syntactically correct but also semantically aligned with the system's intended behavior and business logic.

- Sophisticated Prompt Engineering for Testing: Crafting effective prompts is becoming an art and science. Researchers and practitioners are developing specialized prompt engineering techniques to guide LLMs towards generating specific types of test data (e.g., boundary conditions, invalid inputs, specific data distributions, data with particular statistical properties) and test cases (e.g., performance tests, security tests, accessibility tests). This involves few-shot learning, chain-of-thought prompting, and integrating external tools.

- Seamless CI/CD Integration: The goal is to make generative AI an integral part of the development pipeline. Tools are emerging that allow for on-demand test data and test case generation within CI/CD workflows. Imagine a new pull request triggering an AI model to generate relevant test data and scenarios, which are then automatically executed as part of the build process.

- Quantifying Synthetic Data Quality: A critical area of research is developing robust metrics to assess the "realism," "utility," and "privacy" of synthetic data. This ensures that the generated data accurately reflects the statistical properties and edge cases of real data, is fit for purpose, and doesn't inadvertently leak sensitive information. Techniques involve comparing statistical distributions, comparing the performance of machine learning models trained on real versus synthetic data, and differential privacy analysis.

- Domain-Specific Model Fine-tuning: While general-purpose LLMs are powerful, fine-tuning them on domain-specific testing data (e.g., financial transactions, healthcare records, IoT sensor data, automotive software logs) significantly improves the accuracy, relevance, and nuance of the generated artifacts. This creates specialized "testing copilots" for particular industries.

- Augmenting Self-Healing Tests: Although this use is not purely generative, LLMs are being explored to analyze test failures, pinpoint the root cause, and even suggest or automatically generate fixes for brittle test scripts. This can substantially reduce the maintenance overhead associated with large test suites.

- "Test Oracles" Generation: Beyond just inputs, LLMs can be trained to predict the expected outputs or behaviors of a system for a given set of inputs. This allows the AI to act as a "test oracle," verifying the correctness of the system under test, a notoriously difficult problem in automated testing.

- Code-Generating LLMs for Test Scripts: Models like GitHub Copilot have demonstrated LLMs' ability to generate functional code. This capability is being extended to generate executable test scripts directly from natural language descriptions or user stories, accelerating the creation of automated tests for UI (Selenium, Playwright), API (RestAssured, Postman collections), and unit testing frameworks.
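One of the quality checks mentioned above, comparing statistical distributions, can be sketched with a two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical cumulative distributions of a real column and its synthetic counterpart. The implementation below is a plain-Python illustration, and the three samples are simulated stand-ins rather than real data. In practice a library routine such as `scipy.stats.ks_2samp` would likely be used instead.

```python
import random


def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    if not real or not synthetic:
        raise ValueError("both samples must be non-empty")
    real_sorted = sorted(real)
    syn_sorted = sorted(synthetic)
    n, m = len(real_sorted), len(syn_sorted)
    i = j = 0
    max_gap = 0.0
    while i < n and j < m:
        # Step to the next distinct value present in either sample.
        x = min(real_sorted[i], syn_sorted[j])
        while i < n and real_sorted[i] <= x:
            i += 1
        while j < m and syn_sorted[j] <= x:
            j += 1
        # i / n and j / m are the two empirical CDFs evaluated at x.
        max_gap = max(max_gap, abs(i / n - j / m))
    return max_gap


# Simulated stand-ins: one "real" column and two candidate synthetic columns.
rng = random.Random(42)
real = [rng.gauss(50, 10) for _ in range(500)]
faithful = [rng.gauss(50, 10) for _ in range(500)]  # same distribution
drifted = [rng.gauss(70, 10) for _ in range(500)]   # mean shifted upward

faithful_gap = ks_statistic(real, faithful)
drifted_gap = ks_statistic(real, drifted)
```

A value near 0 means the two columns are distributed similarly; a value near 1 means they barely overlap. This covers only the "realism" of a single column. Utility and privacy need separate checks.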

Practical Applications and Use Cases: Where the Rubber Meets the Road

The theoretical promise of generative AI translates into tangible benefits across various testing disciplines:

- API Testing:

  - Data Generation: An LLM can parse an OpenAPI/Swagger specification and generate diverse JSON or XML payloads for API requests. This includes valid inputs, invalid data types, missing required parameters, malformed structures, and boundary values, ensuring comprehensive coverage of the API contract.

  - Test Case Generation: From the same specification, the AI can generate test cases for different HTTP methods (GET, POST, PUT, DELETE), expected status codes (200 OK, 400 Bad Request, 500 Internal Server Error), authentication scenarios (valid/invalid tokens), and error handling.

  - Example: Given an endpoint `/users/{id}` for a `GET` request, the AI might generate test data for `id=1`, `id=999999` (boundary), `id=abc` (invalid type), and `id=-5` (negative), along with corresponding test cases to verify the response structure and status codes.

- UI Testing:

  - Data Generation: For web forms, the AI can populate fields with realistic user data (names, addresses, emails, phone numbers) for various locales, ensuring internationalization and localization testing. It can also generate data for edge cases like extremely long strings, special characters, or empty required fields.

  - Test Case Generation: From user stories or UI mockups, LLMs can generate Gherkin scenarios (Given/When/Then) describing user interactions (e.g., "Given I am on the login page, When I enter valid credentials, Then I should be redirected to the dashboard").

  - Script Generation: Potentially, the AI could generate Selenium or Playwright code snippets to automate these interactions, such as `driver.findElement(By.id("username")).sendKeys("testuser");`.

- Performance Testing: Generate vast volumes of synthetic user profiles, transaction data, and interaction sequences to simulate realistic load on a system. This allows for early identification of performance bottlenecks without impacting production or relying on limited real data.

- Security Testing: Generate common attack patterns (e.g., SQL injection strings, cross-site scripting (XSS) payloads, directory traversal attempts) as test data or scenarios to probe system vulnerabilities. This can significantly augment traditional penetration testing efforts.

- Data Migration Testing: Create synthetic datasets that precisely mimic the structure and content of a source system, including complex relationships and data types. This allows for thorough validation of data migration scripts and processes before touching live production data.

- Edge Case & Negative Testing: This is where generative AI truly shines. It can automatically generate data and scenarios that specifically target boundary conditions, invalid inputs, and error paths that human testers might overlook due to cognitive biases or lack of time. For example, testing an age input field with `0`, `1`, `120`, `121`, `-1`, and non-numeric values.

- Privacy-Preserving Testing: The most straightforward application: create fully synthetic datasets for all development, staging, and testing environments, removing the need to copy sensitive production data into those environments and supporting compliance with privacy regulations, provided the synthetic data itself is checked for leakage.

- Early-Stage Testing: Generate initial test cases and data from high-level requirements or design documents even before the application is fully built. This allows for early feedback on design flaws, missing requirements, and potential integration issues.
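The age-field example from the edge case bullet above can be written down as a small boundary-value generator. This is a sketch under stated assumptions: the valid range of 0 to 120 is inferred from the example values, and `is_valid_age` is a hypothetical stand-in for the system under test. In an AI-assisted workflow, a model would propose lists like these and a deterministic harness would run them.

```python
def boundary_values(minimum, maximum):
    """Classic boundary-value analysis for an inclusive integer range.

    Returns (value, expected_valid) pairs just outside, on, and just inside
    each edge of the range.
    """
    return [
        (minimum - 1, False),
        (minimum, True),
        (minimum + 1, True),
        (maximum - 1, True),
        (maximum, True),
        (maximum + 1, False),
    ]


def negative_inputs():
    """Non-numeric inputs that should always be rejected."""
    return [("abc", False), ("", False), (None, False), ("12.5", False)]


def is_valid_age(value, minimum=0, maximum=120):
    """Hypothetical validator standing in for the system under test."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return minimum <= value <= maximum


cases = boundary_values(0, 120) + negative_inputs()

# Each entry: (input value, expected validity, whether the check passed).
results = [
    (value, expected, is_valid_age(value) == expected)
    for value, expected in cases
]
```

The same pattern applies to the `/users/{id}` example: swap the range for the valid ID range and compare HTTP status codes instead of a boolean.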

Challenges and Considerations: Navigating the New Frontier

While the potential is immense, generative AI in QA is not without its hurdles:

- "Hallucinations" and Accuracy: LLMs can sometimes generate plausible but factually incorrect or irrelevant data/test cases. This necessitates careful validation and human oversight to ensure the generated artifacts are truly useful and don't introduce false positives or negatives.

- Bias in Training Data: If the underlying LLM's training data contains biases (e.g., gender, racial, or cultural biases), these can be inadvertently propagated into the generated test data or scenarios, leading to blind spots in testing and potentially discriminatory system behavior.

- Complexity of Real-World Constraints: Generating data that adheres to intricate inter-field dependencies, complex business rules, and referential integrity across multiple tables or services remains a significant challenge. For instance, generating a valid order that requires a valid customer ID, existing product IDs, and a payment method with sufficient funds.

- Performance and Cost: Generating large volumes of high-quality synthetic data or complex test suites can be computationally intensive, requiring substantial processing power and incurring costs associated with API calls to commercial LLMs.

- Integration Overhead: Integrating generative AI tools into existing QA frameworks, CI/CD pipelines, and legacy systems requires careful planning, development effort, and potentially significant architectural adjustments.

- Trust and Explainability: Understanding why a model generated a particular test case or data point can be difficult. The black-box nature of some generative models can impact trust, especially when dealing with critical systems. Explainable AI (XAI) techniques are being explored to address this.
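Two of these challenges, real-world constraints and the need to validate generated output, can be illustrated together. The sketch below builds the order example from the constraints bullet in dependency order (customers and products first, then orders that reference them) and then runs an independent checker over the result. All names and fields are hypothetical, and "sufficient funds" is modeled as a simple per-customer `credit_limit`, which is an assumption made for illustration. The checker would work the same way on data produced by an LLM.

```python
import random


def generate_orders(n_customers, n_products, n_orders, seed=0):
    """Generate parents first, then orders that only reference existing rows.

    Assumes n_customers and n_products are at least 1.
    """
    rng = random.Random(seed)
    customers = [
        {"id": f"C{i}", "credit_limit": rng.randint(50, 500)}
        for i in range(n_customers)
    ]
    products = [
        {"id": f"P{i}", "price": rng.randint(1, 40)}
        for i in range(n_products)
    ]
    orders = []
    for i in range(n_orders):
        items = rng.sample(products, k=rng.randint(1, min(3, n_products)))
        total = sum(p["price"] for p in items)
        # Only customers who can afford the basket are eligible.
        eligible = [c for c in customers if c["credit_limit"] >= total]
        if not eligible:
            continue  # drop the order rather than emit an invalid one
        orders.append({
            "id": f"O{i}",
            "customer_id": rng.choice(eligible)["id"],
            "product_ids": [p["id"] for p in items],
            "total": total,
        })
    return customers, products, orders


def find_violations(customers, products, orders):
    """Independently check referential integrity and the funds rule."""
    limits = {c["id"]: c["credit_limit"] for c in customers}
    prices = {p["id"]: p["price"] for p in products}
    errors = []
    for order in orders:
        if order["customer_id"] not in limits:
            errors.append(f"{order['id']}: unknown customer")
            continue
        missing = [pid for pid in order["product_ids"] if pid not in prices]
        if missing:
            errors.append(f"{order['id']}: unknown products {missing}")
            continue
        total = sum(prices[pid] for pid in order["product_ids"])
        if total != order["total"]:
            errors.append(f"{order['id']}: total does not match prices")
        if total > limits[order["customer_id"]]:
            errors.append(f"{order['id']}: exceeds credit limit")
    return errors


customers, products, orders = generate_orders(5, 8, 20)
errors = find_violations(customers, products, orders)
```

Keeping the checker separate from the generator is the point of the design: the generator can be swapped for a model whose output is not trusted, while the rules stay deterministic and reviewable.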

Value for AI Practitioners and Enthusiasts: A Fertile Ground for Innovation

For those deeply involved in AI, this domain offers a rich tapestry of opportunities and challenges:

- Cutting-Edge LLM Application: It's a prime example of applying state-of-the-art generative AI to a real-world, high-impact problem, pushing the boundaries of what LLMs can achieve beyond chatbots and content creation.

- Data Engineering Focus: Success hinges on understanding data distributions, mastering anonymization and synthetic data generation techniques, and developing robust data quality metrics. It's a goldmine for data scientists and engineers.

- Prompt Engineering Mastery: Crafting effective prompts to guide generative models is a critical skill. This domain demands creativity and precision in prompt design to achieve desired test outcomes.

- System Integration Expertise: Building end-to-end solutions requires knowledge of APIs, CI/CD pipelines, and various testing frameworks, blending AI expertise with software engineering best practices.

- Ethical AI Considerations: This field directly confronts crucial ethical considerations like data privacy, bias detection, and fairness in AI-driven development, offering opportunities to build more responsible AI systems.

- Tangible ROI and Business Impact: Unlike some theoretical AI applications, generative AI in QA offers clear, measurable business value through reduced costs, faster time-to-market, improved software quality, and enhanced compliance. It's a domain where AI can directly contribute to the bottom line.

Conclusion

Generative AI for test data synthesis and test case generation is not merely a theoretical concept; it's rapidly transitioning from research labs to practical adoption in enterprises worldwide. It represents a significant leap forward in QA automation, promising to make testing more efficient, comprehensive, and compliant with increasingly stringent regulations.

For AI practitioners and enthusiasts, this field offers an unparalleled opportunity for innovation. It blends advanced natural language processing, deep learning, and data science with the practical demands of software engineering, all aimed at solving critical industry challenges. As models become more sophisticated and integration tools mature, generative AI will undoubtedly become an indispensable component of the modern software development toolkit, ushering in an era of intelligent, autonomous, and highly effective quality assurance. The future of testing is generative, and it's here now.