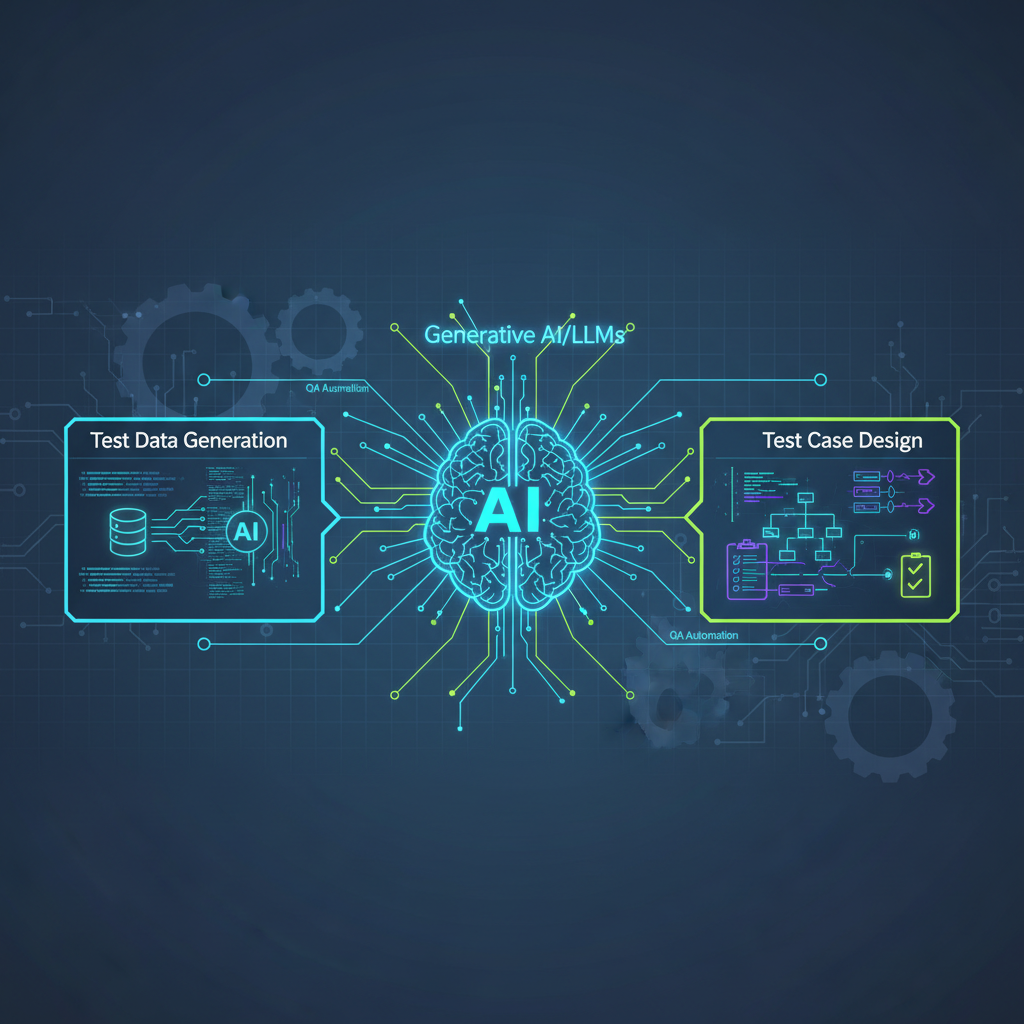

Generative AI in QA: Revolutionizing Test Data Synthesis & Test Case Generation

Discover how Generative AI is transforming Quality Assurance by empowering AI systems to understand, imagine, and create test data and test cases. This paradigm shift moves beyond manual methods, addressing the complexities of modern software development.

The landscape of Quality Assurance (QA) and Quality Control (QC) is undergoing a profound transformation, propelled by the relentless march of Artificial Intelligence. While AI has long been a powerful ally in areas like defect prediction and anomaly detection, a new frontier is emerging that promises to redefine how we approach software testing: Generative AI for Test Data Synthesis and Test Case Generation. This isn't just an incremental improvement; it's a paradigm shift, empowering AI systems to understand, imagine, and create the very artifacts needed to rigorously test complex software.

The Dawn of Creative AI in QA

For decades, test data generation and test case creation have been largely manual, rule-based, or reliant on simple randomization. This process is often tedious, error-prone, and struggles to keep pace with the velocity and complexity of modern software development. Enter generative AI – large language models (LLMs), generative adversarial networks (GANs), variational autoencoders (VAEs), and diffusion models – which are now demonstrating an unprecedented ability to produce novel, realistic, and contextually relevant content.

Imagine an AI that can read your software requirements and instantly draft a suite of comprehensive test cases, complete with preconditions, steps, and expected outcomes. Or an AI that can conjure up millions of synthetic customer records, statistically realistic enough to stand in for real data, to stress-test your systems without ever touching sensitive personal information. This is the promise of generative AI in QA, moving beyond mere analysis to active creation, fundamentally altering the "shift-left" testing paradigm.

Why Now? The Confluence of Need and Capability

Several factors converge to make generative AI in QA not just a fascinating concept, but a timely and impactful necessity:

- Explosive Growth in Generative AI Capabilities: The past few years have witnessed a Cambrian explosion in generative AI. Models like GPT-3/4, Llama, and Gemini have showcased astonishing abilities to understand natural language, generate coherent text, write code, and even produce structured data. This leap in capability makes them ideal candidates for tasks that require contextual understanding and creative output, such as generating test scenarios or synthesizing data.

- Battling Data Scarcity and Privacy Constraints: In regulated industries like healthcare, finance, or government, obtaining sufficient real-world test data is a monumental challenge. Privacy regulations (GDPR, HIPAA, CCPA) severely restrict the use of actual customer data for testing. Generative AI offers a powerful solution by creating synthetic datasets that mimic the statistical properties and distributions of real data without directly copying sensitive, personally identifiable records. Properly validated, this allows robust testing of critical systems while greatly reducing privacy exposure and legal risk.

- Closing the Test Coverage Gap: Manual test case design and data generation are inherently limited. Human testers, no matter how skilled, can only conceive of a finite number of scenarios. This often leads to incomplete test coverage, particularly for obscure edge cases, complex interactions, or rare failure modes. Generative AI, with its ability to explore vast state spaces and identify novel combinations, can significantly expand test coverage, uncovering issues that human-centric approaches might miss.

- Accelerating "Shift-Left" and Agile Development: The philosophy of "shift-left" testing advocates for integrating testing activities earlier in the software development lifecycle. Generative AI perfectly aligns with this. By automating the creation of test assets (data, cases) from early requirements or design documents, it enables testers to begin their work much sooner, reducing bottlenecks and accelerating development cycles. This agility is crucial for modern DevOps and continuous delivery pipelines.

- Taming the Complexity of Modern Systems: Today's software architectures (microservices, complex APIs, highly interactive UIs, and distributed systems) present a combinatorial explosion of inputs and states. Traditional testing struggles to cope with this complexity. Generative AI can intelligently navigate this labyrinth, generating diverse inputs and scenarios tailored to the intricate dependencies and interactions within these systems.

Key Developments and Emerging Trends

The application of generative AI in QA is rapidly evolving, with several key areas showing immense promise:

1. LLM-driven Test Case Generation from Requirements

Concept: This involves feeding natural language requirements (e.g., user stories, functional specifications, design documents) into an LLM and prompting it to generate detailed, executable test cases. The LLM's understanding of context, intent, and common software patterns allows it to infer preconditions, steps, expected outcomes, and even suggest relevant test data parameters.

Practical Application: Imagine a development team using Jira. An LLM could be integrated to automatically parse a user story like: "As a customer, I want to be able to reset my password securely via email, so I can regain access to my account if I forget it." The LLM could then generate a suite of test cases covering:

- Positive Scenario: User enters valid email, receives password reset link, clicks link, sets new password successfully.

- Negative Scenario (Invalid Email): User enters unregistered email, system responds with "email not found" (or, if the product avoids revealing which accounts exist, with a generic confirmation message).

- Negative Scenario (Expired Link): User waits too long to click link, link expires, user is prompted to request a new one.

- Security Scenario: User requests a reset for another user's account (using a known email); the link is sent only to that account's registered address, so the requester cannot take over the account.

This not only saves significant time but also improves traceability between requirements and tests and can broaden scenario coverage, including edge cases that might be overlooked manually.
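To make the idea concrete, here is a minimal Python sketch of the plumbing around such an integration, assuming the model is asked to reply with a JSON array of test cases. The prompt wording, the JSON keys, `build_prompt`, `parse_test_cases` and the canned reply are all hypothetical; no real LLM client is called. The validation step reflects the point that generated cases must be checked before anyone relies on them.

```python
import json
from dataclasses import dataclass

# Hypothetical prompt template; the wording and the JSON schema are assumptions.
PROMPT_TEMPLATE = """You are a QA engineer. From the user story below, write test cases
as a JSON array. Each element must have the keys "title", "type"
("positive", "negative" or "security"), "preconditions" (list of strings),
"steps" (list of strings) and "expected" (string).

User story:
{story}
"""

REQUIRED_KEYS = {"title", "type", "preconditions", "steps", "expected"}
ALLOWED_TYPES = {"positive", "negative", "security"}


@dataclass
class TestCase:
    title: str
    type: str
    preconditions: list
    steps: list
    expected: str


def build_prompt(story: str) -> str:
    """Insert the user story into the prompt template."""
    return PROMPT_TEMPLATE.format(story=story.strip())


def parse_test_cases(raw: str) -> list:
    """Parse the model's JSON reply and reject cases that miss required structure."""
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of test cases")
    cases = []
    for i, item in enumerate(data):
        if not isinstance(item, dict):
            raise ValueError(f"case {i} is not a JSON object")
        missing = REQUIRED_KEYS - set(item)
        if missing:
            raise ValueError(f"case {i} is missing keys: {sorted(missing)}")
        if item["type"] not in ALLOWED_TYPES:
            raise ValueError(f"case {i} has unknown type {item['type']!r}")
        if not item["steps"]:
            raise ValueError(f"case {i} has no steps")
        cases.append(TestCase(**{key: item[key] for key in REQUIRED_KEYS}))
    return cases


# Stand-in for a real model reply; in practice this string would come from an LLM client.
CANNED_REPLY = json.dumps([
    {
        "title": "Registered email receives a working reset link",
        "type": "positive",
        "preconditions": ["An account exists for alice@example.com"],
        "steps": ["Open 'Forgot password'", "Submit alice@example.com",
                  "Open the emailed link", "Set a new valid password"],
        "expected": "Password is changed and the user can log in with it",
    },
    {
        "title": "Expired reset link is rejected",
        "type": "negative",
        "preconditions": ["A reset link was issued longer ago than the expiry window"],
        "steps": ["Open the expired link"],
        "expected": "User is told the link expired and is offered a new one",
    },
])

story = ("As a customer, I want to be able to reset my password securely via email, "
         "so I can regain access to my account if I forget it.")
prompt = build_prompt(story)
for case in parse_test_cases(CANNED_REPLY):
    print(f"[{case.type}] {case.title}")
```

Rejecting malformed replies outright, rather than silently repairing them, keeps a human reviewer in the loop whenever the model drifts from the requested format.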

2. Synthetic Data Generation for Privacy-Preserving Testing

Concept: This is arguably one of the most impactful applications. Generative models like GANs, VAEs, and diffusion models are trained on real, sensitive datasets (e.g., medical records, financial transactions, customer demographics). Rather than memorizing the original records, they are meant to learn the underlying statistical distributions, correlations, and patterns. Once trained, they can generate new, artificial data points that share the statistical properties of the real data without corresponding to actual individuals. Because models can still memorize and leak rare records, synthetic output should be checked (for example, for near-duplicates of training rows) before it is treated as privacy-safe.

Practical Application:

- Healthcare: A GAN trained on anonymized patient records could generate synthetic patient data (demographics, diagnoses, treatment histories, lab results) for testing new AI models designed for disease prediction or personalized treatment plans, while minimizing exposure of protected health information covered by HIPAA.

- Finance: Banks can use synthetic transaction data to test fraud detection algorithms, anti-money laundering systems, or credit scoring models. This allows them to simulate rare fraud patterns or market conditions that are difficult to reproduce with real data, while adhering to strict financial regulations.

- E-commerce: Generating synthetic user profiles and purchase histories to test recommendation engines or targeted advertising algorithms without using real customer behavior data.

This approach is critical for industries where data privacy is paramount, enabling robust testing that was previously impossible or prohibitively risky.

3. Fuzz Testing with Generative AI

Concept: Fuzz testing traditionally involves feeding a program with large amounts of semi-random data to uncover crashes, vulnerabilities, or unexpected behavior. Generative AI elevates this by creating "smart" or "grammar-aware" fuzzed inputs. Instead of purely random bytes, an LLM or a specialized generative model can produce inputs that are syntactically valid but semantically unusual, or malformed in ways known to exploit common vulnerabilities.

Practical Application:

- API Security: An LLM could generate variations of SQL injection attempts, cross-site scripting (XSS) payloads, or command injection strings, tailored to the context of a specific API endpoint. For example, if an API expects a JSON payload, the LLM could generate JSON with deeply nested structures, unexpected data types, or excessively long strings to test parsing robustness.

- Protocol Fuzzing: For network protocols, a generative model could produce packets that conform to the protocol's structure but contain unusual values or invalid sequences, potentially uncovering vulnerabilities in network stacks or device firmware.

- File Format Parsers: Generating malformed but plausible image, document, or archive files to test the robustness of applications that process these formats.

This "intelligent fuzzing" can significantly increase the likelihood of finding critical bugs and security flaws compared to purely random fuzzing, because more of its inputs get past the parser's initial checks and reach deeper application logic.
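A structure-aware mutator shows what "syntactically valid but semantically unusual" means in practice. In this hedged sketch the mutation strategies are hand-written rules standing in for inputs a generative model might propose; `fuzz_payloads`, `parse_order` (a toy system under test) and `run_fuzz` are hypothetical names. Every payload stays valid JSON, so it reaches the application logic instead of being rejected by the parser.

```python
import json
import random


# Mutation strategies: each maps an original field value to an unusual replacement.
def long_string(_value, rng):
    return "A" * rng.choice([1_000, 100_000])


def wrong_type(value, rng):
    candidates = [None, True, 0, -1, 2**63, 1e308, "", [], {}]
    return rng.choice([c for c in candidates if type(c) is not type(value)])


def deep_nesting(value, rng):
    for _ in range(rng.randint(50, 200)):
        value = [value]
    return value


def injection_string(_value, rng):
    return rng.choice(["' OR '1'='1", "<script>alert(1)</script>", "; cat /etc/passwd"])


STRATEGIES = [long_string, wrong_type, deep_nesting, injection_string]


def fuzz_payloads(seed_payload, n, seed=0):
    """Yield n JSON bodies, each with one randomly chosen top-level field mutated."""
    rng = random.Random(seed)
    keys = list(seed_payload)
    for _ in range(n):
        payload = dict(seed_payload)
        key = rng.choice(keys)
        payload[key] = rng.choice(STRATEGIES)(payload[key], rng)
        yield json.dumps(payload)


def parse_order(body):
    """Toy system under test: validates an order and returns its quantity."""
    order = json.loads(body)
    if not order["item"].strip():  # bug: assumes "item" is always a string
        raise ValueError("empty item")
    if not isinstance(order["qty"], int) or order["qty"] <= 0:
        raise ValueError("invalid quantity")
    return order["qty"]


def run_fuzz(target, seed_payload, n=200):
    """Run the target on fuzzed bodies; clean ValueErrors count as correct rejections."""
    findings = []
    for body in fuzz_payloads(seed_payload, n):
        try:
            target(body)
        except ValueError:
            pass
        except Exception as exc:  # any other exception is a robustness finding
            findings.append((type(exc).__name__, body[:60]))
    return findings


for name, snippet in run_fuzz(parse_order, {"item": "book", "qty": 2})[:3]:
    print(name, snippet)
```

Here the fuzzer surfaces `AttributeError` whenever `item` arrives as a non-string, a crash that purely random bytes would rarely trigger because they would fail JSON parsing first.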

4. Automated UI Test Data and Scenario Generation

Concept: Generative models can analyze user interface designs (e.g., Figma files, Sketch designs, or even rendered HTML/CSS) or user interaction logs to infer possible user flows and generate corresponding test data for UI elements. This includes populating form fields, selecting dropdown options, or defining sequences of user actions.

Practical Application:

- Web Forms: For a complex registration form with numerous fields and validation rules, a generative model could create thousands of unique input combinations, including valid, invalid, boundary, and edge cases (e.g., extremely long names, special characters in addresses, dates far in the past/future).

- User Journeys: By analyzing common navigation patterns or specified user stories, an AI could generate sequences of UI interactions that simulate different user journeys through an application, ensuring all paths are thoroughly tested.

- Responsive Design: Generating data that tests how UI elements behave under various screen sizes, orientations, and input methods (touch, keyboard, mouse).

This capability helps make UI testing far more comprehensive, catching layout issues, validation bugs, and usability problems under a wide range of conditions.
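For the web-form case, the classic boundary-value idea can be sketched as follows, assuming each text field is described only by a minimum and maximum length. The `TextField` spec, the special-character sample and the acceptance rule (length only) are illustrative assumptions; a generative model would typically be asked to propose richer, domain-specific cases on top of such a baseline.

```python
import itertools
from dataclasses import dataclass

# Quotes, accents, CJK and HTML-significant characters that often trip up validation or rendering.
SPECIAL = "Ñ'-é 李<>&\""


@dataclass
class TextField:
    name: str
    min_len: int
    max_len: int


def special_chars(length):
    """Repeat the special-character sample to exactly `length` characters."""
    return (SPECIAL * (length // len(SPECIAL) + 1))[:length]


def boundary_values(field):
    """Return (value, expected_valid) pairs around the field's length limits."""
    cases = [
        ("a" * field.min_len, True),
        ("a" * field.max_len, True),
        ("a" * (field.max_len + 1), False),
        (special_chars(field.max_len), True),  # assumes only length is validated
    ]
    if field.min_len > 0:
        cases.append(("a" * (field.min_len - 1), False))
    return cases


def form_combinations(fields):
    """Cross every field's cases; a row should be accepted only if all its values are valid."""
    per_field = [boundary_values(f) for f in fields]
    for combo in itertools.product(*per_field):
        values = {f.name: value for f, (value, _) in zip(fields, combo)}
        yield values, all(ok for _, ok in combo)


fields = [TextField("first_name", 1, 50), TextField("postcode", 5, 10)]
rows = list(form_combinations(fields))
print(len(rows), "rows,", sum(ok for _, ok in rows), "expected to pass")
```

Two fields with five cases each already yield 25 combinations, and the count grows multiplicatively with every added field, which is why pairwise or sampled combinations are usually used on large real forms.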

5. Code-Aware Test Data Generation

Concept: This advanced technique integrates generative AI with static code analysis, dynamic execution traces, or symbolic execution. The AI doesn't just guess; it understands the internal logic and data structures of the System Under Test (SUT). This allows it to generate test data that specifically targets certain code paths, boundary conditions, error handling routines, or even specific branches of business logic.

Practical Application:

- Unit Testing: For a function that calculates discounts based on various criteria (customer loyalty, purchase volume, promotional codes), a code-aware generative model could identify all possible branches and generate minimal input sets aimed at full branch coverage, including inputs that hit boundary conditions (e.g., minimum purchase for a discount, maximum discount allowed).

- Integration Testing: For a module that processes financial transactions, the AI could analyze the code to understand how different transaction types, amounts, and user roles affect the processing logic, then generate integration test data that exercises all these variations.

- Mutation Testing: Generative AI could be used to create mutation operators or to generate test data that specifically "kills" mutants, further enhancing the effectiveness of mutation testing.

This level of intelligence in data generation moves beyond surface-level testing, probing the deeper logic of the application.
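A very small slice of code awareness can be shown with Python's `ast` module: read the constants that a function compares its parameters against, then test just below, at and just above each one. This is a simple static heuristic, not symbolic execution or a generative model, and the `discount` function, its thresholds and the helper names are hypothetical. Line tracing with `sys.settrace` then checks which `return` statements the generated inputs reach.

```python
import ast
import sys

# Toy system under test, kept as source text so it can be both analysed and executed.
SUT_SOURCE = """
def discount(total, loyalty_years):
    if total < 50:
        return 0
    if loyalty_years >= 5:
        return min(total * 0.15, 100)
    if total >= 200:
        return total * 0.10
    return total * 0.05
"""


def comparison_constants(source):
    """Collect (parameter, constant) pairs from comparisons such as `total < 50`."""
    pairs = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Compare) and isinstance(node.left, ast.Name):
            for comp in node.comparators:
                if isinstance(comp, ast.Constant) and isinstance(comp.value, (int, float)):
                    pairs.add((node.left.id, comp.value))
    return pairs


def boundary_inputs(source, defaults):
    """For each constant c compared with a parameter, try c - 1, c and c + 1."""
    inputs = []
    for name, c in sorted(comparison_constants(source)):
        for value in (c - 1, c, c + 1):
            inputs.append({**defaults, name: value})
    return inputs


def covered_lines(func, inputs):
    """Record which lines of `func` execute across all inputs, via sys.settrace."""
    lines, code = set(), func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event == "line":
            lines.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        for args in inputs:
            func(**args)
    finally:
        sys.settrace(None)
    return lines


namespace = {}
exec(SUT_SOURCE, namespace)  # compile the toy SUT so the generated inputs can be run
discount = namespace["discount"]
inputs = boundary_inputs(SUT_SOURCE, defaults={"total": 100, "loyalty_years": 1})
print(sorted(covered_lines(discount, inputs)))
```

The nine boundary inputs reach all four `return` statements, while the default input alone reaches only one. The heuristic still misses the cap inside `min(total * 0.15, 100)` because it is not a comparison, which is the kind of gap richer code-aware tools aim to close.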

Navigating the Challenges and Considerations

While the promise is immense, the path to fully realizing generative AI's potential in QA is not without its hurdles:

- Hallucinations and Accuracy: Generative models, especially LLMs, can "hallucinate" – producing plausible but factually incorrect or irrelevant information. This means human oversight and validation of AI-generated test assets remain crucial. A generated test case might look perfect but miss a critical business rule or contain an impossible scenario.

- Bias Amplification: If the training data used for generative models contains biases (e.g., skewed demographic representation, historical prejudices), the synthetic data or test cases they produce can perpetuate or even amplify these biases. This can lead to incomplete or unfair testing, especially for AI systems themselves. Ethical AI practices are paramount.

- Computational Cost: Training and running sophisticated generative models, particularly for large datasets or complex tasks, can be resource-intensive, requiring significant computational power and specialized infrastructure.

- Domain Expertise Integration: While AI can generate, human domain experts are indispensable. They are needed to guide the models, define constraints, provide feedback, and ultimately validate the relevance and effectiveness of the generated outputs. The AI is a powerful assistant, not a replacement for deep domain knowledge.

- Integration Complexity: Integrating these advanced AI capabilities into existing QA pipelines, test management systems, and CI/CD workflows requires significant engineering effort and careful planning.

- Over-reliance and False Sense of Security: There's a risk of blindly trusting AI-generated tests without critical human review. This could lead to a false sense of security, where the AI has generated many tests, but they fail to cover the most critical or subtle bugs.

Practical Insights for AI Practitioners and Enthusiasts

For those looking to dive into this exciting field, here are some actionable insights:

- Start Small and Iterate: Don't aim to automate all test case generation overnight. Begin with well-defined, constrained problems. For example, focus on generating specific types of form data, or creating test cases for a single, well-understood feature, before tackling full end-to-end scenarios.

- Leverage Pre-trained Models: Training large generative models from scratch is prohibitively expensive for most organizations. Instead, leverage powerful existing LLMs (via APIs like OpenAI's GPT or Google's Gemini) and fine-tune them for specific QA tasks. This allows you to benefit from their vast pre-training while tailoring them to your domain.

- Prioritize Evaluation Metrics: How do you know if your AI-generated tests are good? Develop robust metrics to evaluate the quality, diversity, realism, and effectiveness of the generated test data and cases. This includes measures like code coverage, defect detection rate, adherence to requirements, and statistical similarity to real data. This is crucial for building trust and demonstrating value.
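Two of the metrics mentioned above can be computed in a few lines. The sketch below assumes plain Python lists: `ks_statistic` is the two-sample Kolmogorov-Smirnov statistic (the largest gap between two empirical CDFs), a common measure of how closely a synthetic column tracks a real one, and `mutation_score` is the fraction of deliberately injected faults (mutants) that at least one generated input detects, a proxy for defect detection rate. Function names and the toy example are illustrative.

```python
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for x in a + b:
        # Fraction of each sample that is <= x.
        fa = bisect_right(a, x) / len(a)
        fb = bisect_right(b, x) / len(b)
        gap = max(gap, abs(fa - fb))
    return gap


def mutation_score(inputs, original, mutants):
    """Fraction of mutants for which at least one input changes the observed output."""
    killed = sum(any(m(x) != original(x) for x in inputs) for m in mutants)
    return killed / len(mutants)


# Toy example: an age check and three hand-written mutants of its comparison.
def is_adult(age):
    return age >= 18


mutants = [lambda age: age > 18, lambda age: age >= 17, lambda age: age <= 18]
print(ks_statistic([1, 2, 3, 4], [1, 2, 3, 5]))
print(mutation_score([18], is_adult, mutants), mutation_score([17, 18], is_adult, mutants))
```

A KS value near 0 means the two distributions are hard to tell apart; a mutation score that rises from one third to 1.0 when the input 17 is added shows how a single well-chosen boundary value can strengthen a generated test set.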

- Embrace the Human-in-the-Loop Paradigm: Design systems where AI assists human testers and QA engineers, rather than aiming for full replacement. The human-in-the-loop approach is vital for validation, refinement, handling truly novel edge cases, and providing the critical domain expertise that AI currently lacks. Think of AI as a force multiplier for your QA team.

- Champion Ethical AI Practices: Be acutely aware of potential biases in your training data and actively work to mitigate them in the generated outputs. This is especially critical when dealing with sensitive information or testing systems that impact diverse user groups. Implement fairness metrics and bias detection in your evaluation.
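One simple bias check compares how often each group appears in the real and the synthetic data. This sketch, with hypothetical names and an arbitrary 5-percentage-point tolerance, flags representation drift only; it is a starting point, not a full fairness evaluation.

```python
from collections import Counter


def group_shares(rows, key):
    """Share of rows belonging to each value of `key`."""
    counts = Counter(row[key] for row in rows)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def representation_gaps(real_rows, synthetic_rows, key, tolerance=0.05):
    """Groups whose synthetic share differs from their real share by more than `tolerance`."""
    real = group_shares(real_rows, key)
    synthetic = group_shares(synthetic_rows, key)
    gaps = {}
    for group in set(real) | set(synthetic):
        diff = synthetic.get(group, 0.0) - real.get(group, 0.0)
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps


# Hypothetical example: the generator over-produces one region and drops another entirely.
real = [{"region": "north"}] * 40 + [{"region": "south"}] * 40 + [{"region": "west"}] * 20
synthetic = [{"region": "north"}] * 70 + [{"region": "south"}] * 30
print(representation_gaps(real, synthetic, "region"))
```

Groups that vanish from the synthetic data show up with a negative gap equal to their full real share, which makes silently dropped populations easy to spot.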

- Explore Hybrid Approaches: The most effective solutions often combine generative AI with traditional testing techniques. For instance, use generative AI for data synthesis, then execute those tests using existing test automation frameworks (Selenium, Playwright, Cypress). Or use AI to suggest test cases, which are then refined and implemented by human testers. This hybrid approach maximizes benefits while leveraging established tools and processes.

- Stay Informed and Experiment: The field of generative AI is moving at breakneck speed. Continuously follow research, experiment with new models and techniques, and participate in the community. The "best practice" of today might be obsolete tomorrow.

Conclusion: The Future is Generative

Generative AI for test data synthesis and test case generation is no longer a futuristic concept; it's rapidly becoming a practical necessity for organizations striving for higher quality, faster release cycles, and more comprehensive testing in the face of increasingly complex software systems. It represents a fundamental shift from reactive testing to proactive, intelligent test asset creation.

For AI practitioners, this area offers fertile ground for innovation, demanding expertise in natural language processing, deep learning, data science, and software engineering. It's a domain where cutting-edge AI research directly translates into tangible business value. For enthusiasts, it represents a compelling and impactful application of AI that directly addresses critical, long-standing challenges in software development. By embracing these technologies responsibly and strategically, we can unlock unprecedented levels of quality and efficiency in the software we build, ultimately delivering more robust and reliable experiences to users worldwide. The future of QA is not just automated; it's generative.