Revolutionizing QA: Generative AI's Impact on Test Data & Test Case Generation

Explore how Generative AI is transforming Quality Assurance by tackling critical bottlenecks in software development. Learn about its unprecedented capabilities in synthesizing realistic test data and generating comprehensive test cases, leveraging LLMs to redefine QC automation.

Revolutionizing QA: How Generative AI is Reshaping Test Data and Test Case Generation

The landscape of software development is constantly evolving, driven by an insatiable demand for speed, quality, and innovation. Yet, one critical area often struggles to keep pace: Quality Assurance (QA). Manual efforts in creating test data and designing test cases remain significant bottlenecks, slowing down development cycles and often failing to uncover subtle bugs. Enter Generative AI – a transformative force poised to redefine how we approach QA and QC automation, offering unprecedented capabilities in synthesizing realistic test data and generating comprehensive test cases.

This isn't just a theoretical concept; it's a rapidly maturing field leveraging the power of Large Language Models (LLMs) and other generative models to tackle some of the most persistent challenges in software testing. For AI practitioners and enthusiasts, understanding and implementing these techniques is no longer optional but essential for staying at the forefront of intelligent automation.

The Core of the Revolution: What is Generative AI in QA?

At its heart, this domain applies advanced generative AI models to automate and enhance two cornerstone activities of software testing:

-

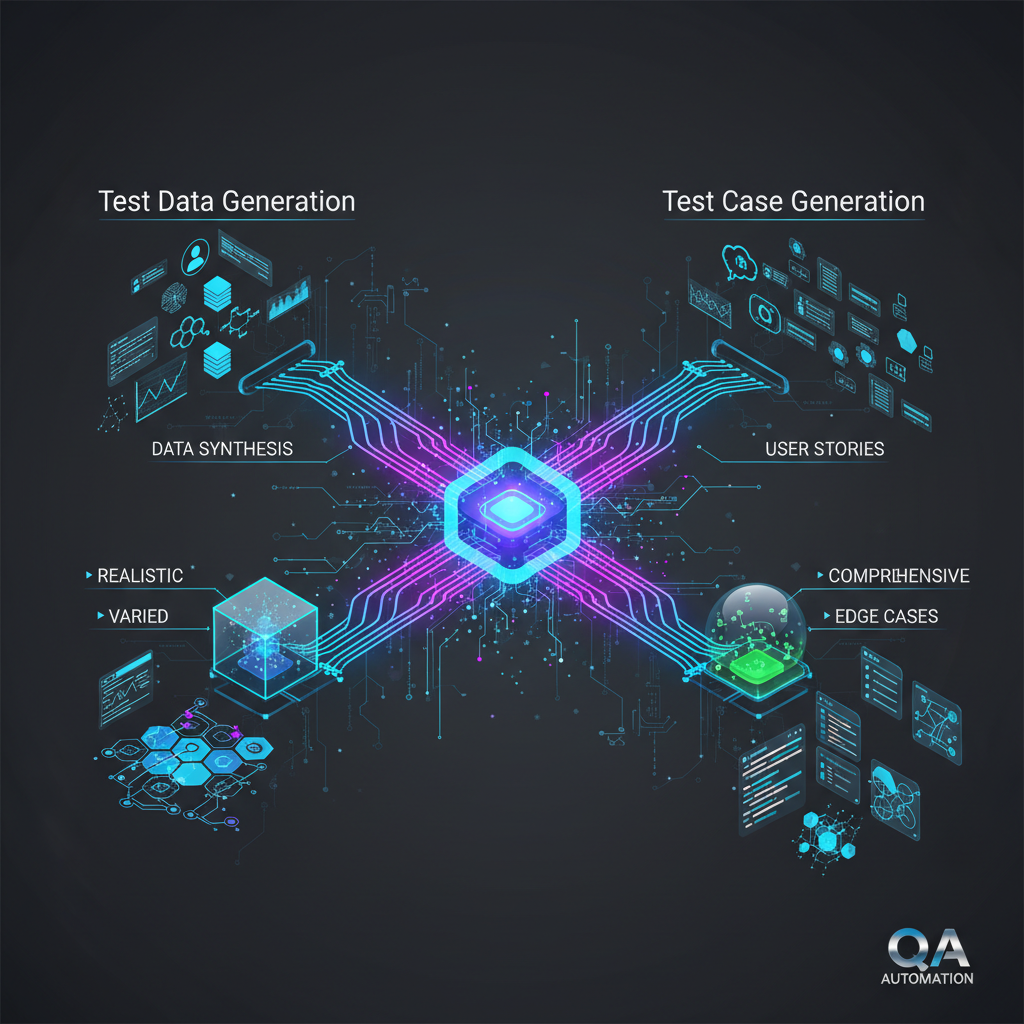

Test Data Synthesis: This involves the automatic creation of diverse, realistic, and relevant test data across various testing phases (unit, integration, system, performance, security). This isn't limited to simple structured data; it extends to complex scenarios involving unstructured text, images, audio, and intricate database relationships. The goal is to mimic real-world data distributions without exposing sensitive information.

-

Test Case Generation: Here, generative AI automatically derives test cases – including inputs, expected outputs, preconditions, postconditions, and step-by-step instructions – from a variety of sources. These sources can range from high-level requirements and user stories to existing codebases or even historical test artifacts. The output can vary from abstract scenario descriptions to fully executable test scripts ready for automation frameworks.

While LLMs like GPT-4, Claude, and Llama are currently leading the charge due to their exceptional capabilities in understanding and generating human-like text and code, other generative models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) also play crucial roles, particularly in synthesizing non-textual data like images or complex tabular datasets.

Why Now? The Timeliness and Impact of Generative AI in QA

The current surge in interest and capability in this area is no accident. Several converging factors make Generative AI for test data and test case generation not just timely, but critical:

- The Generative AI Explosion: The past few years have brought a rapid leap in generative AI capabilities. Powerful LLMs can now follow context, infer complex patterns, and generate coherent, usually syntactically correct, and semantically relevant content at scale. This makes them strong candidates for generating diverse test inputs, expected outputs, and even entire test scripts. Specialized code-generation models amplify this further by producing functional code snippets for test automation.

- Addressing Data Scarcity and Privacy: Obtaining sufficient, diverse, and privacy-compliant test data is a perpetual headache for developers and testers. Real production data is often sensitive, subject to strict regulations (e.g., GDPR, HIPAA), and hard to anonymize effectively. Generative AI offers a powerful alternative: synthetic data that closely mirrors real-world distributions without copying actual records. Because generative models can memorize parts of their training data, that privacy property has to be checked rather than assumed; when it holds, synthetic data is a game-changer for industries like healthcare, finance, and government, where data privacy is paramount.

- Accelerating Shift-Left Testing and DevOps: Modern development methodologies like Agile and DevOps demand rapid feedback loops and continuous integration/delivery (CI/CD). Manual creation of test data and test cases becomes a significant bottleneck that pushes testing into later stages. Generative AI can produce these test assets much earlier in the development lifecycle, enabling true "shift-left" testing, where quality is built in from the start, and helping testing keep pace with rapid development.

- Bridging the Test Automation Pyramid Gap: While unit tests are often well automated, the higher levels of the test automation pyramid (integration, system, and end-to-end tests) still rely heavily on manual effort for scenario definition and data preparation. These tests are complex, requiring intricate data setups and multi-step interactions. Generative AI can bridge this gap by automating the creation of these higher-level test scenarios and their associated data, pushing automation further up the pyramid.

- Enhanced Test Coverage and Diversity: Human testers, often unconsciously, tend to focus on "happy paths" and common scenarios. Generative AI can explore large combinatorial spaces and propose edge cases, negative scenarios, and permutations that human testers might overlook. This can substantially broaden test coverage and surface obscure yet critical bugs. Imagine an AI systematically generating inputs that push system boundaries in ways a human might not conceive.

- Personalization and Context-Aware Testing: Generative models can be fine-tuned on specific application domains, user behaviors, or historical bug data. This allows them to create highly relevant and personalized test data and scenarios that accurately reflect real user interactions and business logic, leading to more effective and targeted testing.

- Towards Self-Healing Tests (Future Outlook): As generative models become even more sophisticated, the vision of "self-healing" tests becomes increasingly plausible. These models could not only generate tests but also adapt them automatically when application UIs or APIs change, significantly reducing the perennial burden of test maintenance, which often consumes a large portion of QA resources.

Practical Applications and Value for AI Practitioners

For AI practitioners, this field offers a rich playground to apply cutting-edge AI techniques to solve tangible, high-impact engineering problems. Here are some practical applications:

1. Automated API Test Generation

Scenario: You have a new microservice with an OpenAPI/Swagger specification, and you need to thoroughly test its endpoints.

Generative AI Approach: An LLM can ingest the OpenAPI specification (which defines endpoints, request/response schemas, parameters, authentication, etc.). Based on this, the LLM can:

- Generate diverse request bodies: For each endpoint, it can create valid JSON/XML payloads, including edge cases (e.g., missing required fields, invalid data types, boundary values for numbers, long strings).

- Derive expected responses: Based on the schema, it can predict expected status codes (200, 400, 401, 500) and even generate sample valid response bodies for validation.

- Create test assertions: It can suggest assertions to validate the structure and content of the response.

- Output executable code: The LLM can generate actual code snippets for popular API testing frameworks (e.g., the Python `requests` library, Java REST Assured, Postman collections, JavaScript with Axios/Fetch).
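To make the edge-case categories above concrete, here is a minimal rule-based sketch (not an LLM) that derives request-body variants from a JSON Schema fragment of the kind an OpenAPI spec contains. The schema, the `VALID` baseline payload, and the function name are hypothetical; a deterministic generator like this can also serve as a baseline to compare LLM suggestions against.

```python
# Hypothetical request-body schema, in the JSON Schema subset OpenAPI uses.
SCHEMA = {
    "type": "object",
    "required": ["name", "age"],
    "properties": {
        "name": {"type": "string", "maxLength": 50},
        "age": {"type": "integer", "minimum": 0, "maximum": 150},
    },
}
VALID = {"name": "Ada", "age": 36}  # a known-good baseline payload (assumed)

# One deliberately wrong value per declared JSON type.
WRONG_TYPE = {"string": 12345, "integer": "not-a-number"}


def edge_case_payloads(schema, valid):
    """Yield (description, payload) pairs, each changing one field of `valid`."""
    for field in schema.get("required", []):
        # Drop one required field at a time.
        yield f"missing required '{field}'", {k: v for k, v in valid.items() if k != field}
    for field, spec in schema.get("properties", {}).items():
        wrong = WRONG_TYPE.get(spec.get("type"))
        if wrong is not None:
            yield f"wrong type for '{field}'", {**valid, field: wrong}
        # Boundary values: on the limit (should pass) and just past it (should fail).
        if "minimum" in spec:
            yield f"'{field}' at minimum", {**valid, field: spec["minimum"]}
            yield f"'{field}' below minimum", {**valid, field: spec["minimum"] - 1}
        if "maximum" in spec:
            yield f"'{field}' at maximum", {**valid, field: spec["maximum"]}
            yield f"'{field}' above maximum", {**valid, field: spec["maximum"] + 1}
        if "maxLength" in spec:
            yield f"'{field}' at maxLength", {**valid, field: "x" * spec["maxLength"]}
            yield f"'{field}' over maxLength", {**valid, field: "x" * (spec["maxLength"] + 1)}


if __name__ == "__main__":
    for description, payload in edge_case_payloads(SCHEMA, VALID):
        print(description, payload)
```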

Example Prompt (simplified):

```text
Generate Python `requests` test cases for the following OpenAPI endpoint:

Path: /users/{id}
Method: GET
Parameters:
  - name: id, in: path, type: integer, required: true, description: User ID
Responses:
  - 200:
      description: User details
      schema:
        type: object
        properties:
          id: {type: integer}
          name: {type: string}
          email: {type: string}
  - 404:
      description: User not found
```

LLM Output (conceptual):

```python
import requests

BASE_URL = "http://api.example.com"  # base URL of the service under test
TIMEOUT = 5  # seconds; keeps the suite from hanging if the service is down


def test_get_user_success():
    user_id = 123  # assumes this user exists in the test environment
    response = requests.get(f"{BASE_URL}/users/{user_id}", timeout=TIMEOUT)
    assert response.status_code == 200
    data = response.json()
    assert data["id"] == user_id
    assert isinstance(data.get("name"), str)
    assert isinstance(data.get("email"), str)


def test_get_user_not_found():
    user_id = 99999  # assumes this ID does not exist
    response = requests.get(f"{BASE_URL}/users/{user_id}", timeout=TIMEOUT)
    assert response.status_code == 404
    # Optionally assert on the error body if its structure is defined in the spec.


def test_get_user_invalid_id_type():
    user_id = "abc"  # violates the integer type declared for the path parameter
    response = requests.get(f"{BASE_URL}/users/{user_id}", timeout=TIMEOUT)
    # The spec does not define a response for this case; APIs commonly return 400 or 404.
    assert response.status_code in (400, 404)
```


Value: This significantly reduces the manual effort and time required to set up comprehensive API tests, which is especially valuable in microservices architectures with many APIs.
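Prompts like the example above can be rendered directly from the spec instead of typed by hand. Below is a minimal sketch, assuming the spec has already been parsed into a Python dict (for example with a YAML or JSON loader) and that parameters follow OpenAPI 3 (`schema: {type: ...}`) or Swagger 2 (`type` on the parameter itself). `build_prompt` is a hypothetical helper, and it lists response descriptions only, not full response schemas.

```python
def build_prompt(path: str, method: str, operation: dict) -> str:
    """Render one OpenAPI operation as a test-generation prompt (hypothetical helper)."""
    lines = [
        "Generate Python `requests` test cases for the following OpenAPI endpoint:",
        "",
        f"Path: {path}",
        f"Method: {method.upper()}",
        "Parameters:",
    ]
    for param in operation.get("parameters", []):
        # OpenAPI 3 nests the type under "schema"; Swagger 2 puts it on the parameter.
        ptype = param.get("type") or param.get("schema", {}).get("type", "unknown")
        required = str(param.get("required", False)).lower()
        lines.append(
            f"  - name: {param['name']}, in: {param['in']}, type: {ptype}, "
            f"required: {required}, description: {param.get('description', '')}"
        )
    lines.append("Responses:")
    for status, response in operation.get("responses", {}).items():
        lines.append(f"  - {status}: {response.get('description', '')}")
    return "\n".join(lines)


# The GET /users/{id} operation from the example prompt, as a parsed dict.
operation = {
    "parameters": [
        {"name": "id", "in": "path", "required": True,
         "description": "User ID", "schema": {"type": "integer"}},
    ],
    "responses": {
        "200": {"description": "User details"},
        "404": {"description": "User not found"},
    },
}

if __name__ == "__main__":
    print(build_prompt("/users/{id}", "get", operation))
```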

2. Synthetic Data Generation for Machine Learning Models

Scenario: An AI team is developing a fraud detection model but has limited access to real fraud data due to privacy and scarcity.

Generative AI Approach:

- GANs or VAEs for Tabular Data: For structured data like financial transactions, GANs or VAEs can learn the underlying distributions and correlations from a small, anonymized real dataset. They can then generate vast quantities of synthetic transactions that statistically resemble real ones, including features like transaction amount, merchant category, time of day, and even subtle indicators of fraudulent activity.

- LLMs for Text Data: For NLP tasks, LLMs can generate synthetic customer reviews, support tickets, or news articles based on specific topics or sentiment. This can augment real datasets, helping to balance class distributions or explore edge cases.

- Diffusion Models for Image Data: For computer vision tasks, diffusion models can generate synthetic images of objects, faces, or medical scans, which can be used to train robust object detection or classification models, particularly when real image data is scarce or requires extensive labeling.

Example: Generating synthetic customer transaction data for a fraud detection model.

The model learns from a sanitized dataset of real transactions (e.g., amount, merchant_category, location, is_fraud). It then generates new, unseen transactions that maintain the statistical properties and relationships, including the rare "fraudulent" patterns.

Value: Helps work around critical data privacy constraints, reduces the high cost and time of data collection and labeling, and enables experimentation with diverse data distributions, which can lead to more robust and generalizable ML models.

3. UI Test Scenario Generation from User Stories

Scenario: A new feature is defined by a user story: "As a registered user, I want to be able to update my profile information (name, email, password) so that my details are always current."

Generative AI Approach: An LLM can parse this natural language user story and:

- Identify key entities and actions: User, profile, update, name, email, password.

- Generate high-level test scenarios:

  - Successful update of all fields.
  - Successful update of a single field.
  - Attempt to update with invalid email format.
  - Attempt to update with password not meeting complexity requirements.
  - Attempt to update with empty required fields.
  - Attempt to update profile as an unauthenticated user.

- Detail steps and expected outcomes: For each scenario, it can outline the UI interactions (e.g., "Navigate to Profile page," "Enter new email in 'Email' field," "Click 'Save' button") and expected outcomes (e.g., "Profile updated successfully message displayed," "Error message for invalid email displayed," "User redirected to login page").

- Suggest data variations: For fields like "name" or "email," it can suggest variations (e.g., very long name, name with special characters, international characters).
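The data-variation idea can be captured as a reusable fixture. The values below are hand-picked examples of the categories just mentioned (long, special-character, and international names, plus malformed and unusual-but-valid emails); the 100-character limit is an assumption, and whether each value should be accepted depends on your application's own validation rules.

```python
def field_variations(max_name_len: int = 100) -> dict[str, list[str]]:
    """Edge-case inputs for the profile form; max_name_len is an assumed limit."""
    return {
        "name": [
            "",                            # empty required field
            "   ",                         # whitespace only
            "x" * max_name_len,            # exactly at the length limit
            "x" * (max_name_len + 1),      # one character over the limit
            "O'Brien-Smith",               # apostrophe and hyphen
            "José Müller",                 # accented Latin characters
            "山田太郎",                     # CJK characters
            "<script>alert(1)</script>",   # markup that must be escaped when displayed
        ],
        "email": [
            "invalid-email",               # no @ sign
            "user@",                       # missing domain
            "@example.com",                # missing local part
            "user+tag@example.com",        # plus addressing (valid)
            "first.last@example.co.uk",    # multi-label domain (valid)
        ],
    }


if __name__ == "__main__":
    # Typical use: feed each value into a parametrized UI test.
    for field, values in field_variations().items():
        for value in values:
            print(field, repr(value))
```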

Example LLM Output (conceptual for one scenario):

**Scenario:** Update profile with invalid email format.

**Preconditions:**

1. User is logged in.

2. User is on the Profile Settings page.

**Steps:**

1. Locate the 'Email' input field.

2. Enter an invalid email address (e.g., "invalid-email").

3. Enter valid values for other fields (e.g., "John Doe" for Name).

4. Click the "Save Profile" button.

**Expected Outcome:**

1. An error message indicating "Invalid email format" is displayed next to the email field.

2. The profile information is NOT updated.

3. The user remains on the Profile Settings page.
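Markdown output like this is easy to read but hard to check automatically. One option, assuming you prompt the model to answer in JSON with the same four sections, is to validate the structure before a scenario reaches Selenium or Playwright. The key names and the `Scenario` type below are hypothetical choices, and a check like this catches malformed output only; it cannot tell whether a well-formed scenario is actually correct.

```python
import json
from dataclasses import dataclass

LIST_FIELDS = ("preconditions", "steps", "expected_outcome")
REQUIRED = {"scenario", *LIST_FIELDS}


@dataclass
class Scenario:
    scenario: str
    preconditions: list[str]
    steps: list[str]
    expected_outcome: list[str]


def parse_scenario(raw: str) -> Scenario:
    """Parse one LLM-generated scenario; raise ValueError if its structure is wrong."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing, extra = REQUIRED - set(data), set(data) - REQUIRED
    if missing or extra:
        raise ValueError(f"missing keys {sorted(missing)}, unexpected keys {sorted(extra)}")
    if not isinstance(data["scenario"], str) or not data["scenario"].strip():
        raise ValueError("'scenario' must be a non-empty string")
    for key in LIST_FIELDS:
        items = data[key]
        if not (isinstance(items, list) and items
                and all(isinstance(s, str) and s.strip() for s in items)):
            raise ValueError(f"'{key}' must be a non-empty list of non-empty strings")
    return Scenario(**data)


EXAMPLE = json.dumps({
    "scenario": "Update profile with invalid email format.",
    "preconditions": ["User is logged in.", "User is on the Profile Settings page."],
    "steps": ["Enter an invalid email address (e.g., \"invalid-email\").",
              "Click the \"Save Profile\" button."],
    "expected_outcome": ["An error message indicating \"Invalid email format\" is displayed."],
})

if __name__ == "__main__":
    print(parse_scenario(EXAMPLE).steps)
```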


Value: Automates scenario definition, often the most time-consuming and creative part of UI testing, while keeping scenarios traceable to the requirements they came from and providing a solid foundation for UI automation frameworks like Selenium or Playwright.

4. Performance Test Data Generation

Scenario: You need to simulate 1 million unique users logging in and performing various actions on an e-commerce website for a load test.

Generative AI Approach: An LLM or a specialized data generator (potentially powered by GANs for more complex distributions) can:

- Generate unique user profiles: Create millions of distinct usernames, passwords, email addresses, shipping addresses, and payment details.

- Synthesize transaction histories: Generate realistic sequences of product views, cart additions, and purchases for each user, mimicking typical user behavior patterns.

- Populate product catalogs: Create large, diverse product datasets with varying attributes (price, category, description, stock levels) to simulate a dynamic inventory.
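The transaction-history idea can be illustrated without any neural model. The sketch below swaps in a first-order Markov chain, a common lightweight way to generate plausible click-streams for load tests. The action names and transition probabilities are invented; in practice you would estimate them from anonymized production analytics.

```python
import random

# Invented transition probabilities: from each action, the chance of the next one.
TRANSITIONS = {
    "login":        {"view_product": 0.8, "logout": 0.2},
    "view_product": {"view_product": 0.5, "add_to_cart": 0.3, "logout": 0.2},
    "add_to_cart":  {"view_product": 0.4, "checkout": 0.4, "logout": 0.2},
    "checkout":     {"logout": 1.0},
}


def generate_session(rng: random.Random, max_steps: int = 50) -> list[str]:
    """Sample one session, starting at 'login' and stopping at 'logout' or max_steps."""
    session = ["login"]
    while session[-1] != "logout" and len(session) < max_steps:
        options = TRANSITIONS[session[-1]]
        next_action = rng.choices(list(options), weights=list(options.values()))[0]
        session.append(next_action)
    return session


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so a load-test run can be reproduced
    for _ in range(3):
        print(" -> ".join(generate_session(rng)))
```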

Example: Generating a CSV of 100,000 unique user accounts.

```csv
username,password,email,first_name,last_name,address,city,zipcode
user_1,P@ssw0rd1,[email protected],John,Doe,123 Main St,Anytown,12345
user_2,S3cur3P@ss,[email protected],Jane,Smith,456 Oak Ave,Otherville,67890
...
```
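A file like this does not strictly need an LLM; a seeded, rule-based generator can be the more predictable choice for load-test accounts, with generative models reserved for fields where realism matters. The sketch below guarantees uniqueness by numbering accounts, uses the reserved `example.com` domain for emails, and draws names, streets, and cities from small hypothetical pools. `random.Random` is not cryptographically secure, which is acceptable for throwaway test credentials but not for real ones.

```python
import csv
import io
import random
import string

FIRST_NAMES = ["John", "Jane", "Aiko", "Carlos", "Priya", "Liam"]  # hypothetical pools
LAST_NAMES = ["Doe", "Smith", "Tanaka", "Garcia", "Patel", "Murphy"]
STREETS = ["Main St", "Oak Ave", "Elm Rd"]
CITIES = ["Anytown", "Otherville", "Springfield"]
HEADER = ["username", "password", "email", "first_name", "last_name",
          "address", "city", "zipcode"]


def make_password(rng: random.Random, length: int = 12) -> str:
    """Test-only password with at least one lowercase, uppercase, digit and symbol."""
    pools = [string.ascii_lowercase, string.ascii_uppercase, string.digits, "!@#$%^&*"]
    chars = [rng.choice(pool) for pool in pools]  # one from each character class
    chars += rng.choices("".join(pools), k=length - len(chars))
    rng.shuffle(chars)
    return "".join(chars)


def write_users(out, n: int, seed: int = 0) -> None:
    """Stream n unique synthetic accounts as CSV rows to a text file-like object."""
    rng = random.Random(seed)  # same seed -> same data, so load tests are repeatable
    writer = csv.writer(out)
    writer.writerow(HEADER)
    for i in range(1, n + 1):
        writer.writerow([
            f"user_{i}",              # unique by construction
            make_password(rng),
            f"user_{i}@example.com",  # reserved documentation/test domain
            rng.choice(FIRST_NAMES),
            rng.choice(LAST_NAMES),
            f"{rng.randint(1, 9999)} {rng.choice(STREETS)}",
            rng.choice(CITIES),
            f"{rng.randint(0, 99999):05d}",
        ])


def users_csv(n: int, seed: int = 0) -> str:
    """Convenience wrapper returning the CSV as a string."""
    buf = io.StringIO()
    write_users(buf, n, seed)
    return buf.getvalue()


if __name__ == "__main__":
    # For the full file: with open("users.csv", "w", newline="") as f: write_users(f, 100_000)
    print(users_csv(3))
```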


Value: Ensures performance tests accurately reflect production environments with realistic user loads and diverse data, without compromising real user data or requiring extensive manual data creation.

5. Security Test Case Generation (Fuzzing Augmentation)

Scenario: You want to find vulnerabilities in a web application's input fields beyond basic validation.

Generative AI Approach: Generative models can be used to create "intelligent" fuzzing inputs that go beyond purely random permutations:

- Contextual Fuzzing: Given the expected input format (e.g., a JSON payload for an API), an LLM can generate variations that are syntactically valid but semantically malicious (e.g., SQL injection attempts, XSS payloads, path traversal attempts embedded within valid JSON structures).

- Vulnerability-Aware Generation: Fine-tune an LLM on historical exploit data or common vulnerability patterns (e.g., OWASP Top 10). The model can then generate inputs specifically designed to trigger these known types of vulnerabilities.

- Protocol-Specific Fuzzing: For network protocols, generative models can create malformed packets or sequences of messages that violate protocol specifications in subtle ways, potentially leading to crashes or unexpected behavior.
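The contextual-fuzzing idea can be sketched without a model: keep a request's JSON shape intact and swap hostile strings into one field at a time. Here the payload list is hand-written (an LLM, as described above, could supply longer or more targeted lists), and the function names are hypothetical.

```python
import json

# A few probe strings: two of the SQL injection examples from this section,
# plus XSS and path-traversal probes.
PAYLOADS = ["' OR '1'='1 --", "admin' --", "<script>alert(1)</script>", "../../etc/passwd"]


def string_leaf_paths(node, path=()):
    """Yield the key/index path to every string value in a parsed JSON document."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from string_leaf_paths(value, path + (key,))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from string_leaf_paths(value, path + (index,))
    elif isinstance(node, str):
        yield path


def contextual_mutations(template: dict, payloads=PAYLOADS):
    """Yield (path, body) pairs: one string field replaced by one payload at a time.

    Every body is still well-formed JSON with the original structure; only the
    content of a single field is hostile. `template` must be a JSON object.
    """
    for path in string_leaf_paths(template):
        for payload in payloads:
            body = json.loads(json.dumps(template))  # cheap deep copy
            parent = body
            for step in path[:-1]:
                parent = parent[step]
            parent[path[-1]] = payload
            yield path, json.dumps(body)


if __name__ == "__main__":
    login = {"username": "alice", "password": "s3cret", "meta": {"tags": ["web", 1]}}
    for path, body in contextual_mutations(login):
        print(path, body)
```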

Example Prompt:

"Generate 5 SQL injection payloads suitable for a 'username' input field in a login form."

LLM Output (conceptual):

1. ' OR '1'='1 --

2. admin' --

3. ' UNION SELECT null, null, null --

4. ' OR 1=1 LIMIT 1 --

5. ' OR 1=1 #


Value: Enhances the effectiveness of security testing by generating more sophisticated and targeted attack vectors, potentially uncovering vulnerabilities that traditional random fuzzing might miss.

6. Code-Based Test Generation

Scenario: A developer writes a new Python function and needs to quickly generate unit tests to ensure its correctness.

Generative AI Approach: Given a piece of source code (e.g., a Python function, a Java class), an LLM can analyze its logic, identify control flows, parameters, and return types, and then generate unit tests:

- Input Combinations: Generate various input combinations, including typical values, edge cases (e.g., zero, negative numbers, empty strings, nulls), and boundary conditions.

- Assertions: Suggest appropriate assertions based on the function's expected behavior.

- Mocking/Stubbing: For functions with external dependencies, the LLM can suggest how to mock those dependencies.
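The mocking idea is easiest to see on a function that actually has a dependency. `calculate_discount` in the example that follows has none, so this sketch introduces a hypothetical `PricingClient` and `price_for_customer` and shows the kind of test an LLM might be asked to produce. It uses the standard library's `unittest.mock.create_autospec`, so the fake client accepts only the methods and arguments the real class defines.

```python
from unittest import mock


class PricingClient:
    """Hypothetical external dependency (e.g. an HTTP pricing service)."""

    def get_discount_percentage(self, customer_id: str) -> float:
        raise RuntimeError("real network call; not available in unit tests")


def price_for_customer(price: float, customer_id: str, client: PricingClient) -> float:
    """Hypothetical function under test: look up a discount, then apply it."""
    pct = client.get_discount_percentage(customer_id)
    return price - price * pct / 100


def test_price_for_customer_uses_mocked_client():
    # create_autospec builds a mock that mirrors PricingClient's methods and
    # signatures, so a misspelled method or wrong arguments fail the test.
    client = mock.create_autospec(PricingClient, instance=True)
    client.get_discount_percentage.return_value = 25
    assert price_for_customer(200, "c-42", client) == 150
    client.get_discount_percentage.assert_called_once_with("c-42")
```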

Example Python Function:

```python
def calculate_discount(price, discount_percentage):
    if not isinstance(price, (int, float)) or price < 0:
        raise ValueError("Price must be a non-negative number.")
    if not isinstance(discount_percentage, (int, float)) or not (0 <= discount_percentage <= 100):
        raise ValueError("Discount percentage must be between 0 and 100.")
    discount_amount = price * (discount_percentage / 100)
    return price - discount_amount
```


LLM Output (conceptual Pytest tests):

```python
import pytest

from your_module import calculate_discount  # replace with the module that defines it


def test_calculate_discount_valid_inputs():
    # Float results are compared with a tolerance rather than exact equality.
    assert calculate_discount(100, 10) == pytest.approx(90.0)
    assert calculate_discount(50, 0) == pytest.approx(50.0)
    assert calculate_discount(200, 100) == pytest.approx(0.0)
    assert calculate_discount(75.50, 20) == pytest.approx(60.40)


def test_calculate_discount_zero_price():
    assert calculate_discount(0, 50) == pytest.approx(0.0)


def test_calculate_discount_invalid_price_type():
    with pytest.raises(ValueError, match="Price must be a non-negative number."):
        calculate_discount("abc", 10)


def test_calculate_discount_negative_price():
    with pytest.raises(ValueError, match="Price must be a non-negative number."):
        calculate_discount(-10, 10)


def test_calculate_discount_invalid_discount_type():
    with pytest.raises(ValueError, match="Discount percentage must be between 0 and 100."):
        calculate_discount(100, "xyz")


def test_calculate_discount_discount_too_low():
    with pytest.raises(ValueError, match="Discount percentage must be between 0 and 100."):
        calculate_discount(100, -5)


def test_calculate_discount_discount_too_high():
    with pytest.raises(ValueError, match="Discount percentage must be between 0 and 100."):
        calculate_discount(100, 110)
```


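Value: Significantly boosts unit test coverage and helps developers quickly create robust tests for new or refactored code. Because these tests are derived from the existing implementation, they complement test-driven development (where tests are written first) rather than replace it, and they need review: a test generated from buggy code can encode the bug as expected behavior.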

Challenges and Future Directions

While the promise is immense, the path to full adoption of Generative AI in QA is not without its hurdles:

- Hallucinations and Accuracy: Generative models can "hallucinate," producing plausible but incorrect or invalid test data and test cases. Ensuring the generated artifacts are accurate, valid, and truly reflect the system under test (SUT) requires robust validation mechanisms and human oversight. This is a critical area for ongoing research, focusing on grounding models in factual and domain-specific knowledge.

- Contextual Understanding: While LLMs excel at general context, deep domain-specific knowledge, understanding of complex business rules, and intricate system architecture details can still be a limitation. Fine-tuning models with extensive domain-specific data, including internal documentation, codebases, and historical test results, is crucial for achieving high fidelity.

- Integration with Existing Toolchains: For practical adoption, seamless integration with existing QA tools, test management systems (e.g., Jira, TestRail), and CI/CD pipelines (e.g., Jenkins, GitLab CI) is essential. This requires developing robust APIs and connectors, and potentially standardizing output formats.

- Explainability and Trust: Understanding why a generative model produced a particular test case or data set can be challenging. Lack of explainability can hinder trust, debugging, and the ability to refine the generation process. Future research will focus on making these models more transparent and interpretable.

- Ethical Considerations: Generative AI models are trained on vast datasets, which may contain biases. It's crucial to ensure that synthetic data doesn't inadvertently perpetuate or amplify these biases, leading to unfair or discriminatory testing outcomes. Privacy must also be genuinely maintained, ensuring synthetic data cannot be reverse-engineered to reveal real individuals.

- Feedback Loops and Reinforcement Learning: The most exciting future direction involves sophisticated feedback loops. Imagine a system where the results of test execution (pass/fail, coverage metrics, performance data) are fed back into the generative model. This reinforcement learning approach would allow the model to continuously refine its test generation strategies, learning what kinds of tests are most effective at finding bugs, leading to increasingly intelligent and autonomous test automation.
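Full reinforcement learning over a test generator is still research territory, but the core feedback idea can be shown with its simplest relative, an epsilon-greedy multi-armed bandit. Each round it picks a generation strategy, observes whether the resulting tests found a failure, and shifts future picks toward what has worked. The strategy names, the 0/1 reward definition, and the simulated failure-detection rates in the usage example are assumptions for illustration only.

```python
import random


def epsilon_greedy(strategies, run_batch, rounds=500, epsilon=0.1, seed=0):
    """Learn which test-generation strategy tends to find failures.

    strategies: hypothetical strategy names, e.g. "boundary" or "random".
    run_batch:  callable(strategy) -> reward; assumed here to be 1 if the
                generated batch exposed a new failure, else 0.
    Returns per-strategy pull counts and running mean rewards.
    """
    rng = random.Random(seed)
    counts = {s: 0 for s in strategies}
    means = {s: 0.0 for s in strategies}
    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(strategies)          # explore
        else:
            choice = max(strategies, key=means.get)  # exploit the best estimate
        reward = run_batch(choice)
        counts[choice] += 1
        means[choice] += (reward - means[choice]) / counts[choice]  # incremental mean
    return counts, means


if __name__ == "__main__":
    # Simulated environment with made-up failure-detection rates per strategy.
    rates = {"happy_path": 0.02, "random": 0.1, "boundary": 0.6}
    sim = random.Random(1)
    counts, means = epsilon_greedy(list(rates), lambda s: int(sim.random() < rates[s]))
    print(counts)
```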

Conclusion

Generative AI for Test Data Synthesis and Test Case Generation is not merely an academic curiosity; it's a rapidly maturing field poised to fundamentally revolutionize how software is tested. For AI practitioners, it offers a fertile ground to apply cutting-edge AI techniques to solve real-world engineering problems, leading to faster development cycles, higher software quality, and more secure applications.

By automating the creation of diverse, realistic test data and comprehensive test cases, generative AI addresses critical pain points in software development – from data scarcity and privacy concerns to the bottlenecks of manual test creation. Its direct impact on efficiency, quality, and data privacy makes it an incredibly valuable and timely area for exploration, innovation, and strategic implementation within any forward-thinking organization. The future of QA is intelligent, automated, and generative. Are you ready to build it?