The Cost Problem: Making Large Language Models Accessible and Efficient

Large Language Models (LLMs) offer incredible power but demand vast computational resources, creating a 'cost problem' that hinders widespread, cost-effective, and privacy-preserving deployment. This post explores the challenges and implications of this bottleneck.

The era of Large Language Models (LLMs) has ushered in unprecedented capabilities, transforming how we interact with technology, generate content, and process information. From sophisticated chatbots to intelligent code assistants, LLMs like GPT-4, LLaMA, and Mistral have demonstrated remarkable fluency and understanding. However, this power comes at a significant cost: immense computational resources. These models are often colossal, boasting billions or even trillions of parameters, demanding powerful GPUs, vast memory, and substantial energy consumption. This "cost problem" creates a significant bottleneck, limiting their widespread, cost-effective, and privacy-preserving deployment, especially in scenarios where cloud-based solutions are not feasible or desirable.

Imagine wanting to run a personalized AI assistant directly on your smartphone, translate speech in real-time on a wearable device, or enable an autonomous vehicle to process natural language commands without an internet connection. These "on-device" and "edge" computing scenarios are where the sheer size of current LLMs becomes a critical barrier. Demand for LLMs that operate closer to the data source or end-user is growing quickly, driven by needs for lower latency, enhanced privacy, reduced operational costs, and improved sustainability.

Fortunately, this challenge has spurred a vibrant and rapidly evolving field of research and development focused on making LLMs smaller, faster, and more efficient. This blog post will delve into the core techniques that are democratizing LLM access and innovation: quantization, pruning, and knowledge distillation. These methods, often used in conjunction, are crucial for unlocking the full potential of LLMs beyond hyperscale data centers, enabling their deployment on consumer hardware, embedded systems, and local edge infrastructure.

The Imperative for Efficient LLMs: Why Size Matters

Before diving into the "how," let's solidify the "why." The motivations for optimizing LLMs are multifaceted and critical for their future:

- Cost Reduction: Running large LLMs incurs significant inference costs due to GPU hours, energy consumption, and data transfer. Efficiency techniques directly translate to lower operational expenses.

- Latency Improvement: Cloud round-trips introduce latency. On-device or edge processing drastically reduces response times, enabling real-time applications like voice assistants or autonomous decision-making.

- Enhanced Privacy and Security: Processing sensitive data locally, rather than sending it to third-party cloud providers, is paramount for many enterprises and individual users.

- Offline Capability: Many applications require functionality without a constant internet connection, such as in remote areas or for specific industrial use cases.

- Sustainability: The energy footprint of training and running massive LLMs is substantial. More efficient models contribute to greener AI practices.

- Democratization: Making powerful AI accessible to a broader range of developers and organizations, irrespective of their cloud budget, fosters innovation and reduces reliance on a few dominant players.

These compelling reasons underscore why techniques like quantization, pruning, and knowledge distillation are not merely optimizations but fundamental enablers for the next generation of AI applications.

Quantization: Shrinking the Numerical Footprint

At its core, quantization is about reducing the precision of the numerical representations within an LLM. Deep learning models have traditionally been trained with 32-bit floating-point numbers (FP32) for their weights and activations, and many LLMs are now distributed in 16-bit formats (FP16 or BF16). Quantization converts these to lower-bit representations, such as 8-bit integers (INT8), or even 4-bit (INT4) or 2-bit (INT2) integers.

The Concept

Imagine a number like 3.1415926535. In FP32, it takes up 32 bits of memory. If we quantize it to an 8-bit integer, we store only a small integer (one of 256 possible levels) together with a scale factor that is shared by many values, and we recover an approximation of the original by multiplying the two. While this loses some information, the goal is to do so strategically, minimizing the impact on the model's overall accuracy while significantly reducing its memory footprint and computational requirements.
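To make the idea concrete, here is a minimal sketch of symmetric, per-tensor INT8 quantization in plain Python. The function names (quantize_int8, dequantize) and the sample values are illustrative assumptions, not part of any library; real toolkits add per-channel scales, zero-points, and packed storage.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale factor is shared by every value in the tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [qi * scale for qi in q]


weights = [3.1415926535, -1.5, 0.02, 6.0]   # hypothetical weight values
q, scale = quantize_int8(weights)            # q == [66, -32, 0, 127]
restored = dequantize(q, scale)

for original, approx in zip(weights, restored):
    print(f"{original:>12.6f} -> {approx:>10.6f}")
```

Each restored value differs from the original by at most half a quantization step (scale / 2), which is the information loss the paragraph above refers to.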

Benefits of Quantization:

- Reduced Memory Usage: Lower precision numbers require less storage, allowing larger models to fit into memory-constrained devices.

- Faster Computation: On hardware with native low-precision support, integer operations can run considerably faster than floating-point ones. This translates to faster inference and lower energy consumption.

- Lower Bandwidth: Moving smaller numbers around the memory hierarchy is quicker, reducing data transfer bottlenecks.

Types of Quantization

- Post-Training Quantization (PTQ):

- Concept: This is the simplest approach, applying quantization to an already trained FP32 model without any further training.

- Sub-types:

- Dynamic Quantization: Quantizes weights to INT8 ahead of time (for example, at model load) and quantizes activations to INT8 on the fly during inference. This is easy to implement, but computing activation ranges at runtime adds overhead.

- Static Quantization: Requires a small, representative calibration dataset to determine the optimal scaling factors for weights and activations before inference. This pre-computes the quantization parameters, leading to faster inference than dynamic quantization, but requires careful calibration.

- Pros: Easy to implement, no retraining required, fast.

- Cons: Can lead to accuracy degradation, especially for very low bit-widths (e.g., INT4).

- Example: Using torch.quantization.quantize_dynamic in PyTorch for simple weight quantization.

- Quantization-Aware Training (QAT):

- Concept: Simulates the effects of quantization during the training process itself. The model "learns" to be robust to the loss of precision by incorporating quantization noise into the forward and backward passes.

- Pros: Generally yields significantly better accuracy than PTQ, especially at lower bit-widths, as the model adapts to the quantized environment.

- Cons: More complex to implement, requires access to the training pipeline and data, and adds overhead to the training process.

- Example: Inserting PyTorch's QuantStub and DeQuantStub modules at the boundaries of the quantized region and fine-tuning the model with quantization enabled.
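The calibration step that static quantization relies on can be sketched as follows. This is a simplified illustration in plain Python, assuming an asymmetric UINT8 scheme with a single scale and zero-point derived from the minimum and maximum of the calibration samples; the function names and sample values are hypothetical, and production toolkits use more robust range estimators.

```python
def calibrate(samples):
    """Derive an affine (scale, zero_point) pair for UINT8 from calibration data."""
    # The range is widened to include 0.0 so that zero is exactly representable.
    lo = min(min(samples), 0.0)
    hi = max(max(samples), 0.0)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Map a float to an integer in [0, 255], clamping out-of-range values."""
    return max(0, min(255, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """Map a stored integer back to an approximate float."""
    return (q - zero_point) * scale


# Hypothetical activations observed while running the calibration dataset.
calibration_activations = [-1.0, 0.0, 0.5, 3.0]
scale, zero_point = calibrate(calibration_activations)

# At inference time the precomputed parameters are reused as-is.
for x in [0.5, 2.0, 10.0]:
    q = quantize(x, scale, zero_point)
    print(x, "->", q, "->", round(dequantize(q, scale, zero_point), 4))
```

Dynamic quantization would instead recompute the activation range for each input at inference time, and QAT would apply the same quantize-then-dequantize round trip inside the training forward pass so the model can adapt to the rounding error. Note how the value 10.0, which lies outside the calibrated range, is clamped: this is why a representative calibration set matters.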

Challenges and Recent Developments

- Accuracy Preservation: The primary challenge is to maintain the model's performance. LLMs are particularly sensitive to quantization due to their depth and the complex interactions of their parameters.

- Hardware Support: Effective quantization often relies on hardware accelerators (e.g., TPUs, specific GPUs, NPUs) that are optimized for integer arithmetic.

- Mixed-Precision Quantization: Using different bit-widths for different layers or even different parts of a layer, based on their sensitivity to quantization.

- Grouped Quantization (e.g., GPTQ, AWQ): These are advanced PTQ methods specifically designed for LLMs. They quantize weights in small groups (e.g., 128 weights per group) and apply specific calibration techniques to minimize quantization error, often achieving INT4 or INT3 quantization with minimal accuracy loss.

- GPTQ: A post-training quantization method that quantizes weights layer-by-layer, using approximate second-order information to minimize the output error for each layer. It's highly effective for LLMs.

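- AWQ (Activation-aware Weight Quantization): Uses activation statistics, rather than weight magnitudes alone, to identify the small fraction of weight channels that are critical for performance, and protects them with per-channel scaling while the weights are quantized aggressively. This helps preserve accuracy in highly sensitive models.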

- SmoothQuant: A technique that addresses the issue of outlier activations by moving their magnitude to weights, making both activations and weights more amenable to INT8 quantization.
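The benefit of quantizing weights in small groups can be seen in a toy experiment. The sketch below uses plain round-to-nearest 4-bit quantization, not GPTQ or AWQ themselves, and the weight values and group size of 4 are made-up assumptions chosen to keep the example readable. It only illustrates why one scale per group limits the damage done by an outlier.

```python
def quantize_dequantize(values, bits=4):
    """Symmetric round-to-nearest quantization followed by dequantization."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def groupwise_quantize_dequantize(values, group_size, bits=4):
    """Apply the same scheme independently to consecutive groups of weights."""
    out = []
    for start in range(0, len(values), group_size):
        out.extend(quantize_dequantize(values[start:start + group_size], bits))
    return out


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Toy weight row: many small values plus a single large outlier at the end.
weights = [0.01 * i for i in range(-8, 8)] + [0.02, -0.03, 0.05, 4.0]

per_tensor_err = mean_abs_error(weights, quantize_dequantize(weights))
per_group_err = mean_abs_error(
    weights, groupwise_quantize_dequantize(weights, group_size=4)
)
print(f"one scale for the whole row: {per_tensor_err:.4f}")
print(f"one scale per group of 4:    {per_group_err:.4f}")
```

With a single scale, the outlier stretches the quantization grid so far that every small weight rounds to zero. With per-group scales, only the outlier's own group suffers. The price is the extra storage for one scale per group.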

Practical Example: Imagine a LLaMA-7B model, originally FP16 (14GB). Quantizing it to INT4 using GPTQ can reduce its size to ~3.5GB, making it runnable on a consumer GPU with 8GB VRAM or even high-end smartphones.
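The arithmetic behind these figures is simple enough to check by hand. The helper below is a back-of-the-envelope sketch: it counts only weight storage, takes 1 GB as 10^9 bytes, and assumes (for the grouped case) one 16-bit scale per group of 128 weights. Activations, the KV cache, and other metadata are ignored.

```python
def weight_memory_gb(num_params, bits_per_weight, group_size=None, scale_bits=16):
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    total_bits = num_params * bits_per_weight
    if group_size is not None:
        # One scale per group of weights; zero-points and metadata are ignored.
        total_bits += (num_params / group_size) * scale_bits
    return total_bits / 8 / 1e9


params = 7e9  # a 7-billion-parameter model
print(f"FP16:              {weight_memory_gb(params, 16):.2f} GB")
print(f"INT4:              {weight_memory_gb(params, 4):.2f} GB")
print(f"INT4, groups of 128: {weight_memory_gb(params, 4, group_size=128):.2f} GB")
```

Under these assumptions the model drops from 14 GB to 3.5 GB, or about 3.6 GB once per-group scales are included.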

Pruning: Trimming the Excess Fat

Pruning is a technique inspired by neuroscience, where the brain prunes unused or weak neural connections. In LLMs, pruning involves removing redundant or less important connections (weights), neurons, or even entire layers from the network. The goal is to create a "sparse" model that is smaller and faster, without significantly compromising performance.

The Concept

Many large neural networks are over-parameterized, meaning they have more parameters than strictly necessary to learn the task. Pruning identifies and eliminates these superfluous components, leaving behind a "thinner" network that can achieve similar performance.

Benefits of Pruning:

- Reduced Model Size: Directly shrinks the number of parameters, saving storage and memory.

- Faster Inference: Fewer computations are needed if pruned connections are truly removed, leading to faster execution.

- Reduced Energy Consumption: Fewer operations mean less power usage.

Types of Pruning

- Unstructured Pruning:

- Concept: Removes individual weights based on a criterion (e.g., magnitude – removing weights closest to zero). This results in a highly sparse model where the remaining weights are scattered irregularly.

- Challenges: While effective at reducing parameter count, unstructured sparsity is often difficult to accelerate on standard hardware, which is optimized for dense matrix operations. Specialized hardware or sparse matrix libraries are needed to realize speedups.

- Structured Pruning:

- Concept: Removes entire groups of weights, such as channels, filters, or even entire layers. This results in a "thinner" but still dense network, making it more hardware-friendly and easier to accelerate on standard platforms.

- Examples: Removing entire attention heads in a Transformer, or specific feed-forward network neurons.

- Pros: Leads to more regular and hardware-accelerator-friendly models.

- Cons: Can be more challenging to achieve high sparsity levels without accuracy drops compared to unstructured pruning, as removing entire structures has a larger impact.
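The difference between the two families is easiest to see on a small weight matrix. The following sketch uses nested Python lists and two illustrative helpers (magnitude_prune and prune_rows are hypothetical names): the first zeroes individual small weights, the second drops whole rows, which stand in for neurons or attention heads.

```python
def magnitude_prune(matrix, sparsity):
    """Unstructured: zero the smallest-magnitude entries, keeping the shape."""
    flat = sorted(abs(w) for row in matrix for w in row)
    k = int(len(flat) * sparsity)            # number of weights to zero
    if k == 0:
        return [row[:] for row in matrix]
    threshold = flat[k - 1]                  # ties at the threshold are also zeroed
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in matrix]


def prune_rows(matrix, keep):
    """Structured: drop whole rows with the smallest L2 norm."""
    norms = [sum(w * w for w in row) ** 0.5 for row in matrix]
    ranked = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)
    keep_idx = sorted(ranked[:keep])         # preserve the original row order
    return [matrix[i][:] for i in keep_idx]


W = [
    [0.90, -0.10, 0.05],
    [0.02, 0.01, -0.03],
    [-0.70, 0.60, 0.20],
    [0.04, -0.80, 0.30],
]

sparse_W = magnitude_prune(W, sparsity=0.5)  # still 4x3, half the entries are zero
small_W = prune_rows(W, keep=3)              # dense 3x3, the weakest row is gone

print(sparse_W)
print(small_W)
```

The unstructured result keeps its original shape, so a dense matrix multiply does the same amount of work unless a sparse kernel is used. The structured result is simply a smaller dense matrix, which is why it speeds up on standard hardware without special support.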

Pruning Process

A typical pruning pipeline involves:

- Train a dense model: Train the full, unpruned model to convergence.

- Identify redundant components: Use a criterion (e.g., magnitude, sensitivity analysis, saliency scores) to determine which weights/neurons to remove.

- Prune: Set the identified weights to zero (unstructured pruning) or remove the identified structures from the network (structured pruning).

- Fine-tune (optional but recommended): Retrain the pruned model for a few epochs to recover any lost accuracy. This is often called "pruning and fine-tuning."
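The four steps above can be walked through on a toy problem. The sketch below is an assumption-laden miniature, not an LLM recipe: the "model" is a 4-weight linear regressor trained with full-batch gradient descent, the dataset is six hand-written rows, and the pruning criterion is plain weight magnitude. All names and values are made up for illustration.

```python
def mse(weights, xs, ys):
    preds = [sum(w * x for w, x in zip(weights, row)) for row in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def train(weights, xs, ys, mask, steps=500, lr=0.1):
    """Full-batch gradient descent; masked-out weights are held at zero."""
    w = [wi * m for wi, m in zip(weights, mask)]
    n = len(ys)
    for _ in range(steps):
        errs = [sum(wi * xi for wi, xi in zip(w, row)) - y for row, y in zip(xs, ys)]
        grads = [2 / n * sum(e * row[j] for e, row in zip(errs, xs))
                 for j in range(len(w))]
        w = [(wi - lr * g) * m for wi, g, m in zip(w, grads, mask)]
    return w


def magnitude_mask(weights, sparsity):
    """1 for weights to keep, 0 for the smallest-magnitude fraction."""
    k = int(len(weights) * sparsity)
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0 if i in drop else 1 for i in range(len(weights))]


xs = [[1, 0, 1, 0], [0, 1, 0, 1], [1, 1, 0, 0],
      [0, 0, 1, 1], [1, 0, 0, 1], [0, 1, 1, 0]]
true_w = [2.0, 0.3, -3.0, 0.1]
ys = [sum(w * x for w, x in zip(true_w, row)) for row in xs]

dense = train([0.0] * 4, xs, ys, mask=[1] * 4)     # 1. train a dense model
mask = magnitude_mask(dense, sparsity=0.5)          # 2. identify small weights
pruned = [w * m for w, m in zip(dense, mask)]       # 3. prune
finetuned = train(pruned, xs, ys, mask=mask)        # 4. fine-tune with the mask

print("loss after pruning:    ", round(mse(pruned, xs, ys), 4))
print("loss after fine-tuning:", round(mse(finetuned, xs, ys), 4))
```

Pruning raises the loss because the two removed weights were small but not useless. Fine-tuning lets the surviving weights shift to compensate, which recovers part of the loss while the pruned positions stay at zero.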

Challenges and Recent Developments

- The Lottery Ticket Hypothesis: This influential hypothesis suggests that dense, randomly initialized neural networks contain "subnetworks" (winning tickets) that, when trained in isolation from their original initialization, can achieve comparable accuracy to the original network. This implies that pruning isn't just about removing "dead weight" but finding a highly effective sub-network.

- Dynamic Pruning: Instead of a one-shot prune, dynamic pruning methods adjust the network's sparsity during training, either by gradually increasing sparsity or by allowing connections to grow back.

- Pruning for LLMs: Pruning LLMs is particularly challenging due to their scale and the intricate dependencies between layers. Recent work focuses on structured pruning of attention heads or specific feed-forward network components, often combined with knowledge distillation.

- Hardware-aware Pruning: Designing pruning strategies that align with the capabilities of target hardware (e.g., creating block-sparse models that can be efficiently processed by specialized accelerators).
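For the gradual variant of dynamic pruning mentioned above, the target sparsity is usually driven by a schedule. The sketch below uses a cubic ramp, which is one commonly used choice and an assumption here rather than something the methods above prescribe; the function name and default values are illustrative.

```python
def sparsity_at(step, start_step, end_step, initial=0.0, final=0.9):
    """Target sparsity at a training step, ramping from `initial` to `final`.

    The cubic shape prunes quickly at first, while the network still has
    plenty of redundancy, and slows down as it approaches the final sparsity.
    """
    if step <= start_step:
        return initial
    if step >= end_step:
        return final
    progress = (step - start_step) / (end_step - start_step)
    return final + (initial - final) * (1 - progress) ** 3


for step in [0, 25, 50, 75, 100]:
    print(step, round(sparsity_at(step, start_step=0, end_step=100), 4))
```

At each pruning step, the training loop would recompute the mask so that the fraction of zeroed weights matches the scheduled value, then continue training.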

Practical Example: A study might prune 50% of the weights in a BERT-like model, reducing its size significantly. While this might initially cause a slight accuracy drop, a subsequent fine-tuning phase can often recover most, if not all, of the original performance, resulting in a much lighter model.

Knowledge Distillation: Learning from the Master

Knowledge Distillation (KD) is a powerful technique where a smaller, more efficient "student" model is trained to mimic the behavior of a larger, more complex "teacher" model. Instead of learning directly from hard labels (e.g., "this is a cat"), the student learns from the teacher's "soft targets" – the probability distributions over all possible outputs.

The Concept

Imagine a seasoned expert (teacher) explaining a complex topic to a novice (student). The expert doesn't just give the final answer but provides nuanced explanations, highlighting probabilities and relationships. The student learns not just the correct answer but why it's correct and how it relates to other possibilities. Similarly, a teacher LLM's output probabilities often contain valuable information about the relative likelihood of incorrect answers, which helps the student learn a richer representation of the data.

Benefits of Knowledge Distillation:

- Smaller, Faster Models: The primary goal is to train a student model that is significantly smaller and faster than the teacher, yet retains much of its performance.

- Improved Generalization: The "soft targets" from the teacher can act as a form of regularization, helping the student generalize better than if it were trained solely on hard labels.

- Task-Specific Specialization: A large, general-purpose LLM can be distilled into a smaller model optimized for a specific task (e.g., sentiment analysis, summarization), making it highly efficient for that niche.

How it Works

- Train a Teacher Model: A large, high-performing LLM is trained on a vast dataset.

- Define a Student Model: A smaller, simpler architecture is chosen for the student (e.g., fewer layers, smaller hidden dimensions).

- Distillation Training: The student model is trained on a dataset, but its loss function includes two components:

- Soft Target Loss: Measures the difference between the student's output probabilities and the teacher's output probabilities (often using Kullback-Leibler divergence).

- Hard Target Loss (optional but common): Measures the difference between the student's output probabilities and the true labels.

- A temperature parameter is often applied to both the teacher's and the student's logits to soften the probability distributions, making the distillation signal richer.
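The combined loss described above can be written out for a single example. This is a minimal sketch using only the standard library: the weighting factor alpha, the default temperature of 2.0, and the multiplication of the soft loss by the squared temperature (a common convention that keeps gradient magnitudes comparable) are assumptions, and the logits are made up.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give flatter distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                               # subtract max for stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    # Soft target loss: match the teacher's softened distribution.
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    # Hard target loss: ordinary cross-entropy against the true label.
    hard_loss = -math.log(softmax(student_logits)[label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, 0.5]     # hypothetical logits for 3 classes
student = [2.5, 1.5, 0.2]
print(softmax(teacher))                    # sharp distribution
print(softmax(teacher, temperature=4.0))   # softened distribution
print(distillation_loss(student, teacher, label=0))
```

Printing the teacher's distribution at two temperatures shows what "softening" means in practice: the ranking of classes is unchanged, but the relative probabilities of the non-top classes become visible to the student.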

Challenges and Recent Developments

- Student Architecture Selection: Choosing an appropriate student architecture that can effectively learn from the teacher is crucial. It needs to be small enough for efficiency but large enough to capture the necessary knowledge.

- Effective Distillation Objectives: Beyond simple soft targets, researchers explore distilling intermediate representations, attention mechanisms, or even the teacher's ability to generate coherent text.

- Multi-Teacher Distillation: Combining knowledge from multiple teacher models to train a single student.

- Self-Distillation: A single model acts as both teacher and student, often by distilling knowledge from a later training stage (teacher) to an earlier stage (student) or by using different views of the same data.

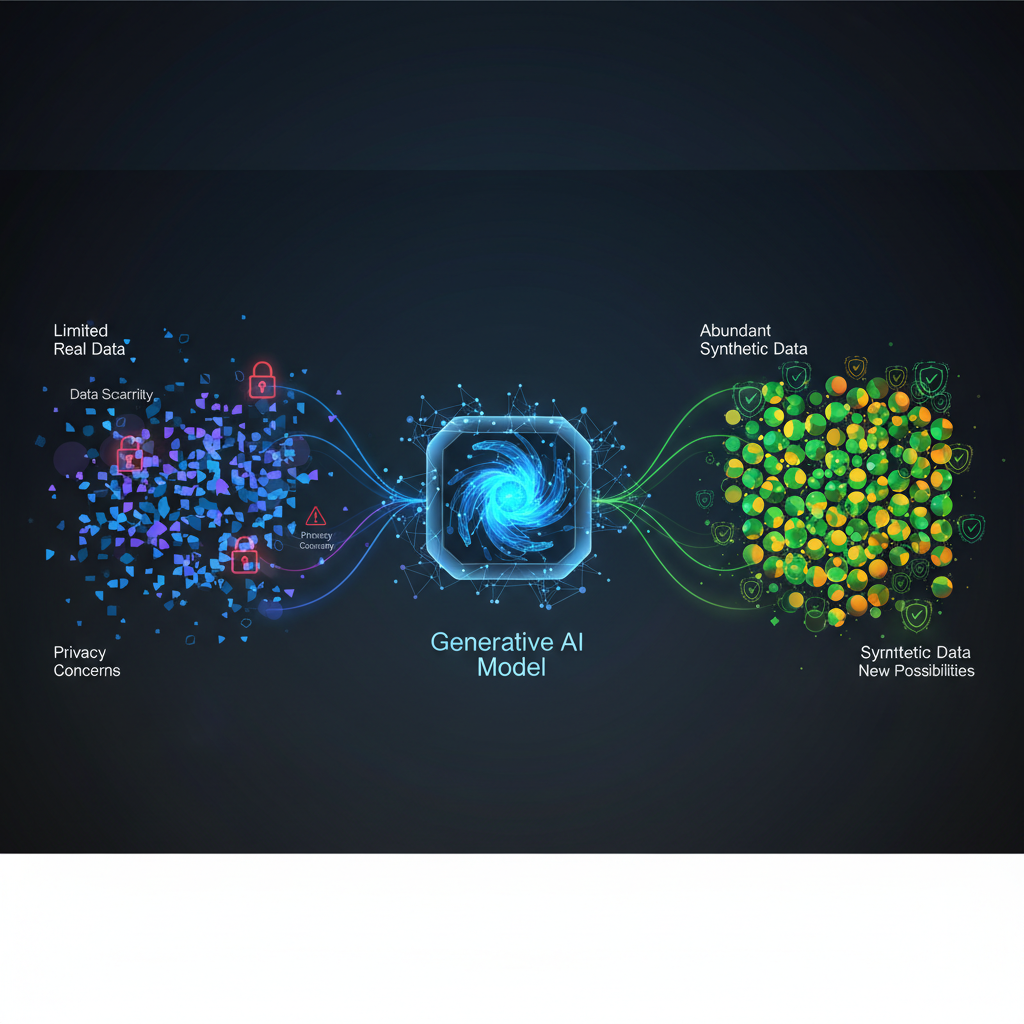

- Synthetic Data Generation: Using the teacher LLM to generate high-quality synthetic data, which is then used to train the student model, especially when real-world labeled data is scarce.

- Distilling LLMs for Specific Tasks: For example, distilling a large general-purpose LLM into a smaller, highly specialized model for code generation or medical text analysis. This allows the smaller model to achieve near-teacher performance on its specific domain while being orders of magnitude smaller.

Practical Example: Hugging Face's DistilBERT is a classic example. A 6-layer, 768-hidden-unit model (student) was distilled from the 12-layer, 768-hidden-unit BERT-base model (teacher). According to its authors, DistilBERT is 40% smaller, 60% faster, and retains 97% of BERT's language understanding capabilities, making it well suited to deployment in resource-constrained environments.

Other Synergistic Optimization Techniques

While quantization, pruning, and knowledge distillation are pillars of efficient LLMs, they are often complemented by other techniques:

- Efficient Architectures: Developing LLMs from the ground up with efficiency in mind. Examples include MobileLLaMA, Phi-2, and TinyLlama, which are designed to be compact yet performant. These models often incorporate architectural changes like grouped-query attention, smaller hidden dimensions, or optimized attention mechanisms.

- Parameter-Efficient Fine-Tuning (PEFT): Techniques like LoRA (Low-Rank Adaptation) and QLoRA reduce the number of trainable parameters during fine-tuning. While they don't directly shrink the base model, they make adapting large models to new tasks much more efficient in terms of memory and computation, which is crucial for edge deployment where regular full fine-tuning is impractical.

- Graph-based Optimizations: Compilers and runtimes (e.g., ONNX Runtime, OpenVINO, TVM) optimize the computational graph of a model for specific hardware, fusing operations, eliminating redundancies, and leveraging hardware-specific instructions.

- Specialized Hardware: The co-design of efficient LLMs with custom AI accelerators (e.g., NPUs in smartphones, custom ASICs for inference) is a growing area. These chips are specifically designed to accelerate low-precision arithmetic and sparse computations, maximizing the benefits of quantization and pruning.
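To give a sense of scale for the PEFT item above, the following sketch counts trainable parameters for a single weight matrix under full fine-tuning and under LoRA, where the update is factored into two low-rank matrices of rank r. The 4096 x 4096 layer size and rank 8 are illustrative assumptions, not figures from a specific model.

```python
def full_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update B @ A, with B: d_out x rank
    and A: rank x d_in. The original matrix stays frozen."""
    return rank * (d_out + d_in)


d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)          # 16,777,216
lora = lora_params(d_out, d_in, rank)    # 65,536
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}):   {lora:,} trainable parameters "
      f"({100 * lora / full:.2f}% of full)")
```

The frozen base weights still have to be held in memory, which is why LoRA is often paired with a quantized base model, as in QLoRA.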

Practical Applications and Use Cases

The convergence of these optimization techniques is unlocking a new frontier for LLM deployment:

- On-Device AI:

- Smartphones: Personalized assistants, real-time language translation, advanced text prediction, local content generation (e.g., drafting emails, social media posts) without sending data to the cloud.

- Wearables: Real-time voice commands, health insights, context-aware notifications.

- Embedded Systems: Industrial IoT for anomaly detection, smart home devices for local control and natural language interaction, robotics for on-the-fly decision making.

- Edge Computing:

- Autonomous Vehicles: Real-time understanding of voice commands, processing sensor data with natural language queries, in-car content generation.

- Local Data Centers: Enterprises running LLMs on sensitive data within their own infrastructure for privacy and compliance, reducing reliance on public clouds.

- Industrial IoT: Predictive maintenance, quality control, and operational optimization using LLMs to analyze sensor data and generate actionable insights at the source.

- Private/Confidential Computing:

- Healthcare providers using LLMs to analyze patient records locally, ensuring data privacy.

- Financial institutions processing sensitive transaction data with LLMs without exposing it to external services.

- Low-Cost Cloud Deployment: Even in cloud environments, efficient LLMs mean significantly lower inference costs, allowing smaller businesses and individual developers to leverage powerful AI capabilities.

Tools and Ecosystem: A growing set of tools, many of them open source, facilitates these optimizations:

- Hugging Face Optimum: Provides tools for accelerating models on various hardware, including quantization and ONNX export.

- PyTorch Quantization APIs: Native support for dynamic, static, and QAT.

- ONNX Runtime: A high-performance inference engine that supports various optimizations, including graph transformations and quantization.

- OpenVINO: Intel's toolkit for optimizing and deploying AI inference, especially on Intel hardware.

- TensorRT: NVIDIA's SDK for high-performance deep learning inference, including quantization and graph optimization.

Conclusion: The Future is Efficient

The journey of Large Language Models is far from over. While their raw power has captivated the world, their true potential will be unleashed through efficiency. Quantization, pruning, and knowledge distillation are not just academic curiosities; they are indispensable tools for any AI practitioner or enthusiast looking to deploy LLMs in the real world.

By mastering these techniques, we can move beyond the confines of hyperscale data centers, bringing the transformative power of LLMs to every device, every edge, and every user. This democratization of AI will foster unprecedented innovation, enable new applications that were previously impossible, and contribute to a more sustainable and accessible AI future. The ability to run powerful LLMs on a smartphone, a drone, or an industrial sensor is no longer a distant dream but a rapidly approaching reality, driven by the relentless pursuit of efficiency. The future of LLMs is not just about getting bigger; it's about getting smarter and leaner.